Refresh Timeout Error.

Hello team,

I hope you are all doing well. I'm getting a "Timed Out" error in the Power BI service. I have a Pro account, and when I run the initial refresh of the dataset in the service, it fails with a "Timed Out" error after 2 hours. What are my options for refreshing the data in the service?

Thank you in advance for your help.

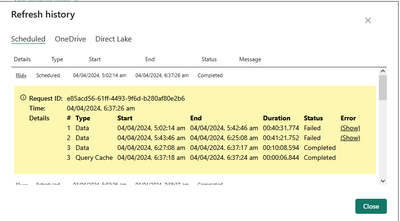

Hoping someone can help with this. Here is a screenshot showing the number of refresh attempts. As you can see, the whole process took around 2 hours.

Hi,

Apologies if I am bringing up an old topic. I am facing a similar issue. How did you manage to resolve yours?

Hi @Uniqueusername ,

In our case the dataset was very large. Because refreshes on a Pro account run in shared memory, we consulted the Microsoft Support team and then moved to a Premium account, which gave us dedicated memory for refreshing the report.

Thanks for that. We are on a Premium subscription as well. Were you seeing slow refresh times when scheduling automatic refreshes through the gateway?

For some reason, my reports have been taking a lot longer to refresh than some of the other reports in the same workspace and on the same gateway. Scheduled refreshes seem to take hours, while a forced, on-demand refresh takes a few minutes.

My model uses mixed storage modes: DirectQuery, Dual, and Import.

I'm having issues this morning with timeouts when publishing from Power BI Desktop to the Power BI service. Is anyone else seeing this?

Hi. Power BI Pro has a 2-hour limit for refreshing your data. If a refresh exceeds that time, it can't continue. This can happen for different reasons, such as bandwidth (if you have a gateway) or dataset size.

Consider reading about how to reduce the size of your data model. You can find great YouTube videos from Guy in a Cube and others on making your model smaller. Building a good star schema usually helps.

Another thing to check is whether your source returns the data quickly. You might have a large, complex native query against the source that takes a long time. In those scenarios, it can help to have a stored procedure build a table in the database engine, so that Power BI only reads the resulting table instead of running a complex query.

If you are on-premises, you can also check bandwidth, but treat that as the last option because the first two I mentioned are the most common issues.
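To see how close each refresh comes to the 2-hour limit, one option is to pull the dataset's refresh history (for example from the Power BI REST API refresh-history endpoint) and compute the durations. The sketch below is only an illustration: it assumes each history entry carries `refreshType`, `status`, `startTime`, and `endTime` fields with UTC timestamps ending in `Z`, and the sample entries are made up rather than taken from this thread. The function name `summarize_refreshes` and the 10-minute margin are hypothetical choices.

```python
from datetime import datetime, timedelta

# 2-hour limit for Pro, as quoted in this thread
PRO_REFRESH_LIMIT = timedelta(hours=2)


def parse_ts(ts):
    """Parse a UTC timestamp such as '2024-01-01T02:00:00.000Z' (assumed format)."""
    for fmt in ("%Y-%m-%dT%H:%M:%S.%fZ", "%Y-%m-%dT%H:%M:%SZ"):
        try:
            return datetime.strptime(ts, fmt)
        except ValueError:
            continue
    raise ValueError(f"unrecognized timestamp: {ts}")


def summarize_refreshes(entries, limit=PRO_REFRESH_LIMIT,
                        margin=timedelta(minutes=10)):
    """Return duration and a near-limit flag for each finished refresh."""
    rows = []
    for entry in entries:
        if not entry.get("endTime"):
            continue  # refresh still running, no duration yet
        duration = parse_ts(entry["endTime"]) - parse_ts(entry["startTime"])
        rows.append({
            "type": entry.get("refreshType"),
            "status": entry.get("status"),
            "minutes": round(duration.total_seconds() / 60),
            # flagged when the run lasted at least (limit - margin)
            "near_limit": duration >= limit - margin,
        })
    return rows


# Made-up sample entries, shaped like the assumed refresh-history fields
sample = [
    {"refreshType": "Scheduled", "status": "Failed",
     "startTime": "2024-01-01T02:00:00.000Z",
     "endTime": "2024-01-01T04:00:05.000Z"},
    {"refreshType": "OnDemand", "status": "Completed",
     "startTime": "2024-01-01T08:00:00.000Z",
     "endTime": "2024-01-01T08:12:00.000Z"},
]

for row in summarize_refreshes(sample):
    print(row)
```

Runs that fail right around the 120-minute mark point to the license limit, while much shorter failures are more likely a source or gateway problem.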

I hope that makes sense,

Happy to help!

Does this limit also apply to an on-premises Power BI Report Server (installed on our SQL Server)?

Hi @ibarrau , Thank you so much for your reply.

Everything you mentioned makes sense, and I have taken care of every point. The dataset simply has a large amount of data. Is there any other way I can refresh the dataset?

If you are sure you have a good star schema connected to a source that quickly returns single tables (no native queries with logic) and you still hit the 2-hour limit, then you should consider a different license such as PPU (Premium Per User), or moving the dataset to Analysis Services. There is also a Premium license. If you are sure you have optimized everything, then the limit can't be bypassed; it shows that a Pro license is not enough for your model.

I know incremental refresh is now available for Pro, but I'm not sure whether it will let you get around this limit. You can try it if you have a database source that supports query folding (SQL Server, Synapse, PostgreSQL, etc.).
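As a rough illustration of why incremental refresh can shorten a refresh: the table is filtered on a date column using the `RangeStart` and `RangeEnd` parameters, so a regular refresh only reloads a recent window, while the first load still has to cover the whole stored period. The Python sketch below only mimics that filter on made-up rows. The 3-year and 7-day windows, the `order_date` column, and the helper `rows_in_range` are all hypothetical, and in Power BI the filtering is done at the source through query folding, not in code like this.

```python
from datetime import date, timedelta


def rows_in_range(rows, range_start, range_end):
    """Keep rows where range_start <= order_date < range_end (half-open)."""
    return [r for r in rows if range_start <= r["order_date"] < range_end]


today = date(2024, 1, 31)

# Made-up data: one row per day for the previous 1095 days (about 3 years)
rows = [{"order_date": today - timedelta(days=n)} for n in range(1, 1096)]

# Initial load: the whole stored period
full_load = rows_in_range(rows, today - timedelta(days=1095), today)

# Later refreshes: only the most recent 7 days
incremental = rows_in_range(rows, today - timedelta(days=7), today)

print(len(full_load), len(incremental))  # 1095 7
```

The filter is half-open so that a row falling exactly on a boundary is loaded by one window only. Whether the smaller refreshes are enough to stay under the 2-hour limit still depends on how long the initial full load takes.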

I hope that helps,

Happy to help!

Thanks a lot for your response. So does this mean the only way around the 2-hour limit is to move to a higher license? If so, will moving to Power BI Embedded help?

I'm also facing one more odd issue: when I publish the dataset (with incremental refresh configured) to the service, the initial refresh should normally be triggered on its own, but that doesn't seem to be happening at the moment...

If you want to extend the 2-hour limit, then yes, you need a different license. You can do it with Embedded. I think AAS is a cheaper option (but it involves a migration), or if the report is not shared with many people, PPU might be cheaper too.

Regarding the second question, I'm sorry, but I'm not sure about the answer. However, I think Patrick from the Guy in a Cube YouTube channel mentioned that in one of his videos. You might want to check his incremental refresh videos before jumping to Embedded capacity.

Regards

Happy to help!

Thanks a lot for your response. However, when I publish the dataset, the automatic refresh triggers, runs for 2 hours, and then fails with a time-out error. When I refresh it again on demand, the dataset refreshes successfully. So every time, the first refresh times out and the second one succeeds. Doesn't that seem like odd behavior?

Did you ever fix this issue?
