Memory error: You have reached the maximum allowable memory allocation for your tier.

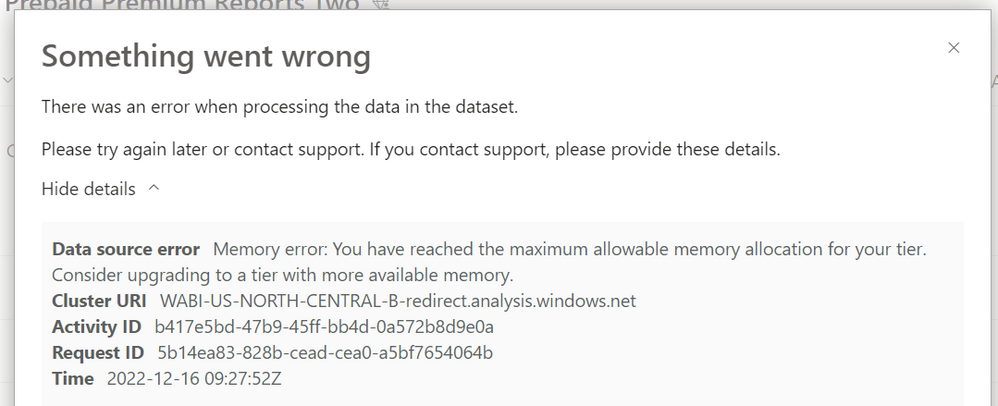

Hello, one of my reports is giving me the error below:

Memory error: You have reached the maximum allowable memory allocation for your tier. Consider upgrading to a tier with more available memory.

The same report used to refresh without any errors, but for the last few days I have been getting this error during on-demand refresh.

I have tried a few of the suggestions given here in the community, but they are not working for me and I am still getting the error.

Please help me resolve this error.

Thanks.

Hi @Anonymous,

You have used resources that exceed the upper limit of your tier. To solve this problem, you need to either upgrade from shared capacity to Premium capacity or optimize the underlying data source so that the query returns less data.

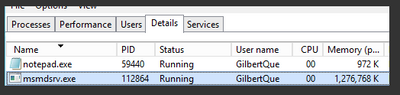

You can download the .pbix file and refresh it in Power BI Desktop to see how much memory the refresh consumes. To monitor this, open Task Manager and look for "msmdsrv.exe", which is the Analysis Services engine.
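If you would rather log the number than watch Task Manager, here is a minimal Python sketch. It assumes a Windows machine and relies on the built-in `tasklist` command with CSV output; the function names and the sample line are made up for illustration, and the column layout (image name, PID, session name, session number, memory usage) is what I would expect from `tasklist /FO CSV /NH`, so check it against your own output.

```python
import csv
import io
import re
import subprocess


def parse_tasklist_csv(text):
    """Return (pid, memory_kb) tuples parsed from `tasklist /FO CSV /NH` output."""
    results = []
    for row in csv.reader(io.StringIO(text)):
        # Assumed columns: image name, PID, session name, session number, memory usage.
        if len(row) < 5 or not row[1].strip().isdigit():
            continue  # skips blank lines and "INFO: No tasks ..." messages
        # Drop thousands separators and the trailing "K" so only digits remain.
        mem_digits = re.sub(r"\D", "", row[4])
        if mem_digits:
            results.append((int(row[1]), int(mem_digits)))
    return results


def msmdsrv_memory_kb():
    """Ask Windows for all running msmdsrv.exe processes (Windows only)."""
    out = subprocess.run(
        ["tasklist", "/FI", "IMAGENAME eq msmdsrv.exe", "/FO", "CSV", "/NH"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_tasklist_csv(out)


# Illustrative sample line, not a real measurement.
sample = '"msmdsrv.exe","1234","Console","1","2,097,152 K"\n'
print(parse_tasklist_csv(sample))
```

Calling `msmdsrv_memory_kb()` repeatedly while the refresh runs in Power BI Desktop should give a rough picture of the peak memory use, which is the figure that matters for the tier limit.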

If you exceed the allowable memory for your capacity, consider upgrading to Premium capacity and purchasing the appropriate SKU.

What is Power BI Premium Gen2? - Power BI | Microsoft Learn

Best Regards,

Community Support Team _ Ailsa Tao

If this post helps, please consider accepting it as the solution to help other members find it more quickly.

I encountered the same error message a week ago, but I couldn't reproduce it. I even created a test data model of double the size, but got no error when I published it to the Power BI service. So I guess this is a temporary issue in the Power BI service. Could someone shed more light on this type of error?

Here is a similar thread on the issue: https://learn.microsoft.com/en-us/archive/msdn-technet-forums/94758523-b9fa-4567-b3d9-1759abb54a84

I encountered the same error message this morning. I would like to know which factors determine RAM consumption. Is it the size of the tables in the data model, or specific transformation queries in Power Query? I assume DAX measures don't affect RAM consumption, because I couldn't open any page in Power BI.

The data model itself is 77 MB, but Task Manager shows the report taking over 2 GB in Power BI Desktop. Is 77 MB already a large data model?

@Anonymous

Hi @Jeanxyz,

I am no expert and am quite new to Power BI, but I have used a lot of other tools over the years.

It seems the dataset is the main thing to look at: reducing its size should reduce the file size and, as a result, the RAM and even the CPU used.

You can reduce the size of your report's dataset by understanding which fields use the most space and what can be removed. You can see a lot of this information in DAX Studio, but I don't have a link to the guide I used.

A field that has a few options repeated over millions of rows will most likely be dictionary-encoded, and this should compress very well.
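To make the dictionary point concrete, here is a rough Python sketch. It is a simplified model I made up for illustration (one copy of each distinct value plus a fixed-width index per row), not the engine's actual storage format, and the column names and values are hypothetical.

```python
import math


def raw_bytes(values):
    """Size if every row stored its own copy of the text."""
    return sum(len(v.encode("utf-8")) for v in values)


def dictionary_encoded_bytes(values):
    """Rough estimate: each distinct value stored once, plus a small index per row."""
    distinct = set(values)
    if not distinct:
        return 0
    dictionary_bytes = sum(len(v.encode("utf-8")) for v in distinct)
    # Bits needed to address any entry in the dictionary (at least 1).
    bits_per_row = max(1, math.ceil(math.log2(len(distinct))))
    index_bytes = math.ceil(len(values) * bits_per_row / 8)
    return dictionary_bytes + index_bytes


rows = 100_000
# Hypothetical low-cardinality column: 3 distinct values.
status = [("Open", "Closed", "Pending")[i % 3] for i in range(rows)]
# Hypothetical high-cardinality column: every value is distinct.
invoice_ids = [f"INV-{i:08d}" for i in range(rows)]

for name, column in (("status", status), ("invoice_ids", invoice_ids)):
    print(name, raw_bytes(column), dictionary_encoded_bytes(column))
```

Under this model the status column shrinks to a small fraction of its raw size, while the ID column does not shrink at all, because its dictionary has to hold every value anyway. That is the pattern to look for when DAX Studio shows which columns take the most space.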

Our worst offender was a free-text description field that could be any size.

Removing this on all tables, and bringing in only the Short Description field (fixed at 80 characters) instead, took hundreds of MB off the report file size and resolved our issue.

I did not design the report and would not have done these things myself, but when looking into the design of the report model and the data fields being pulled into our report, I removed further fields and even tables that were duplicating data.

Another thing causing problems in the report was DAX calculated columns. From my reading, calculated columns created in DAX are compressed less efficiently than columns created in the Power Query transform stage. Some things can't easily be moved to Power Query, but most of the ones I looked at in our report should have been done in Power Query, which reduces the file size further.

I'm not sure about DAX measures, but I would think they cost more CPU than memory. I tend to keep my DAX measures simple and do as much pre-work as possible in calculated columns created in Power Query, so my DAX measures mainly sum, count or filter the result of a calculated column.

There is a lot of reading to do on the subject, and DAX Studio can help you see what is using the most space in your dataset.

Hope this helps.

Thanks @pjg, I went through your comments. It seems to me there isn't much I can do to reduce the dataset size. According to DAX Studio, the fields that take the most memory are doc IDs and invoice IDs. Unfortunately, those fields have to be kept for the current year (~1 million records). I have already aggregated the historical data.

Not sure if there are other solutions to reduce the data model size.

We had an error like this in our test environment, but the report worked fine in our Premium live environment.

I'm not sure if it was exactly the same message, as I didn't record it and it was a couple of months ago, but the report PBIX was just over 1 GB in size.

We reduced the dataset size by importing only the fields from the data tables that are used in the report. Some fields had been imported by the developer but were not used in the report. A couple of those fields contained a lot of data, such as ASCII-encoded attachments, so this worked well. As an extra step, we also excluded very old historical data and kept only a rolling 3 years in the report.

Since we dropped the unused fields and limited the number of years of data, it has been working fine and is about 1/3 of the size.
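In the report itself this is done in Power Query, but the logic is simple enough to sketch in Python. Everything here is hypothetical: the `trim_rows` helper, the column names, and the sample rows are only there to show the two steps (keep the used columns, keep a rolling window of years).

```python
from datetime import date


def trim_rows(rows, used_columns, today, years=3):
    """Keep only the columns a report uses and rows dated within the last `years` years."""
    try:
        cutoff = today.replace(year=today.year - years)
    except ValueError:
        # today is Feb 29 and the target year is not a leap year
        cutoff = today.replace(year=today.year - years, day=28)
    return [
        {col: row[col] for col in used_columns}
        for row in rows
        if row["date"] >= cutoff
    ]


# Hypothetical rows; "attachment" stands in for a large field that no visual uses.
rows = [
    {"date": date(2019, 5, 1), "amount": 10, "attachment": "x" * 1000},
    {"date": date(2023, 5, 1), "amount": 20, "attachment": "y" * 1000},
]
print(trim_rows(rows, ["date", "amount"], today=date(2024, 1, 1)))
```

The important part is that both filters are applied before the data is loaded into the model, so the dropped columns and old rows never count against the memory limit during refresh.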

I'm guessing you have tried this, as the error message would point you in this direction, but I'm posting just in case, as you had not said what you have tried.

Hope it helps.
