FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

View all the Fabric Data Days sessions on demand. View schedule

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Re: Dataflows and the enhanced compute engine

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dataflows and the enhanced compute engine

Hi all, apologies in advance, this is going to be long...just because there are a lot of questions and potentially I'm not doing something quite right.

I'm hoping that dataflows with the enhanced compute engine can solve a general class of problem we have - which is joining data from different databases. Power Query is very slow at this because the query can't be pushed down - since it crosses multiple databases. My hope was that the new "enhanced compute engine", which I believe stores entities not only as CSV files but also in a backend database, would help make these types of operations fast and easy. Microsoft promoted this with "Now you will be able to efficiently combine and transform different data sources and manage TB’s of data using dataflows. With the new engine, calculation of computed entities can now be up to x20 faster when working with large scale data volumes." Dataflows June 2019 Feature Summary

Having seen this, I started testing on a very basic scenario - load billing data from one database, and patient data (which can join to the billing data) from a different database. Specifically, I did the following steps:

- Enabled the enhanced compute engine in our dev capacity, which is a P2. We allocated 20% memory for data flows (which should be 5 GB), and I set the enhanced compute engine on with 1024 MB allocated to it.

- I created a "source" dataflow, where I loaded the billing data into one entity

- In the same "source" dataflow, loaded the patient data into a second entity

- I then created a "final" dataflow, where I use the billing and patient data as linked entities, and built a final table which starts with the billing data and adds columns from patient (using a merge operator, left outer join)

In my mind, the two entities are NOT very big. The Billing entity has about 5.6 million rows, and the patient data is 3.7 million rows. I'm not sure how to estimate how much space these take up in the enhanced compute engine, other than noting that if I load the entities directly in to Power BI desktop, I see that billing shows as 1.26 GB and patient as 345 MB.

In other words, not that many rows, and way less than the "Terabytes" size in the announcement. In general - this is not working, and is leading to a ton of questions. Can anyone help answer these?

- When exact are the entities loaded into the backed SQL server database of the enhanced compute engine?

- After the ingest flow completes?

- Before used in another flow as a computed entity?

- ? something else? - Can we control which entities will load into the enhanced compute engine database and which don't? Do ALL entities load into the database? What happens if we allocate 1 GB to enhanced compute engine and I have 5 GB worth of linked entities? Or 500 GB? Or a TB?

- Why are merges (even using left outer join) still SUPER slow in the GUI? When I create the dataflow "final" table, using the linked entities for billing and patient, it can take 5 - 10 minutes before results show up in the web GUI.

- Is the size of the underlying entity csv file, which we can see in Power BI desktop when loading a dataflow entity, the same size used in the back end enhanced compute engine database? aka does a 1 GB text file mean it takes 1 GB in the database? If not - how do we have any idea how much memory something will take, so we can allocate appropriately?

- What happens if trying to join dataflow entities that are too large to fit in the enhanced compute engine memory?

I apologize if these are answered somewhere - I searched and couldn't really find details. I was really hoping this would work - because we have multiple compelling reasons why being able to join the results of queries against multiple databases would be SUPER helpful. But so far not really having any luck.

Thanks!

Scott

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- When exact are the entities loaded into the backed SQL server database of the enhanced compute engine?

- After the ingest flow completes?

- Before used in another flow as a computed entity?

- ? something else

Data is loaded to the compute engine's sql db as part of the ingest flow. All data loaded to entities in a workspace backed by a capacity with the engine enabled are loaded to the sql db

- Can we control which entities will load into the enhanced compute engine database and which don't? Do ALL entities load into the database? What happens if we allocate 1 GB to enhanced compute engine and I have 5 GB worth of linked entities? Or 500 GB? Or a TB?

You can only control this by which workspaces are backed by capacities with the compute engine enabled. The allocation setting in the capacity admin page is about memory allocation. This is memory that is set aside for the engine and not able to be used by the tabular models on the capacity. because the sql db's are not in memory objects, the size of the data loaded to dataflows is not related to the memory allocation. instead the DB size consumes the 1TB of disk storage associated with each capacity.

- Why are merges (even using left outer join) still SUPER slow in the GUI? When I create the dataflow "final" table, using the linked entities for billing and patient, it can take 5 - 10 minutes before results show up in the web GUI.

I don't know. Its hard to make definite or even likely statements about performance without more detail.

- Is the size of the underlying entity csv file, which we can see in Power BI desktop when loading a dataflow entity, the same size used in the back end enhanced compute engine database? aka does a 1 GB text file mean it takes 1 GB in the database? If not - how do we have any idea how much memory something will take, so we can allocate appropriately?

See first answer. No there are no easy metrics here. That would be nice.

- What happens if trying to join dataflow entities that are too large to fit in the enhanced compute engine memory?

see first answer. since the DB is not an in memory object, this only happens as part of query execution. both power query and, i assume, the compute engine sql server have memory paging systems.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Could we get somebody from MS to answer those questions?

These are real life questions not covered by any docs and info about dataflows and real life performance is scarse. My test, even though less conslusive lead to completely giving up on this service as it was too slow to use. Would love to look into it again once there is more visibility what works and how so that we can set expectations properly.

Thanks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

I would recommend that you contact the author of dataflow under the document, they can answer your questions more comprehensively and professionally.

Here is the link: https://docs.microsoft.com/en-us/power-bi/service-dataflows-linked-entities

If this post helps, then please consider Accept it as the solution to help the other members find it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Two more questions I've come up with:

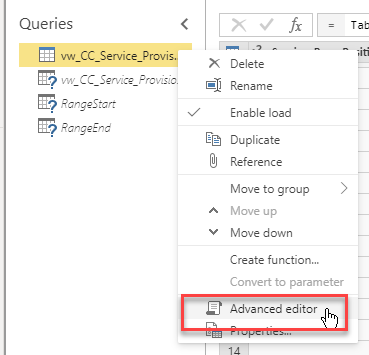

1. Is there anyway to see the full M code for an entity? In Power BI desktop I can click on the "Advanced Editor" button to see the full code for a table. In the service, it looks like the only way to see all code is to go through "step by step" and copy the code one step at a time?

2. How would I migrate a dataflow from a "development" workspace to a production workspace?

Thanks!

Scott

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I would really love to see many of these questions answered @Anonymous as I'm experiencing similar headaches to you, very frustrating are all the lengthy waiting periods working with PowerQuery in the service for such things like preview results (inparticular with merge steps), endless issues eith refresh timeouts, and even to simply do the validation required to save & exit out of the PowerQuery 'edit' screen can be herendous for me depending on the complexity of what i'm doing.. but even something as simple as changing a few data types can have me waiting literally an hour+ for the query to validate and save.. hair-pulling stuff I'm sure you'll agree). On average I've experienced about 80% if time watching spinning wheels, and 20% actually getting anywhere with progressing a relatively simple dataflow model (with entitites of around the same size as yours 6M - 12M rows (we're on P1 Capacity however)) towards completion.

Besides all that.. all I have to offer you currently is that yes - you can actually view your M query inside the PowerQuery editor in the service - just right-click on your table in the 'Queries' pane and choose 'Advanced Editor'.

Best of luck getting some helpful 'real-world experience' responses to these questions!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Completely agree. I have exactly the same issues.

Such a great feature (dataflows), but when you try to build more logic it gets painfully slow.

this has to change!

Helpful resources

Power BI Monthly Update - November 2025

Check out the November 2025 Power BI update to learn about new features.

Fabric Data Days

Advance your Data & AI career with 50 days of live learning, contests, hands-on challenges, study groups & certifications and more!