Dataflow incremental refresh with primary key

Hi All,

Loving dataflows, but I would love the ability to do an incremental refresh in more of an 'upsert' style, that is, one that maintains the uniqueness of a primary key within the dataset. Any ideas on how to do this? I assume it will look something like executing some sort of M-style merge after the dataflow incremental refresh pulls in more data.

For reference, my table size is ~1 million rows.

I'm SO GLAD someone asked this question too!

Currently, incremental refresh picks up data that was updated within a window defined by a datetime stamp, say the last 10 days. It does not take into consideration a primary key that might have been inserted in the past.

E.g., let's say a "Sales Order" was created 3 months ago with a Status of "Open", but only today its status was changed to "Shipped". Since I am only looking at the past 10 days of data for incremental refresh, a new Sales Order row gets inserted into the dataset, so that order now appears twice in my "Sales Order" table.

How can we avoid this? Ideally, along with the datetime stamp, there needs to be an option where we specify the unique key column too. If the incremental refresh contains any of the unique keys loaded in the past, those older rows need to be deleted and reinserted.
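The delete-and-reinsert behaviour asked for here can be sketched in a few lines. This is only an illustration in Python, not something dataflows offer: the `upsert` helper and the `order_id`, `status` and `modified` column names are hypothetical, chosen to mirror the Sales Order example.

```python
from datetime import date


def upsert(existing, incoming, key="order_id"):
    """Merge incoming rows into existing rows, keeping one row per key.

    A row in `incoming` replaces any already-loaded row with the same key,
    which is the delete-and-reinsert behaviour described in the post.
    """
    merged = {row[key]: row for row in existing}
    for row in incoming:
        merged[row[key]] = row  # overwrite the old version, or add a new key
    return list(merged.values())


# Rows already loaded by earlier refreshes (hypothetical data).
loaded = [
    {"order_id": 1, "status": "Open", "modified": date(2024, 1, 5)},
    {"order_id": 2, "status": "Open", "modified": date(2024, 3, 28)},
]

# Rows returned by the latest 10-day refresh window.
refresh_window = [
    {"order_id": 1, "status": "Shipped", "modified": date(2024, 4, 2)},
    {"order_id": 3, "status": "Open", "modified": date(2024, 4, 3)},
]

result = upsert(loaded, refresh_window)
for row in result:
    print(row["order_id"], row["status"])
```

Order 1 ends up with a single "Shipped" row instead of an "Open" row plus a "Shipped" row.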

Hey SamRock,

Any update regarding consideration of primary key when incrementally refreshing? I have a similar situation and I am not sure Power BI dataflows can solve my issue.

Currently, incremental refresh only works with dates and, unfortunately, not with a primary key.

Is there any timeline for if/when this will be added as a feature for dataflows? It is a critical feature for refreshing data that depends on past state changes (e.g. Orders, Status, Deliveries, Inventory, Requests, etc.).

The underlying technology for incremental refresh seems to be based on partitions from SSAS. My guess, therefore, is that this will not fundamentally change to support a primary key upsert any time soon.

Assuming that an updated record will appear in your incremental refresh and so create a duplicate, you should add extra steps to your M code to remove duplicates, keeping the Max Primary Key, so that the data is pruned in your dataflow's ETL process.
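The pruning step suggested above might look roughly like the sketch below. It is written in Python purely to show the logic; in a dataflow the same idea would have to be expressed in M. It assumes each row carries a `modified` timestamp that identifies the newest version, and `keep_latest` and the column names are hypothetical.

```python
from datetime import date


def keep_latest(rows, key="order_id", version="modified"):
    """Drop duplicate keys, keeping the row with the greatest `version` value."""
    latest = {}
    for row in rows:
        current = latest.get(row[key])
        if current is None or row[version] > current[version]:
            latest[row[key]] = row
    return list(latest.values())


# Table contents after an incremental refresh has appended an updated
# copy of order 1 next to the original row (hypothetical data).
rows = [
    {"order_id": 1, "status": "Open", "modified": date(2024, 1, 5)},
    {"order_id": 2, "status": "Open", "modified": date(2024, 3, 28)},
    {"order_id": 1, "status": "Shipped", "modified": date(2024, 4, 2)},
]

pruned = keep_latest(rows)
for row in pruned:
    print(row["order_id"], row["status"], row["modified"])
```

Comparing on a version column rather than on row order means the result does not depend on the order in which the rows arrive.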

I do not think that this is something that will be happening anytime soon.

You could have an additional column which is the modified datetime which could be used to update?

Is this capability already available to Pro users?

I assume the meaning of the question is similar to my own.

I can use a Date field in my data to sync only the last day, for example. But my 'upsert' question is: will it be able to replace existing data if the primary key shows that the row already exists?

Let's say I have 10 records, one created each day. If a user of my system updates record 2, which was originally created 2 days ago but updated today, will the incremental refresh 'upsert' it? Or will this break the refresh?

When I read about partitions and how incremental refresh seemed to work in the original Analysis Services, it seems to be purely additive, loading new data incrementally.
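The question can be explored with a toy model of date-partitioned refresh. This is a simplified sketch and an assumption about the general mechanism, not a description of how the Power BI service is implemented: rows are stored in one partition per `modified` day, and a refresh rebuilds only the partitions inside the refresh window. All names and data are hypothetical.

```python
from datetime import date


def refresh(partitions, source_rows, window, date_col="modified"):
    """Rebuild only the partitions whose day is in `window`; keep the rest as stored."""
    refreshed = dict(partitions)
    for day in window:
        refreshed[day] = [row for row in source_rows if row[date_col] == day]
    return refreshed


def all_rows(partitions):
    """Flatten the partitions into one table, ordered by partition day."""
    return [row for day in sorted(partitions) for row in partitions[day]]


d1, d2, d4 = date(2024, 4, 1), date(2024, 4, 2), date(2024, 4, 4)

# What earlier refreshes stored: record 2 was created and last modified on d2.
stored = {
    d1: [{"id": 1, "status": "Open", "modified": d1}],
    d2: [{"id": 2, "status": "Open", "modified": d2}],
}

# The source today: record 2 was updated on d4, so its modified date moved.
source_today = [
    {"id": 1, "status": "Open", "modified": d1},
    {"id": 2, "status": "Closed", "modified": d4},
]

# Refreshing only today's partition leaves the stale copy in the d2 partition.
narrow = refresh(stored, source_today, window=[d4])
ids_narrow = [row["id"] for row in all_rows(narrow)]

# Widening the window to include d2 rebuilds that partition and removes the stale copy.
wide = refresh(stored, source_today, window=[d2, d4])
ids_wide = [row["id"] for row in all_rows(wide)]

print(ids_narrow)
print(ids_wide)
```

In this model nothing breaks, but the update is not an upsert: record 2 appears twice unless the refresh window reaches back far enough to rebuild the partition that holds the old version.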

Hi there

That would work by making the incremental refresh look at the DateTime column of the updated data.

Or you could look at the following option when setting it up:

Hi there

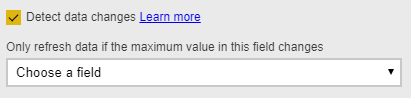

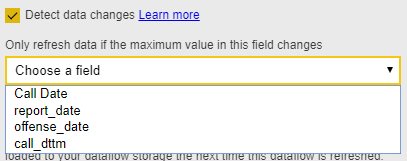

What you can also do is enable the Detect Changes option, where updates are picked up based on a date column, which will then be included in the incremental process.

Hi @GilbertQ, thanks. But this does not take care of my scenario. It only depends on the max date in "Changes detected". If my date column does not fall within the range of the incremental refresh cycle, it will still miss the older rows.

Let me know if I missed something.

My understanding is that it will also look for changes to the changed column and if it is newer than the last date, it will process that data too?

Yes, it does, but you will need another date column that's different from the incremental refresh date.

Do you have any example or scenario that can help me understand how to use this option?

Here are more details on how it works

https://docs.microsoft.com/en-us/power-bi/service-dataflows-incremental-refresh

As it currently stands, you have to use a DateKey in order to do the incremental refresh.

This will allow you to refresh only the latest day, if that is what you need. And with only 1 million rows in total, it should be really quick.
