
Power BI Premium per user and the 10 GB limit for a single table on the gateway

I was wondering whether the new "Premium per user" (PPU) license model will let us avoid this error message, which we sometimes get when refreshing through the on-premises gateway:

"The amount of uncompressed data on the gateway client has exceeded the limit of 10 GB for a single table. Please consider reducing the use of highly repetitive strings values through normalized keys, removing unused columns, or upgrading to Power BI Premium".

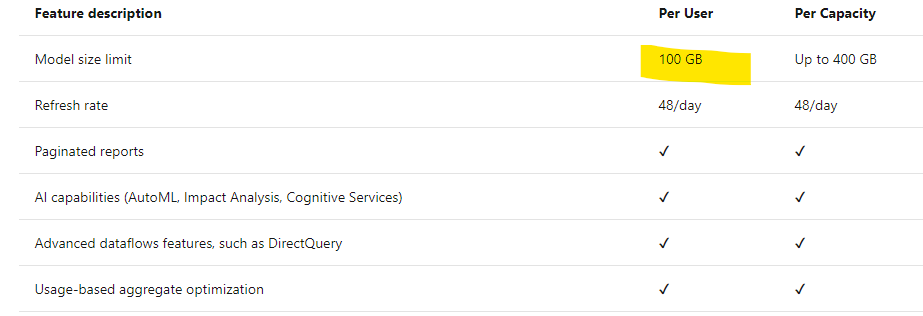

I see that the model size limit increases to 100 GB with this new license, but does the 10 GB per-table restriction still apply?

Thanks

Regards

Alberto

@AbhiSSRS your reply, unfortunately, is incorrect. The limit you are referencing (100 GB per dataset) is not relevant to what the OP is actually asking: they are hitting the limit imposed on individual tables in the data source. With Pro and PPU, there is a limit of 10 GB per table when refreshing through the gateway. This is a completely different limit from the 100 GB dataset maximum you are referencing.

Pro and PPU accounts both use shared capacities, and the 10 GB per-table limit on gateway refresh applies to all shared capacities. The only way to avoid this limit is with a Premium capacity, since it is not a shared capacity.

@albertoserinf I'd be interested to hear what solution you eventually came up with to avoid the same error message. I imagine you were forced to reduce the table size in the database itself.

Hi, @albertoserinf

Based on your description, I think the restriction still applies after upgrading to PPU, because the PPU model size limit is 100 GB per user, not 100 GB per dataset.

Therefore, I think reducing the use of highly repetitive string values through normalized keys, and removing unused columns, may be the most useful way for you to avoid this problem.

https://docs.microsoft.com/en-us/power-bi/admin/service-premium-per-user-faq

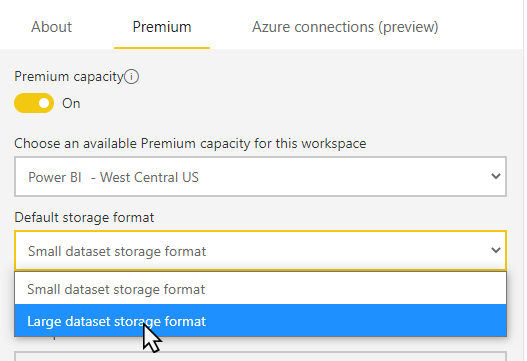
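To illustrate the "normalized keys" suggestion, here is a minimal sketch in Python. This is an assumption for illustration only: in practice the normalization would usually be done in the source database (a dimension table plus a view), not in Python. It replaces each distinct string in a repetitive column with a small integer surrogate key and stores each string once in a lookup table. The function names and sample data are hypothetical, and the 4-bytes-per-key figure is an assumption, not how the gateway actually measures uncompressed size.

```python
def normalize_column(values):
    """Replace each distinct string with an integer surrogate key.

    Returns (keys, dimension): keys has one int per input row, and
    dimension maps each key back to its original string.
    """
    lookup = {}  # string -> key, assigned in order of first appearance
    keys = []
    for value in values:
        if value not in lookup:
            lookup[value] = len(lookup)
        keys.append(lookup[value])
    dimension = {key: value for value, key in lookup.items()}
    return keys, dimension


def utf8_bytes(values):
    """Total UTF-8 size of a collection of strings, in bytes."""
    return sum(len(v.encode("utf-8")) for v in values)


if __name__ == "__main__":
    # Hypothetical fact-table column with highly repetitive text values.
    regions = ["North America - Retail", "Europe - Wholesale",
               "Asia Pacific - Online"] * 100_000
    keys, dim = normalize_column(regions)

    before = utf8_bytes(regions)
    # Assumption: each key is stored as a 4-byte integer in the fact table,
    # plus the small dimension table holding each string once.
    after = 4 * len(keys) + utf8_bytes(dim.values())
    print(f"text column: {before:,} bytes; keys + dimension: {after:,} bytes")
```

In the Power BI model, the fact table would then carry only the key column, related to a dimension table that holds the text once.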

You could also consider enabling the large dataset storage format in a Premium capacity, if that is possible for you, so that the dataset size is limited only by the Premium capacity size or by the maximum size set by the administrator.

https://docs.microsoft.com/en-us/power-bi/admin/service-premium-large-models

Thank you very much!

Best Regards,

Community Support Team _Robert Qin

<removing the incorrect response>

Hi @mholloway, since a Premium capacity license was (and still is) out of our budget, we first switched from Import mode to DirectQuery for the tables exceeding this limit.

The result was poor performance for end users.

So we added some aggregated tables at the data warehouse level, but this meant losing some of the detail requested by analysts.
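The aggregated-table approach can be sketched in the same way. This minimal Python sketch assumes a hypothetical fact layout of (order date, product, amount); in a real warehouse it would typically be a SQL `GROUP BY` view or table. It collapses rows to one total per product per month, which shows both why the table shrinks and why detail is lost: individual order dates no longer exist after aggregation.

```python
from collections import defaultdict
from datetime import date


def aggregate_monthly(rows):
    """Collapse (order_date, product, amount) rows to monthly totals.

    Returns a dict keyed by (year, month, product) with summed amounts.
    """
    totals = defaultdict(float)
    for order_date, product, amount in rows:
        totals[(order_date.year, order_date.month, product)] += amount
    return dict(totals)


if __name__ == "__main__":
    # Hypothetical daily-grain fact rows.
    rows = [
        (date(2021, 1, 5), "A", 10.0),
        (date(2021, 1, 20), "A", 5.0),
        (date(2021, 2, 1), "A", 3.0),
        (date(2021, 1, 7), "B", 2.0),
    ]
    monthly = aggregate_monthly(rows)
    # 4 detail rows become 3 aggregated rows; the exact order dates are gone.
    print(len(rows), "->", len(monthly))
    print(monthly)
```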

The final solution was to move the data source from an on-premises database to Parquet files stored in ADLS Gen2. This way we were able to reduce the size of the tables, and we no longer need a gateway.

Thanks for the reply, Alberto, very interesting to hear. We also considered DirectQuery (like you, we can't budget for Premium), but we were concerned it would be too slow for end users, so it sounds like you've confirmed our fears in that department! Thanks again for the reply.