PipelineException when running dataflow. CompressedDataSize exceeds!

Another day, and another strange message from PQ online. I was trying to refresh a PBI online dataflow, and received the following:

PipelineException: With compression algorithm the compressed data size in a packet exceeds the max ServiceBus limit: GatewayCompressor - CompressedDataSize (25906718) of a non-compressed packet exceeds the maximum payload size of 8500000 .

I'm not sure exactly how to resolve this. I was pulling a very large chunk of JSON and trying to encode it in a dataflow table for subsequent use in another table (a "computed entity"). However, it appears that PQ is not willing or able to help me out.

Does anyone have any idea how to send a very large chunk of JSON out into the storage account where my dataflows live?

I wish this error message was more meaningful. Most people shouldn't have to know or care about "servicebus", nor should they worry about bumping into some random/arbitrary 8.5 MB payload maximum.

Any help would be appreciated. This error doesn't come up in any Google searches related to Power BI.

While trying to fix this, I get another error with a similar format.

This time it's complaining about uncompressed sizes.

PipelineException: The uncompressed data size specified in a packet header exceeds max limit: GatewayDecompressor - Header.UncompressedDataSize (193364724) of a compressed packet exceeds the maximum allowed uncompressed payload of 157286421 .
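Both messages point at a per-packet size ceiling, so one thing that might be worth trying is splitting the JSON into smaller pieces before it ever reaches the dataflow. The following is only a minimal sketch, assuming the source is a JSON array of records and that it can be pre-processed in Python outside of Power Query. The function names are hypothetical, and treating the 8500000 figure from the first error as a per-chunk budget is an assumption, not documented gateway behavior.

```python
import json
from typing import Any, Iterable, Iterator, List

# Figure quoted in the first error message, in bytes. Using it as a per-chunk
# budget is an assumption; the thread does not say how the gateway packetizes data.
MAX_PAYLOAD_BYTES = 8_500_000


def encoded_size(record: Any) -> int:
    """Size in bytes of one record serialized as compact JSON."""
    return len(json.dumps(record, separators=(",", ":")).encode("utf-8"))


def chunk_records(
    records: Iterable[Any], budget: int = MAX_PAYLOAD_BYTES
) -> Iterator[List[Any]]:
    """Yield lists of records whose compact JSON array stays within `budget` bytes."""
    chunk: List[Any] = []
    size = 2  # the enclosing "[" and "]"
    for record in records:
        rec_size = encoded_size(record)
        if rec_size + 2 > budget:
            raise ValueError("a single record is larger than the budget")
        extra = rec_size + (1 if chunk else 0)  # comma before non-first items
        if chunk and size + extra > budget:
            yield chunk
            chunk, size, extra = [], 2, rec_size
        chunk.append(record)
        size += extra
    if chunk:
        yield chunk
```

Each chunk could then be serialized with `json.dumps(chunk, separators=(",", ":"))` and stored as its own row or file. Whether that actually avoids the PipelineException is untested here.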

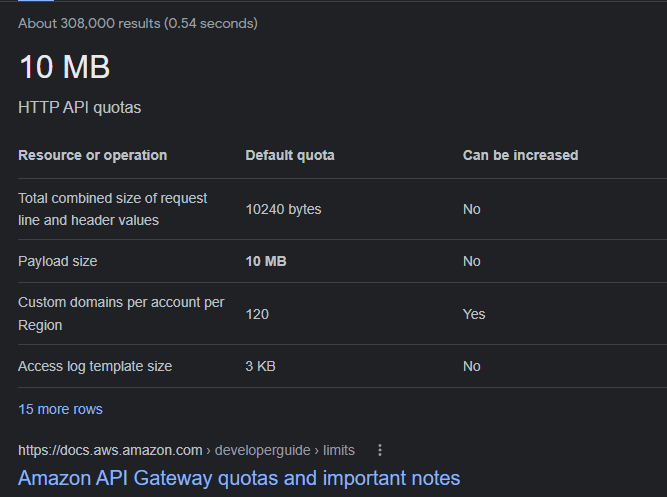

Gateways and APIs have payload size limits; AWS services, for instance, have them too.

I think moving to Premium capacity could help you out.

Regards

Amine Jerbi

If I answered your question, please mark this thread as accepted

and you can follow me on

My Website, LinkedIn and Facebook

We are already on Premium.

I really wish dataflows were more user-friendly. There should be a way to serialize arbitrary objects out there, for the sake of related entities ("computed entities").

These CSV-compatibility requirements are just plain obnoxious. There should be a way to save XML, JSON, text, and lots more. Even binary formats like parquet would be very helpful!

You can submit your idea or issue here:

https://community.powerbi.com/t5/Issues/idb-p/Issues

Who knows, they may help you out.

Regards

Amine Jerbi

Thanks for the tip @aj1973

Who knows? I know. I'm still waiting for some "public previews" that have been a work-in-progress for a couple years. I will wait for the more important items to be fixed before adding to the backlog.
