FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Vote for your favorite vizzies from the Power BI Dataviz World Championship submissions. Vote now!

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Re: Issues in Incremental refresh

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Issues in Incremental refresh

Hi,

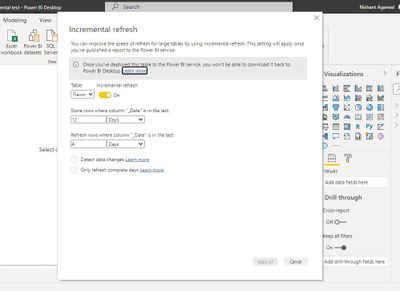

Our DB has only 4 days data, I have setup incremental refresh for storing 12 last days and refresh daily for last 4 days.

Here's my model:

Relationships:

1) Scores.StoreKey with Image.StoreKey( one to many)

2) Image._FileName with Flavor._FileName( one to many)

After deploying to service, refresh fails with following error:

Data source errorColumn '<pii>_FileName</pii>' in Table '<pii>Image</pii>' contains a duplicate value '<pii>POSM.jpg</pii>' and this is not allowed for columns on the one side of a many-to-one relationship or for columns that are used as the primary key of a table.

I checked the tables in DB, there are no duplicates on the one side of relationship.

Here's my incremental refresh setup:

I have tried couple of online available solutions but to no avail.

Any help will be appreciated.

Thanks,

Nishant

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @Anonymous

Please refer to the following solutions to see if they are helpful to you.

Problem: Refresh fails because of duplicate values

Cause: Post dates have changed

With a refresh operation, only data that has changed at the data source is refreshed in the dataset. As the data is divided by a date, it’s recommended post (transaction) dates are not changed.

If a date is changed accidentally, then two issues can occur: Users notice some totals changed in the historical data (that is not supposed to happen), or during a refresh an error is returned indicating a unique value is not in fact unique. For the latter, this can happen when the table with incremental refresh configured is used in a 1:N relationship with another table as the 1 side and should have unique values. When the data is changed (for a specific ID), that ID then appears in another partition and the engine will detect the value is not unique.

Solution: Refresh specific partitions

Where there is a business need to change some past data from the dates, a possible solution is to use SSMS to refresh all partitions from the point where the change is located up to the current refresh partition, thus keeping the 1 side of the relationship unique.

Please refer to the following documents.

https://docs.microsoft.com/power-bi/connect-data/incremental-refresh-troubleshoot

Best Regards,

Community Support Team _Charlotte

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Had the same problem.

In the Native Query I was using "Between" instead of >= and <

and as the incremental refresh was not sending 1 query for all the historical data but several small queries of partitions and putting them back together, the between statement in SQL is including both days (start and end) and that's the reason I had an overlap and a double counting.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @v-zhangti, great answer.

LOT of mis-information going around about this issue. I have situation where the duplicate error is on a PK INT field so obviously there's no duplicate on the source side.

So if I'm reading this correctly....when the post date changes thus causing a move in partitions, PBI "may" reload the new partition before the old thus causing the row to be present twice in the dataset model. You would think they'd delete everything from the changed partitions and reload at same time but must not be or this wouldn't be an issue.

This is unfortunate for those of us where we can't control what happens to the partitioning dates. All our data (like posting date) can change over time. Should it? No. But we have no control over what the business does in our sources. And we have a number of monster dimensions where it would be nice to use incremental refresh but alas it won't work in our situation.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What you are looking for is differential refresh or CDC. Not something Power BI is currently offering. Incremental refresh is designed to work with immutable data. If you know that your data changes post factum you will have to add a periodic full refresh to your schedule.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@lbendlin The fact that so many people are struggling with this issue means we aren't alone. We have no data that is immutable in our organization. If you're doing householding, golden record matching/survivorship or merging customer accounts then you will have changing history so I think this has to be a very common need.

Are you saying it will also be problematic for fact updates when they move partitions? I hope not. What I read is it sounds like it's only issue with M:1 dimension to fact type of relationship. We haven't hit a duplicate error yet with fact incrementals in our testing. Not to say it can't happen however.

We will be doing full refresh weekly but daily full refresh isn't practical for us in enterprise models. The attraction to PBI datasets over SSAS (besides dumping SSAS servers) is it's built-in support for incremental and partitioning as full refreshes even with SSAS take to long with larger models.

I wish someone would do deep dive into how this really works behind the scenes. Please let me know if you have seen someone do that. Everything I've seen just talks about how to set it up but not how it really works in-depth.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We're in the same boat. Lots of data changing over time (like an opportunity status, or an order amount). The only way we have found that is halfway acceptable is to have a separate process that judges which partitions need refreshing, and then initiates these refreshes via XMLA. If this sounds like the OLAP cube refreshes of yore - yep, that's what it basically is.

To add insult to injury - if you use standard incremental refresh partition management then you are bound by regular calendar periods. We use a fiscal calendar that often results in more partitions (or parts thereof) being refreshed than strictly needed.

There is no magic to any of this, it is all tedious work.

https://docs.microsoft.com/en-us/power-bi/connect-data/incremental-refresh-xmla Advanced incremental refresh and meta data changes

https://docs.microsoft.com/en-us/power-bi/admin/service-premium-incremental-refresh#override-increme... special features for incremental refresh

https://learn.microsoft.com/en-us/power-bi/connect-data/incremental-refresh-troubleshoot Troubleshoot incremental refresh

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Late reply but for those that come here after a search:

I believe it needs an immutable datetime column, not necessarily that the whole row needs to be immutable. If you have an immutable column like a creationdate log column you can use that together with detect data changes on a modifieddate log column. If you set your partitions at the month level for example it will scan each month for a change in last modifieddate and then refresh the entire partition.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Martin1986 I was more talking about the general concept of incremental refresh. It works well with any data that does not change after the fact (like IoT sensor readings). It does not work well with SCDs like order line status changes etc. For that you would need differential refresh, the ability to touch individual rows in a partition. For now it is flush and fill on partition level (well, blitting).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, thanks for the quick reply. I'd say that flush and fill on partition level with detect data changes is still a huge improvement on load compared to a full refresh in many cases. I wanted to state that because otherwise ppl who do not have very strict requirements might come to this thread and not try incremental refresh because their data isn't immutable. Or am I missing something except for that it doesn't handle potential deletes.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

"detect data changes" comes with a huge penalty. You get a canary dataset semantic model in addition to your regular one. We usually recommend against that. You as the business owner should know which data has changed, and you can then refresh the affected partitions yourself.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Could you elaborate on 'canary semantic model' ?

I do not know the exact workings, but I guess it stores a table that lists for each partition the max modifieddate to compare against when it checks for changes?

And if you move to scripting such logic yourself, e.g. writing a script that checks which partitions are changed and use the Power BI API to refresh only those... then a) is the only benefit avoiding the canary semantic model? b) aren't you then already on a level of dealing with data that can handle the upserts as well and you don't need the build in incremental refresh policy settings at all?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

"detect data changes" is a myth. you can't really do that, so you resort to smoke and mirror. That means you clandestinely load data into a shadow dataset (the canary) and then compare against what the user sees (the original dataset). Once a difference is found you then alert the user "hey, I detected a data change".

You can see that when looking at the DMVs, they will mention "canary". These artifacts also show on Dataflows.

Think of Incremental Refresh as a (convenient) corner case for partition management. You can create, fill, and merge your own partitions via XMLA, regardless if you need this to be incremental or not.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

My understanding of 'detect data changes' is that it simply queries your source for the MAX ModifiedDate logfield you set as column to detect changes on and compares it with its previous value. It does this for every partition. So if you have 24 months of refresh scope it would do 24 queries for each start-end date per partition and retrieve the max modified date. So my understanding is that as long as you have such a log field in your source it would work quite well? I would use the same logic to detect a change in data myself to be honest if I were to script it.

I also have set a custom polling M query so that it can accept a different field to monitor. For example I use it with an API which can't be queried for MAX modifieddate as it doesn't fold, but I entered a custom M query that calls the API to sort on modifieddate and return first row.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@lbendlin You're not the bearer of good news...in fact I'd say you just did dropped the american proverbial chunk of coal into my stocking. I was afraid the ultimate answer was what you suggest but didn't want to go there. Only easy-button answers allowed here from now on 😁

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @Anonymous

Has your problem been solved, if so, please consider Accept a correct reply as the solution or share your own solution to help others find it.

Best Regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @Anonymous

Please refer to the following solutions to see if they are helpful to you.

Problem: Refresh fails because of duplicate values

Cause: Post dates have changed

With a refresh operation, only data that has changed at the data source is refreshed in the dataset. As the data is divided by a date, it’s recommended post (transaction) dates are not changed.

If a date is changed accidentally, then two issues can occur: Users notice some totals changed in the historical data (that is not supposed to happen), or during a refresh an error is returned indicating a unique value is not in fact unique. For the latter, this can happen when the table with incremental refresh configured is used in a 1:N relationship with another table as the 1 side and should have unique values. When the data is changed (for a specific ID), that ID then appears in another partition and the engine will detect the value is not unique.

Solution: Refresh specific partitions

Where there is a business need to change some past data from the dates, a possible solution is to use SSMS to refresh all partitions from the point where the change is located up to the current refresh partition, thus keeping the 1 side of the relationship unique.

Please refer to the following documents.

https://docs.microsoft.com/power-bi/connect-data/incremental-refresh-troubleshoot

Best Regards,

Community Support Team _Charlotte

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi all. I had a similar situation. we enabled incremental refresh on a dataflow. When i go to refresh history and download it, it shows "NA" as number of rows processed. How is this even possible ?? When we run a full load or incremental load, it will process certain amount of rows right?? Any help would be greatly appreciated.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If Power BI says there are duplicates then that means there are duplicates.

NOTE: Power Query is case sensitive. Power BI is not. So while you may not have duplicates in Power Query you may have them in Vertipaq

NOTE: a value with a CR/LF at the end (like from a bad Excel copy/paste) is nearly indistinguishable from a value without that issue. Chase it in Power Query using the string length.

Helpful resources

Power BI Dataviz World Championships

Vote for your favorite vizzies from the Power BI World Championship submissions!

Join our Community Sticker Challenge 2026

If you love stickers, then you will definitely want to check out our Community Sticker Challenge!

Power BI Monthly Update - January 2026

Check out the January 2026 Power BI update to learn about new features.

| User | Count |

|---|---|

| 46 | |

| 38 | |

| 36 | |

| 25 | |

| 25 |