Join us at FabCon Vienna from September 15-18, 2025

The ultimate Fabric, Power BI, SQL, and AI community-led learning event. Save €200 with code FABCOMM.

Get registered- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Compete to become Power BI Data Viz World Champion! First round ends August 18th. Get started.

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Re: Incremental Refresh on a dataset with Dataflow...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Incremental Refresh on a dataset with Dataflows as a data source

Hi,

I have a question about implementing Incremental Refresh on a dataset that connects solely to Dataflows. I tried setting it up but it appears Dataflows do not support query folding.

Few questions:

- Is it possible in any way to get Incremental Refresh working on a dataset that runs on Dataflows at this moment?

- If not, is there anything on the roadmap to enable this?

- If not, is there any way to refresh only part of a Dataflow using a workaround?

It feels really weird to have all the data in Azure Data Lake (Dataflows) but not being able to load it into a dataset due to memory issues.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

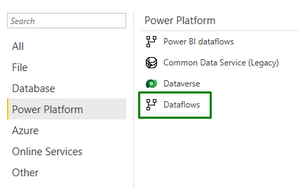

This seems to be working with the "Dataflow" connector (but not with the "Power BI Dataflow" connector).

The source Dataflow should be on SQL Cache (Enhanced Compute Engine On) to enable DirectQuery so the Incremental Refresh filter can be folded.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Any updates on this?

June 2022...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Did you find a solution? I'm having the same problem and I can't find anything about it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous @Anonymous

Any luck at all? I just found this thread while searching for a solution:

1) I have a 50+ million row dataflow which updates incrementally (under 1 minute to refresh which is pretty neat)

2) The visualization developers need to create datasets based on my dataflow, and make some additional transformations or even join multiple entities

This led to an initial challenge since PBI desktop tried to download all 50 million rows. As a workaround, I created an entity with a small slice of the data. They have parameters for workspace_id, dataflow_id, and entity_name that they can swap over to point to the real historical dataflow once they are done.

3) They seem to need to schedule their dataset to refresh (which is based on my dataflow), and it appears to do a complete refresh and does not respect the incremental nature of the dataflow source at all. Seems like a waste of resources and time.

The ideal state for me would be: 1) I publish an incremental dataflow, 2) people connect to that dataflow and make different datasets, 3) my scheduled dataflow refresh would refresh all downstream datasets incrementally as well

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ldacey ,

Unfortunately not. To summarize:

- Incremental Refresh implemented on datasets that connect to Power Bi Dataflows does not work at the moment. The reason is that the queries can not get folded to the data source (https://docs.microsoft.com/en-us/power-query/power-query-folding). Which is weird, obviously.

- If your workspaces are in a Premium capacity, you could try out DirectQuery on Dataflows (https://powerbi.microsoft.com/en-us/blog/power-bi-dataflows-direct-query-support/). I haven't tested this yet, so I can't share any learnings. As far as I know though, there are some limitations to DirectQuery in general (https://docs.microsoft.com/en-us/power-bi/connect-data/desktop-use-directquery). So you might want to scroll through that page before trying it.

What we did on our side is manage this in our database, where we create SQL VIEWS per use case which we ingest into datasets directly. Since our data source (Snowflake) supports query folding, we can use Incremental Refresh to ingest the data.

Hope this helps!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @pat_mecee ,

While composite models is a great solution to a whole lot of problems, I'm afraid it won't 'solve' this one. As far as I know, it won't change the environment that Dataflows is set up in and so it does not support query folding (needed for incremental refresh).

You could of course try to use DirectQuery on Dataflows, but I'm not sure if you will reach the same level of performance as storing it in-memory in a data model.

I am seriously considering cutting out Dataflows entirely and directly connect to the database from the PBI dataset. It seems like less overhead.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Based on some tests I did on enhanced compute dataflows (which do support some query folding, see https://ssbipolar.com/2020/09/16/dataflows-enhanced-compute-engine-will-it-fold/ and within that article there is a link to which queries will fold), it seems like incremental dataset refreshes against an incremental dataflow can work.

I didn't proceed very far, but I set up an incremental refresh dataset which is pointed towards a dataflow (enhanced compute turned on, incremental refresh turned on). I then checked the XMLA endpoint in SSMS and I inspected the partitions. They contained the dates/rows I was expecting and each time I refreshed the test dataset it updated the correct partitions.

But yes, dataflows have been a headache with some weird failures and behavior. Sad because it is nice to allow people to access data and do their own thing without requiring access to the source (either DB or Azure Blob parquet datasets in this case). And I do not need to open up PBI desktop at all which is nice because I can do my job (providing data access) within PBI service. I am hoping for more improvements and expanded documentation..

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ldacey,

Thanks for replying. Sounds like that could be a viable solution.

In theory, Dataflows are an awesome way to share data sources throughout the company and I still believe it's the way forward. I'm curious to hear from you once you have some more test results.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @Anonymous,

Any updates from your/Microsoft side ?

Could you please officially confirm, that our requirement

- Incremental Refresh of Dataflow

- Incremental Refresh of Datasets depending on this Dataflow

is technically possible and supported by Microsoft.

Thanks and best regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @GilbertQ ,

Sorry to bother you again. I read the related blogs you wrote about increment refresh, it have good explanation of increment refresh. May I ask for your help answer @Anonymous 's question about increment refresh of dataflow? Thanks for your help in advance.

Any updates from your/Microsoft side ?

Could you please officially confirm, that our requirement

- Incremental Refresh of Dataflow

- Incremental Refresh of Datasets depending on this Dataflow

is technically possible and supported by Microsoft.

Best Regards

Rena

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As far as I know it is possible to incrementally refresh the dataflow.

I do not think it is currently supported in Power BI pro to incrementally refresh off a dataset depending on a dataflow.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @GilbertQ ,

Thanks for your quick response and answer !

According to Microsoft (https://docs.microsoft.com/en-us/power-bi/transform-model/service-dataflows-incremental-refresh) "Dataflow incremental refresh and dataset incremental refresh are designed to work in tandem", i.e. in an Enterprise Datawarehouse (DWH) setting the Dataflows (usually many of them) ingesting large amounts of data every night into Power BI Azure (implicit) datalake need to use the Incremental Data Refresh and the depending Datasets, that have to be refreshed after the ingest, must be incrementally refreshed as well to keep within the timelimits set by a nightly dataload (usually only a few hours after all other source systems have submitted the data to the DWH).

If this (incremental refresh of Dataflows and incremental refresh of depending Datasets) is not supported/working then Power BI is not really ready for Enterprise DWH reporting/analyses.

What is your opinion on this or do you see an alternative way to use Power BI in an Enterprise scenario ?

Thanks and best regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Always if you want an enterprise DW then you would need to build an enterprise data warehouse that would be separate to dataflows in my opinion.

Whilst dataflows are great they do not have all the features that you would expect to have in a data warehouse table.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @GilbertQ

Thank you very much for your answer, you are right that Dataflows do not offer all features of a "real DWH" - therefore the setup described above is using an AWS Redshift DWH => Incremental Refresh on Dataflows => Incremental Refresh on depending Datasets because we would like to use Power BI (Service) to query/anlayse the data from the DWH.

We were planning to use Dataflows as an abstraction layer and ingest mechanism as proposed by Microsoft so that this ingested data can be used by the depending (Shared-) Datasets and thereby to implement a modern Self-Service BI solution.

Because of the size of the data and the limited time available during nightly data refesh we require an incremental data refresh on all stages of the dataload (Dataflows & Datasets) to provide our users with updated date every day at 07:00 latest.

So the the incremental data refreshs have to work in tandem - currently it appears that this (incremental refreshs for both Dataflows and depending Datasets) is not working (yet) and we still have no official confirmation from Microsoft if this will be working/implemented in the future. If this is not working/or not designed this way we don't see that Power BI can be used in larger Enterprise environments...

Thanks again for your support and best regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Currently you would have to stager them.

And yes currently unless you are using Power BI Premium you can use Enhanced data flow which should allow for incremental refreshing.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Databeest,

You are absolutely correct - Power BI Dataflows ingest the data from external sources into the (implicit) Azure Datalake so the question is if Datasets can execute "query folding" towards Dataflows as this is a requirement for the efficient implementation of "Incremental Refresh", i.e. the queries have to check any changes in the underlying data partitions as created by the Incremental Refresh settings.

So the simple question to Microsoft is - can Datasets use Incremental Refreshs on Dataflows ? As the "tandem" Dataflow => Datasets is the proposed Data Ingest and Query Strategie within Power BI I strongly hope (request !) that this is working - if not Power BI NOT ready for Enterprise Business Intelligence !

Thanks for your clarifying on this, best regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous,

Thanks for backing me up :).

I tried to ask this simple question multiple times in this thread but it doesn't seem to land properly. I just want to know if it's on the roadmap at least. Anyways, it became a lower priority for me since the rollout of Direct Query on Dataflows. Haven't gotten the chance yet to test it properly, but at least in theory it offers a solution to the unnecessary refresh time of the datasets that are running on Dataflows.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

Thanks for your answer and hint regarding "DirectQuery on Dataflows".

I am not sure if this is really an "equivalent" solution because like this we cannot use the (speed & performance) advantage of importet Datasets (i.e. in-memory/columnar engine) so I wonder if you have already results and user feedback for interactive queries/filters on "DirectQuery-based" reports ? Are they still performant (latency ?) at all and especially with a larger number of users sending direct queries every time to the underlying Dataflow ?

@AnonymousCould you please check again on the "simple question" if the setup "Incremental Data Refresh of Datasets on incrementally refreshed Dataflows" works and is officially supported by Microsoft, thank you.

Best regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous Not yet. I also don't think it will be an equally performing solution. While it feels like it should be the type of solution that helps us leverage Dataflows to scale throughout large organisations.

Anyways, it doesn't seem like we are getting a satisfying answer from Microsoft regarding our problem.

I'll let you know when we've tested the DirectQuery solution.

Helpful resources

| User | Count |

|---|---|

| 39 | |

| 14 | |

| 12 | |

| 12 | |

| 11 |

| User | Count |

|---|---|

| 49 | |

| 35 | |

| 25 | |

| 21 | |

| 18 |