Join us at FabCon Vienna from September 15-18, 2025

The ultimate Fabric, Power BI, SQL, and AI community-led learning event. Save €200 with code FABCOMM.

Get registered- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Enhance your career with this limited time 50% discount on Fabric and Power BI exams. Ends August 31st. Request your voucher.

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Re: Deployment Pipline, staging

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

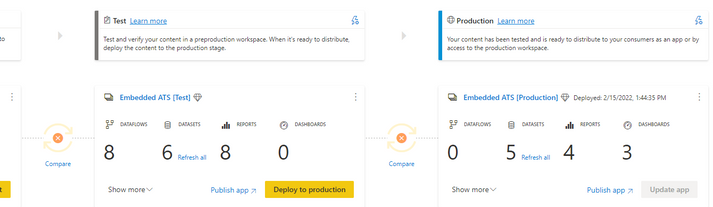

Deployment Pipeline, staging

Hi, a question about best practices when using Deployment Pipelines. When deploying a dataset with a report from Test to Production, it seems that only the metadata is copied, not the refreshed data in the dataset. The production version of the dataset is overwritten, so it needs to be refreshed before the production deployment can be used by anyone. In my case the refresh takes an hour, so the report based on the dataset is unavailable to the users for all that time. It would have been better if the original dataset in production was not overwritten until the refresh had finished, as is the case when publishing directly from Power BI Desktop to Power BI Service. Is there a better way to do this?




To reiterate @edhans's words: as long as there are no breaking changes, you should be able to deploy to the Production dataset without any downtime for the users. When there are breaking changes, you will get a notification and can decide to stop the deployment.

Another option is to use the pipeline's Azure DevOps (ADO) extension to schedule deployments at night and run a refresh right after that.
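For anyone who wants to script the "deploy at night, then refresh" idea without the ADO extension, here is a minimal sketch of the two calls involved. It assumes the Power BI REST API's pipeline `deployAll` and dataset `refreshes` endpoints, and that stage order 1 is Test. Please verify both against the current API reference. The helper names (`build_deploy_request`, `build_refresh_request`, `send`) and all IDs are hypothetical. The builders only describe the requests, and nothing is sent until you call `send` with a valid access token.

```python
import json
import urllib.request

# Assumption: base URL of the Power BI REST API.
API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_deploy_request(pipeline_id, source_stage_order=1):
    """Describe a 'deploy everything' call from one stage to the next.

    Assumption: stage order 1 is Test, so the target is Production.
    """
    return {
        "method": "POST",
        "url": f"{API_ROOT}/pipelines/{pipeline_id}/deployAll",
        "json": {
            "sourceStageOrder": source_stage_order,
            "options": {
                # Allow overwriting items that already exist in the target stage.
                "allowOverwriteArtifact": True,
                "allowCreateArtifact": True,
            },
        },
    }


def build_refresh_request(workspace_id, dataset_id):
    """Describe a refresh of the production dataset after deployment."""
    return {
        "method": "POST",
        "url": f"{API_ROOT}/groups/{workspace_id}/datasets/{dataset_id}/refreshes",
        "json": {"notifyOption": "NoNotification"},
    }


def send(request, token):
    """Send a described request with a bearer token (hypothetical helper)."""
    req = urllib.request.Request(
        request["url"],
        data=json.dumps(request["json"]).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method=request["method"],
    )
    with urllib.request.urlopen(req) as resp:
        return resp.status
```

A scheduled job (cron, Task Scheduler, or a DevOps pipeline) could call `send(build_deploy_request(...), token)` at night and then `send(build_refresh_request(...), token)`. The deployment runs asynchronously, so the job should wait for it to finish before starting the refresh. This only moves the downtime to off-hours; it does not remove it.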


OK. I will check out the pipeline extension you referred to. I have now "solved" the issue by creating alternate workspaces with their own pipeline, which I now use for staging and swapping when deploying into production.


My understanding is that the data will not be overwritten unless you changed the model and the model of the production dataset no longer matches the new metadata from Test.

The assumption is (right or wrong) that by the time you get to Production, the model is pretty stable.

Can you give more specifics on the changes you are making?


OK, thanks. What I am looking for is a way to deploy from Test to Production (using the Deployment Pipeline) with changes to the model, where the target dataset is not overwritten until the very moment the refresh is finished, so that the report stays accessible and functional for the users the whole time. This is how it works (I assume) when I publish from Power BI Desktop to Power BI Service. An hour of downtime for every such deployment is not really acceptable for our customers.

Is it perhaps possible to achieve this some other way, e.g. by using the XMLA endpoints?

It could be useful to be able to schedule the deployment to nighttime in some way.

E
