
Dataflow vs Dataset refresh

Haven't found any documentation on how data refresh works with respect to a dataflow and then a dataset sourced from that dataflow.

So looking for feedback, based on what I've discovered:

- Both a dataflow and dataset need data to be refreshed

- So I assume the dataflow is much like a data storage component on its own that manages the updating from the data source, wherever that may be

- And the dataset will refresh data from the dataflow 'storage'

- Thus a logical refresh sequence (such as setting a scheduled refresh) would see the dataflow update first, then the dataset afterwards (maybe 30 mins later, as I suspect doing both at the same time may not yield the right results)
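To make the staggered schedule concrete, here is a minimal sketch that derives dataset refresh times from dataflow refresh times. The function name `offset_times` is made up for illustration, and the 30-minute gap is only the guess from the list above; the right gap depends on how long the dataflow refresh actually takes.

```python
from datetime import datetime, timedelta


def offset_times(dataflow_times, offset_minutes=30):
    """Shift each HH:MM time by offset_minutes, wrapping past midnight."""
    shifted = []
    for hhmm in dataflow_times:
        # strptime fills in a dummy date, so adding a timedelta is safe
        moved = datetime.strptime(hhmm, "%H:%M") + timedelta(minutes=offset_minutes)
        shifted.append(moved.strftime("%H:%M"))
    return shifted


# Dataflow scheduled at 09:00 and 23:45, dataset 30 minutes after each
dataset_times = offset_times(["09:00", "23:45"])
```

The resulting times would still have to be entered in each dataset's scheduled refresh settings.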

I've come to this conclusion after seeing the behaviour of having one or the other set for scheduled refresh.

I'm also seeing inconsistency in the workspace contents view where it shows last and next refresh times.

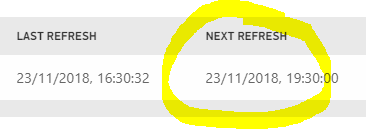

For this dataflow, I've toggled off the scheduled refresh but it still shows a Next Refresh time (I would expect not to see any time stamp):

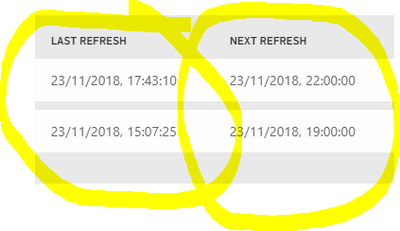

Both of these datasets have been refreshed more recently than indicated here.

Here's the first one:

And the second:

A bug?


>So I assume the dataflow is much like a data storage component on its own that manages the updating from the data source, wherever that may be

That's correct. Technically, Power BI dataflows use Azure Data Lake Gen2 for storage.

One use case I have in mind for this dual refresh structure is sources that don't need to be refreshed (e.g. static files): they can be imported into dataflows that are not under scheduled refresh. I've found PBI's scheduled refreshes fail easily, so limiting the service's scheduled refreshes to sources that actually need them should reduce incidents (e.g. web API timeouts, credential issues).

1. How to get your question answered quickly - good questions get good answers!

2. Learning how to fish > being spoon-fed without active thinking.

3. Please accept as a solution posts that resolve your questions.

------------------------------------------------

BI Blog: Datamarts | RLS/OLS | Dev Tools | Languages | Aggregations | XMLA/APIs | Field Parameters | Custom Visuals


Hi,

After internal checking, it seems that the next refresh time update (without browser refresh) was fixed, and the fix should be available in the following updates.

I will keep monitoring it.

Thanks,

Assaf


I believe Dataflows now have DirectQuery capability in Premium, so you could simply avoid refreshing the dataset.

Though given the performance and stability of dataflows, I would think a composite model would be a better approach to limit the amount of DirectQuery happening.


Hi,

I don't understand... why do we have to schedule the Dataset? From all the Microsoft articles I read about Dataflows, it was made to look like a dataset would automatically connect to the Dataflow and show users the latest data. Does this mean that if I create 10 datasets using my enterprise Dataflow, I need to configure all 10 Datasets to refresh separately? On top of that, we have to rely on the API to get faster updates? This is totally unproductive and defeats the purpose of having all my data in cloud services already!

I hope none of this is true and I don't have to keep separate schedules for Dataflows and Datasets!


@Anonymous dataflows are not pushing data directly to datasets, they're just making the data available in a datalake. You might even have a dataflow that does not have a destination dataset. You do have to schedule refreshes in your datasets separately from your dataflows, just like you have to schedule refreshes against any other data source.


@otravers Thanks for the response. I still feel this defeats the purpose of doing the ETL on Power BI's own storage, where my Datasets also reside. The purpose of Dataflows was to have a centralized data source that all reports can consume. Now it's basically telling me to take care of individual report refreshes, in spite of having everything in a "centralized" place?! Ideally a Dataset should work like a "Direct" Query to the Dataflow, not an Import.


@Anonymous wrote: Ideally Dataset should work like a "Direct" Querying to Dataflow, not an Import

Let me try and explain why I disagree. That is really not "ideal", as Direct Query is meant to be used over structured data sources, not unstructured data lakes. Azure Data Lake is not supported as a source for DQ:

https://docs.microsoft.com/en-us/power-bi/desktop-directquery-data-sources

Moreover Import performs better than DQ and is really Power BI's preferred mode unless you have too much data or need real-time updates.

You can make the case that it should be easier to sync dataflow and dataset refreshes, but I think you're possibly confused about some of the architectural options offered by Power BI. The fact that you're using dataflows as a data source in a dataset should not limit how that dataset can work or be refreshed.


If I have a Power BI report whose single data source is a Dataflow, and I schedule a refresh of the dataset in the service, is that "refresh" just pulling down the data already there in the dataflow? I.e. the dataset refresh is not triggering another refresh in the lake?


@thisisausername dataflow and dataset refreshes are completely separate. Refreshing one does not automatically refresh the other. If you want to trigger one refresh when the other is done, you'll have to set that up using APIs.

Edit: watch the following video from Guy in a Cube if this still needs further clarification

https://www.youtube.com/watch?v=xA6YouSI6kY
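To illustrate that these are two separate calls, here is a minimal Python sketch that builds the refresh request for either item type against the Power BI REST API. The helper names (`build_refresh_request`, `send`) are made up for this example, and it assumes you already have an Azure AD access token with the right permissions; obtaining one is out of scope here.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_refresh_request(kind, group_id, item_id, access_token,
                          notify_option="NoNotification"):
    """Build (but do not send) the POST request that starts a refresh.

    kind is "dataflows" or "datasets"; group_id is the workspace ID.
    """
    if kind not in ("dataflows", "datasets"):
        raise ValueError(f"unsupported kind: {kind!r}")
    url = f"{API_ROOT}/groups/{group_id}/{kind}/{item_id}/refreshes"
    body = json.dumps({"notifyOption": notify_option}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


def send(request):
    """Send a prepared request and return the HTTP status code."""
    with urllib.request.urlopen(request) as response:
        return response.status
```

You would build and send one request for the dataflow and, once it has finished, a second one for the dataset. Nothing in the service links the two for you.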


Do I understand correctly that you found that both the Dataflow and the Dataset need to be refreshed (either manually or on a schedule)? That's quite an unfortunate dependency... I expected the Dataflow to need to be scheduled, but the Dataset to be "DirectQuery".


I do love dataflows and what they allow us to do (preparing entities only once and using them everywhere is a dream come true).

However, that's really disappointing... I will now suffer from more delay: the dataflow will have to refresh first (let's say at 9:00), and half an hour later the dataset (9:30). I wish datasets would automatically refresh when the dataflow does...


Yes I don't understand this either. Hopefully someone can elaborate. Even if my report only connects to a single dataflow, it still creates a "dataset" when I publish it and thus I have to manage two refresh schedules (one for dataflow and one for dataset). I can see some use cases but I would think most of the time the preferred functionality is for a dataflow refresh to automatically kick off the associated dataset refresh.


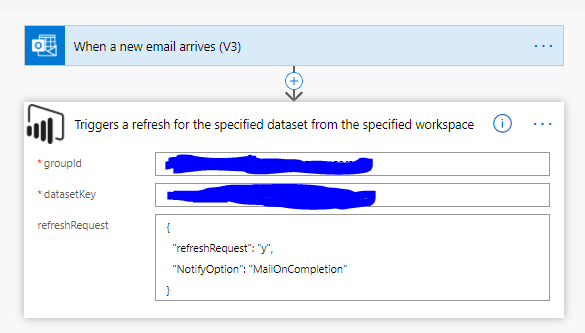

The connection between Dataflow and Dataset refreshes could be automated using the API and MS Flow. Something like this:

1. Trigger your dataflow refresh via the API, for instance using PowerShell, which you could host/run from Azure Functions

- https://docs.microsoft.com/en-us/rest/api/power-bi/dataflows/refreshdataflow

- https://insightsquest.com/2019/03/03/refresh-power-bi-datasets-with-powershell/

- https://eriksvensen.wordpress.com/2017/08/16/updating-a-powerbi-dataset-using-microsoft-flow-azure-f...

Incidentally, this approach gives you more fine-grained control of your refreshes than the static scheduling options in the Power BI service. For instance, you could trigger your dataflow refreshes based on sources being updated, or integrate your Power BI refreshes into broader Azure Data Factory processes:

- https://blog.crossjoin.co.uk/2018/10/21/calling-the-power-bi-rest-api-from-microsoft-flow-part-2-ref...

- https://altis.com.au/greater-control-of-your-power-bi-datasets-with-the-power-bi-rest-api/

2. Use NotifyOption in the API call above with MailOnCompletion sending an email to an inbox monitored by a Flow workflow. This email is your Flow trigger.

3. Trigger your "client" dataset refresh via the Power BI REST API as the follow-up action in the same Flow, as explained here:

- https://medium.com/@Konstantinos_Ioannou/refresh-powerbi-dataset-with-microsoft-flow-73836c727c33

- https://docs.microsoft.com/en-us/rest/api/power-bi/datasets/refreshdataset

4. Push a notification (email, RSS, Teams etc.) once the thing is complete, based on a similar flow triggered by the dataset refresh's success (or failure). A side benefit of handling your own notifications is that you get to choose the format, content, notification channel, and recipient(s), whereas the Power BI service hard-codes all of these.
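For anyone who prefers a script over a Flow, the four steps above can be sketched roughly as follows. This is not the Flow setup described above: the email trigger in step 2 is swapped for a plain "wait until done" callable, and every name here (`run_refresh_chain` and its four parameters) is hypothetical. The callables are passed in so you can plug in your own API calls and notification channel.

```python
def run_refresh_chain(refresh_dataflow, dataflow_succeeded, refresh_dataset, notify):
    """Run the dataflow refresh, then the dataset refresh, then notify.

    refresh_dataflow:   callable that starts the dataflow refresh (step 1)
    dataflow_succeeded: callable that blocks until the dataflow refresh ends
                        and returns True on success (stands in for step 2)
    refresh_dataset:    callable that starts the dataset refresh (step 3)
    notify:             callable taking a message string (step 4)
    """
    refresh_dataflow()
    if not dataflow_succeeded():
        # Do not refresh the dataset on top of a failed dataflow refresh
        notify("Dataflow refresh failed; dataset refresh skipped")
        return False
    refresh_dataset()
    notify("Dataflow refreshed; dataset refresh requested")
    return True
```

Note that the final message only says the dataset refresh was requested. Reporting its actual success or failure would need one more wait step of the same kind.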


Hi @otravers

I have created a custom connector in Power Automate to refresh the dataflow, but I am not getting an email on refresh completion. I have followed the links you provided. Do you have any idea why I am not receiving the email?


Hey

Make sure you set the "NotifyOption" parameter to "MailOnCompletion". You will receive an email on both failure and success of the refresh.

Refer to this Doc: https://docs.microsoft.com/en-us/rest/api/power-bi/dataflows/refreshdataflow#notifyoption


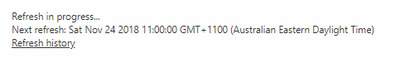

I ended up writing a blog post elaborating on my initial entry to tie everything together in Power Automate. However, even though I'm using the NotifyOption, I'm not getting emails when dataflow refreshes succeed or fail, not even from the documentation's Try It button. I'll update once I figure out why, but this is puzzling as I followed the documented syntax to the letter.

https://www.oliviertravers.com/power-automate-powerbi-dataset-dataflow-refreshes/

@Anonymous can you share screenshots of how you set it up?


@otravers

Here is a screenshot of flow setup. I use the same for Dataflow as well


@Anonymous and you're actually getting emails for dataflows? I contacted Microsoft support and they pointed me to this page that states:

"Triggers a refresh for the specified dataflow. The only supported mail notification options are either in case of failure, or none. MailOnCompletion is not supported."

Edit: I got confirmation that the documentation is incorrect and will be corrected in the next couple of weeks.
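Given that difference, it may be worth guarding against sending an unsupported value before calling the API. Below is a minimal sketch; the function name and lookup table are made up for illustration. The dataflow values follow the excerpt quoted above, and the dataset values are the three the dataset refresh documentation lists, so check both against the current docs.

```python
# notifyOption values accepted per item type (see the lead-in for sources)
ALLOWED_NOTIFY_OPTIONS = {
    "dataflow": {"MailOnFailure", "NoNotification"},
    "dataset": {"MailOnCompletion", "MailOnFailure", "NoNotification"},
}


def check_notify_option(item_type, notify_option):
    """Return notify_option if it is allowed for item_type, else raise."""
    allowed = ALLOWED_NOTIFY_OPTIONS.get(item_type)
    if allowed is None:
        raise ValueError(f"unknown item type: {item_type!r}")
    if notify_option not in allowed:
        raise ValueError(
            f"{notify_option!r} is not supported for a {item_type}; "
            f"use one of {sorted(allowed)}"
        )
    return notify_option
```

With this in place, a dataflow refresh request asking for MailOnCompletion fails early in your own code instead of silently never sending a mail.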


New dataflow API call to retrieve dataflow refresh requests:

https://docs.microsoft.com/en-us/rest/api/power-bi/dataflows/getdataflowtransactions
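With this call you can poll for completion instead of relying on an email. Here is a minimal sketch of the polling logic only. The HTTP call is left to a `fetch_transactions` callable that you supply, and the response field names (`value`, `startTime`, `status`) and the `"InProgress"` status string are assumptions to verify against the documentation linked above.

```python
import time
from datetime import datetime


def parse_timestamp(value):
    """Parse a UTC timestamp such as 2020-12-11T12:35:34.94Z."""
    head, _, fraction = value.rstrip("Z").partition(".")
    parsed = datetime.strptime(head, "%Y-%m-%dT%H:%M:%S")
    # Pad or truncate the fractional part to microseconds
    microseconds = int((fraction + "000000")[:6]) if fraction else 0
    return parsed.replace(microsecond=microseconds)


def latest_transaction_status(response):
    """Return the status of the most recently started transaction, or None."""
    transactions = response.get("value", [])
    if not transactions:
        return None
    latest = max(transactions, key=lambda t: parse_timestamp(t["startTime"]))
    return latest.get("status")


def wait_for_dataflow(fetch_transactions, in_progress=("InProgress",),
                      poll_seconds=60, max_polls=30, sleep=time.sleep):
    """Poll until the latest transaction is no longer in progress."""
    for attempt in range(max_polls):
        status = latest_transaction_status(fetch_transactions())
        if status is not None and status not in in_progress:
            return status
        if attempt < max_polls - 1:
            sleep(poll_seconds)
    raise TimeoutError("dataflow refresh did not finish in time")
```

One caveat: if you poll immediately after triggering a refresh, the latest transaction may still be the previous run, so you may want to compare its start time against the moment you triggered the refresh.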


Good news, detailed triggers and actions for dataflows are coming soon to Power Automate which will make this refresh orchestration much easier. See 33 minutes into this video:

https://myignite.microsoft.com/sessions/d784f75a-f081-468d-84b2-d500142c5998
