FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Vote for your favorite vizzies from the Power BI Dataviz World Championship submissions. Vote now!

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Re: Dataflow Timeout

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dataflow Timeout

Hi all,

Anyone know a way to stop the dataflow timing out.

Datasource is on-prem SQL database, using a native query. Result set is in the millions

Thanks

Mike

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Mike,

Please send to our support email the screenshot with the failure you got, so we can investigate further.

Thanks,

Galia

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The default timeout on the SQL Connector is 10 minutes but there is a parameter in the connector's config where you can specify a longer duration. Here's an example setting the timeout on the command to 30 minutes:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Not sure if anyone is still concern about this; but you can split the whole datatable in SQL into multiple segments; then the oldest data is loaded overnight one by one. Then at the transforming phase, you can merge them all together using resource of Power BI service. That should work to bring in huge amount of data like you said.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have the same issue, I have been searching for a solution for the past week.

When trying to refresh a dataflow of small size it is always succesful, but when the size gets bigger it fails in 4 or 6 minutes ( exactly) which makes me think it is a timeout setting.

I don't know where to address this issue and get an actual answer.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

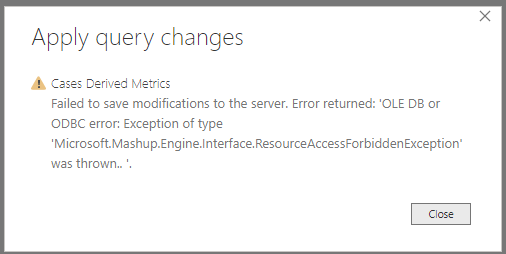

Same issue here.... if I paste M code from advanced editor, sometimes it works when I save & close, and sometimes it still fails saying i have a query error. The error is that the query timed out.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can anyone share the solution to this? Not sure the marked solution is actually a solution

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have no solution for the problem, but did use the following workaround.

I created the desired query in PowerBI Desktop. Then I captured the M code from the advanced editor and pasted it into the editor in Dataflows. Then I just clicked 'Close and Save' and navigated away without waiting for the preview to finish.

The dataflow loaded without problem, but managing the query has to be done out of Power Query Editor, and then copied into the editor in the Dataflows interface.

Not a very satisfying approach, but it is functional.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Interestingly I tried to save my entity again after-hours and it worked a treat, so guessing some kind of memory/capacity thing?

I work for a global company and I'm based in UK I was trying to get this done at 2pm today, just as our friends across the pond come online. I had success at 6pm

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What is the solution to this?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous,

Please refer to below:

There are a few known limitations to using Enterprise Gateways and dataflows:

- Each dataflow may use only one gateway. As such, all queries should be configured using the same gateway.

- Changing the gateway impact the entire dataflow.

- If several gateways are needed, the best practice is to build several dataflows (one for each gateway) and use the compute or entity reference capabilities to unify the data.

- Dataflows are only supported using enterprise gateways. Personal gateways will not be available for selection in the drop down lists and settings screens.

Community Support Team _ Jimmy Tao

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

We are using an enterprise gateway and just have one gateway for all dataflows.

Regards

Mike

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Mike,

Can you please share the refresh log (by downloading the CSV file from the refresh history)?

Please mail it to dataflow support DL: pbidf@microsoft.com

Thanks,

Galia

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Galia

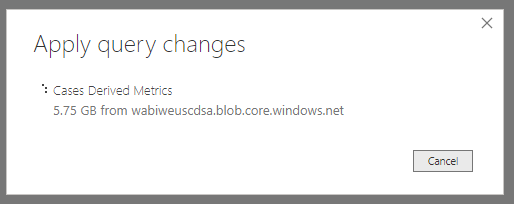

I don't get to the refresh stage as the dataflow times out when I am previewing the data when building the dataflow.

I am unable to save the dataflow.

Regards

Mike

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Mike,

Please send to our support email the screenshot with the failure you got, so we can investigate further.

Thanks,

Galia

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am also having a problem like this. Do we know any more about Time Out control?

I am trying to retrieve a portion of a large table - about 10 of 88MM rows. If I load that data into its own SQL table, the Dataflows priview loads instantly. But if I try to load from the correct table, DataFlows works at it for about 10 minutes and quits.

I wouldn't expect the query to complete in SSMS in that time. How can we get Dataflows preview to be a little more patient?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@RBuntingHow are you managing to download such huge dataset to your Desktop? I still dont understand why PowerBI Desktop needs to download the entire Dataflow into the desktop application. I am talking about 10s of GBs of data. Make no sense.

@v-yuta-msft

I tried to highlight some of the issues with Dataflow in y post here, but there is ablsutely no response ! My whole Org was couting on using Dataflow for enterprise level ETL.

https://community.powerbi.com/t5/Service/Dataflow-Dataset-NEED-more-Clarity/td-p/735330

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@AnonymousI think there's been some misunderstanding. I am loading about 10 million rows, but that's not anything like 10s of Gigs.

The problem in my case is that it simply takes time for SQL Server to pull those records out of the large table. If they are in a table by themselves they load easily. It's not a matter of size -- I just wish the Dataflow's time-out when loading the preview would be more generous, or be managed manually.

To avoid this problem, I build the M code in Desktop since it's an easier environment to work in. But I don't load any of that data into Desktop's model. I only want the code out of power query. Then I paste that into the Dataflow's advanced editor.

At this point, waiting for the preview would create a time-out error. So I don't wait. I just save and close, confident from my preview in desktop that I'm getting the data I need. Naturally, I'll do some QA on the dataflow once it's properly loaded.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Got it. So you are still in the first stage of frustation.

Once the dataflow is loaded, you will experience what I am going through! Having to load the complete data to desktop in my case its 54M rows! 🙂

Helpful resources

Power BI Dataviz World Championships

Vote for your favorite vizzies from the Power BI World Championship submissions!

Join our Community Sticker Challenge 2026

If you love stickers, then you will definitely want to check out our Community Sticker Challenge!

Power BI Monthly Update - January 2026

Check out the January 2026 Power BI update to learn about new features.

| User | Count |

|---|---|

| 17 | |

| 10 | |

| 9 | |

| 8 | |

| 7 |

| User | Count |

|---|---|

| 48 | |

| 40 | |

| 31 | |

| 27 | |

| 25 |