Dataflow Incremental Refresh Update Specific Partitions

I understand how a specific partition can be refreshed in a Power BI dataset using SSMS or Tabular Editor via the XMLA endpoint. Thanks @GuyInACube

My question is: how can I refresh a specific partition of a Power BI dataflow? The XMLA endpoint connection only shows the datasets, because these are SSAS Tabular models. Dataflows are not SSAS; they are objects in the data lake.

How can I refresh the partitions between 5 years and 14 days old without deactivating and re-activating the incremental load?

The possibility to refresh a single partition in incremental dataflows is indeed a necessity for many.

I haven't found a way to do it yet.

The product team should definitely add the feature.

I just added my vote to the corresponding idea: Microsoft Idea (powerbi.com)

Hi, @Daryl-Lynch-Bzy

Power BI dataflows support incremental refresh; it doesn't matter that they are not SSAS.

As long as these two conditions are met, incremental refresh can be used with a dataflow:

- Using incremental refresh in dataflows created in Power BI requires that the dataflow reside in a workspace in Premium capacity. Incremental refresh in Power Apps requires Power Apps Plan 2.

- In either Power BI or Power Apps, using incremental refresh requires that source data ingested into the dataflow have a DateTime field on which incremental refresh can filter.

More details are in the doc:

Using incremental refresh with dataflows - Power Query | Microsoft Docs

Have you tried it? Any errors?

Did I answer your question? Please mark my reply as solution. Thank you very much.

If not, please feel free to ask me.

Best Regards,

Community Support Team _ Janey

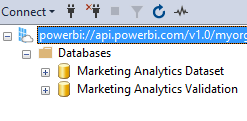

@v-janeyg-msft Yes, the incremental refresh is up and running, but you have not understood the question. I want to refresh some of the older partitions. In my situation, the refresh only updates records within the last 14 calendar days. I would like to update the partitions for 2021 to include additional data. If my incremental load were into a Power BI dataset, I could use the XMLA endpoint to refresh the older partitions. However, I need to update the partitions in a Power BI dataflow, and these do not show up in the XMLA endpoint.

In theory, I should be able to overwrite the old partitions with my update.

Hi, @Daryl-Lynch-Bzy

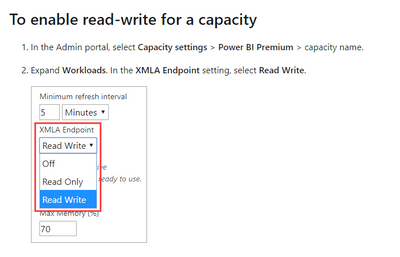

You can check it:

Can't you see the partition when you turn it on?

Did I answer your question? Please mark my reply as solution. Thank you very much.

If not, please feel free to ask me.

Best Regards,

Community Support Team _ Janey

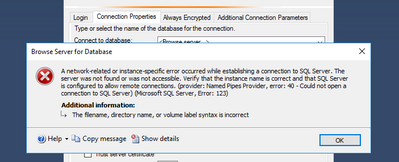

This is the error message using SQL Database connection:

And there are only two visible options for the Analysis Services connector:

Hi, @Daryl-Lynch-Bzy

In this doc:

incremental-refresh-implementation-details

More details:

What's the data source of your dataflow?

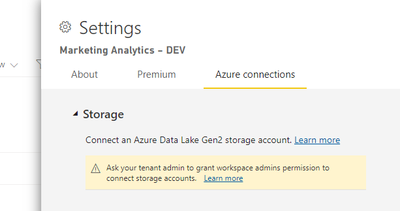

@v-janeyg-msft

It is another Dataflow in the same workspace. The JSON file is loading into a Standard Dataflow. The Incremental Dataflow is reading from this initial Dataflow. Are both the Standard and Incremental Dataflows not considered "Azure Data Lake Storage". Would the Workspace need to be configured to use Gen 2 storage?

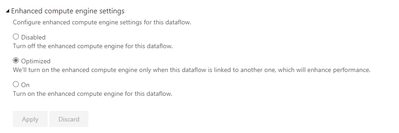

Or do both the dataflow need to be switched to "Enhanced Compute Engine" (I thought be default this would be on because the Dataflows are linked?):

The incrementally refreshed partitions are not visible in the service; you need external tools such as SSMS to see them. Your data source may not support this.

Incremental refresh for datasets and real-time data in Power BI - Power BI | Microsoft Docs

Best Regards,

Community Support Team _ Janey

Thanks for your help, @v-janeyg-msft. I think I will need to close this and leave it unsolved. Perhaps the documentation on the following page needs updating:

Using incremental refresh with dataflows - Power Query | Microsoft Docs

I believe the sentence "After XMLA-endpoints for Power BI Premium are available, the partition become visible." is true for datasets (because they are Analysis Services tabular databases), but it is not true for dataflows.

Dataflows are stored as JSON and CSV files in Power BI's Azure Data Lake storage. These can't be accessed using the XMLA endpoint because they are not Analysis Services objects.

Perhaps the documentation could be updated by @bensack, @DCtheGeek and @steindav to say that this is not possible, or to explain how it is possible.

Hi, @Daryl-Lynch-Bzy @Anonymous

Update: for now, the XMLA endpoint can only be used to connect to Power BI Premium datasets; dataflows cannot use it to refresh a specific partition. You need to either run a full refresh or reconfigure the incremental refresh policy so that it includes the year of data to be refreshed.
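To gauge the second option (reconfiguring the policy), a rough Python sketch can estimate how wide the refresh window would need to be to reach back to a given date. It assumes a rolling window that counts the current month (or day) as period 1; the function names and dates are hypothetical, and the service's own partition boundaries may differ, so treat the result as an estimate. Because every refresh reprocesses the whole refresh window, you would presumably narrow the policy again after the reload.

```python
from datetime import date


def months_needed(today: date, oldest: date) -> int:
    """Smallest N such that a 'refresh rows in the last N months' window,
    counting the current month as month 1, reaches back to oldest's month.
    Assumption: the window is calendar-month based and includes the current month."""
    if oldest > today:
        raise ValueError("oldest must not be after today")
    return (today.year - oldest.year) * 12 + (today.month - oldest.month) + 1


def days_needed(today: date, oldest: date) -> int:
    """Same idea for a day-based window: today counts as day 1."""
    if oldest > today:
        raise ValueError("oldest must not be after today")
    return (today - oldest).days + 1


if __name__ == "__main__":
    today = date(2022, 3, 15)       # hypothetical run date
    start_2021 = date(2021, 1, 1)   # oldest data that should be reloaded
    print(months_needed(today, start_2021))  # 15 (Jan 2021 .. Mar 2022)
    print(days_needed(today, start_2021))    # 439
```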

Best Regards,

Community Support Team _ Janey

Hi, @Daryl-Lynch-Bzy

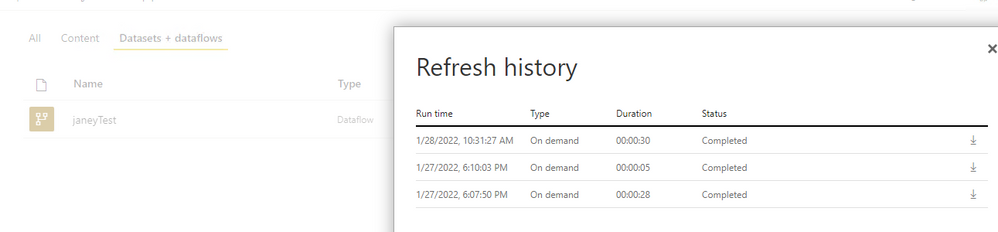

I only have a SQL database as a data source. After I set up incremental refresh on the dataflow, I can check the refresh history, download the results, and see the partition names in Excel. As for what can be done with the partitions, I didn't find any settings in the service.

Best Regards,

Community Support Team _ Janey

Thanks for the link, @v-janeyg-msft

The incremental dataflow sits between an initial dataflow and the final datasets. The initial dataflow imports a JSON file and transforms it into a couple of tables, which are limited to 30 days of activity. The incremental dataflow is working as designed: each day it reads the last 30 days of activity but only updates the last 14 days. My incremental load contains data going back to Oct 2021. I was hoping to have the flexibility to update some of the old partitions, to add, remove or replace them.

Could I have a link to the documentation page please?

No, I can't see the dataflow in the XMLA endpoint. I only see the datasets.

Here is a screenshot of the capacities settings:

Are the dataflows hidden from the list, but can I still connect using the right "Database" or "Initial Catalog" name?

Do I connect using Analysis Services or SQL Database or a different one?

Hello,

I'm also very interested in this topic.

Given a dataflow with incremental refresh set up (keeping the last 12 months of data and refreshing the last 2 months), so that every execution refreshes only the current and previous months, I would like a way to refresh only one partition from 4 months ago.
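The behaviour described above can be checked with a small Python sketch that lists the monthly partitions kept and refreshed by such a policy. It assumes 12 stored months and 2 refreshed months, with the current month counted as month 1 (matching the description above); `month_start` and `policy_partitions` are hypothetical helpers, not part of any Power BI API.

```python
from datetime import date


def month_start(d: date, months_back: int) -> date:
    """First day of the month that is months_back months before d's month."""
    index = d.year * 12 + (d.month - 1) - months_back
    return date(index // 12, index % 12 + 1, 1)


def policy_partitions(today: date, store_months: int, refresh_months: int):
    """Return (stored, refreshed) lists of monthly partition start dates,
    newest first. Assumption: the current month counts as month 1 in both windows."""
    stored = [month_start(today, k) for k in range(store_months)]
    refreshed = stored[:refresh_months]
    return stored, refreshed


if __name__ == "__main__":
    today = date(2022, 6, 10)  # hypothetical run date
    stored, refreshed = policy_partitions(today, store_months=12, refresh_months=2)
    target = month_start(today, 4)  # the partition from 4 months ago
    print([d.isoformat() for d in refreshed])  # ['2022-06-01', '2022-05-01']
    print(target in stored, target in refreshed)  # True False
```

Under these assumptions the partition from 4 months ago is stored but never falls inside the refresh window, so an ordinary incremental run will not reload it.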

For datasets this is easy:

- Datasets are stored in an underlying Analysis Services model, which is accessible from SSMS (SQL Server Management Studio) through the workspace XMLA endpoint.

- From SSMS, a refresh of a single partition can be executed, so you are in full control of which partition you refresh. In my example, I could manually refresh my partition from 4 months ago.
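On the dataset side, the single-partition refresh described above is typically issued as a TMSL `refresh` command from an XMLA query window in SSMS. The Python below only assembles that JSON so the structure is visible; the database, table and partition names are hypothetical (copy the real ones from SSMS or Tabular Editor). As the thread notes, this works for datasets only, not dataflows.

```python
import json

# TMSL refresh types accepted by the 'refresh' command.
TMSL_REFRESH_TYPES = {
    "full", "clearValues", "calculate", "dataOnly",
    "automatic", "add", "defragment",
}


def tmsl_partition_refresh(database: str, table: str, partition: str,
                           refresh_type: str = "full") -> str:
    """Build a TMSL 'refresh' command that targets one partition of one table."""
    if refresh_type not in TMSL_REFRESH_TYPES:
        raise ValueError(f"unknown TMSL refresh type: {refresh_type}")
    command = {
        "refresh": {
            "type": refresh_type,
            "objects": [
                {"database": database, "table": table, "partition": partition}
            ],
        }
    }
    return json.dumps(command, indent=2)


if __name__ == "__main__":
    # Hypothetical names; paste the printed JSON into an SSMS XMLA query window.
    print(tmsl_partition_refresh("Sales Dataset", "FactActivity", "2022Q102"))
```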

For dataflows:

- This is a completely different story. Dataflows are stored in ADLS, not in Analysis Services, so they cannot be reached from SSMS through an Analysis Services connection. The approach used for datasets does not apply to dataflows.

- The question is: is there any way to refresh a specific dataflow partition? (I know how to do a full refresh, and how to run an incremental refresh of the last n partitions. The question is how to process only one partition that is outside the incremental refresh range of the last n partitions.)

Many thanks

I believe this is a required feature for many.

In my case, I have a huge table loaded into my dataflow and would like to process the partitions separately when I need to reprocess all of them.

The only option now is loading all of the partitions sequentially and then incrementally after that.

Can someone confirm that there is no way of processing dataflow partitions separately?

Hey Solvisig,

Can you please elaborate in detail on the only option available? I am stuck in the same situation. I have a dataflow with 0.8 million rows. After setting up incremental refresh, when I refresh the dataflow it times out after 3 hours. I'm thinking of doing the partitioning in SQL Server and then bringing the table into the dataflow. Would there then be any way to refresh the partitions one by one? If yes, please tell me. Any other approaches would also be really helpful. Thanks in advance.

The only option is the refresh button, which refreshes all the partitions sequentially for the initial load and then incrementally after that. There is currently no way to control your partitions when using dataflows. That's why I don't use dataflows for many of my workloads; I use standard datasets instead, where I can easily manage the partitions in Tabular Editor and other tools.

However, with Fabric now being the new hot thing, this must be on the development backlog.

This is definitely a required feature. I need to move a dataset currently working under incremental refresh and managed through XMLA endpoint to a dataflow, so we can improve the refresh performance by doing all of the transformation within dataflows. I cannot currently see a way to manage the partitioning, beyond the initial setup of the incremental refresh.
