FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

View all the Fabric Data Days sessions on demand. View schedule

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: determine to which group a value in a row of a...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

determine to which group a value in a row of a query belongs to

Hello,

I am stuck with what I thought would be a simple step... I cannot figure it out!

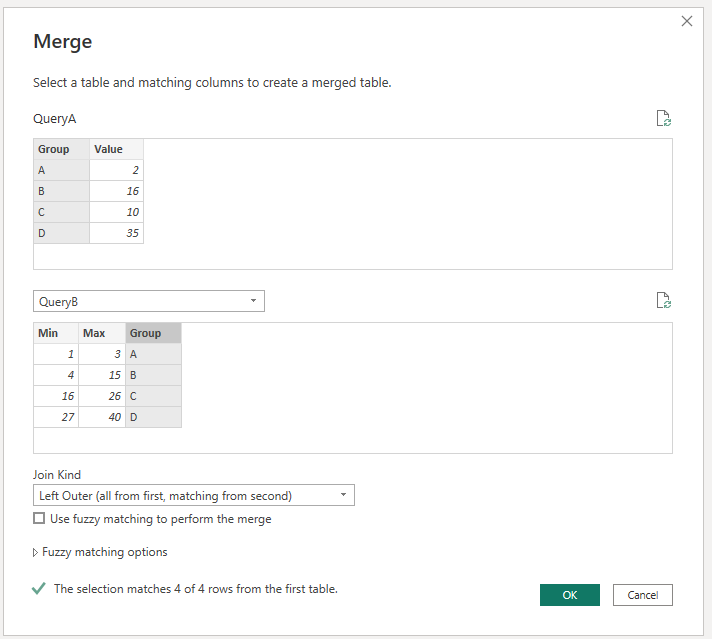

I have a query (QueryA) with a column containing numbers. I have another query (QueryB) with 3 columns: min/max/group.

I need to attribute a Group to the number in QueryA, checking that it is higher than what's in column "Min" and smaller than what's in Column "Max" for the same row, and then looking up the corresponding Group Name.

| Min | Max | Group |

| 1 | 3 | A |

| 4 | 15 | B |

| 16 | 26 | C |

| 27 | 40 | D |

Can you please help me in how to do this?

Thanks!

Kind regards

Valeria

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Okay. If QueryA rows are going to stay below maybe 500k, then I think the DAX solution would be fastest/simplest all round:

You'll need to apply both QueryA and QueryB to you data model, then add a calculated column to QueryA like this:

..group =

CALCULATE(

VAR __numberToCheck = VALUES(QueryA[NumberColumn])

RETURN

MAXX(

FILTER(

QueryB,

QueryB[Min] <= __numberToCheck

&& QueryB[Max] >= __numberToCheck

),

QueryB[Group]

)

)

If your QueryA gets to huge numbers of rows, or your QueryB gets to huge numbers of GROUPS you may find this gives you performance issues at runtime (as a calculated column is materialised into memory) in which case I would switch to a segregated Dataflow solution, but I think this will be fine.

Let me know how you get on.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ValeriaBreve ,

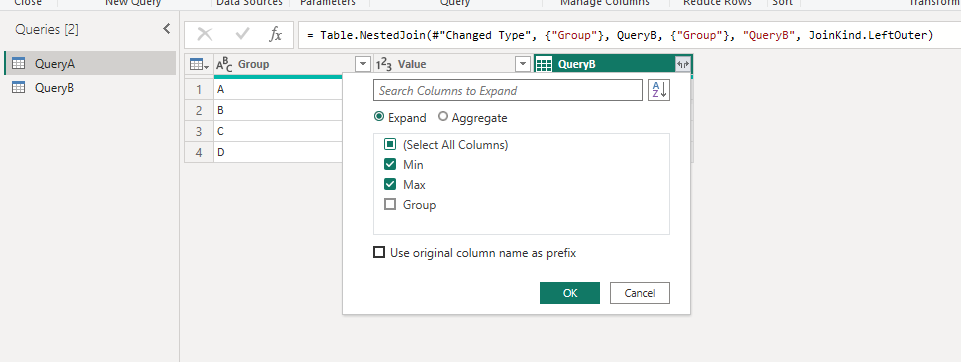

You can merge QueryA with QueryB.

Now you got the Min and Max in QueryA.

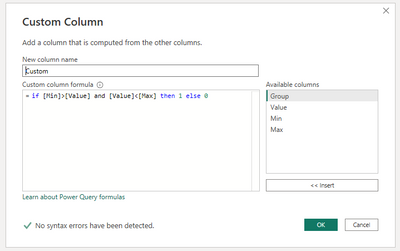

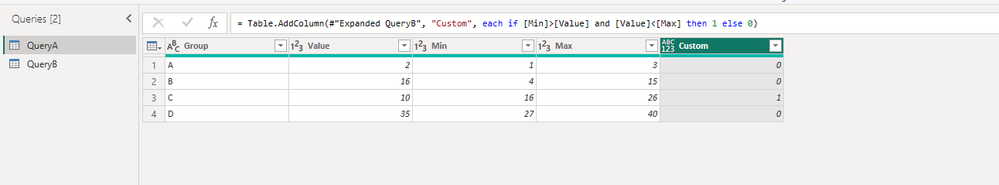

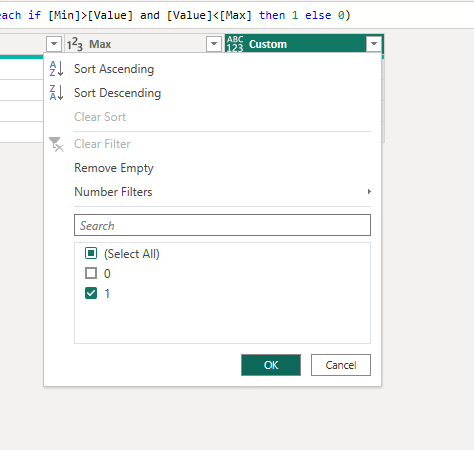

Add a custom column for filtering.

The custom column contains 1 is is higher than what's in column "Min" and smaller than what's in Column "Max" for the same row.

Filter them out according to your needs.

Best Regards,

Stephen Tao

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello, thanks, but I don't have anything to merge the query onto - the first query only contains numbers, not the groups... I am trying to retrieve the groups...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ValeriaBreve ,

For the sake of simplicity, I'd probably just expand QueryB into an explicit list of the numbers in each group, disbale load of QueryB, then merge onto QueryA using the number columns now in each.

To expand QueryB:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUTIGYkelWJ1oJRMgy9AUSDiBuYZmQKYRiHAG843MgUwTAyDhohQbCwA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Min = _t, Max = _t, Group = _t]),

chgTypes = Table.TransformColumnTypes(Source,{{"Min", Int64.Type}, {"Max", Int64.Type}, {"Group", type text}}),

addGroupList = Table.AddColumn(chgTypes, "groupList", each {[Min]..[Max]}),

expandGroupList = Table.ExpandListColumn(addGroupList, "groupList")

in

expandGroupListBasically create a new column '= {[Min]..[Max]}', then expand this nested list column to new rows.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello! This works, thank you 🙂 However, I am wondering if it can give any performance issues - I made it short for the example here, but numbers in my data go up to over 60 million from zero, so that's a lot of rows...

So I am not sure of there is another approach to minimize execution time.

Also if I may - an extra question - the last number of my table in QueryB, the "max" of the last group, is taken as the max of the number in QueryA (List.Max(...)).

That was my idea to make sure I did not have any extra rows in there, but at the same time when I try to lookup the group in QueryA, I get a circular reference error. Is there a way this could be avoided?

Otherwise I will just give a static max number in QueryB which will be very high, and disable the refresh and load.

Thanks!!!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

My example was based on simplicity, not necessarily speed, so the numbers going up to 60M is definitely a relevant consideration.

There's a few questions here to see if we can go faster, but this may be at the cost of increased complexity:

- Is your model Direct Query/Live or Import? (I assume Import as the list generator I suggested would have broken DQ 🙂 )

- If Import, how frequently do QueryA and QueryB need to refresh? NOT the report, just these queries.

- What are the data sources for each of your queries, A & B? SQL Server, Excel, SharePoint etc.?

- If SQL, do you have the facility to write Views on the server?

- How many distinct GROUPS are there in QueryB?

- Does this HAVE to be done in Power Query, or are you also open to DAX suggestions? (This scenario is similar to handling SCD tables which I find fastest to manage in DAX).

Regarding the MAX value, I'm not sure you need it. Depending on your answers to the above, we may just be able to leave it null and make the last condition 'if greater than <LastGroup>[MIN] then <LastGroup>' if that makes sense?

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Pete!

Thanks for the extensive reply 🙂 Here are the answers:

- Is your model Direct Query/Live or Import? --> IMPORT

- If Import, how frequently do QueryA and QueryB need to refresh? NOT the report, just these queries. --> Both monthly (well so weekly from the service)

- What are the data sources for each of your queries, A & B? SQL Server, Excel, SharePoint etc.?

Both QueryA and QueryB are Excels

- How many distinct GROUPS are there in QueryB? Just a handful - 4 for now

- Does this HAVE to be done in Power Query, or are you also open to DAX suggestions? (This scenario is similar to handling SCD tables which I find fastest to manage in DAX). I am open to DAX suggestions, my DAX is not to where I would like it to be though so it might need some thorough explanation 😁

Thanks again!!!!!

Kind regards

Valeria

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Okay. If QueryA rows are going to stay below maybe 500k, then I think the DAX solution would be fastest/simplest all round:

You'll need to apply both QueryA and QueryB to you data model, then add a calculated column to QueryA like this:

..group =

CALCULATE(

VAR __numberToCheck = VALUES(QueryA[NumberColumn])

RETURN

MAXX(

FILTER(

QueryB,

QueryB[Min] <= __numberToCheck

&& QueryB[Max] >= __numberToCheck

),

QueryB[Group]

)

)

If your QueryA gets to huge numbers of rows, or your QueryB gets to huge numbers of GROUPS you may find this gives you performance issues at runtime (as a calculated column is materialised into memory) in which case I would switch to a segregated Dataflow solution, but I think this will be fine.

Let me know how you get on.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Pete, thanks!

I will test it 🙂

In the meantime you gave me an idea to test in powerQuery as well with your DAX formula...

So what I have done is I have added a calculated column, and then as a formula (where [Number] is the column from QueryA):

= if [Number]>=QueryB[Min]{0} and [Number]<QueryB[Max]{0} then QueryB[Group]{0} else ......

//and the same for 1,2,... until the last Row of the table (that I calculated as Table.RowCount(QueryB)).

This works well but I it is static in the sense that I don't know how to loop through the rows so I need to literally specify {0},{1},... in the row indicator.

Is there a way to have PowerQuery perform a loop such as

For i= each Row from 0 to LastRow -1 then

if [Number]>=QueryB[Min]{i} and [Number]<QueryB[Max]{i} then QueryB[Group]{i}

Next i

if Row=LastRow then if [Number]>=QueryB[Min]{LastRow} then QueryB[Group]{LastRow}

Thanks!

This issue seemed very complicated to me and now I have 2 options to test 🙂

Kind regards

Valeria

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You may be able to do something like this with List.Generate or a custom function, but I think you're going backwards on both performance and simplicity here. All things being equal (row counts etc.) I think the DAX solution will be superior in both regards.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Pete! Just tested the DAX solution. Works like a charm, super fast 🙂

I have a few other tables with the same structure, so I will re-use the same approach. Thanks!!!!!!!!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Cool! Glad it's worked well for you. 👍

Yes, as I mentioned previously, that DAX structure is also the simplest and fastest way to deal with SCD Type 2 tables that I've found. I normally avoid creating DAX calculated columns but, for this type of use, it's the best method without a doubt 🙂

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

Helpful resources

Power BI Monthly Update - November 2025

Check out the November 2025 Power BI update to learn about new features.

Fabric Data Days

Advance your Data & AI career with 50 days of live learning, contests, hands-on challenges, study groups & certifications and more!

| User | Count |

|---|---|

| 11 | |

| 8 | |

| 6 | |

| 5 | |

| 3 |