FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Merge rows with matching cell and consecutive ...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Merge rows with matching cell and consecutive dates

I need help with merging rows that have the same job title and consecutive dates.

Example:

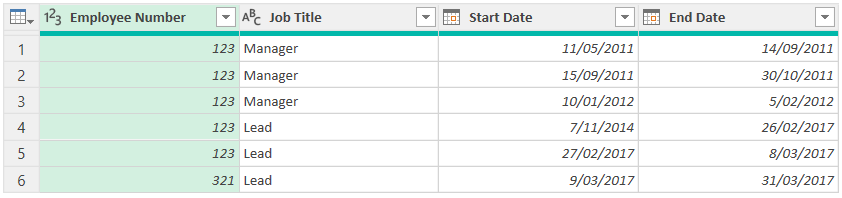

| Employee Number | Job Title | Start Date | End Date |

| 123 | Manager | May 11, 2011 | Septemer 14, 2011 |

| 123 | Manager | September 15, 2011 | October 30, 2011 |

| 123 | Lead | November 7, 2014 | February 26, 2017 |

I want to transform this table to become this table:

| Employee Number | Job Title | Start Date | End Date |

| 123 | Manager | May 11, 2011 | October 30, 2011 |

| 123 | Lead | November 7, 2014 | February 26, 2017 |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

here a simply way just use a group by function: copy this code in a blank query for you to see a example I did.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjQyVtJRAqLcxLzE9NQiIMtQ31DfyAAsDmRAmLE6OJQaI5QaGmBRW1yapIBQbwQzT0fJGKY1NhYA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [employee = _t, number = _t, #"job title" = _t, date = _t, #"end date" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"employee", Int64.Type}, {"number", type text}, {"job title", type text}, {"date", type date}, {"end date", type date}}),

#"Changed Type with Locale" = Table.TransformColumnTypes(#"Changed Type", {{"date", type date}}, "en-US"),

#"Changed Type with Locale1" = Table.TransformColumnTypes(#"Changed Type with Locale", {{"end date", type date}}, "en-US"),

#"Grouped Rows" = Table.Group(#"Changed Type with Locale1", {"employee", "job title"}, {{"Date", each List.Min([date]), type nullable date}, {"End date", each List.Max([end date]), type nullable date}})

in

#"Grouped Rows"

Did I answer your question? Mark my post as a solution! / Did it help? Give some Kudos!

Proud to be a Super User!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

A generic PQ solution

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjQyVtJR8k3MS0xPLQKzKhUMDXUUjAwMDYHc4NSCktTcpNQiBUMTqGCsDqYuJGWmcL3+ySX5ICFjAzw6vRLzShOLgHZCFBkBhdxSk4rAYqZQIYQ+n9TEFCDll18Gsc0crMIEWZORGVjMHFMXTImROVQFyB1FyRkKFkg6jI0METog0pZoyo0NYepjAQ==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [#"Employee Number" = _t, #"Job Title" = _t, #"Start Date" = _t, #"End Date" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Employee Number", Int64.Type}, {"Job Title", type text}, {"Start Date", type date}, {"End Date", type date}}),

#"Added Index" = Table.AddIndexColumn(#"Changed Type", "Index", 0, 1, Int64.Type),

Grouped =

let rows = Table.ToRecords(#"Added Index")

in Table.Group(

#"Added Index",

Table.ColumnNames(#"Added Index"),

{"grp", each [Start = List.Min([Start Date]), End = List.Max([End Date])]},

0,

(x,y) => Byte.From(

x[Employee Number]<>y[Employee Number]

or

y[Start Date] - #duration(1,0,0,0) <> rows{y[Index]-1}[End Date])

),

#"Expanded grp" = Table.ExpandRecordColumn(Table.RemoveColumns(Grouped, {"Start Date","End Date","Index"}), "grp", {"Start", "End"})

in

#"Expanded grp"

From

to

Expertise = List.Accumulate( {Days as from Today}, {Skills and Knowledge}, (Current, Everyday) => Current & Day.LearnAndPractise(Everyday) ) |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

try a more direct approach like @Mahesh0016 or mine, looks like yours works but that will have a performance impact in the refresh query when data gets bigger and bigger, you can achieve the same using group by only.

Did I answer your question? Mark my post as a solution! / Did it help? Give some Kudos!

Proud to be a Super User!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@brandonab

Create Below Two Measure StartDate And EndDate

StartDate =

CALCULATE (

MIN ( 'Table X'[Start Date] ),

ALLEXCEPT ( 'Table X', 'Table X'[Job Title] )

)

########################################################

EndDate =

CALCULATE (

MAX ( 'Table X'[End Date] ),

ALLEXCEPT ( 'Table X', 'Table X'[Job Title] )

)

Other Way Is Below

>> Go to Power Query >> Select "Employ Number" and "Job Title" column >> Home Tab in Group By >> in group by Operation select MIN and Column in Start Date then add more Aggregation in Operation select MAX and Column in End Date >> Click On Ok >> Get Your OutPut

Here Is Code paste your power query blank query

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjQyVtJR8k3MS0xPLQKzKhUMDXUUjAwMDYHc4NSCktTcpNQiBUMTqGCsDqYuJGWmcL3+ySX5ICFjAwydPqmJKUDKL78MosscrMIEKOSWmlRUmlhUqWBkBhYzV4qNBQA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [#"Employee Number" = _t, #"Job Title" = _t, #"Start Date" = _t, #"End Date" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Employee Number", Int64.Type}, {"Job Title", type text}, {"Start Date", type date}, {"End Date", type date}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"Employee Number", "Job Title"}, {{"StartDate", each List.Min([Start Date]), type nullable date}, {"EndDate", each List.Max([End Date]), type nullable date}})

in

#"Grouped Rows"

@brandonab THANK YOU!!

Helpful resources

Power BI Dataviz World Championships

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now!