FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Get Fabric certified for FREE! Don't miss your chance! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Limit Dimension Data based on Fact Table in Po...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Limit Dimension Data based on Fact Table in Power Query

There are 2 tables:

1. Dimension Table D - EMPNO (25,000,000 rows) sourced from Oracle DB

2. Fact Table F - Excel source

join on D.EMPNO=F.EMPNO using Merge queries in Power Query.

The performance is extremely slow using Import/DirectQuery mode. I tried to limit the rows in Dimension table by following the below steps:

1. Buffered List to extract unique list of EMPNO in F - EmpList

Emplist = let

Source = List.Distinct(F[EMPNO]),

#"Buffered List" = List.Buffer(Source)

in

#"Buffered List"

2. Filter Dimension D i.e. Table.SelectRows(#"Changed Type",each List.Contains(EmpList, [EMPNO]))

It works but it is extremely slow when "Enable Load" is enabled for Dimension D. Although the Step (2) returns few rows(129 rows), when I apply the changes in Power Query, it loads all the rows for Dimension D.

I was hoping to get some guidance if there is anything wrong or can be done differently.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok. Your current query setup won't allow query folding.

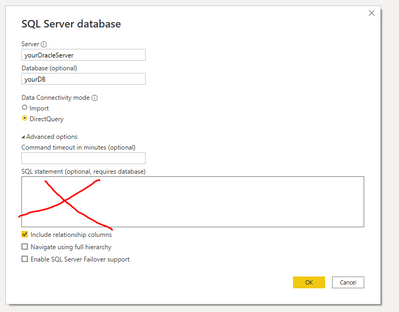

Can you try connecting without using an explicit SQL statement please?

Connect to the DB again, and leave the SQL statement blank:

Just hit OK, then select your dim_employee table from the table list and import.

Assuming this goes as planned, select the [EMPNO] column in your new dimension table and filter just one value. This should be fast to implement and sets us up a new step with the correct structure.

Edit that new step in the formula bar, so it uses our streaming list filter, something like this:

= Table.SelectRows(

previousStepName,

each List.Contains( List.Buffer( factTableName[EMPNO] ), [EMPNO] )

)

This setup should allow PQ to stream the [EMPNO] values from your Excel source into the SQL native query sent to the SQL source for your dim_employee table.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

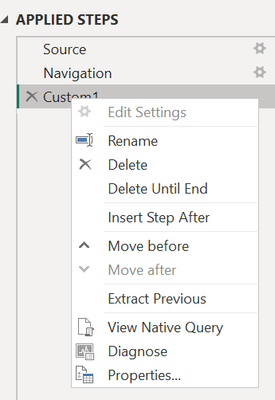

If you right-click your #"Filtered Rows" step, is 'Native Query' now selectable, or is it still greyed-out?

If it's still greyed out, try changing one of the column data types to the same type, but not a text column. For example, change a date type column to date type, or a decimal type column to decimal. This is purely to force PQ to re-evaluate the query and hopefully force it to generate a native query to source.

If native query IS selectable, then you're probably at the limit of optimisation. The Oracle Data Access Client (ODAC) requirement isn't the best in this regard.

Pete

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I had the same issue. The solutions below didn't work for me, but this one did. It took me hours to get it to work. I'm unsure why the other solutions didn't work and this one did, as it looks very similar to the other solutions.

= let

DistinctKey = List.Buffer( List.Distinct(FctTable[DimKey])),

DimFiltered = Table.SelectRows(dbo_DimTable, each List.Contains(DistinctKey, [DimKey]))

in DimFiltered

It's really important that you can see "View Native Query" in the screenshot below, otherwise it will be doing the processing in PowerBI rather than doing the query on the server side. The query will work much faster if the query is running on the server. Power BI will attempt to put the list into an "IN" statement in SQL Server; this works fine if you have less than 1000 rows you want to filter; if you want to filter many more rows than this, you might run into some issues. Say if you have 500,000 rows in your dimension, but you only need 200 rows, this method would work, but if you want to filter to 100,000 rows, this method will not work well.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This worked well for my situation. Thank you

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It should be super fast. I use this method all of the time against SQL Server and it folds. Using List.Contains To Filter Dimension Tables — ehansalytics

Are you getting an IN operator in the folded statement to your server? What is the backend?

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hi @edhans

My Fact Table is excel based source and Dimension is Oracle table.

May be it works fine for Relational data sources but it is extremely slow. Can you share a sample file where you have implemented the above , just in case I am missing anything?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I cannot provide a sample file because it was connected to my instance of SQL Server. If you install SQL Server and the Contoso or AdventureWorks sample databases, you can test the code in my blog post I linked to.

On the Oracle issue, I have seen VERY SLOW query performance with Power BI and Oracle, even when it folds, and I 100% blame the horrible Oracle driver Power BI uses. Clearly Power BI can do this as it works great with SQL and Snowflake databases, and clearly Oracle is a good RDB, but Power BI+Oracle is a substandard mix.

So much so I have a client that used to use Oracle as their datawarehouse and are now moving everything to SnowFlake because it offers superior performance with Power BI.

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

The first thing you can try is to shortcut your buffered list filter:

Remove your 'Buffered List' query then change the filter step in your dimension table to something like this:

Table.SelectRows(

#"Changed Type",

each List.Contains(List.Buffer(List.Distinct(F[EMPNO])), [EMPNO])

)

To be honest, I can't imagine this will speed things a up a whole lot, but worth a try.

The next thing you can do is to delete the merge and just send both tables to the data model. Relate them on D[EMPNO] = F[EMPNO]. It's likely to be significantly quicker than doing an unfolded Power Query merge.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @BA_Pete

Thanks for your response.

I have tried it but doesn't make much difference:

Table.SelectRows(

#"Changed Type",

each List.Contains(List.Buffer(List.Distinct(F[EMPNO])), [EMPNO])

)As the fact data is excel file based, I cannot join them in the database at the moment.

As a workaround, I have used DirectQuery mode however, the report is slow.

Any other ideas please?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

Did you try removing the merge and relating in the data model?

I think the fastest setup you'll get to in this scenario would be to use the buffered list filter on the dimension table in PQ, then relate both in the model.

You could also try filtering your dimension table in other ways in PQ before loading. To have 25M employee numbers in a dimension table I have to assume it goes back many years. Is it feasible to filter to maybe only employees active in the last few years? Maybe filter it for certain departments and create separate reports for each department?

This PQ filter will also be included in visual queries sent to the source when using Direct Query.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What didn't work? Has it not sped up the process, or did you get an error or something?

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @BA_Pete

The report works fine based on the established relationship.

But the filter the Dim table based on Buffered list and save the changes steps takes a long time.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

Ok. My guess is that you're applying a query step to your dimension table that is breaking query folding or causing unnecesary processing. Using the method that we have, Power Query should be streaming the F[EMPNO] values to your SQL server in a native query, and should be really fast. Can you right-click the final step in your dimension query and check whether the 'Native Query' option is lit up or greyed-out please?

If it's greyed-out, select your dimension table as we have it set up now, go to the Home tab, select Advanced Editor and copy all the code in there. Then paste the whole lot into a code window here using this button:

Before you close the code window, please just overtype any sensitive connection string values (file paths, server paths etc.) with 'XXX', but keep the code structure itself, as this may be important.

I should be able to see if there's anything breaking query folding or causing unnecessary processing time that we can work around.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @BA_Pete

Below are the steps I have done:

DIM_EMPLOYEE=

let

Source = Oracle.Database("XXX", [HierarchicalNavigation=true, Query="SELECT * FROM XX.DIM_EMPLOYEE"])

in

Source

Create a reference table as below:

Filtered_Emp=

Filtered_Emp= let

Source = DIM_EMPLOYEE,

#"Filtered Rows" = Table.SelectRows(Source, each List.Contains(List.Buffer(EmpList),[CUSTOMER_NUMBER]))

in

#"Filtered Rows"

The native query is greyed out.

I have disabled load for everything except the F and Filtered_Emp. When I hit apply in Power Query, it tries to load all the 2 million records athough the Filtered_Emp has 200 records only.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok. Your current query setup won't allow query folding.

Can you try connecting without using an explicit SQL statement please?

Connect to the DB again, and leave the SQL statement blank:

Just hit OK, then select your dim_employee table from the table list and import.

Assuming this goes as planned, select the [EMPNO] column in your new dimension table and filter just one value. This should be fast to implement and sets us up a new step with the correct structure.

Edit that new step in the formula bar, so it uses our streaming list filter, something like this:

= Table.SelectRows(

previousStepName,

each List.Contains( List.Buffer( factTableName[EMPNO] ), [EMPNO] )

)

This setup should allow PQ to stream the [EMPNO] values from your Excel source into the SQL native query sent to the SQL source for your dim_employee table.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @BA_Pete

I followed the steps that you suggested but still no change in the performance.

let

Source = Oracle.Database("XXXX", [HierarchicalNavigation=true]),

XX = Source{[Schema="XX"]}[Data],

DIM_EMPLOYEE = XX{[Name="DIM_EMPLOYEE"]}[Data],

#"Filtered Rows" = Table.SelectRows(DIM_EMPLOYEE, each List.Contains(List.Buffer(Fact[EMPNO]),[EMPNO]))

in

#"Filtered Rows"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

If you right-click your #"Filtered Rows" step, is 'Native Query' now selectable, or is it still greyed-out?

If it's still greyed out, try changing one of the column data types to the same type, but not a text column. For example, change a date type column to date type, or a decimal type column to decimal. This is purely to force PQ to re-evaluate the query and hopefully force it to generate a native query to source.

If native query IS selectable, then you're probably at the limit of optimisation. The Oracle Data Access Client (ODAC) requirement isn't the best in this regard.

Pete

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @BA_Pete

I tried to perform the suggested steps from scratch and when I am applying the filter to limit the rows in the dimension, it says"This stepsresults in a query that is not supported in DirectQuery mode".

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

You shouldn't need to use Direct Query mode any more.

I believe you only wanted to use DQ due to the large number of rows in your dimension table. If we can get this method to fold to the source, it will work very quickly in Import mode as you will only actually be importing the number of rows that correlate to to values in your fact table (about 126 IIRC).

Delete your DQ dimension table in Power Query, then set it up in exactly the same way again using Import mode. Then check to see if 'Native Query' is lit up on your final step or not.

Pete

Now accepting Kudos! If my post helped you, why not give it a thumbs-up?

Proud to be a Datanaut!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @BA_Pete

It worked 🙂 But I also noticed that the moment I add any steps to the Dimension i.e. rename column/change datatype, the Native query option is greyed out and it started loading the data again. I will probably add a note in PQ not to add any steps in future else it might stop working.

Thank you so much @BA_Pete for the solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @BA_Pete

It worked 🙂 But I also noticed that the moment I add any steps to the Dimension i.e. rename column/change datatype, the Native query option is greyed out and it started loading the data again. I will probably add a note in PQ not to add any steps in future else it might stop working.

Thank you so much @BA_Pete for the solution.

Helpful resources

Join our Fabric User Panel

Share feedback directly with Fabric product managers, participate in targeted research studies and influence the Fabric roadmap.

Power BI Monthly Update - January 2026

Check out the January 2026 Power BI update to learn about new features.

| User | Count |

|---|---|

| 12 | |

| 11 | |

| 10 | |

| 6 | |

| 5 |