Join the Fabric User Panel to shape the future of Fabric.

Share feedback directly with Fabric product managers, participate in targeted research studies and influence the Fabric roadmap.

Sign up now- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Get Fabric certified for FREE! Don't miss your chance! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Data key - value

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Data key - value

Hi Experts

I just started, so hold my hand please. I have 2-3 questions

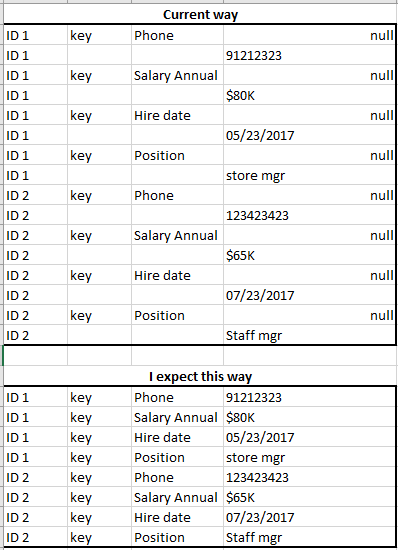

I got a straight forward data, but **bleep** for each ID, in alternate rows are data key and data values

This is what I did, I pivoted, merged and split now what I have is following columns

For each 1. ID(Repeated n times in rows), 2. Every second row Key (with alternate blank rows) 3. Name of the Key 4. Value with Every alternate row (Null, Null in the row corresponding to Key name, and in next row Value).

Please need help to move value column to move up in place of Null for each ID ..

So I have 3 coloumns

ID , Key name, Key value as attached in picture.

as shown in picture ( I will delete the key column).

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @acerNZ

check out this solution.

it applies a replace-value to be sure empty strings are replaced with null. Then a Fill-down and a Fill-up is applied. The table is alternated, to keep every second row only

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45W8nQxVNJRCsjIz0sF0kqxOjAhIDIyBAJjQyTB4MScxKJKhcS8vNLEHAz1piamUAEjTDONIGoswABJDIeRUOVmBgZKsbEA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [ID = _t, Type = _t, Value = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"ID", type text}, {"Type", type text}, {"Value", Int64.Type}}),

#"Replaced Value" = Table.ReplaceValue(#"Changed Type","",null,Replacer.ReplaceValue,{"Type", "Value"}),

#"Filled Down" = Table.FillDown(#"Replaced Value",{"Type"}),

#"Filled Up" = Table.FillUp(#"Filled Down",{"Value"}),

#"Removed Alternate Rows" = Table.AlternateRows(#"Filled Up",1,1,1)

in

#"Removed Alternate Rows"

Copy paste this code to the advanced editor in a new blank query to see how the solution works.

If this post helps or solves your problem, please mark it as solution (to help other users find useful content and to acknowledge the work of users that helped you)

Kudoes are nice too

Have fun

Jimmy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Jimmy801 Oops my message did not go through.

It worked as charm, on the test file attached. But maybe my mistake, I did not explain it well

My actual data has for each ID, several hundreds of key and values in the next row, next coloumn and pattern is unknown.

I googled and found this https://youtu.be/V_ULyeHNJFY but this too will not work as for my problem

For each ID ( for each data key, the value is data key [Coloumn +1, Row+1])

I am not sure, how to achieve this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @acerNZ

check out this solution.

it applies a replace-value to be sure empty strings are replaced with null. Then a Fill-down and a Fill-up is applied. The table is alternated, to keep every second row only

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45W8nQxVNJRCsjIz0sF0kqxOjAhIDIyBAJjQyTB4MScxKJKhcS8vNLEHAz1piamUAEjTDONIGoswABJDIeRUOVmBgZKsbEA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [ID = _t, Type = _t, Value = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"ID", type text}, {"Type", type text}, {"Value", Int64.Type}}),

#"Replaced Value" = Table.ReplaceValue(#"Changed Type","",null,Replacer.ReplaceValue,{"Type", "Value"}),

#"Filled Down" = Table.FillDown(#"Replaced Value",{"Type"}),

#"Filled Up" = Table.FillUp(#"Filled Down",{"Value"}),

#"Removed Alternate Rows" = Table.AlternateRows(#"Filled Up",1,1,1)

in

#"Removed Alternate Rows"

Copy paste this code to the advanced editor in a new blank query to see how the solution works.

If this post helps or solves your problem, please mark it as solution (to help other users find useful content and to acknowledge the work of users that helped you)

Kudoes are nice too

Have fun

Jimmy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Jimmy801 Oops my message did not go through.

It worked as charm, on the test file attached. But maybe my mistake, I did not explain it well

My actual data has for each ID, several hundreds of key and values in the next row, next coloumn and pattern is unknown.

I googled and found this https://youtu.be/V_ULyeHNJFY but this too will not work as for my problem

For each ID ( for each data key, the value is data key [Coloumn +1, Row+1])

I am not sure, how to achieve this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello

But this should have no impact how many keys you have for every id as one dataset is distributed on 2 rows and with fill up and down and alternating through the table should always work. The result should be as you were posting in your first post.

Br

Jimmy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @acerNZ

Can you post your original table in text format, i.e. just do copy table in Power BI and paste it here. It can tehn be copied easily to run some tests and come up with the solution

Please mark the question solved when done and consider giving kudos if posts are helpful.

Contact me privately for support with any larger-scale BI needs, tutoring, etc.

Cheers

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey AIB Super User,

Thanks a ton. Unfortunately, I have some confidential personal data in the reports, and hence created a dummy sample. Please can you help me with this, only thing to take care is I have ID rows going N times different for each ID... all I need is to value column to align in the row of Key name replacing null ( but I will wait for your advice).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Paste the dummy then please

Please mark the question solved when done and consider giving kudos if posts are helpful.

Contact me privately for support with any larger-scale BI needs, tutoring, etc.

Cheers

Helpful resources

Join our Community Sticker Challenge 2026

If you love stickers, then you will definitely want to check out our Community Sticker Challenge!

Power BI Monthly Update - January 2026

Check out the January 2026 Power BI update to learn about new features.

| User | Count |

|---|---|

| 13 | |

| 11 | |

| 11 | |

| 7 | |

| 6 |