FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI Data Visualization World Championships is back! It's time to submit your entry. Live now!

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Conditionally combining multiple rows into one...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Conditionally combining multiple rows into one column

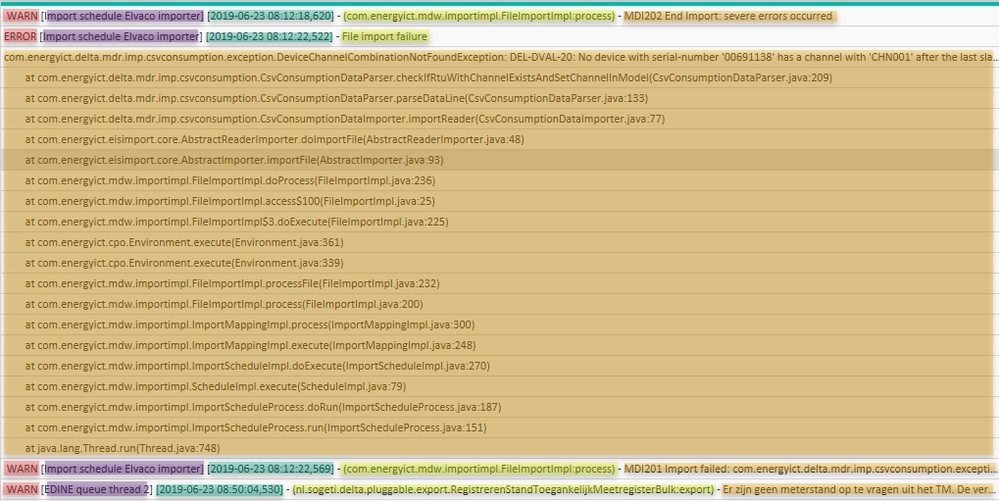

I have a folder full of files in the format below. I want to load these into PowerBI using Power Query.

See attached picture, I colored every column with its own color.

Loading and splitting the columns that are NOT colored orange are not a problem.

The orange values are an error message. Most of the time these are short string in the same line, but sometimes they contain detailed error logs of mulitple rows.

How can I get the orange colored values into one column, where the multiple row logs will end up in one "cell" or value ?

WARN [Import schedule Elvaco importer] [2019-06-23 08:12:18,620] - (com.energyict.mdw.importimpl.FileImportImpl:process) - MDI202 End Import: severe errors occurred

ERROR [Import schedule Elvaco importer] [2019-06-23 08:12:22,522] - File import failure

com.energyict.delta.mdr.imp.csvconsumption.exception.DeviceChannelCombinationNotFoundException: DEL-DVAL-20: No device with serial-number '00691138' has a channel with 'CHN001' after the last slash ('/') in the external name.

at com.energyict.delta.mdr.imp.csvconsumption.CsvConsumptionDataParser.checkIfRtuWithChannelExistsAndSetChannelInModel(CsvConsumptionDataParser.java:209)

at com.energyict.delta.mdr.imp.csvconsumption.CsvConsumptionDataParser.parseDataLine(CsvConsumptionDataParser.java:133)

at com.energyict.delta.mdr.imp.csvconsumption.CsvConsumptionDataImporter.importReader(CsvConsumptionDataImporter.java:77)

at com.energyict.eisimport.core.AbstractReaderImporter.doImportFile(AbstractReaderImporter.java:48)

at com.energyict.eisimport.core.AbstractImporter.importFile(AbstractImporter.java:93)

at com.energyict.mdw.importimpl.FileImportImpl.doProcess(FileImportImpl.java:236)

at com.energyict.mdw.importimpl.FileImportImpl.access$100(FileImportImpl.java:25)

at com.energyict.mdw.importimpl.FileImportImpl$3.doExecute(FileImportImpl.java:225)

at com.energyict.cpo.Environment.execute(Environment.java:361)

at com.energyict.cpo.Environment.execute(Environment.java:339)

at com.energyict.mdw.importimpl.FileImportImpl.processFile(FileImportImpl.java:232)

at com.energyict.mdw.importimpl.FileImportImpl.process(FileImportImpl.java:200)

at com.energyict.mdw.importimpl.ImportMappingImpl.process(ImportMappingImpl.java:300)

at com.energyict.mdw.importimpl.ImportMappingImpl.execute(ImportMappingImpl.java:248)

at com.energyict.mdw.importimpl.ImportScheduleImpl.doExecute(ImportScheduleImpl.java:270)

at com.energyict.mdw.importimpl.ScheduleImpl.execute(ScheduleImpl.java:79)

at com.energyict.mdw.importimpl.ImportScheduleProcess.doRun(ImportScheduleProcess.java:187)

at com.energyict.mdw.importimpl.ImportScheduleProcess.run(ImportScheduleProcess.java:151)

at java.lang.Thread.run(Thread.java:748)

WARN [Import schedule Elvaco importer] [2019-06-23 08:12:22,569] - (com.energyict.mdw.importimpl.FileImportImpl:process) - MDI201 Import failed: com.energyict.delta.mdr.imp.csvconsumption.exception.DeviceChannelCombinationNotFoundException: DEL-DVAL-20: No device with serial-number '00691138' has a channel with 'CHN001' after the last slash ('/') in the external name.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@PhilC thank you for your help, I think I solved it using parts of your script.

1. Import file in 1 column

2. Insert new column, with values of original column only if the line starts with (ERROR, WARN, FATAL). So the messages containing multiple lines will not be put into this new column

3. Fill new column down

4. Group by on this new column as the key (it contains a timestamp so thats possible), and the original column as the value, and removing linefeeds in the grouping process:

#"Group" = Table.Group(#"Fill Down", {"Temp"}, {{"Column1", each Text.Combine([Column1],"#(lf)"), type text}})5. This solves the original problem. Now split by delimiters and set column types to finish

@PhilC please explain what your thinking process was by adding the index column and the logic that uses this index column? I didn't need this part and this made it slow. Maybe I'm missing something?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

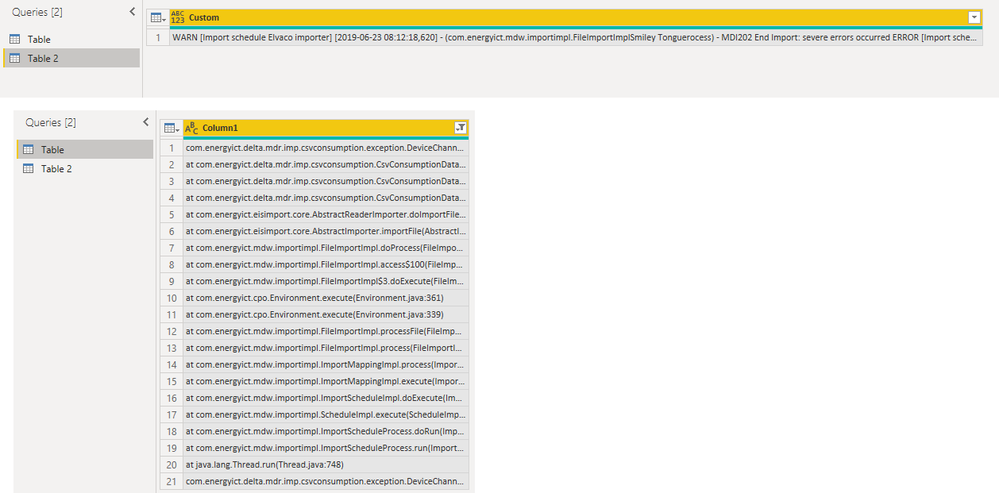

Do you want to get the output below?

If so, we could achieve that in Query Editor. Please refer to the Applied Steps in my attachement.

If you still need help, please share your desired output so that we could help further on it.

Best Regards,

Cherry

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

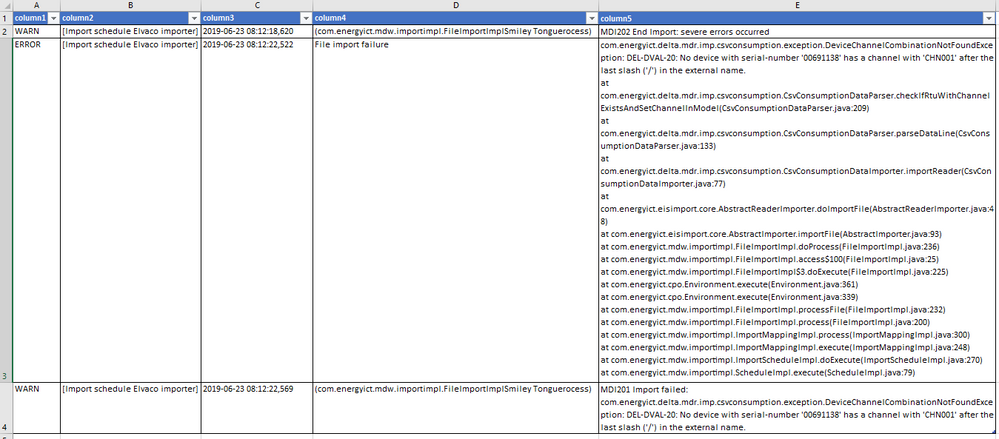

Hi @v-piga-msft

Thanks for your reply. Unless I understand your solution incorrectly, this is not the output I'm looking for. I attached a screenshot of an Excel table with the preferred output. The first 4 columns are not a problem for me, column5 is.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

HI, in the data you provided initially, has it been edited at all or is it exactly as per the original file? I am wondering why for the second record the "com...." is on its own line and not following on from "File import failure"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @PhilC , the data is original (except for the emoticons parsed by this forum).

What you are referring to is the exact reason I need help 🙂

When a row starts with a value not equal to ERROR, then the last value in the row is the error message.

When a row starts with value ERROR, then the last value is not available, and the error message is in the following lines until a new line appears starting with starting value in ([space]WARN, ERROR, FATAL).

It seems I cannot attach a txt file here so here is a new example, sliglty edited to make it shorter:

FATAL [CPS queue thread 0] [2019-06-25 00:30:52,426] - (com.energyict.nl.cps.mail:checkBouncyCastleProvider) - Can't find BC provider - adding WARN [EDINE queue thread 3] [2019-06-25 00:50:11,005] - (nl.sogeti.delta.pluggable.export.export) - Er zijn geen meterstand op te vragen uit het TM WARN [EDINE queue thread 3] [2019-06-25 01:50:29,938] - (nl.sogeti.delta.pluggable.export.export) - Er zijn geen meterstand op te vragen uit het TM ERROR [FTP queue thread 3] [2019-06-25 02:41:49,751] - Connection timed out: connect java.net.ConnectException: Connection timed out: connect at java.net.DualStackPlainSocketImpl.waitForConnect(Native Method) at java.lang.Thread.run(Thread.java:748) WARN [FTP queue thread 3] [2019-06-25 02:41:49,767] - (com.energyict.mdw.exportimpl.logFailure) - Export handler error : java.net.ConnectException: Connection timed out ERROR [FTP queue thread 2] [2019-06-25 02:41:52,184] - java.net.ConnectException: Connection timed out: connect java.lang.RuntimeException: java.net.ConnectException: Connection timed out: connect at com.energyict.mdw.exportimpl.SFtpActionStrategy$SftpClient.connectAndLogIn(SFtpActionStrategy.java:206) at java.lang.Thread.run(Thread.java:748) Caused by: java.net.ConnectException: Connection timed out: connect at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350) ... 17 more WARN [FTP queue thread 2] [2019-06-25 02:41:52,184] - (com.energyict.mdw.exportimpl.logFailure) - Export handler error : Connection timed out: connect

Also attached a screenshot of this text in Notepad++ including the indicators for Carriage Return, Line Feed, Spaces and Tabs.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have a functional idea how to do this but I'm not skilled enough in M to write script for this.

"Break up into seperate columns" is not specified because it should not be the problem here.

IF SUBSTRING(line, 1,5) in ([space]WARN, FATAL) THEN read entire line until carriage return line break, and then break up in seperate columns

IF SUBSTRING(line, 1,5) IN (ERROR) then remove every carriage return line break for the current line and all following lines until a new line starts with SUBSTRING(line, 1,5) in ([space]WARN, FATAL), and then break up in seperate columns

I found options in M to replace/remove carriage raturn / line breaks but this seems to work only after importing, and it should be done before importing / at the importing stage.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The following code works for the original data saved as a text file, hoping it works for the full dataset. It feels like there should be a more elegant solution, but would require a level of knowledge greater than mine.

let

Source = Csv.Document(File.Contents("N:\DVC-R\8 ED(RO)\Data and Statistics\Subscriptions, Resources and Software\Power BI\Support\Log File Extract.txt"),[Delimiter="|", Columns=1, Encoding=1252, QuoteStyle=QuoteStyle.None]),

#"Added Custom" = Table.AddColumn(Source, "Row Begin Check", each if Text.Start(Text.Trim([Column1]),1) = Text.Upper(Text.Start(Text.Trim([Column1]),1)) then [Column1] else null, type text),

#"Added Index" = Table.AddIndexColumn(#"Added Custom", "Index", 1, 1),

#"Changed Type" = Table.TransformColumnTypes(#"Added Index",{{"Index", Int64.Type}}),

#"Added Custom1" = Table.AddColumn(#"Changed Type", "Custom", each try

if [Row Begin Check] <> null and #"Changed Type"[Row Begin Check]{[Index]} = null then " - "&#"Changed Type"[Column1]{[Index]} else null

otherwise null),

#"Added Custom2" = Table.AddColumn(#"Added Custom1", "Custom.1", each try

if #"Changed Type"[Row Begin Check]{[Index]-2} <> null and [Row Begin Check] = null then 1 else null

otherwise null),

#"Filtered Rows" = Table.SelectRows(#"Added Custom2", each ([Custom.1] = null)),

#"Inserted Merged Column" = Table.AddColumn(#"Filtered Rows", "Merged", each Text.Combine({[Row Begin Check], [Custom]}, ""), type text),

#"Replaced Value" = Table.ReplaceValue(#"Inserted Merged Column","",null,Replacer.ReplaceValue,{"Merged"}),

#"Filled Down2" = Table.FillDown(#"Replaced Value",{"Merged"}),

#"Added Custom3" = Table.AddColumn(#"Filled Down2", "Custom.2", each if [Row Begin Check] = null then [Column1] else [Merged]),

#"Grouped Rows1" = Table.Group(#"Added Custom3", {"Merged"}, {{"Combined", each Text.Combine([Custom.2],"#(lf)"), type text}}),

#"Removed Other Columns" = Table.SelectColumns(#"Grouped Rows1",{"Combined"}),

#"Split Column by Delimiter1" = Table.SplitColumn(#"Removed Other Columns", "Combined", Splitter.SplitTextByEachDelimiter({"["}, QuoteStyle.Csv, false), {"Column 1", "Column Temp"}),

#"Split Column by Delimiter2" = Table.SplitColumn(#"Split Column by Delimiter1", "Column Temp", Splitter.SplitTextByEachDelimiter({"] ["}, QuoteStyle.Csv, false), {"Column 2", "Column Temp"}),

#"Split Column by Delimiter3" = Table.SplitColumn(#"Split Column by Delimiter2", "Column Temp", Splitter.SplitTextByEachDelimiter({"] - "}, QuoteStyle.Csv, false), {"Column 3", "Column Temp"}),

#"Split Column by Delimiter4" = Table.SplitColumn(#"Split Column by Delimiter3", "Column Temp", Splitter.SplitTextByEachDelimiter({" - "}, QuoteStyle.Csv, false), {"Column 4", "Column 5"}),

#"Changed Type1" = Table.TransformColumnTypes(#"Split Column by Delimiter4",{{"Column 1", type text}, {"Column 2", type text}, {"Column 3", type text}, {"Column 4", type text}, {"Column 5", type text}})

in

#"Changed Type1"Cheers,

PhilC

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks! I guess there is a infinite loop problem because with a full production file it just keeps on loading and says the file is gigabytes in size, and eventually my system is out of memory and it crashes 🙂 But I will try to understand your steps and find the problem, it's a good start.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ah, sorry. Using the Index offset might be the issue on a larger number of rows.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@PhilC thank you for your help, I think I solved it using parts of your script.

1. Import file in 1 column

2. Insert new column, with values of original column only if the line starts with (ERROR, WARN, FATAL). So the messages containing multiple lines will not be put into this new column

3. Fill new column down

4. Group by on this new column as the key (it contains a timestamp so thats possible), and the original column as the value, and removing linefeeds in the grouping process:

#"Group" = Table.Group(#"Fill Down", {"Temp"}, {{"Column1", each Text.Combine([Column1],"#(lf)"), type text}})5. This solves the original problem. Now split by delimiters and set column types to finish

@PhilC please explain what your thinking process was by adding the index column and the logic that uses this index column? I didn't need this part and this made it slow. Maybe I'm missing something?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous

Great you found a way to use the code and get it to work for you.

Regarding the INDEX column, it looked like the third row should be in column 5 (from the original data posted), but there was no "-" delimiter to split between column 4 and column 5 after "File Import Failure" (one row 2), I used the index and offset to bring that row up and add in the "-" so I could split on it.

If it is not needed, then great, no need to have complexity if not required.

Cheers

Helpful resources

Power BI Dataviz World Championships

The Power BI Data Visualization World Championships is back! It's time to submit your entry.

Power BI Monthly Update - January 2026

Check out the January 2026 Power BI update to learn about new features.

| User | Count |

|---|---|

| 11 | |

| 10 | |

| 8 | |

| 7 | |

| 7 |