Huge last-minute discounts for FabCon Vienna from September 15-18, 2025

Supplies are limited. Contact info@espc.tech right away to save your spot before the conference sells out.

Get your discount- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Score big with last-minute savings on the final tickets to FabCon Vienna. Secure your discount

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Can I connect to a Amazon S3 bucket using Powe...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can I connect to a Amazon S3 bucket using Power Query?

Hi

Amazon stores billing data in S3 buckets, i want to retrieve the CSV files and consolidate them. Is PowerBI/Power Query able to connect to S3 buckets?

Regards

Remi

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @fenixen,

As the Amazon S3 is a web service and supports the REST API. You can try to use web data source to get data. See:

Amazon S3 REST API Introduction

How to call REST APIs and parse JSON with Power BI

Another I can think of is importing data from Amazon S3 into Amazon Redshift. then in Power BI desktop, use Amazon Redshift connector get data. See: Loading Data from Amazon S3.

Best Regards,

Qiuyun Yu

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

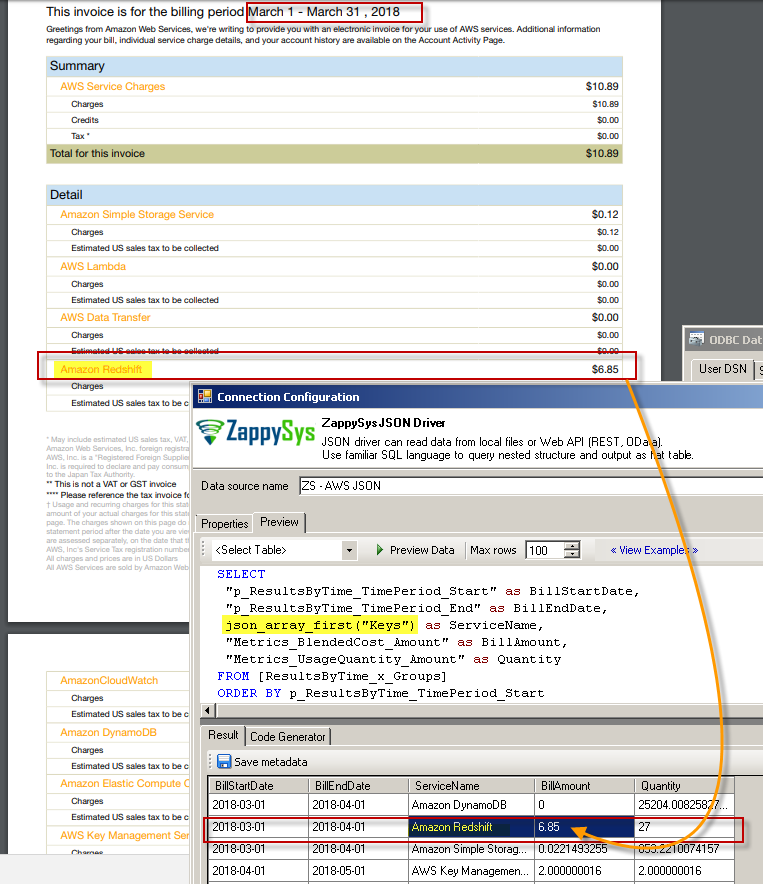

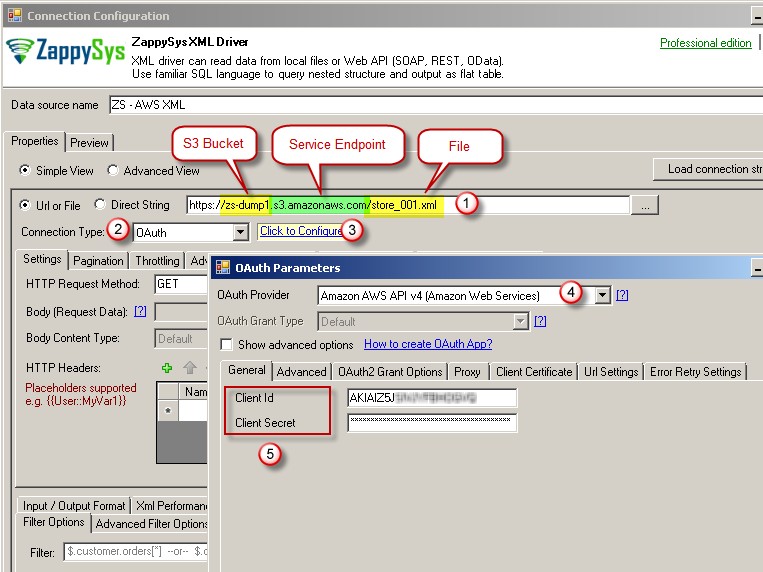

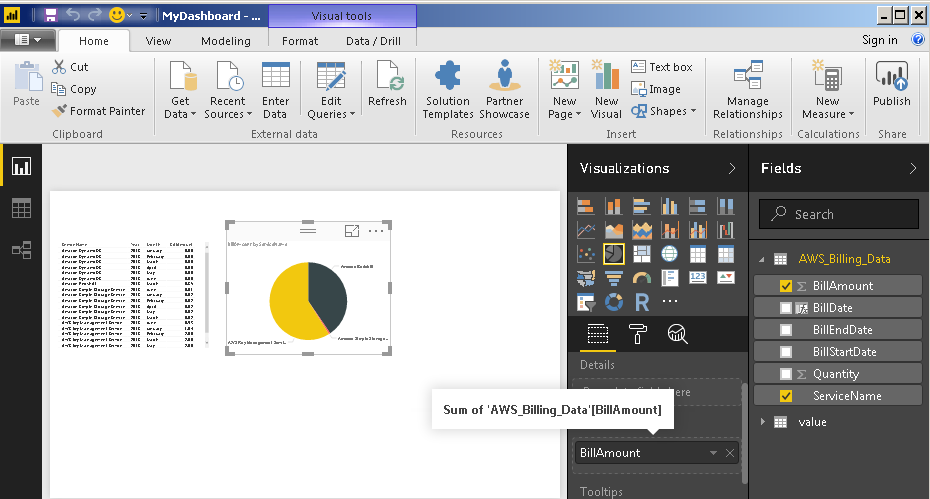

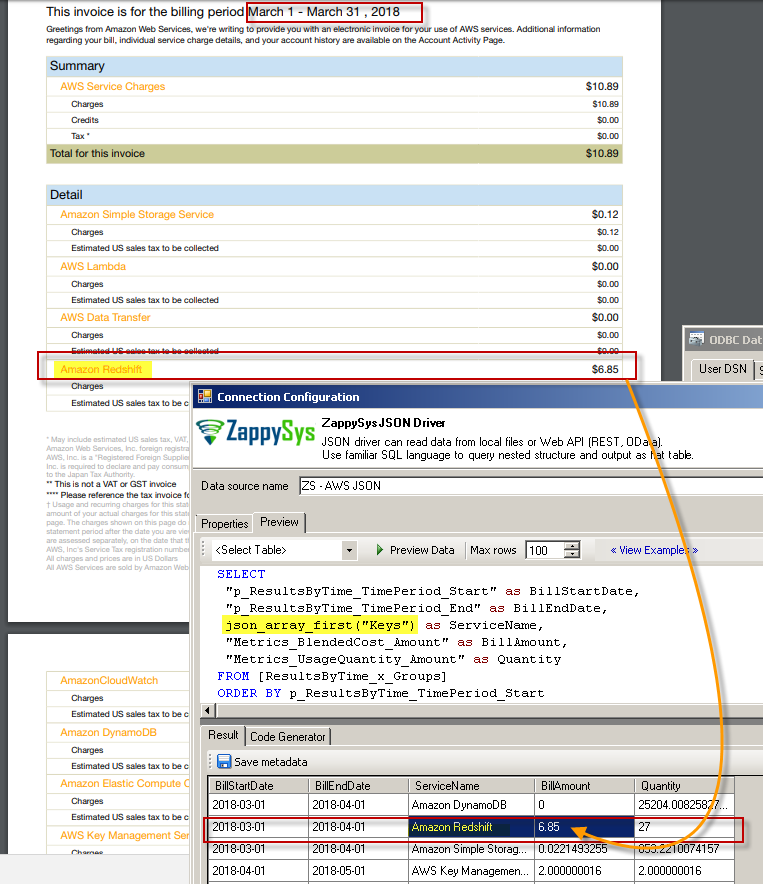

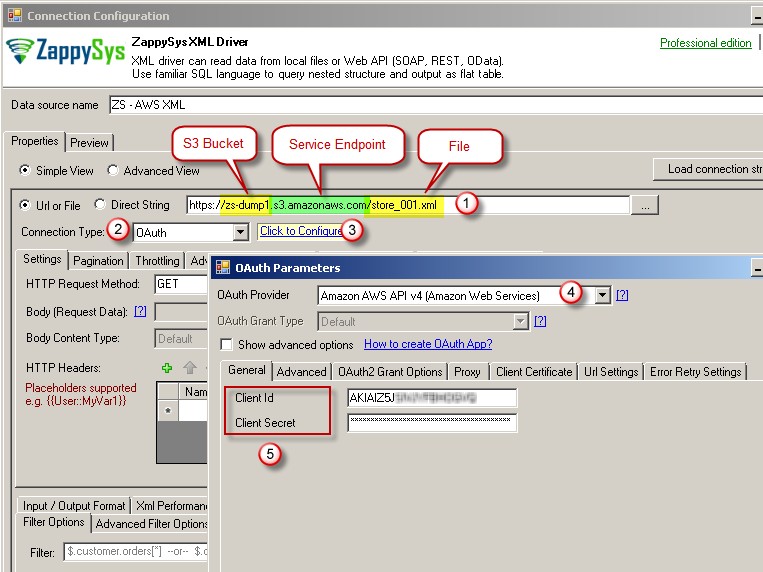

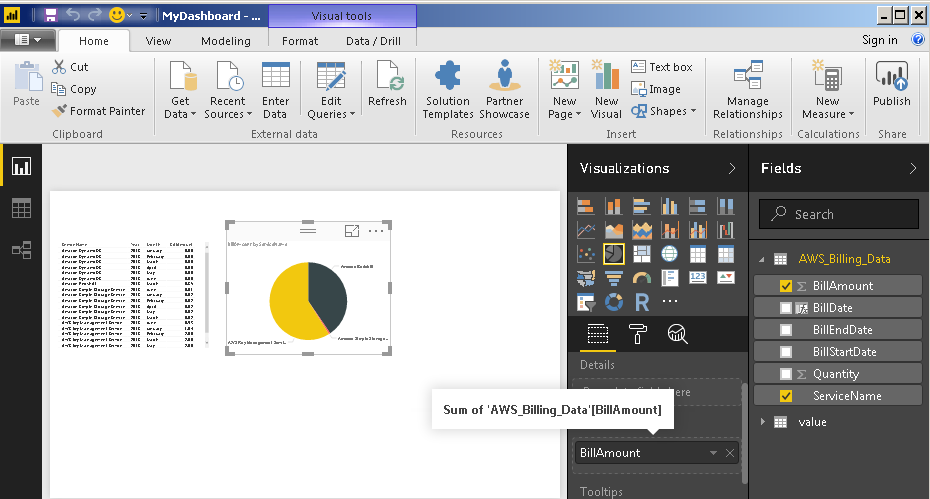

ZappySys has released API drivers (XML and JSON) with AWS API support. See below blog post it explains scenario of how to access AWS S3 data in Power BI. It also explains Billing / Cost API usecase via API calls. ZappySys will rease CSV driver very soon which will support your scenario of reading CSV from S3 in Power BI but until that you can call Billing API (JSON format)

https://zappysys.com/blog/read-amazon-s3-data-power-bi-aws-json-xml-api/

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I used a python script in Power BI. See my suggestion at:

https://stackoverflow.com/questions/51801521/connecting-power-bi-to-s3-bucket/63185488#63185488

Hope it helps.

DB Waller

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello! I applied a similar solution to our process extracting data from a MP Tool, but to autorefresh a dashboard you need to set up a On-premise app and this lives in your local computer, hence if it is not on it will not refresh because the script is there

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I have tried using Azure Data Factory to pull CSV from S3 bucket. If you have some ETL or SSIS experience, then you are good to go with ADF. I managed to pull the CSV into a blob storage. You can then insert the data from CSV into any SQL database and connect to Power BI.

Good luck!

Peter

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Great Peter ... do you have any examples (code, screenshots) that you could share with me?

Thank you

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Let me know if you need more help! Hope the Data Factory solution meet your expectation.

Good luck and have a good day!

Peter

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Check this website

First build the Connections for the S3 with bucket name, key and secret, and another to connect to your SQL database. Then run a Copy pipeline to pull from S3 and sink in Azure SQL.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @fenixen , could you solve your problem? I´m facing the same situation now. I´m able to get url, credentials and s3path with Power Query (Power BI) but not sure how to use them to get the csv file with the data that I need.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @gdecome

I landed on a semi-manual solution using https://s3browser.com/ to retrieve the data files and then connecting to the files using Power Query. I didn't have people available to help me with API.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Best idea is to use Redshift in this case.

PBI Architect

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This helps!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

ZappySys has released API drivers (XML and JSON) with AWS API support. See below blog post it explains scenario of how to access AWS S3 data in Power BI. It also explains Billing / Cost API usecase via API calls. ZappySys will rease CSV driver very soon which will support your scenario of reading CSV from S3 in Power BI but until that you can call Billing API (JSON format)

https://zappysys.com/blog/read-amazon-s3-data-power-bi-aws-json-xml-api/

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @fenixen,

As the Amazon S3 is a web service and supports the REST API. You can try to use web data source to get data. See:

Amazon S3 REST API Introduction

How to call REST APIs and parse JSON with Power BI

Another I can think of is importing data from Amazon S3 into Amazon Redshift. then in Power BI desktop, use Amazon Redshift connector get data. See: Loading Data from Amazon S3.

Best Regards,

Qiuyun Yu

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've been through this information and the process seems non-trivial, as compared to connecting to other REST API services. Has anyone actually connected PBI to an S3 bucket as a data source? I'm looking for actual experience and not theory per se. Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is anyone still looking for this? I have this up and running .... I can't share my query, but I might be able to point you in the right direction.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

please share the steps to connect to S3 bucket from PBI desktop.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sure. This is how I do it:

1. Create a new Lambda Function ... it can be empty for now

2. Set up a new API in API Gateway

3. Create a new GET method

3.1 Select Lambda Function for the integration type

3.2 Select the Use Lambda Proxy integration option

3.3 Select the region and type in the name of the lambda function you created in step 1

4. Edit your lambda function.

4.1 Using the AWS SDK, generate a url w/ pre-signed key for your file

4.2 Return a 303 redirect to the url from step 4.1

Here is a sample of a lambda function in python 2.7:

bucket = 'bucket-name'

key = 'path-to-file'

client = boto3.client('s3')

link = client.generate_presigned_url(

'get_object',

{'Bucket': bucket, 'Key': key},

7200, 'GET')

return {

"statusCode": 303,

"headers": {'Location': link}

}

You can use this PowerBI query as a starting point:

let

// Replace the URI with the "invoke url" of your API Gateway endpoint

// See: https://docs.aws.amazon.com/apigateway/latest/developerguide/how-to-call-api.html#how-to-call-api-console

uri = "https://your-web-api.execute-api.us-east-1.amazonaws.com/stage/endpoint",

// PowerBI should understand the 303 redirect and follow it to your presigned s3 url

// Make sure to set IsRetry to true so that PowerBI gets a fresh url with a

// fresh presigned key every time

raw = Web.Contents(uri, [IsRetry=true]),

// My file is a gzipped utf-8 tsv, so I need to decompress it

// and tell the csv parser the delimiter and encoding

binary = Binary.Decompress(raw, Compression.GZip),

csv = Csv.Document(binary, [

Delimiter="#(tab)",

extraValues=ExtraValues.Ignore,

Encoding=65001]) // 65001 is utf8

in

csv

Once you get everything working with a single file, you can parameterize your API and Lambda function to accept an s3 path so that you can pull in anything from your S3 account.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Surely, this helped many people, that is the way I ended up setting the lambda - powerBi but now what is happing is that the lambda is being called twice by power BI I had tables references to the main table but I disable their refreshing and only is refresing the main call, do you know what can be happening?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

This is great, I am trying to understand, if in my case I have a CSV, what do I replace this part of code with?

// My file is a gzipped utf-8 tsv, so I need to decompress it

// and tell the csv parser the delimiter and encoding

binary = Binary.Decompress(raw, Compression.GZip),

csv = Csv.Document(binary, [

Delimiter="#(tab)",

extraValues=ExtraValues.Ignore,

Encoding=65001]) // 65001 is utf8

Or I just ignore?

Thank you,

Best

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

This is great, I am trying to understand, if in my case I have a CSV, what do I replace this part of code with?

// My file is a gzipped utf-8 tsv, so I need to decompress it

// and tell the csv parser the delimiter and encoding

binary = Binary.Decompress(raw, Compression.GZip),

csv = Csv.Document(binary, [

Delimiter="#(tab)",

extraValues=ExtraValues.Ignore,

Encoding=65001]) // 65001 is utf8

Or I just ignore?

Thank you,

Best

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Before you roll this out to production you should think about some kind of authentication. The normal AWS IAM authentication tokens don't play nicely with PowerBI, so you will need to use a custom authentication method for your API Gateway: https://docs.aws.amazon.com/apigateway/latest/developerguide/use-custom-authorizer.html. I ended up using basic auth which is not the strongest thing in the world, but API Gateway uses https, so it should be secure enough.