FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Vote for your favorite vizzies from the Power BI Dataviz World Championship submissions. Vote now!

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Calculate average based on dynamic column refe...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Calculate average based on dynamic column reference

Hi all

I have source file with following columns: Country, Retailer, Division, Product, 007.2020, 008.2020, 009.2020, 010.2020, 011.2020, 012.2020, 001.2021, Result.

I would like to calculate the average over all columns referring to a month (i.e. in above case 007.2020 to 001.2021).

However, next time i receive the source file, the months may be different, but they will always be sitting in the same position and there will always be 6 months.

I already tried this formula, but get an error:

= Table.AddColumn(#"Pivoted Column", "Average", each List.Average({List.FindText(Table.ColumnNames(#"Pivoted Column"),"202")}), type number)

This is the error I get:

Expression.Error: We cannot apply operator - to types List and List.

Details:

Operator=-

Left=[List]

Right=[List]

Would anyone know how i could get this to work, in either M or DAX?

Thanks!

Bart

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @BartVW

in order to create a dynamically solution, you have to tell us what can be a rule, to select the correct columns. Here an example where I calculated the average of all columns that contain a ".". If this is your requirement, you can use the code, otherwise tell us all names of your columns, and we will find another logic. Replace the source-step and changedtype with your real data table

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUTICYmMgNgFiUyA2A2JzpdhYAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Column1 = _t, Column2 = _t, #"007.2020" = _t, #"008.2020" = _t, #"009.2020" = _t, #"010.2020" = _t, Result = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Column1", Int64.Type}, {"Column2", Int64.Type}, {"007.2020", Int64.Type}, {"008.2020", Int64.Type}, {"009.2020", Int64.Type}, {"010.2020", Int64.Type}, {"Result", Int64.Type}}),

AddAverageOfMonths = Table.AddColumn

(

#"Changed Type",

"AverageMonth",

(row)=> List.Average(Record.FieldValues(Record.SelectFields(row, List.Select(Table.ColumnNames(#"Changed Type"), each Text.Contains(_,".")))))

)

in

AddAverageOfMonths

Copy paste this code to the advanced editor in a new blank query to see how the solution works.

If this post helps or solves your problem, please mark it as solution (to help other users find useful content and to acknowledge the work of users that helped you)

Kudoes are nice too

Have fun

Jimmy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @BartVW

in order to create a dynamically solution, you have to tell us what can be a rule, to select the correct columns. Here an example where I calculated the average of all columns that contain a ".". If this is your requirement, you can use the code, otherwise tell us all names of your columns, and we will find another logic. Replace the source-step and changedtype with your real data table

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUTICYmMgNgFiUyA2A2JzpdhYAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Column1 = _t, Column2 = _t, #"007.2020" = _t, #"008.2020" = _t, #"009.2020" = _t, #"010.2020" = _t, Result = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Column1", Int64.Type}, {"Column2", Int64.Type}, {"007.2020", Int64.Type}, {"008.2020", Int64.Type}, {"009.2020", Int64.Type}, {"010.2020", Int64.Type}, {"Result", Int64.Type}}),

AddAverageOfMonths = Table.AddColumn

(

#"Changed Type",

"AverageMonth",

(row)=> List.Average(Record.FieldValues(Record.SelectFields(row, List.Select(Table.ColumnNames(#"Changed Type"), each Text.Contains(_,".")))))

)

in

AddAverageOfMonths

Copy paste this code to the advanced editor in a new blank query to see how the solution works.

If this post helps or solves your problem, please mark it as solution (to help other users find useful content and to acknowledge the work of users that helped you)

Kudoes are nice too

Have fun

Jimmy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Jimmy801 thank you, this works great and solves my immediate challenge.

As a PQ beginner, i would love to understand more how this works though, and had some questions:

- what is the "(row) ==> " syntax about? It looks like a function but without 'let' and 'in'?

- what is the reasoning to go from ColumnNames to List.Select to Record.SelectFields and Record.FieldValues? Why did my original solution not work, just using "each List.Average(List.FindText(Table.ColumnNames(#"Pivoted Column"),"202"))"

- why do you use the _ variable?

Appreciate all the help you can provide, and if you have any good links i could look at for these questions, that would be great too.

Cheers

Bart

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @BartVW

If my answer solved the problem, I would appreciate if you could mark it as solution.

I will shortly try to answer your question, but you could search the documentation for this as well.

- ()=> is the syntax of a function (or each would also work). I could have used also let and in to split my steps, but i prefered to put everything in on line, so no let and in is needed

- columnnames gibs you the headernames, list select filters according your logic (searching for the "." in your header names), Record.Selectfields applies a filter to a row, using the output of list.select as trigger, and Record.Values gives you the values of the rows that where filtered.

- the "_" is needed when you use the each keyword, and represents the input value of this function. (in my case in the the List.Select function and the _ represents a item in the list)

Hope things are a little more clearer now 🙂

If this post helps or solves your problem, please mark it as solution (to help other users find useful content and to acknowledge the work of users that helped you)

Kudoes are nice too

Have fun

Jimmy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

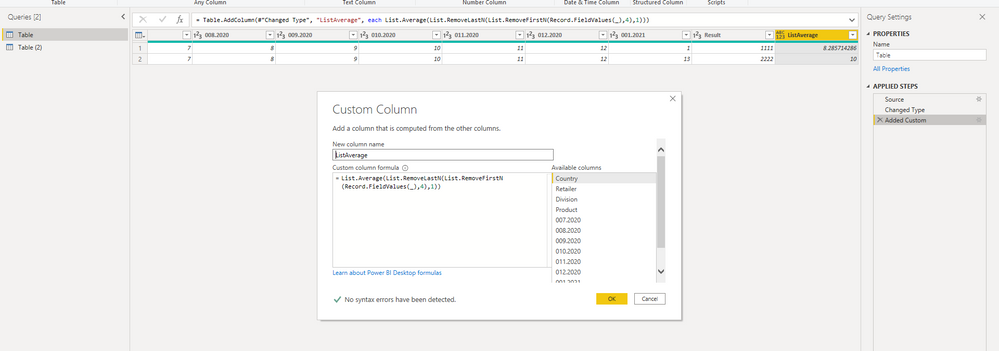

Hi @BartVW ,

Try this:

Add a custom column like so:

List.Average(List.RemoveLastN(List.RemoveFirstN(Record.FieldValues(_),4),1))

Or, try this:

1. UnPivot other columns.

2. Group.

3. Expand.

4. Pivot.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WcjZU0lEKAhEuICIARJgDsQUQWwKxoQGIAIkaGoEIMA/Ij9UB6gWJBIEIFxARYERArzGQMAICpdhYAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Country = _t, Retailer = _t, Division = _t, Product = _t, #"007.2020" = _t, #"008.2020" = _t, #"009.2020" = _t, #"010.2020" = _t, #"011.2020" = _t, #"012.2020" = _t, #"001.2021" = _t, Result = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Country", type text}, {"Retailer", type text}, {"Division", type text}, {"Product", type text}, {"007.2020", Int64.Type}, {"008.2020", Int64.Type}, {"009.2020", Int64.Type}, {"010.2020", Int64.Type}, {"011.2020", Int64.Type}, {"012.2020", Int64.Type}, {"001.2021", Int64.Type}, {"Result", Int64.Type}}),

#"Unpivoted Other Columns" = Table.UnpivotOtherColumns(#"Changed Type", {"Country", "Retailer", "Division", "Product", "Result"}, "Attribute", "Value"),

#"Grouped Rows" = Table.Group(#"Unpivoted Other Columns", {"Country", "Retailer", "Division", "Product"}, {{"All", each _, type table [Country=nullable text, Retailer=nullable text, Division=nullable text, Product=nullable text, Result=nullable number, Attribute=text, Value=number]}, {"Avg", each List.Average([Value]), type number}}),

#"Expanded All" = Table.ExpandTableColumn(#"Grouped Rows", "All", {"Attribute", "Value"}, {"Attribute", "Value"}),

#"Pivoted Column" = Table.Pivot(#"Expanded All", List.Distinct(#"Expanded All"[Attribute]), "Attribute", "Value"),

#"Reordered Columns" = Table.ReorderColumns(#"Pivoted Column",{"Country", "Retailer", "Division", "Product", "007.2020", "008.2020", "009.2020", "010.2020", "011.2020", "012.2020", "001.2021", "Avg"})

in

#"Reordered Columns"

Best Regards,

Icey

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @BartVW

Without a glance of your full dataset, I can so far only come up with such suggestions.

- List.FindText func returns a list, thus no more {} are needed here;

- Besides, the resulting list is a list of text, it's supposed to be enclosed in Number.From for a conversion before using List.Average.

As far as I'm concerned, I'd usually use DAX to average a list of values within a specific range. But it's not absolute as such; it depends on, more or less, the dataset.

| Thanks to the great efforts by MS engineers to simplify syntax of DAX! Most beginners are SUCCESSFULLY MISLED to think that they could easily master DAX; but it turns out that the intricacy of the most frequently used RANKX() is still way beyond their comprehension! |

DAX is simple, but NOT EASY! |

Helpful resources

Power BI Dataviz World Championships

Vote for your favorite vizzies from the Power BI World Championship submissions!

Join our Community Sticker Challenge 2026

If you love stickers, then you will definitely want to check out our Community Sticker Challenge!

Power BI Monthly Update - January 2026

Check out the January 2026 Power BI update to learn about new features.

| User | Count |

|---|---|

| 14 | |

| 11 | |

| 8 | |

| 7 | |

| 6 |