Join us at FabCon Vienna from September 15-18, 2025

The ultimate Fabric, Power BI, SQL, and AI community-led learning event. Save €200 with code FABCOMM.

Get registered- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Compete to become Power BI Data Viz World Champion! First round ends August 18th. Get started.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Add a unique identifier column to blocks with repe...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Add a unique identifier column to blocks with repeating values

Hey All.

I have time series data of the following structure.

The "Stage" values are repeating across the table for different periods of time.

| seconds | Stage |

| 1 | A |

| 2 | A |

| 3 | B |

| 4 | B |

| 5 | B |

| 6 | B |

| 7 | C |

| 8 | C |

| 9 | A |

I would like to add a "Stage_ID" column which identifies each stage period uniquelly.

| seconds | Stage | Stage_ID |

| 1 | A | 111 |

| 2 | A | 111 |

| 3 | B | 222 |

| 4 | B | 222 |

| 5 | B | 222 |

| 6 | B | 222 |

| 7 | C | 333 |

| 8 | C | 333 |

| 9 | A | 444 |

Is there a way to achieve this with Power Query / DAX?

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @melmell

here a similar approach like @CNENFRNL , but stage period numbered starting from 1, 2 etc.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUXJUitWJVjKCs4yBLCcwywTOMoWzzOAscyDLGcyygLMsIabEAgA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [seconds = _t, Stage = _t]),

GroupLocal = Table.Group

(

Source,

"Stage",

{{"allrows", each _}},

GroupKind.Local

),

AddIndex = Table.AddIndexColumn(GroupLocal, "Index", 1, 1),

ExpandTable = Table.ExpandTableColumn(AddIndex, "allrows", {"seconds"}, {"seconds"})

in

ExpandTable

Copy paste this code to the advanced editor in a new blank query to see how the solution works.

If this post helps or solves your problem, please mark it as solution (to help other users find useful content and to acknowledge the work of users that helped you)

Kudoes are nice too

Have fun

Jimmy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @melmell

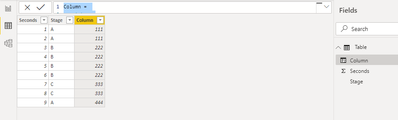

Based on your description, I created data to reproduce your scenario. The pbix file is attached in the end.

Table:

You may create a calculated column as below.

Column =

var tab =

ADDCOLUMNS(

'Table',

"Flag",

var laststage =

LOOKUPVALUE('Table'[Stage],'Table'[Seconds],[Seconds]-1,Blank())

return

IF(

ISBLANK(laststage)||laststage<>[Stage],

1,0

)

)

var newtab =

ADDCOLUMNS(

tab,

"Result",

var val =

SUMX(

FILTER(

tab,

[Seconds]<=EARLIER('Table'[Seconds])

),

[Flag]

)

return

val*100+val*10+val

)

return

SUMX(

FILTER(

newtab,

[Seconds]=EARLIER('Table'[Seconds])&&

[Stage]=EARLIER('Table'[Stage])

),

[Result]

)

Result:

Best Regards

Allan

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @melmell

here a similar approach like @CNENFRNL , but stage period numbered starting from 1, 2 etc.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUXJUitWJVjKCs4yBLCcwywTOMoWzzOAscyDLGcyygLMsIabEAgA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [seconds = _t, Stage = _t]),

GroupLocal = Table.Group

(

Source,

"Stage",

{{"allrows", each _}},

GroupKind.Local

),

AddIndex = Table.AddIndexColumn(GroupLocal, "Index", 1, 1),

ExpandTable = Table.ExpandTableColumn(AddIndex, "allrows", {"seconds"}, {"seconds"})

in

ExpandTable

Copy paste this code to the advanced editor in a new blank query to see how the solution works.

If this post helps or solves your problem, please mark it as solution (to help other users find useful content and to acknowledge the work of users that helped you)

Kudoes are nice too

Have fun

Jimmy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

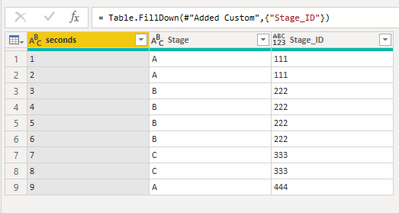

Hi, @melmell , you may want to try this pattern

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUXJUitWJVjKCs4yBLCcwywTOMoWzzOAscyDLGcyygLMsIabEAgA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [seconds = _t, Stage = _t]),

bins = Table.Group(Source, {"Stage"}, {{"bin", each List.Min([seconds])}}, GroupKind.Local)[bin],

#"Added Custom" = Table.AddColumn(Source, "Stage_ID", each [pos = List.PositionOf(bins, [seconds]), result = if pos=-1 then null else Text.Repeat(Text.From(pos+1),3)][result]),

#"Filled Down" = Table.FillDown(#"Added Custom",{"Stage_ID"})

in

#"Filled Down"

| Thanks to the great efforts by MS engineers to simplify syntax of DAX! Most beginners are SUCCESSFULLY MISLED to think that they could easily master DAX; but it turns out that the intricacy of the most frequently used RANKX() is still way beyond their comprehension! |

DAX is simple, but NOT EASY! |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey @CNENFRNL ,

I tried your solution. It works well for small datasets.

I have a 200K table and the processing hung and didn't finish.

Thinking about using Python

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @melmell

did you check my solution? I'm wondering how the performance is of this code

BR

Jimmy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey @Jimmy801

Just finished the testing. Worked like a charm.

Performence wise - 200K rows loaded within several minutes.