- Power BI: Gateway Monitoring & Administrating- Par...

Introduction

As a Power BI administrator, it is always difficult to monitor the on-premises gateways within an organization, especially when the number of gateways is growing rapidly. Today I'm going to explain how you can effectively administer and monitor those gateways in Power BI itself. Along the way, I'll share lots of Tips & Tricks.

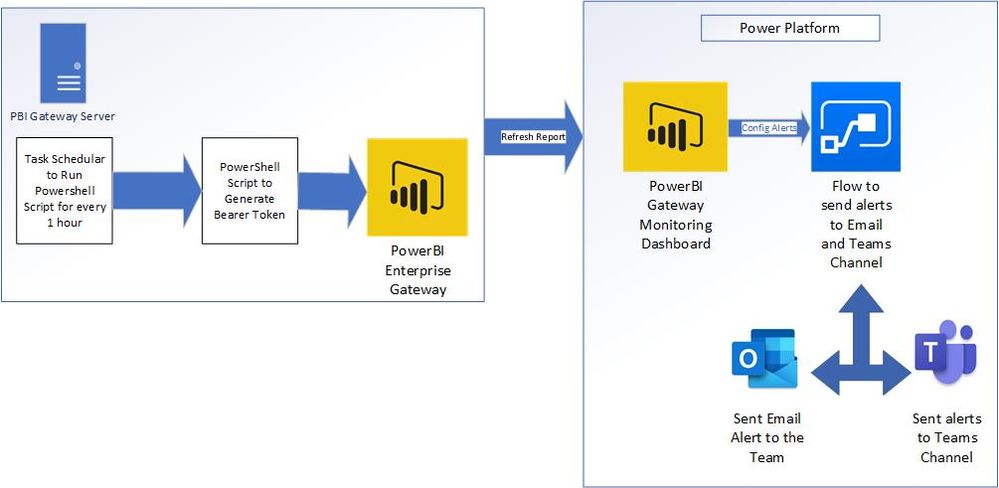

Architecture

The Visio diagram below shows the architecture of our entire gateway monitoring system.

Power BI Service Account

The very first step is to get a service account with a Pro license, because we are going to call some APIs. Use this service account rather than your personal account.

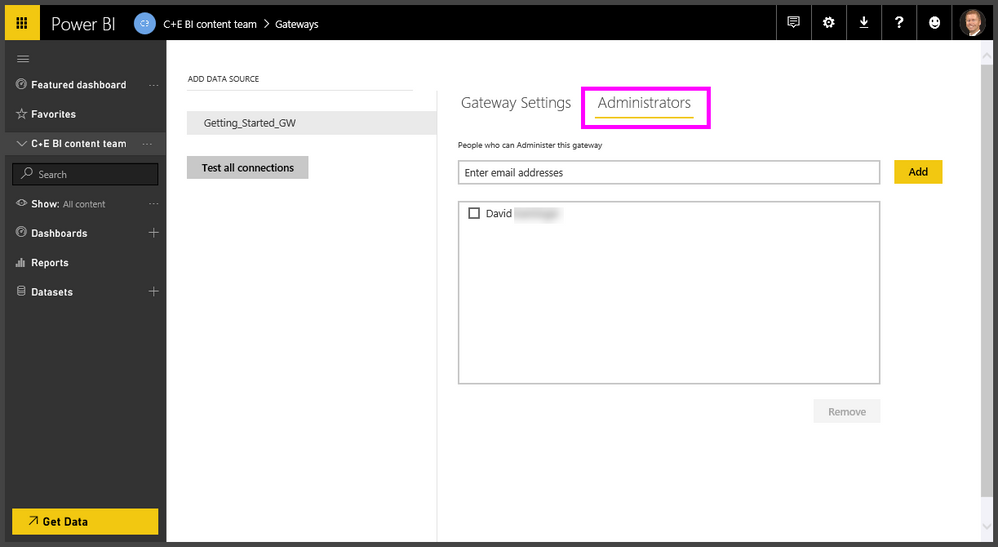

Once you have the service account, add it to all your gateways as an administrator.

Tips: Instead of adding the service account directly to the gateway admins, create a Distribution List (group) and add the service account to the DL, for ease of management.

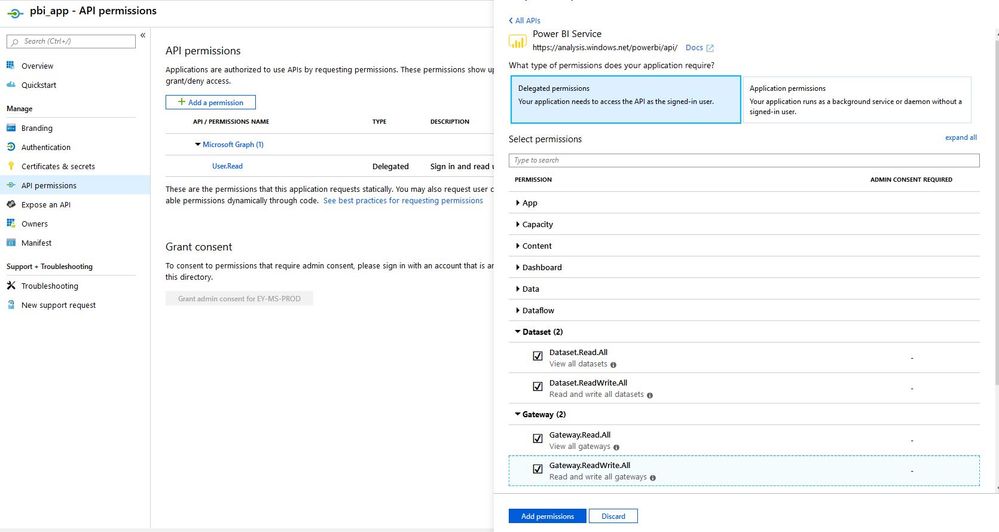

To call the APIs we also need an Application ID (Client ID), and it needs the API permissions below.

Now we have the service account and the Application ID. With these, we are ready to generate the bearer token and call the API.

Bearer Token using PowerShell

We are going to automate generating the bearer token used to call the API. The best way to do this is with PowerShell. We could also generate the token with an M query in Power BI, but then the service account user name and password would have to be stored in the report, which is insecure because it exposes the credentials within the report.

Below is the PowerShell script I used to generate the bearer token and save it in a text file.

Function Get-AADToken {

Param(

[parameter(Mandatory = $true)][string]$Username,

[parameter(Mandatory = $true)][string]$Password,

[parameter(Mandatory = $true)][guid]$ClientId,

[parameter(Mandatory = $true)][string]$path,

[parameter(Mandatory = $true)][string]$fileName

)

[Net.ServicePointManager]::SecurityProtocol = [Net.SecurityProtocolType]::Tls12

$authorityUrl = "https://login.microsoftonline.com/common/oauth2/authorize"

$SecurePassword = $Password | ConvertTo-SecureString -AsPlainText -Force

## load active directory client dll

$typePath = "C:\Jay\works\powershell\Microsoft.IdentityModel.Clients.ActiveDirectory.dll"

Add-Type -Path $typePath

Write-Verbose "Loaded the Microsoft.IdentityModel.Clients.ActiveDirectory.dll"

Write-Verbose "Using authority: $authorityUrl"

$authContext = New-Object -TypeName Microsoft.IdentityModel.Clients.ActiveDirectory.AuthenticationContext -ArgumentList ($authorityUrl)

$credential = New-Object -TypeName Microsoft.IdentityModel.Clients.ActiveDirectory.UserCredential -ArgumentList ($UserName, $SecurePassword)

Write-Verbose "Trying to acquire token for resource: https://analysis.windows.net/powerbi/api"

$authResult = $authContext.AcquireToken("https://analysis.windows.net/powerbi/api", $clientId, $credential)

Write-Verbose "Authentication Result retrieved for: $($authResult.UserInfo.DisplayableId)"

New-Item -path $path -Name $fileName -Value $authResult.AccessToken -ItemType file -force;

return "Successfully wrote the token to the file";

}

Tips: I've used the command [Net.ServicePointManager]::SecurityProtocol = [Net.SecurityProtocolType]::Tls12 to enforce TLS 1.2. Without it, the script fails on some older machines because they use an older TLS version.

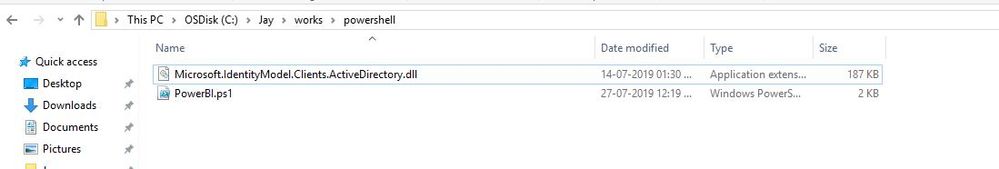

You can find the full code along with the DLL here

The path to the DLL is hardcoded in the script above, so change it to match your location.

You can find the DLL in the attachments.

Task Scheduling

Create a new task in Task Scheduler to run the PowerShell script above every hour, since the bearer token expires after one hour.

In the Action, choose PowerShell as the program and pass arguments like the ones below.

-ExecutionPolicy Bypass -WindowStyle Hidden -Command "& {. 'C:\Jay\works\powershell\PowerBI.ps1'; Get-AADToken -Username 'xxxx@xx.com' -Password 'xxxyyyzzz' -ClientId 'xxxx11-xx11-x1-x12-xxxx' -path 'C:\Jay\works\pbi\Gateway_Report' -fileName 'Bearer.txt'}"

In this command you supply the:

- username

- password

- clientid

- Path where the Bearer token file should be generated

- FileName for the Bearer token file
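
Since the token is only valid for about an hour, anything that reads Bearer.txt may want to confirm the file is recent before using it. The following is a minimal Python sketch of that check and is not part of the original setup: the function name `token_is_fresh`, the five-minute safety margin, and the use of the file's modification time as a stand-in for the token's issue time are all assumptions made for illustration.

```python
import os
import time

# Assumption: the token lives for roughly one hour, as described above.
TOKEN_LIFETIME_SECONDS = 60 * 60


def token_is_fresh(path, now=None, max_age=TOKEN_LIFETIME_SECONDS, safety_margin=300):
    """Return True if the token file exists and was written recently enough."""
    if now is None:
        now = time.time()
    try:
        modified = os.path.getmtime(path)
    except OSError:
        # A missing or unreadable file is treated as "no usable token".
        return False
    # Leave a margin so a token is not used moments before it expires.
    return (now - modified) < (max_age - safety_margin)
```

With these defaults, a token file that has not been rewritten for 55 minutes or more is reported as stale, which is also a cheap signal that the scheduled task has stopped running.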

Now we are all set to create our report in Power BI.

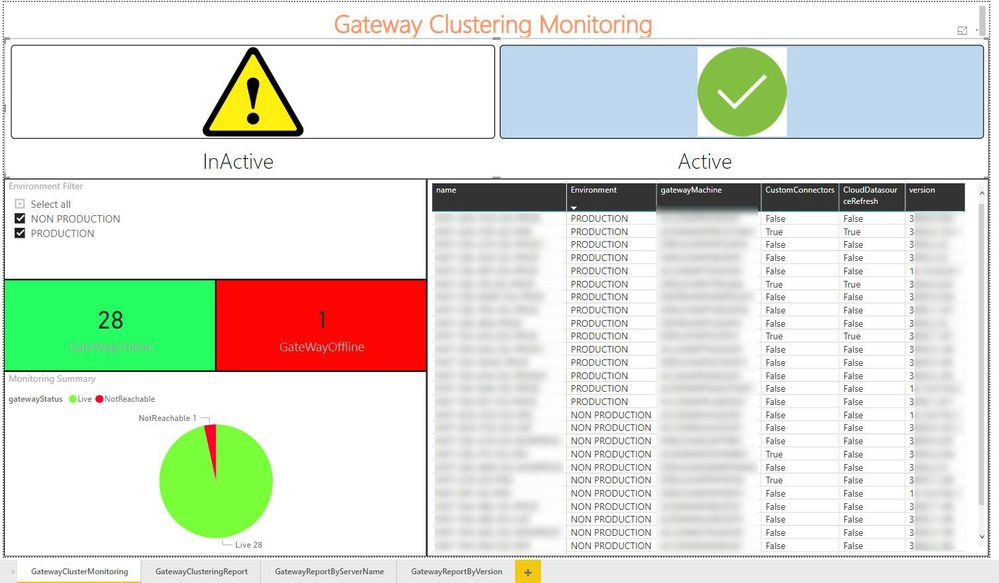

Creating Gateway Monitoring Report in PBI Desktop

This is the most interesting and important part, where we call the API directly in our PBI report. Let's get started with a blank query.

Most of the work happens in the Advanced Editor, where we define our custom M queries.

Tricks: Here is a trick. There is a special API that you won't find in the Power BI REST API documentation.

The API is

https://api.powerbi.com/v2.0/myorg/me/gatewayclusters?$expand=memberGateways&$skip=0

This API returns each main gateway cluster together with its member gateways.

Now let's continue in the M query editor in PBI Desktop. Before writing the code, let's lay out the steps needed to reach our goal:

1. We need to call the Gateway API

2. To call the API we need the bearer token, which we already have in a text file (Bearer.txt) written by the PowerShell script that Task Scheduler runs

The code for this is:

let

TokenType = "Bearer",

Token = Text.FromBinary(File.Contents("C:\Jay\works\pbi\Gateway_Report\Bearer.txt")),

Source = Json.Document(Web.Contents("https://api.powerbi.com/v2.0/myorg/me/gatewayclusters?$expand=memberGateways&$skip=0",[Headers=[Authorization=TokenType & " " & Token]])),

value = Source[value],

#"Converted to Table" = Table.FromList(value, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Expanded Column1" = Table.ExpandRecordColumn(#"Converted to Table", "Column1", {"id", "name", "dataSourceIds", "type", "options", "memberGateways"}, {"id", "name", "dataSourceIds", "type", "options", "memberGateways"}),

#"Expanded options" = Table.ExpandRecordColumn(#"Expanded Column1", "options", {"CloudDatasourceRefresh", "CustomConnectors"}, {"CloudDatasourceRefresh", "CustomConnectors"})

in

#"Expanded options"

Isn't that cool!!
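
The `Authorization` header built in the query above is simply the token type, a space, and the contents of the token file. As an illustration only, here is a small Python sketch of the same construction. `authorization_headers` is a hypothetical helper, and trimming whitespace and a byte-order mark is an extra precaution that the M query does not perform.

```python
from pathlib import Path


def authorization_headers(token_path, token_type="Bearer"):
    """Build the Authorization header from a token stored in a text file."""
    # "utf-8-sig" drops a leading byte-order mark if one is present.
    token = Path(token_path).read_text(encoding="utf-8-sig").strip()
    if not token:
        raise ValueError(f"No token found in {token_path}")
    return {"Authorization": f"{token_type} {token}"}
```

The returned dictionary plays the same role as the `Headers` record passed to `Web.Contents` in the M code.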

Now we have the full list of gateway clusters. But wait: this list only contains the gateway details, not the current gatewayStatus we are looking for!

Tricks: The Power BI Gateways API documentation wrongly states that this API returns the status; in practice it does not return the gateway status. I've confirmed this on the official Power BI community forum.

To get the current gateway status we need to call another API, Gateways - Get Gateway. Unfortunately, this API has to be called separately for each gateway; there is no bulk operation.

Let's continue coding. The step below calls the Get Gateway API for every gateway id obtained from the previous result.

#"Add Column" = Table.AddColumn(#"Expanded options", "DataFromURLsColumn", each Json.Document(Web.Contents("https://api.powerbi.com/v1.0/myorg/",[RelativePath= "gateways/" & [id] , Headers=[Authorization=TokenType & " " & Token]])))
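
Because there is no bulk endpoint, the step above makes one request per gateway id. A rough Python sketch of how those request URLs are formed is shown here; it only builds the URLs and makes no network call. The helper names and the sample ids are hypothetical, while the base URL and the `gateways/` path are taken from the M step above.

```python
from urllib.parse import quote

BASE_URL = "https://api.powerbi.com/v1.0/myorg/"


def gateway_url(gateway_id):
    """Return the Get Gateway URL for a single gateway id."""
    # Mirrors RelativePath = "gateways/" & [id] in the M step.
    return BASE_URL + "gateways/" + quote(str(gateway_id), safe="")


def gateway_urls(gateway_ids):
    """Return one URL per gateway id, preserving input order."""
    return [gateway_url(gateway_id) for gateway_id in gateway_ids]


# Made-up ids, for illustration only.
sample_ids = ["1111-aaaa", "2222-bbbb"]
for url in gateway_urls(sample_ids):
    print(url)
```

Keeping the base URL fixed and varying only the relative path is the same split the M step makes with `RelativePath`.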

Our code now looks like this:

let

TokenType = "Bearer",

Token = Text.FromBinary(File.Contents("C:\Jay\works\pbi\Gateway_Report\Bearer.txt")),

Source = Json.Document(Web.Contents("https://api.powerbi.com/v2.0/myorg/me/gatewayclusters?$expand=memberGateways&$skip=0",[Headers=[Authorization=TokenType & " " & Token]])),

value = Source[value],

#"Converted to Table" = Table.FromList(value, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Expanded Column1" = Table.ExpandRecordColumn(#"Converted to Table", "Column1", {"id", "name", "dataSourceIds", "type", "options", "memberGateways"}, {"id", "name", "dataSourceIds", "type", "options", "memberGateways"}),

#"Expanded options" = Table.ExpandRecordColumn(#"Expanded Column1", "options", {"CloudDatasourceRefresh", "CustomConnectors"}, {"CloudDatasourceRefresh", "CustomConnectors"}),

#"Add Column" = Table.AddColumn(#"Expanded options", "DataFromURLsColumn", each Json.Document(Web.Contents("https://api.powerbi.com/v1.0/myorg/",[RelativePath= "gateways/" & [id] , Headers=[Authorization=TokenType & " " & Token]])))

in

#"Add Column"

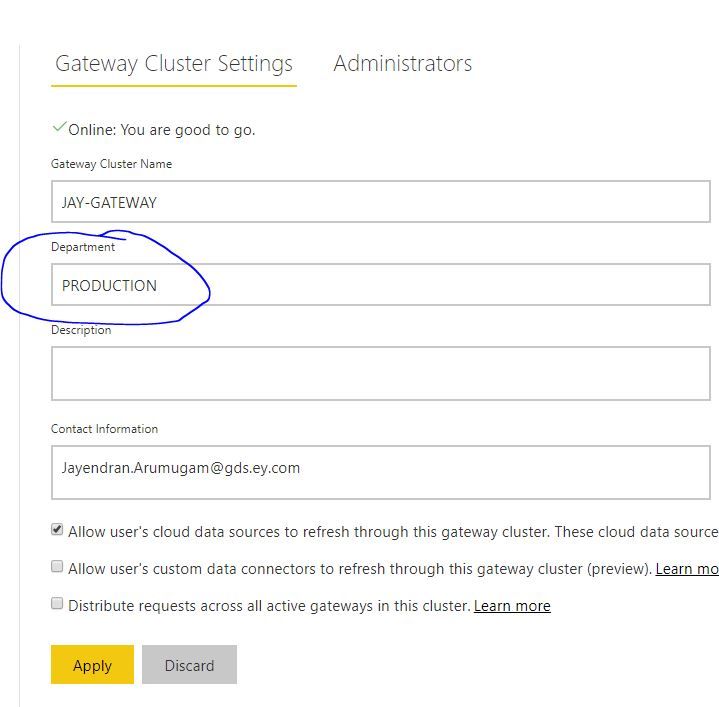

Tips: To identify the gateway environment (production or non-production), set the gatewayDepartment when configuring the gateway itself. We will use it to create filters in our report.

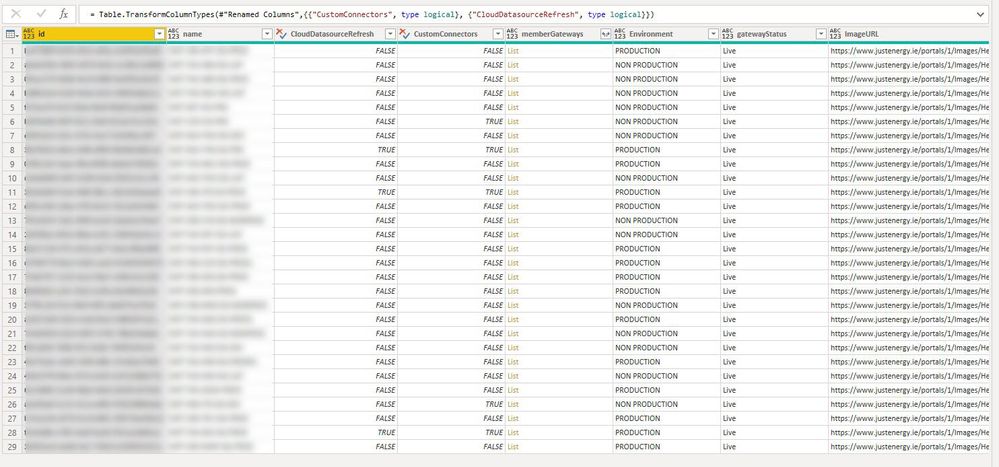

After some cleanup in the modeling, the query looks like the code below:

let

TokenType = "Bearer",

Token = Text.FromBinary(File.Contents("C:\Jay\works\pbi\Gateway_Report\Bearer.txt")),

Source = Json.Document(Web.Contents("https://api.powerbi.com/v2.0/myorg/me/gatewayclusters?$expand=memberGateways&$skip=0",[Headers=[Authorization=TokenType & " " & Token]])),

value = Source[value],

#"Converted to Table" = Table.FromList(value, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Expanded Column1" = Table.ExpandRecordColumn(#"Converted to Table", "Column1", {"id", "name", "options", "memberGateways"}, {"id", "name", "options", "memberGateways"}),

#"Expanded options" = Table.ExpandRecordColumn(#"Expanded Column1", "options", {"CloudDatasourceRefresh", "CustomConnectors"}, {"CloudDatasourceRefresh", "CustomConnectors"}),

#"Add Column" = Table.AddColumn(#"Expanded options", "DataFromURLsColumn", each Json.Document(Web.Contents("https://api.powerbi.com/v1.0/myorg/",[RelativePath= "gateways/" & [id] , Headers=[Authorization=TokenType & " " & Token]]))),

#"Expanded DataFromURLsColumn" = Table.ExpandRecordColumn(#"Add Column", "DataFromURLsColumn", {"gatewayAnnotation", "gatewayStatus"}, {"gatewayAnnotation", "gatewayStatus"}),

#"Parsed JSON" = Table.TransformColumns(#"Expanded DataFromURLsColumn",{{"gatewayAnnotation", Json.Document}}),

#"Expanded gatewayAnnotation" = Table.ExpandRecordColumn(#"Parsed JSON", "gatewayAnnotation", {"gatewayDepartment"}, {"gatewayDepartment"}),

#"Replaced Value" = Table.ReplaceValue(#"Expanded gatewayAnnotation",null,"",Replacer.ReplaceValue,{"gatewayDepartment"}),

#"Added Conditional Column" = Table.AddColumn(#"Replaced Value", "ImageURL", each if [gatewayStatus] = "NotReachable" then "https://encrypted-tbn0.gstatic.com/images?q=tbn:ANd9GcSWRknHmZCepToQHUUSlVIiNzZMEX3ALW38FYzdON9P6USruQX-BA" else "https://www.justenergy.ie/portals/1/Images/Help%20and%20Support/GreenStar_ServiceStatus_Final-03.jpg?ver=2017-09-01-144013-867"),

#"Replaced Value1" = Table.ReplaceValue(#"Added Conditional Column","","NON PRODUCTION",Replacer.ReplaceValue,{"gatewayDepartment"}),

#"Renamed Columns" = Table.RenameColumns(#"Replaced Value1",{{"gatewayDepartment", "Environment"}}),

#"Changed Type" = Table.TransformColumnTypes(#"Renamed Columns",{{"CustomConnectors", type logical}, {"CloudDatasourceRefresh", type logical}})

in

#"Changed Type"

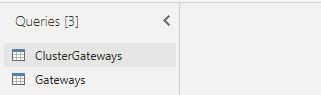

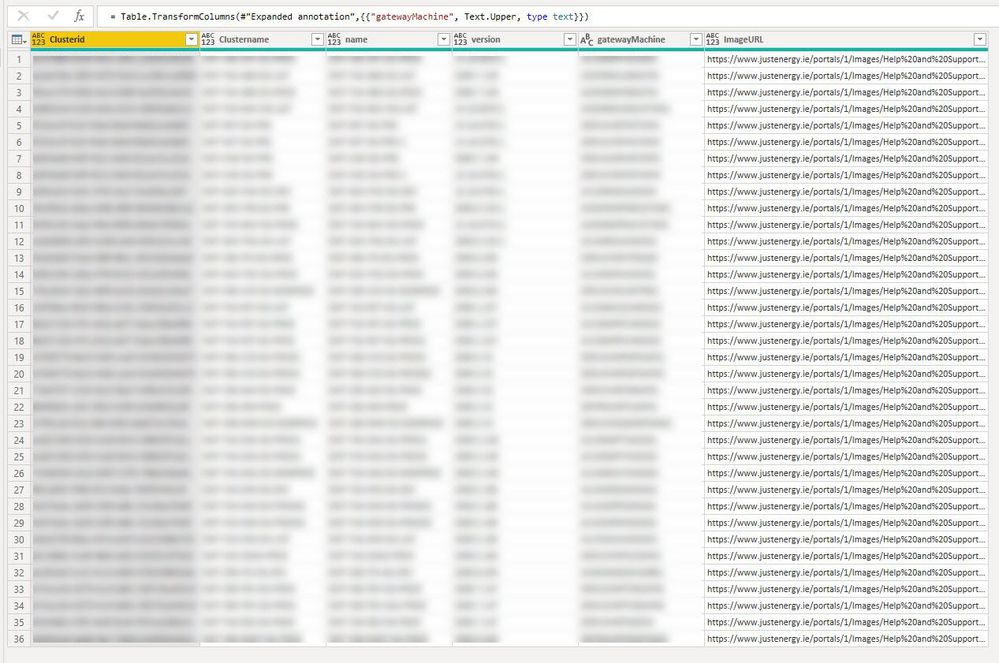
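
The cleanup steps that derive the Environment column can be summarised as: parse the `gatewayAnnotation` JSON text, read `gatewayDepartment`, and fall back to "NON PRODUCTION" when it is missing or empty. Here is a minimal Python sketch of that logic under stated assumptions: the function name and the sample annotation strings are made up, and unlike the M query it also falls back when the annotation is not valid JSON.

```python
import json

DEFAULT_ENVIRONMENT = "NON PRODUCTION"


def environment_from_annotation(annotation_text):
    """Return the gatewayDepartment from a gateway annotation, or the default."""
    if not annotation_text:
        return DEFAULT_ENVIRONMENT
    try:
        annotation = json.loads(annotation_text)
    except json.JSONDecodeError:
        # Assumption: treat unparseable annotations like missing ones.
        return DEFAULT_ENVIRONMENT
    if not isinstance(annotation, dict):
        return DEFAULT_ENVIRONMENT
    department = annotation.get("gatewayDepartment")
    return department if department else DEFAULT_ENVIRONMENT


# Hypothetical annotation strings, for illustration only.
print(environment_from_annotation('{"gatewayDepartment": "PRODUCTION"}'))
print(environment_from_annotation('{"gatewayDepartment": ""}'))
```

Collapsing null and empty values into a single default label keeps the report filter down to a small, predictable set of environment values.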

Now I'm going to group the queries based on the data. I've named this query ClusterGateways because its information is at the cluster level. Then I created another query named Gateways as a Reference to ClusterGateways; it tells us how many nested/child gateways exist within each main cluster.

Now let me do some modeling in the Gateways query with the code below.

let

Source = ClusterGateways,

#"Removed Columns" = Table.RemoveColumns(Source,{"gatewayStatus", "Environment", "CustomConnectors", "CloudDatasourceRefresh"}),

#"Renamed Columns" = Table.RenameColumns(#"Removed Columns",{{"name", "Clustername"}, {"id", "Clusterid"}}),

#"Expanded memberGateways" = Table.ExpandListColumn(#"Renamed Columns", "memberGateways"),

#"Expanded memberGateways1" = Table.ExpandRecordColumn(#"Expanded memberGateways", "memberGateways", {"name", "version", "annotation"}, {"name", "version", "annotation"}),

#"Parsed JSON" = Table.TransformColumns(#"Expanded memberGateways1",{{"annotation", Json.Document}}),

#"Expanded annotation" = Table.ExpandRecordColumn(#"Parsed JSON", "annotation", {"gatewayMachine"}, {"gatewayMachine"}),

#"Uppercased Text" = Table.TransformColumns(#"Expanded annotation",{{"gatewayMachine", Text.Upper, type text}})

in

#"Uppercased Text"
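
To make the reshaping in the Gateways query concrete, here is a small Python sketch that performs the same flattening on in-memory data: one output row per member gateway, with `gatewayMachine` read from the member's JSON `annotation` and upper-cased. The sample cluster is invented for illustration, and the field names are the ones used in the M code above. One difference to note: this sketch skips clusters that have no member gateways.

```python
import json


def flatten_member_gateways(clusters):
    """Return one row (dict) per member gateway across all clusters."""
    rows = []
    for cluster in clusters:
        for member in cluster.get("memberGateways") or []:
            # The annotation arrives as JSON text, so it is parsed first.
            annotation = json.loads(member.get("annotation") or "{}")
            machine = annotation.get("gatewayMachine")
            rows.append({
                "Clusterid": cluster.get("id"),
                "Clustername": cluster.get("name"),
                "name": member.get("name"),
                "version": member.get("version"),
                "gatewayMachine": machine.upper() if isinstance(machine, str) else None,
            })
    return rows


# Invented sample data, shaped like the fields the M code expands.
sample_clusters = [
    {
        "id": "1111-aaaa",
        "name": "Finance Cluster",
        "memberGateways": [
            {"name": "GW-01", "version": "1.0", "annotation": '{"gatewayMachine": "srv-fin-01"}'},
            {"name": "GW-02", "version": "1.0", "annotation": '{"gatewayMachine": "srv-fin-02"}'},
        ],
    }
]

for row in flatten_member_gateways(sample_clusters):
    print(row["Clustername"], row["name"], row["gatewayMachine"])
```

Repeating the cluster id and name on every member row is what lets the Gateways table relate back to ClusterGateways in the model.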

That completes the modeling part. We'll cover visualization, publishing, and configuring alerts in Flow in the next part. Stay tuned for the second part!
