Fabric Data Days starts November 4th!

Advance your Data & AI career with 50 days of live learning, dataviz contests, hands-on challenges, study groups & certifications and more!

Get registered- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Get Fabric Certified for FREE during Fabric Data Days. Don't miss your chance! Request now

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Remove Duplicates with Multiple Conditions

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Remove Duplicates with Multiple Conditions

I have a table that currently has 10.8 million rows and yesterday 11k rows were added. It is a record of inventory status for each item, in each warehouse, in each division only if that item currently has inventory available in that warehouse. Otherwise, no record is written for that item on that warehouse that day. For most purposes, the latest date for each unique combination of item and warehouse is needed. I've seen a couple of faulty approaches (remove duplicates in Power Query, filter max date on the date column*) in our existing datasets and hoping for some ideas on a better one, taking into account the size of the table.

Here's a simplified version of the data and what the end result needs to look like. The real data has many more columns and obviously, many more rows.

| Division | Warehouse | Item | Date | OH Qty | OH Value |

| 1 | A | 12345 | 8/1/2022 | 1 | 10 |

| 1 | B | 12345 | 8/1/2022 | 2 | 20 |

| 2 | C | 12345 | 8/1/2022 | 3 | 30 |

| 2 | C | 12345 | 8/10/2022 | 10 | 100 |

| 1 | A | 54321 | 8/12/2022 | 10 | 1000 |

| 1 | A | 54321 | 8/14/2022 | 1 | 100 |

| 1 | A | 54321 | 8/13/2022 | 1 | 100 |

| Division | Warehouse | Item | Date | OH Qty | OH Value |

| 1 | A | 12345 | 8/1/2022 | 1 | 10 |

| 1 | B | 12345 | 8/1/2022 | 2 | 20 |

| 2 | C | 12345 | 8/10/2022 | 10 | 100 |

| 1 | A | 54321 | 8/14/2022 | 1 | 100 |

*In case anyone is curious: according to everything I've read, Remove Duplicates will not necessarily leave the most recent record, even if the dataset is sorted. However, the other problem with 10 million records and thousands of unique item + warehouse combinations is that it seems to be very difficult computationally and generally fails to refresh when uploaded to the service. Doing the max date filter without any conditions on the item and warehouse fields appears to have selected only rows that have yesterday's date. Thus in the table above, it would only return the very last record for item 54321 in warehouse A for 8/14.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here's the best solution I've come up with. I put in a custom SQL query in the Get Data>SQL Server dialog (under Advanced), and this is the query:

select item, warehouse, max(date) as enddt FROM [database].[table] where date>= '2022-01-01' group by item, warehouse order by item, warehouse

This gives me a query that is only 3 columns, the Item, the Warehouse, and the Date. I then concatenated these into a single column using Merge Columns (Warehouse-Item-Date). In order to do this, I did have to change the Date column to Text first. I also limited the date being after 1/1/2022 in this case but it might be different in different cases.

Then in a second query, I pulled in all the columns I wanted from that table, and added a custom column that matched my merged column from the first query (Warehouse-Item-Date). Then going back to the first query, I did a Left Outer Join matching the two columns, so that I was pulling in only the matching rows from query 2. Thus I ended up with only the last entry for each item in each warehouse, and was able to pull in the additional columns.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here's the best solution I've come up with. I put in a custom SQL query in the Get Data>SQL Server dialog (under Advanced), and this is the query:

select item, warehouse, max(date) as enddt FROM [database].[table] where date>= '2022-01-01' group by item, warehouse order by item, warehouse

This gives me a query that is only 3 columns, the Item, the Warehouse, and the Date. I then concatenated these into a single column using Merge Columns (Warehouse-Item-Date). In order to do this, I did have to change the Date column to Text first. I also limited the date being after 1/1/2022 in this case but it might be different in different cases.

Then in a second query, I pulled in all the columns I wanted from that table, and added a custom column that matched my merged column from the first query (Warehouse-Item-Date). Then going back to the first query, I did a Left Outer Join matching the two columns, so that I was pulling in only the matching rows from query 2. Thus I ended up with only the last entry for each item in each warehouse, and was able to pull in the additional columns.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Try this M code

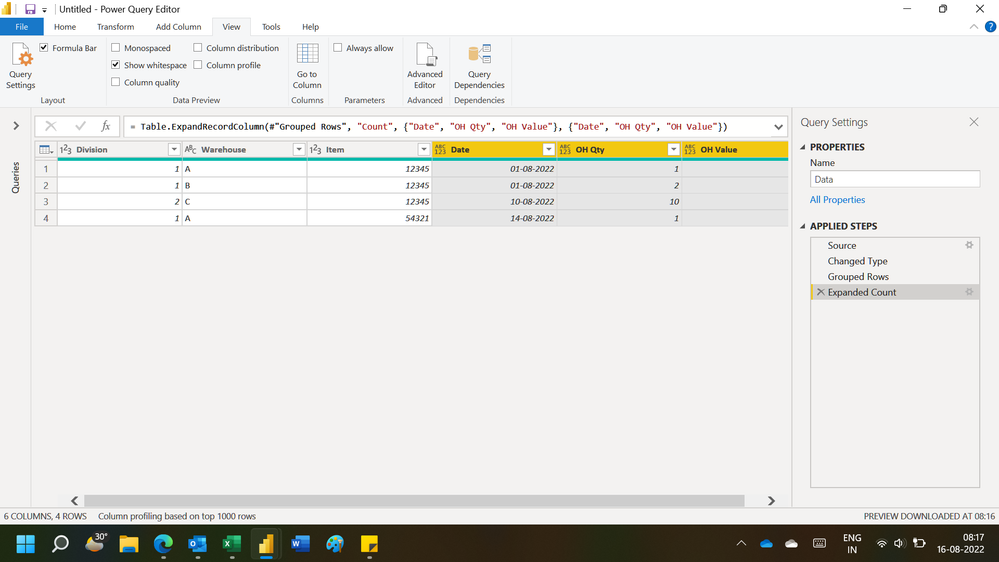

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("dc/BCcAwCAXQXTwnoF8DvbYdI2S3ztLJGpNDC42HLyIP1FpJKNHeI1Ar99U7lsxbBgM+9jC1NOURy5EpvT1jqZ5ICn+38yjvfr+0mEKmxcKG2H5vhVRXtD0=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Division = _t, Warehouse = _t, Item = _t, Date = _t, #"OH Qty" = _t, #"OH Value" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Division", Int64.Type}, {"Warehouse", type text}, {"Item", Int64.Type}, {"Date", type date}, {"OH Qty", Int64.Type}, {"OH Value", Int64.Type}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"Division", "Warehouse", "Item"}, {{"Count", each Table.Max(_,"Date")}}),

#"Expanded Count" = Table.ExpandRecordColumn(#"Grouped Rows", "Count", {"Date", "OH Qty", "OH Value"}, {"Date", "OH Qty", "OH Value"})

in

#"Expanded Count"Hope this helps.

Regards,

Ashish Mathur

http://www.ashishmathur.com

https://www.linkedin.com/in/excelenthusiasts/

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think this might work for a smaller table, but it took about 40 minutes to do the group by and I haven't yet gotten the expand part to load. Based on previous experience I think that refreshes will fail if I upload that to the service.

I am investigating using a more detailed SQL query to pull in the data from the SQL Server rather than trying to do all the transformations in Power Query. If it works I will post my solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Xaraja , for DAX solution refer to my blog

For Power Query

https://exceleratorbi.com.au/remove-duplicates-keep-last-record-power-query/

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks, I follow your blog and I have read that one a few times! I don't think it fits my situation, though, as I am not trying to sum anything. I basically need to filter so that I have unique values of the warehouse, item and date, with the filter criteria being that latest date. Once that is done, various visuals need to be able to use the columns from those rows individually, not as measures necessarily. For example, one of the columns I didn't include in my simplified example above is companyrank. All items have a companyrank, so in a table sometimes the companyrank is displayed, not as a calculation but as a piece of data (I can't recall if it's text but it mights as well be).

Also I'm not sure about the wisdom of trying to manipulate 10.8 million rows in DAX? Especially as the table is growing every day. Wouldn't that run into performance issues also? Possibly even at the moment when the end user is trying to interact with the visuals, as opposed to when I refresh the dataset, right?

Helpful resources

Fabric Data Days

Advance your Data & AI career with 50 days of live learning, contests, hands-on challenges, study groups & certifications and more!

Power BI Monthly Update - October 2025

Check out the October 2025 Power BI update to learn about new features.