Join us at FabCon Vienna from September 15-18, 2025

The ultimate Fabric, Power BI, SQL, and AI community-led learning event. Save €200 with code FABCOMM.

Get registered- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Enhance your career with this limited time 50% discount on Fabric and Power BI exams. Ends August 31st. Request your voucher.

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: Design advice needed

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Design advice needed

Hello,

Being new to Power BI, I am not sure what the optimal design for the following problem is. Any thoughts/ideas would be appreciated.

I would like to create a business user friendly data model that consists of the following tables:

1) Customers

2) Accounts

3) AccountTransactions

4) FraudCodes

Customers, Accounts and AccountTransactions are in a SQL Server database with the following relationships:

Customers 1---* Accounts 1----* AccountTransactions

FraudeCodes is an Excel file that provides the following mappping:

FraudCode 1 --- * AccountTransactions.TransactionCode

My problem is AccountTransactions contains 55 million rows without any filtering. Even if I apply date and transaction code filer, I still have 3.8 millions rows that I need to process. The table widget is already crashed with error "Can't display the visual"

Any ideas how to best model this problem?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hm, why do you want millions of records in a table visualiization?

Have you tried visualizations that aggregate the data, like bar charts?

Follow me on Twitter: https://twitter.com/AdolfoSocorro

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Our financial crime have asked for a tabular output, showing each transaction in the 1 Half of 2015. I have emailed them asking them to reconsider their requirements because even SSRS would struggle with the vast number of rows.

Being new to Power BI / Excel Data Models, I would like to understand the optimaldesign aproaches to use.

So far, I have picked up from the previous replies to my quesiton (thanks everyone):

- Use Direct Query (as opposed to import)

- Group/Aggregate the data (as opposed to large number of "atomic" rows)

Are there are any other important deisgn principles to follow?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@amir_tohidi Another option is to show aggregated visuals, (giving actual value to see things) and if they want to look at the data - have them use the "Export Data". Worth seeing if this works in the Service, that way, if they want to sit and wait for the records to load, they can.

Best case I would think is to meet them half way, set up visuals that are meaningful, slice them down to smaller chunks, and then they can export smaller pieces. Regardless of what anyone says, a report with a million rows is meaningless, there is no way they are looking at it all. If they take that and filter it down, then have them do that prior to exporting it from Power BI... Another option would be for them to Analyze in Excel off a report if they want to get more granular. (just some thoughts).

Looking for more Power BI tips, tricks & tools? Check out PowerBI.tips the site I co-own with Mike Carlo. Also, if you are near SE WI? Join our PUG Milwaukee Brew City PUG

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks Eno1978. I will discuss the various options with the users and update the thread in due couse with how we decided to overcome the issue.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is there some way that you can roll-up the AccountTransactions before it gets to this point? e.g. Can you do a Group By AccountID, Date, Transaction Type to reduce the number of raw records?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

asocorro - Only the FraudCodes data is in Excel. Everything else is in SQL Server. My problem is the large amount of SQL Server data that I need to process.

silolondon - I can't roll up the account transactions because our Financial crime team want to anlyses each and every single transaction in 1 half of 2015.

I am using 64 bit Power BI which I thought can handle large number of rows. Is that not the case?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @amir_tohidi,

I think @asocorro has suggested a nice way.

Since there are large nuymber of records in transaction table, it may be difficult to process the data in native connection mode (Import mode ) for power BI.

There is another connection mode called "Direct Query" which does not load data into power BI but just facilitates visualisation.

Processing happens at back end.

Since you have SQL server, import excel data in SQL Server.

Then Connect to SQL Server and all other tables with Direct Query.

This will help you

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi achinm45,

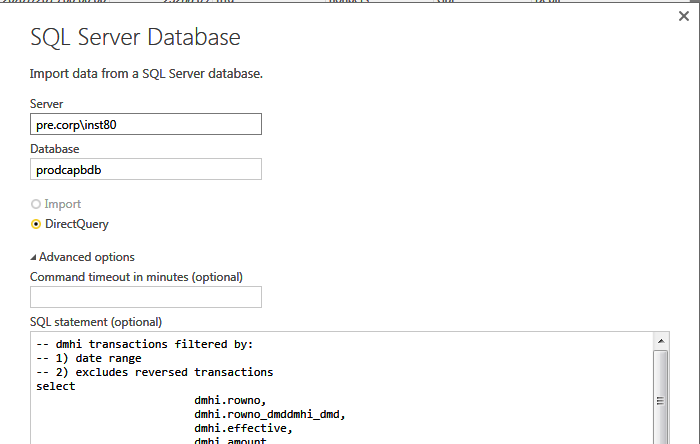

I am using DirectQuery already as I din't want to load so many rows into Power BI.

Hereis the data model (FraudCodes hasn't been added yet)

Finally, here is the error:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Have you tried using a much smaller subset of transactions to ascertain whether it's a size problem, or a setup problem?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Since you are connecting to an Excel file, I suppose you are importing the data rather than using DirectQuery mode. How about importing the Excel data to the SQL Server database and switching to DirectQuery mode?

Follow me on Twitter: https://twitter.com/AdolfoSocorro

Helpful resources

| User | Count |

|---|---|

| 78 | |

| 74 | |

| 43 | |

| 32 | |

| 28 |

| User | Count |

|---|---|

| 104 | |

| 95 | |

| 51 | |

| 50 | |

| 46 |