Parquet, ADLS Gen2, ETL, and Incremental Refresh in one Power BI Dataset


A year ago, I was developing a solution for collecting and analyzing usage data of a Power BI Premium capacity. The data included not only simple log files, but also data that I had to convert into a slowly changing dimension (SCD) type 2. Therefore, I decided on the following architecture: Azure Data Factory pipelines collect data on a daily basis, the raw data is stored in a data lake forever, and the cleansed data is then moved to a SQL Server database. Because the data is stored in SQL Server, I can use incremental refresh in the Power BI service. It works perfectly.

But times are changing, new requirements keep coming, and I have found a new, direct way to load logs straight from the data lake into a Power BI dataset. And all of that incrementally! Where I need an SCD, a SQL Server database will stay in the middle, whereas for all other data I can use the new approach.

In this article, I want to show you how to load parquet files stored in an Azure data lake directly into your Power BI dataset. It involves incremental refresh and an ETL process, too!

Checking all the ingredients

Before we start cooking, let’s check all the ingredients in our recipe.

We need Azure Blob Storage or, even better, Azure Data Lake Storage Gen2 (ADLS Gen2), which adds a hierarchical namespace. The performance benefit of the latter option is described in a great blog post written by Chris Webb.

Make sure you have sufficient rights for the data you want to access. You need at least the RBAC role Storage Blob Data Reader, or Read+Execute permissions in the ACL. For more information, please go to Access control model for Azure Data Lake Storage Gen2 | Microsoft Docs.

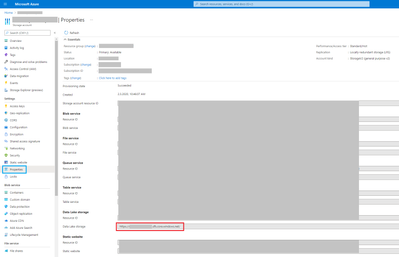

Since we are already in the Azure portal, let’s find the URL of the storage account. Go to the Settings group in the left pane and navigate to Properties. You will see a lot of URLs. Copy the link of the data lake storage.

The next ingredient is mastering incremental refresh together with ETL. If you have not read my previous deep-dive blog post Power BI Incremental Refresh with ETL yet, I highly recommend that you do so now.

And the last important ingredient is the brand-new parquet connector. I keep talking about parquet files, but we can do the same with CSV, JSON, or XML files.

Let’s start cooking!

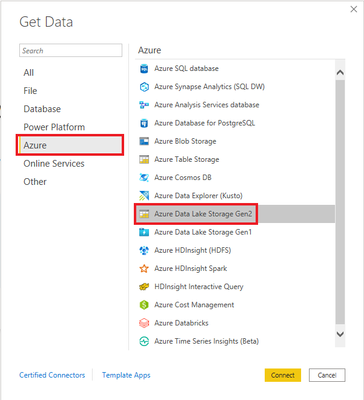

We create an empty dataset in Power BI Desktop and open Power Query Editor. Then, we want to get new data from our ADLS Gen2.

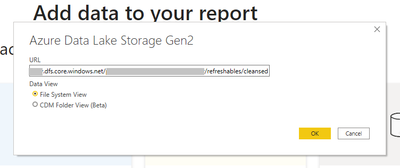

The connector expects a URL of a container in our storage, but we can also navigate to a folder and from there load files recursively. Let’s navigate to the folder refreshables/cleansed – it is the folder that contains the cleaned files we want to load into our empty dataset.

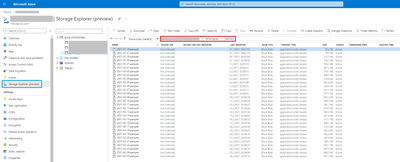

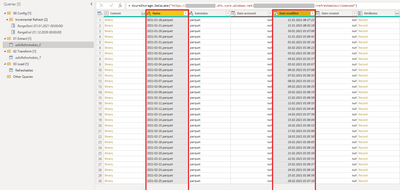

After clicking OK and then Transform data, Power Query Editor shows a preview of the files in our data lake.

We can see that there are two columns that can be used to filter by date: Name and Date modified. Which of them you use as the filter for the incremental refresh is up to you and your use case.

It would also be great to see the content of the parquet files. As you can see in the screenshot above, there is a column called Content of the data type binary. We need to interpret this data as a parquet file.

Unfortunately, there is no column transformation that converts a binary directly to parquet. Do not panic: we can still pick one of the available options and then modify the M code in the formula bar. There is a function called Parquet.Document, which converts a parquet binary into a table.

After this short detour, let’s go back to incremental refresh. As we have already seen, two columns are good candidates for the incremental refresh filter. We choose the more complicated way now, which means we take the text column Name as the filter column.

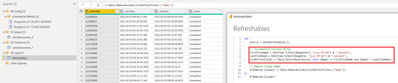

First, our query adlsRefreshables_E (E is short for extract) loads metadata from the data lake. Then, it creates two variables, FirstFileName and LastFileName, which identify the first and the last file according to the incremental refresh parameters RangeStart and RangeEnd. (Do you feel lost? Do not worry and visit my previous blog post Power BI Incremental Refresh with ETL for a deep-dive explanation.) Line 7 of the M code contains the condition for the incremental refresh. It says: load only files with names >= FirstFileName and < LastFileName. According to the official documentation, it does not matter on which side you place the equal sign, as long as it appears on only one side. Otherwise, a file sitting exactly on a boundary would be loaded into two partitions.
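The half-open condition can also be tried out outside of Power Query. The following Python snippet is only a sketch of the idea, not the M code from the screenshot. It assumes file names that start with a sortable `yyyyMMdd` date (a hypothetical naming scheme), and the helper names `file_name_bound` and `select_files` are made up for this illustration.

```python
from datetime import datetime


def file_name_bound(moment: datetime) -> str:
    """Turn a RangeStart/RangeEnd value into a comparable file-name prefix.

    Assumption: files are named like '20210224.parquet', so plain string
    comparison orders them chronologically.
    """
    return moment.strftime("%Y%m%d")


def select_files(names, range_start, range_end):
    """Keep names with first <= name < last (half-open interval)."""
    first = file_name_bound(range_start)
    last = file_name_bound(range_end)
    return [name for name in names if first <= name < last]


# Hypothetical file names, one file per day.
names = [
    "20210222.parquet",
    "20210223.parquet",
    "20210224.parquet",
    "20210225.parquet",
]

# Two adjacent daily partitions share the boundary value 2021-02-24.
day_23 = select_files(names, datetime(2021, 2, 23), datetime(2021, 2, 24))
day_24 = select_files(names, datetime(2021, 2, 24), datetime(2021, 2, 25))

print(day_23)  # ['20210223.parquet']
print(day_24)  # ['20210224.parquet']
```

Because the equal sign is on one side only, the file for the 24th lands in exactly one of the two adjacent partitions.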

The next steps are nothing spectacular. We select two columns Content and Name, parse the parquet binaries, and expand table columns.

You may have noticed in the last screenshot that the transform query is just a reference to the query adlsRefreshables_E. Yes, that is true. We do not do any additional transformations in this example, to keep the solution as simple as possible.

After the boring transformation step, the load part of ETL is fun again. We have to apply the incremental refresh filter from the extraction step once more. This repeated filter does not change the set of rows, so it looks completely useless. Why do we apply it anyway? Because if we do not, Power BI Desktop does not allow us to set up incremental refresh.

Power BI Desktop warns that it is unable to confirm whether the query can be folded. That is not bad news, because the ADLS Gen2 connector was developed by smart people. It downloads only metadata at first and loads the binaries later, when we really need them. Long live lazy evaluation!
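To make the effect of lazy evaluation more tangible, here is a toy model in Python. It is not how the connector is implemented. The class `FakeLake`, its methods, and the file names are invented for this sketch. It only shows the pattern: list cheap metadata first, filter it, and fetch content only for the rows that survive the filter.

```python
class FakeLake:
    """Stand-in for a data lake that records every content download."""

    def __init__(self, files):
        self._files = files   # maps file name -> file content (bytes)
        self.downloads = []   # names whose content was actually fetched

    def list_metadata(self):
        # Cheap call: names and sizes only, no content is transferred.
        return [{"Name": name, "Size": len(data)} for name, data in self._files.items()]

    def read_content(self, name):
        # Expensive call: one download per file.
        self.downloads.append(name)
        return self._files[name]


lake = FakeLake({
    "20210222.parquet": b"aaaa",
    "20210223.parquet": b"bbbb",
    "20210224.parquet": b"cccc",
    "20210225.parquet": b"dddd",
})

# Step 1: metadata only. Nothing has been downloaded yet.
rows = lake.list_metadata()

# Step 2: apply the incremental refresh filter to the metadata.
wanted = [row for row in rows if "20210223" <= row["Name"] < "20210225"]

# Step 3: fetch content only for the remaining rows.
contents = {row["Name"]: lake.read_content(row["Name"]) for row in wanted}

print(lake.downloads)  # ['20210223.parquet', '20210224.parquet']
```

Only two of the four files are downloaded, which is the behavior the proofs in this article check against the real service.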

Do you need proof? Here we go!

I have prepared some proof because I wanted to see with my own eyes that it works exactly the way I expected.

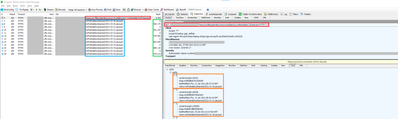

Proof nr. 1: Fiddler

The first thing to point out is that Power BI downloads only metadata at first. That is the request highlighted in red at the top of all HTTP requests. It asks for all files in the folder refreshables/cleansed recursively. The response is an array of paths (see the boxes highlighted in orange). Each entry contains the size, the name, and the last modified timestamp of a file. This is the same data we saw at the beginning of this article in Power Query Editor.

A little later, when the Power Query engine needs the content of the files, it downloads them one by one (see the blue box). There are always two requests per file: the first one is a HEAD request, the second one is a GET request. You can also tell them apart by the size of the HTTP response. HEAD responses have a length of 0 bytes, while GET responses contain data in the vast majority of cases. You can see that in the green box.

Proof nr. 2: Refresh in Power BI Desktop

If you do not have Fiddler and you want to see if your filter works well, you can observe the amount of data downloaded from your data lake in Power BI Desktop.

The first animation shows all data being downloaded without any filter.

The second animation shows the download with the filter applied. It proves that only a subset of the files is downloaded, not all of them.

Proof nr. 3: Partitions

The last check examines the incremental refresh in the Power BI service. I want to see that the model contains partitions that were created at different timestamps.

We have configured the incremental refresh so that it refreshes only the last two whole days. This means the last two partitions should show different timestamps than the previous ones. Let’s check it.

First, we need to refresh our dataset twice in the Power BI service. Please note the approximate timestamps of the refreshes: 15:35 and 15:37 in my local time zone. Subtract one hour to get UTC.

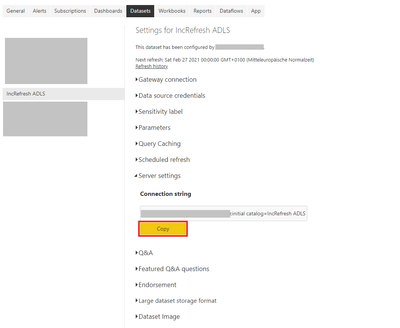

Then, we go to the settings of our dataset in the Power BI service and copy the connection string. You can do that by clicking the Copy button.

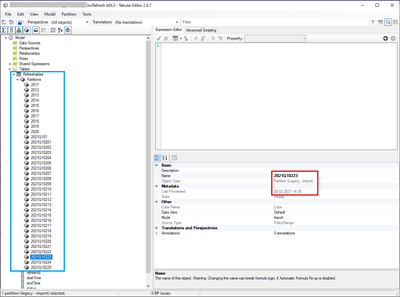

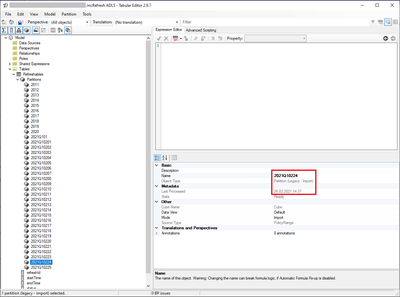

We open Tabular Editor and click Connect to Tabular Server in the menu. We paste the connection string copied in the previous step into the Server field and click OK. Tabular Editor connects to our dataset and shows us its model. We navigate to the table Refreshables and expand its partitions.

All partitions were initially created at approximately 14:35 UTC. This is the timestamp of our first refresh of the dataset.

But the last screenshot of this article shows that the partition 2021Q10224 was created approximately two minutes later, exactly as we expected. Only the last two partitions were processed again in the second refresh.
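The partition name 2021Q10224 can be read as year, quarter, month, and day. The Python sketch below rebuilds such names and lists which daily partitions a policy of "the last two whole days" would touch. The naming pattern is inferred from the single partition name shown above. The refresh date of 25 February 2021, the exclusion of the current day, and both function names are assumptions made for this illustration.

```python
from datetime import date, timedelta


def daily_partition_name(day: date) -> str:
    """Build a name like '2021Q10224': year, 'Q' + quarter, month, day."""
    quarter = (day.month - 1) // 3 + 1
    return f"{day.year}Q{quarter}{day.month:02d}{day.day:02d}"


def partitions_to_refresh(today: date, days: int = 2):
    """Names of the last `days` whole days before `today`.

    Assumption: the current day is incomplete and therefore excluded.
    """
    return [
        daily_partition_name(today - timedelta(days=offset))
        for offset in range(days, 0, -1)
    ]


print(daily_partition_name(date(2021, 2, 24)))    # 2021Q10224
print(partitions_to_refresh(date(2021, 2, 25)))   # ['2021Q10223', '2021Q10224']
```

Under these assumptions, a second refresh on the same day would reprocess only these two partitions and leave all older ones with their original timestamp.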

A short conclusion

That is all for today. Now you know how to combine parquet, ADLS Gen2, ETL, and an incremental refresh in one Power BI dataset. If you have any questions, please let me know down in the comments.
