large datasets - incremental refresh

I was wondering: if I have a P1 capacity and set up incremental refresh, can the total dataset size grow beyond the 25 GB maximum as long as the incremental refresh itself does not consume more than 25 GB?

Is there no upper limit then?

Or do you need to move to a higher capacity once your dataset reaches 25 GB?

Hey @jessegorter ,

it's simply not possible for a dataset to outgrow the available memory of your P1 capacity.

The table provided in the latest post by @solvisig indicates a 400 GB dataset size limit. This is absolutely true, but only for a P5 capacity.

The article What is Power BI Premium Gen2? - Power BI | Microsoft Docs shows a table with the dataset size limits per capacity.

I assume that you have switched your P1 capacity to the new Gen 2 architecture (simply by toggling a button). One of the benefits of Gen 2 is that each artifact can grow to the available memory.

Regarding your initial question about your P1 capacity: the dataset cannot outgrow the available memory.

Memory is of course not the same as storage. Storage is 100 TB per capacity.

Sometimes I get asked why there is so much storage compared to memory.

The answer is simple: the underlying assumption of Power BI Premium (Gen 1 and Gen 2) is that there are many datasets, not just one. Power BI juggles the datasets that are loaded into the available memory.
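As a rough illustration of the per-capacity limit discussed above, the lookup can be sketched in Python. Only the P1 (25 GB) and P5 (400 GB) figures appear in this thread; the P2 to P4 values are assumptions taken from memory of the linked Premium documentation table and should be checked against it. The function name is hypothetical.

```python
# Per-dataset memory limits in GB.
# P1 and P5 are stated in this thread; P2-P4 are ASSUMED values
# and should be verified against the Premium documentation table.
MAX_DATASET_MEMORY_GB = {"P1": 25, "P2": 50, "P3": 100, "P4": 200, "P5": 400}


def smallest_capacity_for(dataset_gb):
    """Return the smallest SKU whose memory limit can hold the dataset.

    Returns None when the dataset exceeds every listed limit.
    Hypothetical helper for illustration only.
    """
    # Dicts preserve insertion order, so SKUs are checked smallest first.
    for sku, limit_gb in MAX_DATASET_MEMORY_GB.items():
        if dataset_gb <= limit_gb:
            return sku
    return None


if __name__ == "__main__":
    for size_gb in (20, 30, 400, 401):
        print(size_gb, "GB ->", smallest_capacity_for(size_gb))
```

This only compares the in-memory dataset size against the limit; it says nothing about the extra memory a refresh may need on top of that.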

Regards,

Tom

Did I answer your question? Mark my post as a solution, this will help others!

Proud to be a Super User!

I accept Kudos 😉

Hamburg, Germany

Hey @jessegorter,

it's difficult to estimate the memory required for a fact table with a billion rows because of all the compression that happens in the in-memory, column-oriented world of the Tabular model. This article will get you started: Inside VertiPaq - Compress for success - Data Mozart (data-mozart.com)

If the fact table originates from a relational source, it likely has a column holding a unique identifier for each row. Most of the time this unique identifier is of no use, but it can add a tremendous memory footprint inside Tabular models.

The most problematic issue with large datasets is the initial load. I doubt that a fact table with a billion (10^9) rows requires a P5.

Fact tables with that number of rows require some thinking, e.g. consider using measures instead of pre-computed columns, because pre-computed columns can create a lot of unique values. These columns will burn your memory in seconds 🙂
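To make the cardinality point concrete, here is a deliberately simplified Python sketch of dictionary encoding. It is not how VertiPaq actually sizes a column (the real engine also applies value encoding, run-length encoding, and other optimizations), and the function name and the 8-bytes-per-value figure are assumptions chosen only for illustration.

```python
import math


def estimate_column_bytes(rows, distinct_values, bytes_per_value=8):
    """Rough size of a dictionary-encoded column, in bytes.

    ASSUMPTION: each row stores an index of ceil(log2(distinct)) bits,
    and the dictionary stores every distinct value once at
    bytes_per_value bytes. Real VertiPaq compression differs.
    """
    bits_per_row = max(1, math.ceil(math.log2(max(distinct_values, 2))))
    index_bytes = rows * bits_per_row / 8
    dictionary_bytes = distinct_values * bytes_per_value
    return index_bytes + dictionary_bytes


if __name__ == "__main__":
    rows = 10**9
    # A unique identifier: one distinct value per row.
    surrogate_key = estimate_column_bytes(rows, distinct_values=rows)
    # A low-cardinality column, e.g. 100 distinct categories.
    category = estimate_column_bytes(rows, distinct_values=100)
    print(f"unique id column: {surrogate_key / 1e9:.2f} GB")
    print(f"category column:  {category / 1e9:.2f} GB")
```

Under these assumptions the unique identifier column comes out more than ten times larger than the low-cardinality column, which is the effect described above.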

Good luck,

Tom

Thanks all! I didn't know the surrogate key could add such a footprint.

Having said that, the DirectQuery query generated by Power BI didn't seem so smart either: for a distinct count in DAX, it just sent a "select 2 billion rows from sql table" query 🙂

Is there a way to influence the generated DirectQuery query from the DAX?

My understanding is this:

- Your dataset can grow beyond 25 GB.

- Incremental refresh size depends on your capacity settings. If memory consumption during refresh reaches about half of your capacity, you can start to see performance issues and should therefore scale out the capacity.

- The dataset upper limit is 400 GB.

- There is no need to move to a higher capacity when your dataset grows beyond 25 GB, as long as the incremental refresh partitions are not consuming that much memory and causing performance issues.

Hey @solvisig ,

unfortunately the dataset size cannot outgrow the available memory of the capacity; for a P1 that is 25 GB.

The article https://docs.microsoft.com/en-us/power-bi/enterprise/service-premium-large-models has a Considerations and limitations section. Unfortunately this is not explicitly stated there, but bullet point 5 is relevant here (in addition to my personal experience).

Regards,

Tom

"Refreshing large datasets: Datasets that are close to half the size of the capacity size (for example, a 12-GB dataset on a 25-GB capacity size) may exceed the available memory during refreshes. Using the enhanced refresh REST API or the XMLA endpoint, you can perform fine grained data refreshes, so that the memory needed by the refresh can be minimized to fit within your capacity's size."

I don't think this is referring to storage. Only memory on the capacity processing the dataset.

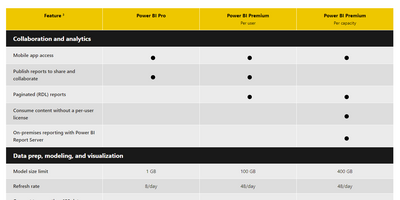

On the subscription comparison table you can see the model size limit(dataset size limit)
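The fine-grained refresh mentioned in the quoted documentation can be sketched as a request to the enhanced refresh REST API that targets individual partitions. This is a minimal sketch that only builds the URL and JSON body: the workspace ID, dataset ID, table name, and partition names are placeholders, the helper name is hypothetical, and sending the request would additionally need an access token, which is left out here.

```python
import json


def build_refresh_request(group_id, dataset_id, table, partitions):
    """Build the URL and JSON body for a partition-level refresh.

    Hypothetical helper: it does not send anything. All identifiers
    passed in are placeholders supplied by the caller.
    """
    url = (
        "https://api.powerbi.com/v1.0/myorg/"
        f"groups/{group_id}/datasets/{dataset_id}/refreshes"
    )
    body = {
        "type": "full",
        "commitMode": "transactional",
        # Refreshing one partition at a time keeps peak memory lower.
        "maxParallelism": 1,
        "objects": [{"table": table, "partition": p} for p in partitions],
    }
    return url, json.dumps(body)


if __name__ == "__main__":
    url, body = build_refresh_request(
        "my-workspace-id", "my-dataset-id", "Sales", ["2024Q1", "2024Q2"]
    )
    print(url)
    print(body)
```

Limiting the request to a few partitions and a low `maxParallelism` is one way to keep the memory needed by a refresh within the capacity's size, as the documentation excerpt suggests.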

Thank you!

It makes sense that it cannot grow beyond the capacity limit; I was confused by some of the documentation. I had a large fact table of around 1 billion rows, and it seemed I could not even fit that into a P5 without incremental refresh.

Hey @jessegorter ,

the dataset cannot outgrow the available memory. This article provides a table with the limits that apply to all capacities: What is Microsoft Power BI Premium? - Power BI | Microsoft Docs

Regards,

Tom

The OP is referring to the large dataset format:

https://docs.microsoft.com/en-us/power-bi/enterprise/service-premium-large-models

Hey @jessegorter ,

as @otravers already mentioned, the large dataset format lets the dataset size limit grow to the size of the capacity. For this reason I adjusted my initial reply: I changed "... dataset size limit ..." to "... available memory ...".

Regards,

Tom
