Why use Dataflows in Deployment Pipelines?

Good afternoon,

I have a set of workspaces that I created to house my dataflows: one each for dev, test, and prod. Is there any reason to put these dataflows into a deployment pipeline? I wouldn't want a deployment to overwrite my dataflows, so what is the purpose?

For example, my Dev and Test workspaces point to my Dev database, and my Prod workspace points to my Prod database. If the workspaces were in a deployment pipeline, wouldn't my Prod workspace just get overwritten with the Dev configuration?

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

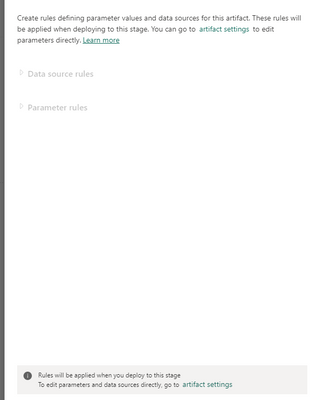

In a deployment pipeline, you can set deployment rules for the data source and parameters of each dataset. That way, when you deploy from test to pre-prod to prod, the connection string for the database connection can change at each stage instead of being copied over from the previous one.
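To make the idea concrete, here is a rough sketch in plain Python of how per-stage rules keep Prod pointed at the Prod database even though the definition is copied forward. It is only a mental model, not the Power BI API: `dev_dataflow`, `stage_rules`, `deploy`, and the server and database names are all made up for illustration.

```python
from copy import deepcopy

# Hypothetical, simplified stand-in for a dataflow definition (not a Power BI object).
dev_dataflow = {
    "name": "SalesDataflow",
    "parameters": {"ServerName": "dev-sql.example.com", "DatabaseName": "SalesDev"},
    "queries": ["Customers", "Orders"],
}

# Hypothetical per-stage rules: parameter values applied on top of whatever is deployed.
# Test has no rules, so it keeps using the Dev database, as in the question above.
stage_rules = {
    "test": {},
    "prod": {"ServerName": "prod-sql.example.com", "DatabaseName": "SalesProd"},
}

def deploy(source, target_stage, rules):
    """Copy the definition to the target stage, then apply that stage's rules."""
    deployed = deepcopy(source)  # the source stage is left untouched
    deployed["parameters"].update(rules.get(target_stage, {}))
    return deployed

test_dataflow = deploy(dev_dataflow, "test", stage_rules)
prod_dataflow = deploy(test_dataflow, "prod", stage_rules)

print(test_dataflow["parameters"]["DatabaseName"])
print(prod_dataflow["parameters"]["DatabaseName"])
```

The queries travel from stage to stage unchanged, while the connection values come from the rules of the target stage, so a deployment does not drag the Dev connection into Prod.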

How would you actually go about doing this? When I look in the deployment rules, the "Data Source Rules" are greyed out, so it's a bit confusing to me. It says I can go to "artifact settings" to change the data source settings, but when I go there, I don't see an option to change the actual data source.

Thank you, I'm going through that process and I do see those options. It looks like it's asking me to modify the settings from the artifact settings. I will go through this process and see how it works. Thanks!
