
Why the need for Dataflows and Datamarts when Semantic Models can do all of this already??

This might seem like a basic question, but I am curious about when to use Power BI dataflows, datamarts, or just stick with the semantic model by itself. For the last eight years I have been developing Power BI dashboards and have had no issue building everything I need with Power BI Desktop and publishing it to our Power Platform. Inside my semantic model (or "tabular data model") I had the creative freedom to build out all the tables needed for my model. Within each table in Power BI Desktop I could use Power Query to connect to and transform the data, and then model it with relationships in my dataset. So the semantic model I have been creating for years already had dataflow-style Power Query capabilities built in, along with the ability to create tables. I never needed a separate dataflow outside of my semantic model (in the cloud) to prep the data, since all of my prep work was done inside the semantic model, behind the scenes of each table in the .pbix file.

Since upgrading to Premium capacity I can now see dataflow and datamart options. Why would I need a dataflow when Power Query is already baked into the semantic model/dataset, and why would I need a datamart when I am already querying disparate data sources into the tables of my tabular data model? In other words, it seems like duplicate work to build a dataflow outside of my semantic model, only to feed a datamart that then pushes to a semantic model, when I already have ETL and table-creation capability within the semantic model by itself.

Please help explain the differences and why I would need a dataflow or a datamart when, for years, I have already had these tools as part of the semantic model. I also used to develop tabular data models in Visual Studio and deploy them to Analysis Services. Even in Visual Studio I could use Power Query, and I never needed a separate ETL solution because I was able to "prep" my data inside the semantic model (the .bim file) and could use Tabular Editor to connect and update the metadata. In addition, now that my semantic model is hosted in a Premium workspace, other users can use my workspace URL to connect to the semantic model through an Analysis Services connection and pull in the tables they need for other reporting.

I am just curious where a dataflow by itself or a datamart by itself makes sense, since both seem to already exist in semantic model development within Power BI Desktop. Power Query is already accessible from Power BI Desktop, so why would I need to create a dataflow outside of my tabular data model when that feature is already built into the application?

Given that I am just building data models and dashboards in Power BI Desktop, does it ever make sense to use a datamart or a cloud dataflow to pull this information in, when the data sources I currently connect to are a mix of on-premises systems, cloud-based systems, and flat files?

Does it make a difference, or is it best practice (and faster) to do everything I described a different way? Why use datamarts at all if I can prep and store everything in a semantic model, OR EVEN prep and transform everything in a dataflow and connect the dataflow directly to the semantic model without ever needing a datamart as the middleman? Any thoughts, recommendations, or best-practice tips would be greatly appreciated.

Thanks!

T


Hey @OptalyticBI,

You will not miss "best practices" if you stick to your working method. You extract, transform, and load data, then you create your model, and finally you visualize the data and share the report with your colleagues. In our organization there are many teams developing Power BI solutions that target large audiences, and many teams creating apps for their departments or sections; we have ~2.4k Pro licences installed.

Regarding dataflows

We recommend that everyone who asks (not everyone does, and no one has to) use dataflows. The reasoning is the different compute architecture: dataflows perform much faster than Power Query queries inside the semantic model. With every compute second saved we save money, because we do not have to upgrade our capacities, at least not today.

Regarding datamarts

Currently we do not use datamarts, and most probably we never will. With the advent of Microsoft Fabric there are other ways to share "raw" data (not the semantic model and not the data visualizations) with other teams or external guest users. A datamart, or a SQL endpoint as we now call it with Microsoft Fabric, makes data more accessible: it can be shared with a larger audience, because the lingua franca of data is SQL, not Python, and "unfortunately" not DAX.
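To illustrate the point about SQL as a lingua franca, here is a minimal sketch, assuming an in-memory SQLite database as a stand-in for a shared SQL endpoint (a real Fabric SQL endpoint would be reached through a SQL Server client such as SSMS, not `sqlite3`). The `sales` table and its columns are hypothetical; the point is only that a consumer who knows SQL, but neither DAX nor Power Query, can aggregate the shared data.

```python
import sqlite3


def build_shared_store():
    """Hypothetical stand-in for a shared SQL endpoint: an in-memory SQLite DB."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales (region, amount) VALUES (?, ?)",
        [("North", 120.0), ("South", 80.0), ("North", 30.0)],
    )
    conn.commit()
    return conn


def total_by_region(conn):
    # Plain SQL: the kind of query a downstream team could run without DAX.
    return conn.execute(
        "SELECT region, SUM(amount) AS total FROM sales "
        "GROUP BY region ORDER BY region"
    ).fetchall()


conn = build_shared_store()
print(total_by_region(conn))  # [('North', 150.0), ('South', 80.0)]
```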

From my perspective, you do not need to change your working method, but you have to keep an eye on

- resource consumption

- the need to share data assets between teams and/or external users

Hopefully, this helps to make up your mind.

Regards,

Tom

Did I answer your question? Mark my post as a solution, this will help others!

Proud to be a Super User!

I accept Kudos 😉

Hamburg, Germany


Like you, I was enjoying just using a semantic model as my source of truth, and with all users in our organisation having Pro licences, sharing data and reports was a doddle.

But over time, as my semantic models grew, I noticed that performance was tanking. That's when I discovered that when you reference tables in the semantic model (for example, to aggregate or join/merge), Power Query does not use any cached form of the data but re-extracts everything from the database as the source of the aggregation or join/merge. It's not very intuitive that it should do that, but it does explain the poor performance of semantic models that do that sort of thing.

The recommended alternative is dataflows, although some of their features (such as the enhanced compute engine) need Premium Per User (PPU) or Premium capacity licences, with an associated cost. Dataflows extract the data and store it in the cloud in Power BI, and semantic models can then use it more quickly than going to the database, although they will still re-extract from those dataflows multiple times when you do any aggregates or merges.
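The behaviour described in the two paragraphs above can be sketched as a toy simulation. This is a hypothetical Python model, not Power Query itself: each query is a plain function, and a query that references another one re-runs it because nothing is cached between queries. It counts round trips in two scenarios: the semantic model reading the database directly, and the model reading a copy staged once by a dataflow.

```python
from collections import Counter

# Hypothetical simulation, not Power Query: counts how often each source is hit.
hits = Counter()


def database():
    hits["database"] += 1  # one round trip to the source system
    return [("A", 10), ("B", 5), ("A", 7)]


def staged_copy(storage):
    hits["staged"] += 1  # one read of the dataflow's stored output
    return storage["base"]


def refresh_model(source):
    """Evaluate a base query plus two queries that reference it."""
    base = source()  # base table
    totals = {}
    for key, value in source():  # aggregation re-runs the source
        totals[key] = totals.get(key, 0) + value
    names = {"A": "Alpha", "B": "Beta"}
    merged = [(names[k], v) for k, v in source()]  # merge re-runs it again
    return base, totals, merged


# Scenario 1: the semantic model reads the database directly.
hits.clear()
refresh_model(database)
direct = dict(hits)  # {'database': 3}

# Scenario 2: a dataflow extracts once; the model reads the staged copy.
hits.clear()
storage = {"base": database()}  # dataflow refresh: one database hit
refresh_model(lambda: staged_copy(storage))
staged = dict(hits)  # {'database': 1, 'staged': 3}
print(direct, staged)
```

The staged copy still gets read three times, matching the remark that the model re-extracts from the dataflow for each aggregate or merge; what changes is that the source database is hit only once.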

It doesn't look like there is any good way to short-circuit that either. As soon as you want to do something properly useful at a large enterprise level, the licence levels and associated costs expand.

😞

I haven't worked with data marts, so I am afraid I can't comment on those.

Regards,

K.


Hi @OptalyticBI,

Another benefit of dataflows is that they use Power BI service credentials to connect to your data sources. This can be critical in some configurations: for example, user credentials might work from Power BI Desktop but not from the Power BI service, in which case the dataset refresh fails.


Much appreciated. I figured that was the case, because I could not see the value of a datamart if I can connect to everything in the Power Platform with dataflows. My semantic model can connect directly to a dataflow in Power BI, and an Excel file can connect to dataflows too. So a datamart does not serve any new purpose, other than when I need to share information with developers who like to use SQL Server Management Studio and need a SQL connection to query the data. The only value I see in dataflows is if I need to schedule different data calls at different times of the day, independently of each other. Otherwise, it makes the most sense to build and connect everything only in the semantic model if I am pulling everything together at once. Thanks!
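The scheduling point can be illustrated with a small sketch. The dataflow names and refresh times below are hypothetical, and this is plain Python rather than any Power BI API: it only shows that separate dataflows can each refresh on their own timetable, whereas a single semantic model refresh pulls every source at once.

```python
from datetime import time

# Hypothetical per-dataflow refresh schedules (names and times are made up).
SCHEDULES = {
    "erp_dataflow": [time(6, 0), time(18, 0)],
    "crm_dataflow": [time(7, 30)],
    "flat_files_dataflow": [time(23, 0)],
}


def due_at(moment, schedules=SCHEDULES):
    """Return, sorted by name, the dataflows scheduled to refresh at `moment`."""
    return sorted(name for name, times in schedules.items() if moment in times)


def semantic_model_refresh(schedules=SCHEDULES):
    # A single semantic model refresh, by contrast, touches every source together.
    return sorted(schedules)


print(due_at(time(6, 0)))        # ['erp_dataflow']
print(due_at(time(12, 0)))       # []
print(semantic_model_refresh())  # ['crm_dataflow', 'erp_dataflow', 'flat_files_dataflow']
```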


Hi @OptalyticBI

Based on your description, it sounds like you are the only developer in your organization building the semantic models (the source of truth) that other developers reuse. If so, keep up the good work. Dataflows wouldn't have been a good option anyway before you upgraded to Premium capacity, because with Pro licences (shared workspaces) refresh is not possible for 10+ dataflows across workspaces. Now that you have moved to Premium capacity, though, you might reconsider other alternatives and scenarios.

In addition to what you mentioned, dataflows are stored in ADLS Gen2, which is accessible to the whole Power Platform family if you want to build apps, flows, and AI capabilities.

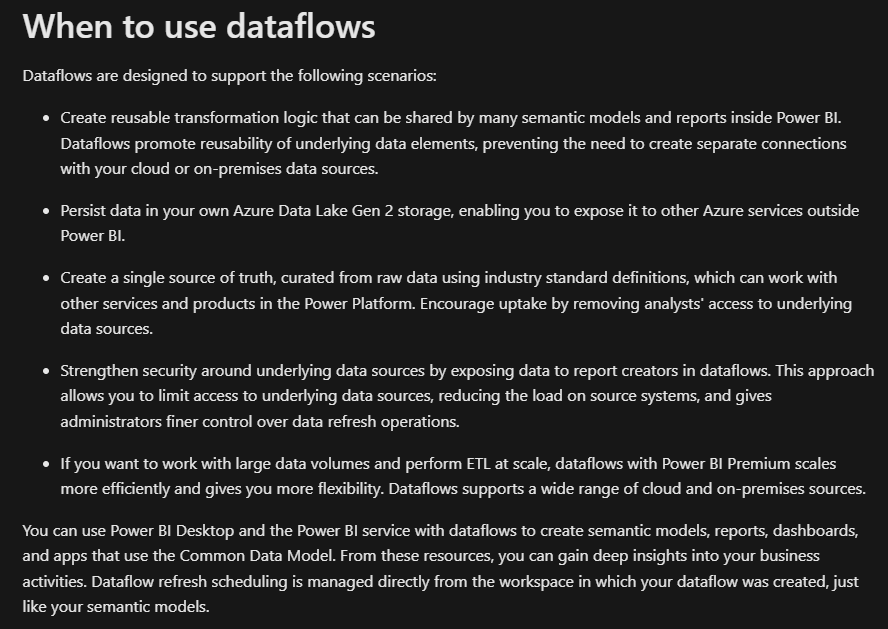

Here are more details:

https://learn.microsoft.com/en-us/power-bi/transform-model/dataflows/dataflows-introduction-self-ser...

Regards

Amine Jerbi

If I answered your question, please mark this thread as accepted

