Issue on "Large dataset storage format" setting behavior in Power BI workspaces

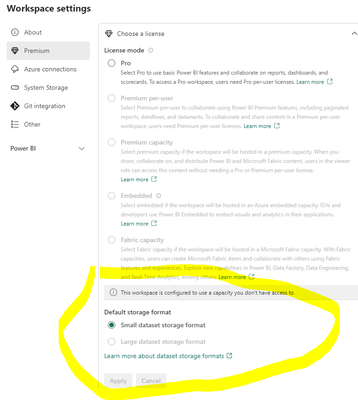

I have encountered an error during data refresh when using the "Large dataset storage format" setting in my Power BI workspace, which was set to "Small dataset" by default. I would like to seek clarification regarding this issue.

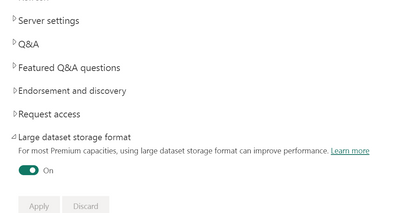

In my workspace, the default dataset storage format is set to "Small dataset," and I do not have the permissions to change this setting. However, I have enabled the "Large dataset storage format" setting at the individual dataset level to accommodate larger datasets. Despite this configuration, the data refresh consistently fails with an out-of-memory error.

Screenshots of the workspace configuration and of the dataset-level setting are included in this post.

I am uncertain whether the error is a result of the workspace's default "Small dataset" setting.

Thank you for your assistance.




Hi @MAdam90,

The out-of-memory error you received indicates that the refresh requires more memory than your capacity can provide.

It's possible that the workspace's "Small dataset" setting is not the direct cause of the issue, and that the real constraint is the memory limit of your Power BI capacity.

In the meantime, you can try using the XMLA endpoint to refresh the partitions one by one, instead of having multiple partitions refreshed in parallel, which is what happens when the Power BI Service runs the refresh. If refreshing a single partition still gives the error, the capacity needs to be increased in size to fit the refresh.

See: Large datasets in Power BI Premium - Power BI | Microsoft Learn
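As a rough illustration of the one-partition-at-a-time idea, here is a minimal sketch that builds one TMSL `refresh` command per partition, so that each can be submitted separately over the XMLA endpoint (for example from an XMLA query window in SSMS). The helper name `tmsl_refresh_commands` and the database, table, and partition names are hypothetical placeholders, not taken from this thread. The sketch only generates the JSON scripts and does not connect to anything.

```python
import json


def tmsl_refresh_commands(database, table, partitions, refresh_type="full"):
    """Return one TMSL refresh script (as a JSON string) per partition.

    Running the scripts one after another keeps only a single partition
    in flight at a time, instead of refreshing several in parallel.
    """
    commands = []
    for partition in partitions:
        command = {
            "refresh": {
                "type": refresh_type,
                "objects": [
                    {
                        "database": database,
                        "table": table,
                        "partition": partition,
                    }
                ],
            }
        }
        commands.append(json.dumps(command, indent=2))
    return commands


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    for script in tmsl_refresh_commands("SalesModel", "Sales", ["Sales2022", "Sales2023"]):
        print(script)
```

If even a single-partition script fails with the same memory error, that supports the conclusion above that the capacity itself is too small for the refresh.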

Best Regards,

Neeko Tang

If this post helps, please consider accepting it as the solution to help other members find it more quickly.


Hello @Anonymous, XMLA endpoints for partitioning may not be applicable in my case, as my data is stored as CSV files in Azure, and CSV files don't natively support partitioning. XMLA is more suitable for Power BI datasets directly connected to a database.

Regards,


Hi @MAdam90,

Please consider these other methods:

(1) Use the enhanced refresh REST API to perform fine-grained data refreshes, so that the memory needed by the refresh can be kept within your capacity's size.

(2) Optimize the data model by removing unnecessary columns, reducing the number of calculated columns, and using efficient data types. This reduces the memory footprint of the dataset.

(3) Refresh the data incrementally. You can configure Power BI to refresh only a subset of the data, such as the latest or changed data, rather than the entire dataset. This reduces the memory required during the refresh.
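For option (1), a minimal sketch of what an enhanced refresh call might look like, using only the Python standard library. It builds a POST request to the dataset `refreshes` endpoint with a body that limits the refresh to selected tables and sets `maxParallelism` to 1. The function name, IDs, token, and table names are hypothetical placeholders, and obtaining the Azure AD access token is assumed to happen elsewhere. The request is only constructed here, not sent.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_enhanced_refresh_request(group_id, dataset_id, access_token,
                                   objects, max_parallelism=1):
    """Build (but do not send) an enhanced refresh request.

    `objects` is a list such as [{"table": "Sales"}] or
    [{"table": "Sales", "partition": "Sales2023"}]; only those objects
    are refreshed, which keeps the memory needed per refresh smaller.
    """
    url = f"{API_ROOT}/groups/{group_id}/datasets/{dataset_id}/refreshes"
    payload = {
        "type": "full",
        "commitMode": "partialBatch",       # commit in batches, not one transaction
        "maxParallelism": max_parallelism,  # 1 = process objects one at a time
        "retryCount": 1,
        "objects": objects,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical IDs and table name, for illustration only.
    request = build_enhanced_refresh_request(
        "<workspace-id>", "<dataset-id>", "<token>", [{"table": "Sales"}]
    )
    print(request.full_url)
    print(request.data.decode("utf-8"))
    # To actually trigger the refresh: urllib.request.urlopen(request)
```

Because `objects` can name whole tables, this approach does not depend on the model having partitions, so it may still apply when the source is a set of CSV files.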

Best Regards,

Neeko Tang

If this post helps, please consider accepting it as the solution to help other members find it more quickly.


I just want to know whether the "Small dataset" storage format that is set by default for the workspace could be the cause of this issue. I just need a confirmation, thanks.
