How to avoid the problem of not being able to refresh Calculated Tables off Direct Query Tables

Good Afternoon All,

Having fallen into the same problem I have seen many people on here suffer from, I am asking: what is the best way to avoid it?

Problem

You have a dataset you are accessing via DirectQuery. From this DirectQuery source, you build some summarised tables, which you then build your Power BI dashboards from. You can refresh just fine in the Desktop software, great! But if you upload to the Power BI Service and try to set up a scheduled refresh, it will fail. In short, you cannot refresh in the Power BI Service if you are using calculated tables that reference a DirectQuery table.

Solutions?

So what are people's solutions for this? My thoughts so far:

1) Calendars - If you're building a calendar off a date, either hard-code the date or calculate a time reference in DAX. Neither is useful if your data is a constantly moving target.

2) Use Power Automate to create a CSV which you then reference. This does work, but my current flow is timing out because there are too many rows to process in the time allowed.

3) Create a Power BI dataset which doesn't use DirectQuery. Doable, but wow, what a file size.

4) Don't use calculated tables. Not really practical for large datasets when you need to minimise the amount of data you are pulling from the cloud.

5) Some people have noted that changing the person who owns the dataset will work. I tried it; it doesn't work for me or for a few others I have seen.

None of these are great options. Tableau doesn't have this problem, so why is Power BI so behind the times on this? Has anyone come up with a better way, or just a way in general?

Regards

J

"You have a Dataset you are accessing via DirectQuery. From this DirectQuery, you build some summarised tables"

That's your problem all right.

Have you considered using the Analysis Services connector against the dataset semantic model? That will allow you to import tables. Not optimal but a good enough compromise.

How would I do this? From what I have read online, I believe Analysis Services would only connect to a server and not to a semantic model?

You can connect to any semantic model as if it were an Analysis Services server, since a semantic model is pretty much an instance of SSAS Tabular.

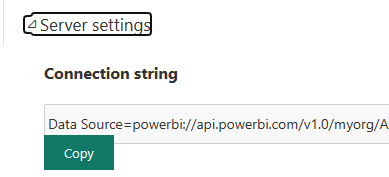

In your workspace, navigate to the semantic model's settings page. Go to the Server settings section and copy the connection string.

Use that connection string in your new file. It will then give you the option to connect live or via import mode, and you can specify your own DAX query if you want.

Thanks. When I try, I have to cut it down to the part which goes

api.powerbi.com

It then gives the error: "We could not connect to the Analysis Services server because the connection timed out or the server name is incorrect."

What should the correct name be? Do I need a value for the database? My original dataset is pulling data from AWS, if that helps.

"When I try, I have to cut it down to the part which goes api.powerbi.com"

You need to keep that part, along with the rest of the server address. Remove only the "Data Source=" prefix and the "Initial Catalog" part, including the semicolon.

The server part should be

powerbi://api.powerbi.com/v1.0/myorg/<workspace name>

The database part is the name of the semantic model.
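The split described above can be sketched in a few lines of Python. This assumes the copied string has the shape `Data Source=<server>;Initial Catalog=<model>`; the workspace and model names in the usage example are made up.

```python
def split_connection_string(conn: str) -> tuple[str, str]:
    """Split a copied connection string into (server, database).

    Assumes the form 'Data Source=<server>;Initial Catalog=<database>'.
    """
    parts = {}
    for item in conn.split(";"):
        if "=" not in item:
            continue  # skip empty fragments, e.g. after a trailing semicolon
        key, _, value = item.partition("=")
        parts[key.strip().lower()] = value.strip()
    try:
        return parts["data source"], parts["initial catalog"]
    except KeyError as missing:
        raise ValueError(f"connection string has no {missing} part") from None


if __name__ == "__main__":
    # Hypothetical workspace and semantic model names.
    server, database = split_connection_string(
        "Data Source=powerbi://api.powerbi.com/v1.0/myorg/Sales Workspace;"
        "Initial Catalog=Sales Model"
    )
    print(server)    # goes in the Server box
    print(database)  # goes in the Database box
```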

Thanks, that's really helpful.

I now suffer with this problem:

Details: "This server does not support Windows credentials. Please try Microsoft account authentication."

But nowhere does it give me the ability to enter Microsoft account details?

That's because you tried with the wrong authentication, and Power Query is mean and is remembering that choice. Go to the Data Source settings and clear the credentials. Then try again with an organizational account.

It must be an organisational setting somewhere, as this is still failing, yet a friend showed me how this would work in a different organisation. However, it appears this will still bring in all the data; the whole point of summarising the DirectQuery data is that it doesn't do this.

But thank you for the assistance. I will mark the above as a solution for those whose organisations allow it.