Dataset Refresh Challenges with Incremental Refresh

Hello,

For the past two months, I've been trying to resolve the refresh schedule for our dataset, and I've previously asked some questions in this forum. Despite trying so many solutions, I'm still facing failures. I'm hopeful that someone can provide insight into why these issues persist.

We have a Premium Embedded plan with an A2 SKU, which comes with 5 GB of RAM.

We need to refresh the dataset every 30 minutes because of a business requirement.

We have 2 large tables, one with 9 M rows and the other with 8 M rows.

What I have done:

1- Removed old data (reduced the DB from 5 years of data to 3 years).

2- Removed any unused columns and relationships.

3- Moved some calculated columns (the ones that looked complex) to Power Query and SQL Server.

4- Turned off MDX for the large tables.

5- Applied incremental refresh.

At the beginning (before these changes):

.pbix dataset size: 820 MB

Total Size in memory: 1.95 GB (based on VertiPaq Analyzer)

After making the changes:

.pbix dataset size: 440 MB

Total Size in memory: 1.07 GB

But we have 5 GB of RAM in our plan, so why should a refresh fail?
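To put the numbers above side by side, here is a rough sketch of the arithmetic. The sizes are the ones quoted in the post; the `copies=2` case is only an assumption, based on the common rule of thumb that a refresh may hold the existing copy of the model in memory while the new data is processed, and it ignores query load and other overhead on the capacity.

```python
# Figures quoted in the post.
CAPACITY_RAM_GB = 5.0                      # A2 SKU, as stated in the post
PBIX_BEFORE_MB, PBIX_AFTER_MB = 820, 440   # .pbix file size
MEM_BEFORE_GB, MEM_AFTER_GB = 1.95, 1.07   # VertiPaq Analyzer total size in memory


def reduction_pct(before, after):
    """Percentage reduction from `before` to `after`."""
    return (before - after) / before * 100


def share_of_capacity_pct(model_gb, copies=1, capacity_gb=CAPACITY_RAM_GB):
    """Share of capacity RAM used by `copies` in-memory copies of the model.

    copies=2 is an ASSUMPTION (old copy kept while the new one is built),
    not a measured value.
    """
    return model_gb * copies / capacity_gb * 100


pbix_cut = reduction_pct(PBIX_BEFORE_MB, PBIX_AFTER_MB)
memory_cut = reduction_pct(MEM_BEFORE_GB, MEM_AFTER_GB)
at_rest = share_of_capacity_pct(MEM_AFTER_GB)
during_refresh = share_of_capacity_pct(MEM_AFTER_GB, copies=2)

print(f".pbix size reduced by {pbix_cut:.1f}%")
print(f"In-memory size reduced by {memory_cut:.1f}%")
print(f"Model at rest: {at_rest:.1f}% of capacity RAM")
print(f"Two copies (assumed): {during_refresh:.1f}% of capacity RAM")
```

Even under the two-copy assumption the model itself stays well below 5 GB, so the model size alone does not obviously explain the failures.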

After all this effort, I still face a failure rate of 20-25% during data refreshes.

Regarding the incremental refresh, I initially defined the policy to refresh the past 12 months and archive the entire dataset for the two largest tables. However, this approach resulted in 20 failed refresh attempts within a 40-hour timeframe.

Then, I changed the policy to refresh the last 6 months of data instead of 12. After checking the VertiPaq Analyzer, I noticed that the total memory footprint post-refresh remained unchanged, with only a marginal reduction in refresh time (excluding the failed ones). Interestingly, during the first 18 hours following this adjustment, there were no failures. But subsequently, despite no changes in the system and minimal client activity, 10 out of 25 refreshes failed.

Basically, this incremental refresh is not helping in reducing memory during a refresh!
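The failure counts above can be turned into rates with a quick calculation. This is only a sketch: it assumes every 30-minute slot in the 40-hour window actually triggered a refresh attempt, which the post does not confirm.

```python
def scheduled_attempts(hours, interval_minutes=30):
    """Number of refresh slots in a window, ASSUMING one attempt per slot."""
    return int(hours * 60 // interval_minutes)


def failure_rate_pct(failures, attempts):
    """Failed attempts as a percentage of all attempts."""
    return failures / attempts * 100


# 12-month policy: 20 failures within 40 hours (figures from the post).
attempts_12m = scheduled_attempts(40)
rate_12m = failure_rate_pct(20, attempts_12m)

# 6-month policy, after the failure-free period: 10 failures out of 25.
rate_6m_late = failure_rate_pct(10, 25)

print(f"12-month policy: {rate_12m:.0f}% of {attempts_12m} attempts failed")
print(f"6-month policy (later window): {rate_6m_late:.0f}% of 25 attempts failed")
```

Under that assumption, the 12-month policy matches the 20-25% rate mentioned earlier, while the later window under the 6-month policy is noticeably worse.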

Any idea or comment is appreciated!

At this point, with all the suggestions you have tried, your best option is to open a support ticket with Microsoft and get them to investigate the issue.

After re-reading your post, you have done all you can on your side to address the issue. The only other thing I can think of is that the SQL Server database may be delaying the data (SQL Server blocking, a bad query plan, etc.). That could cause a timeout on the import side of the semantic model.

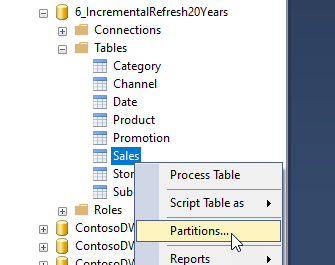

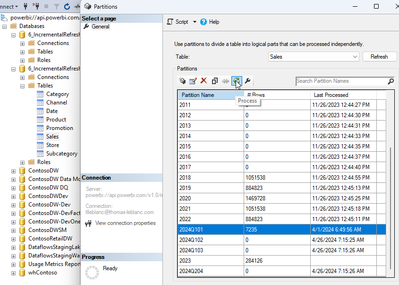

Try connecting in SQL Server Management Studio and refresh one partition at a time:
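As a minimal sketch of what a per-partition refresh could look like, the snippet below builds a TMSL `refresh` command that can be pasted into an XMLA query window in SSMS. The database, table, and partition names are hypothetical placeholders, not names from the poster's model, and this assumes the XMLA endpoint is reachable for the workspace.

```python
import json


def tmsl_refresh_partition(database, table, partition, refresh_type="full"):
    """Build a TMSL refresh command targeting a single partition."""
    return {
        "refresh": {
            "type": refresh_type,
            "objects": [
                {"database": database, "table": table, "partition": partition}
            ],
        }
    }


# Hypothetical names, for illustration only.
command = tmsl_refresh_partition("SalesModel", "FactSales", "2024Q1")
tmsl_text = json.dumps(command, indent=2)
print(tmsl_text)
```

Running one such command per partition makes it easier to see whether a specific partition, rather than the refresh as a whole, is what fails.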

Thank you @3CloudThomas

Yes, I have done that, and each partition (in the tables where incremental refresh is defined) refreshes successfully. But we still need to schedule a refresh every 30 minutes.
