FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Get Fabric Certified for FREE during Fabric Data Days. Don't miss your chance! Request now

- Power BI forums

- Forums

- Get Help with Power BI

- Service

- Re: Data Flows - Extract and Transform with linked...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Data Flows - Extract and Transform with linked tables - Avoid "Cannot acquire lock for model"

Hi everybody,

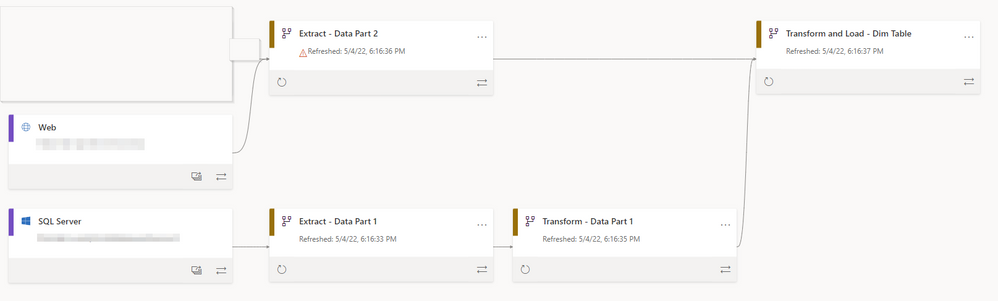

I wanted to create an ETL process using Data Flows in a Premium per User workspace. I tried to stick to Best Practices, so I created one Extraction Dataflow per Data Source and Transformation Dataflows using Linked Tables. Since we have plenty of sources, I tried to create an easy to understand model (Please ignore that "Extract - Data Part 2" has two sources, it is just my Demo data)

My final Data Flow - Transform and Load - is a merge of the two previous Dataflows.

When I refresh my two Extraction Data Flows at the same time, they try both to refresh the downstream data flows. One of them will eventually throw an Error "Cannot acquire lock for model...".

My ideas to avoid this:

- Disable load of linked tables - this would also lead to having to schedule my transformations separately, which I would like to avoid. Also I lose some of the advantages of linked tables.

- Seperate Extraction and Transformation workspaces - this would also lead to separate schedules. Also, the problem would persist, since I sometimes still need to get results of two transformations in another transformation

- Use a real DWH with proper ETL tools - maybe...

Do you have any ideas, links etc. about best practices for ETL in this case?

Thanks in advance

Patrick

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous,

The error ‘Cannot acquire lock for model… because it is currently in use’ occurs when the dataflow has been blocked which is caused by the refresh logic of linked dataflows. For refresh logic of linked dataflows, you could refer to Link entities between dataflows - Power Query | Microsoft Docs. Here is an example for state the cause. You create a dataflow A. Then you create a dataflow B which connects to dataflow A. When you refresh dataflow B, dataflow A is not allowed to be changed or refreshed. If we change or refresh dataflow A when refreshing dataflow B, this will cause dataflow B to get inconsistent data. So to make sure the consistent, Power BI will block dataflow A from being refreshed or changed when dataflow B is refreshing.

To avoid such kind of issue, you could consider schedule the refresh of the dataflows per the refresh logic.

1 Put source dataflows and destination dataflows are in different workspaces, then source dataflows will be treated as the external data sources of destination dataflows. Then, you could set scheduled refresh for them separately. But you need make sure the refresh of source dataflows will be completed before destination dataflows starts to refresh.

2 If you put source dataflows and destination dataflows in the same workspace, only set scheduled refresh on source dataflows since it will event automatically trigger a refresh process for dependent entities in all destination dataflows in the same workspace.

Once you get this issue, you could check workspace lineage view then check if there’s overlap between dataflow and linked dataflow. If there’s no refresh overlap and dataflow refreshed failed consistently, please consider raising support ticket.

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly. If I misunderstand your needs or you still have problems on it, please feel free to let me know. Thanks a lot!

Best Regards,

Community Support Team _ Caiyun

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous,

The error ‘Cannot acquire lock for model… because it is currently in use’ occurs when the dataflow has been blocked which is caused by the refresh logic of linked dataflows. For refresh logic of linked dataflows, you could refer to Link entities between dataflows - Power Query | Microsoft Docs. Here is an example for state the cause. You create a dataflow A. Then you create a dataflow B which connects to dataflow A. When you refresh dataflow B, dataflow A is not allowed to be changed or refreshed. If we change or refresh dataflow A when refreshing dataflow B, this will cause dataflow B to get inconsistent data. So to make sure the consistent, Power BI will block dataflow A from being refreshed or changed when dataflow B is refreshing.

To avoid such kind of issue, you could consider schedule the refresh of the dataflows per the refresh logic.

1 Put source dataflows and destination dataflows are in different workspaces, then source dataflows will be treated as the external data sources of destination dataflows. Then, you could set scheduled refresh for them separately. But you need make sure the refresh of source dataflows will be completed before destination dataflows starts to refresh.

2 If you put source dataflows and destination dataflows in the same workspace, only set scheduled refresh on source dataflows since it will event automatically trigger a refresh process for dependent entities in all destination dataflows in the same workspace.

Once you get this issue, you could check workspace lineage view then check if there’s overlap between dataflow and linked dataflow. If there’s no refresh overlap and dataflow refreshed failed consistently, please consider raising support ticket.

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly. If I misunderstand your needs or you still have problems on it, please feel free to let me know. Thanks a lot!

Best Regards,

Community Support Team _ Caiyun

Helpful resources

Power BI Monthly Update - November 2025

Check out the November 2025 Power BI update to learn about new features.

Fabric Data Days

Advance your Data & AI career with 50 days of live learning, contests, hands-on challenges, study groups & certifications and more!