FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- Report Server

- Refresh problem for a large size table in Power BI...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Refresh problem for a large size table in Power BI Desktop and in Power BI Services

I have a table - [WorkItems] - 245 000 records, 90 MB original size - which is part of PBI Report.

It contains 30 API references (pulling data directly from API - 30 OData Feeds (Projects)).

Code written in PowerQuery (OData)

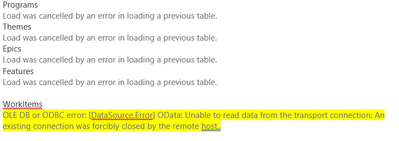

When I am refreshing this PBI report, the [WorkItems] table keeps failing with the following msg -

(The Refresh time is over 10 min)

I was able to lower [WorkItems] tbl size by removing about half of the API calls (OData feeds) - left 12 out of 30.

Then the tbl size reduced to 42MB, and it's now about 100 000 records and refresh is 2 min

(3 min when I publish it on PBI Services; scheduled refresh is now not failing).

But - my problem - I need to keep ALL 30 OData Feeds (Projects)...

To achieve this:

- Are there working and more or less quick ways to reduce this tbl size?

- Should I add some sort of parameter(s) into Data Source settings - in order to speed up the refresh

(to load my records in parts, quicker)?

- One other possible option is to add [Date] filter to reduce a tbl size - [Current Date] - 24 months

I am allowed to do this. (Not sure where in the code and how exactly)?

Here is the code (PowerQuery, OData, I included only 3 out of 30 OData feeds):

Any suggestions would be very helpful

// "$select=ParentWorkItemId, StoryPoints, State, WorkItemType, Title, IterationSK, AreaSK, WorkItemId" & "&$filter=(WorkItemType eq 'Bug' or WorkItemType eq 'User Story')", null, [Implementation="2.0"]),

let

Source = OData.Feed("https://analytics.dev.azure.com/MyCompany/Research and

Development/_odata/v3.0-preview/WorkItems?" &

"$select=ParentWorkItemId, StoryPoints, State,

WorkItemType, Title, IterationSK, AreaSK, WorkItemId,

Area" & "&$filter=(WorkItemType eq 'Bug' or

WorkItemType eq 'User Story')" &

"&$expand=Area($select=AreaPath)", null,

[Implementation="2.0"]),

#"Add all Ops & CP projects" = Table.Combine({

Source,

OData.Feed("https://analytics.dev.azure.com/MyCompany/Cloud

Platform/_odata/v3.0-preview/WorkItems?" &

"$select=ParentWorkItemId, StoryPoints, State, WorkItemType,

Title, IterationSK, AreaSK, WorkItemId, Area" & "&$filter=

(WorkItemType eq 'Bug' or WorkItemType eq 'User Story')" &

"&$expand=Area($select=AreaPath)", null,

[Implementation="2.0"]),

OData.Feed("https://analytics.dev.azure.com/MyCompany/Batch

Management/_odata/v3.0-preview/WorkItems?" &

"$select=ParentWorkItemId, StoryPoints, State, WorkItemType,

Title, IterationSK, AreaSK, WorkItemId, Area" & "&$filter=

(WorkItemType eq 'Bug' or WorkItemType eq 'User Story')" &

"&$expand=Area($select=AreaPath)", null,

[Implementation="2.0"]),

}),

#"Add AreaPath" = Table.ExpandRecordColumn(#"Add all Ops & CP projects",

"Area", {"AreaPath"}, {"AreaPath"}),

// Calculate the date 24 months ago from the current date

Date24MonthsAgo = Date.AddMonths(DateTime.LocalNow(), -24),

// Filter data to include only records from the last 24 months

FilteredData = Table.SelectRows(ConvertedIDColumns, each

DateTime.From([CreatedDate]) >= Date24MonthsAgo),

#"Rename Story Points to Effort" = Table.RenameColumns(#"Add AreaPath",{{"StoryPoints", "Effort"}}),

#"Add Organization" = Table.AddColumn(#"Rename Story Points to Effort", "Organization", each "MyCompany"),

#"Change IDs to text" = Table.TransformColumnTypes(#"Add Organization",{{"WorkItemId", type text}, {"ParentWorkItemId", type text}}),

#"Make IDs unique" = Table.TransformColumns(

#"Change IDs to text",

{

{

"WorkItemId",

each

Text.Combine({(_),"-VSTS"}),

type text

}

}

),

#"Make Parent IDs unique" = Table.TransformColumns(

#"Make IDs unique",

{

{

"ParentWorkItemId",

each

Text.Combine({(_),"-VSTS"}),

type text

}

}

),

#"Replaced Value" = Table.ReplaceValue(#"Make Parent IDs unique","-VSTS","",Replacer.ReplaceValue,{"ParentWorkItemId"}),

#"Parent Orphans to ""No Feature""" = Table.ReplaceValue(#"Replaced Value","","No Feature",Replacer.ReplaceValue,{"ParentWorkItemId"})

in

#"Parent Orphans to ""No Feature"""

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Reduce Table Size:

- Filter Data: As you mentioned, adding a filter to include only records from the last 24 months can significantly reduce the size of your table and improve refresh performance.

- Limit Columns: Consider limiting the number of columns you're importing from each OData feed to only those that are necessary for your analysis. This can help reduce the size of your dataset.

- Combine Similar OData Feeds: If multiple OData feeds provide similar data, consider combining them into a single query to reduce the number of API calls and improve performance.

Optimize Query Execution:

- Merge Queries: If possible, try to merge similar queries or apply transformations earlier in the query chain to minimize data movement and improve query performance.

- Data Sampling: During development, you can use data sampling techniques to work with a smaller subset of your data, which can help identify and address performance issues more quickly.

Did I answer your question? Mark my post as a solution! Appreciate your Kudos !!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Reduce Table Size:

- Filter Data: As you mentioned, adding a filter to include only records from the last 24 months can significantly reduce the size of your table and improve refresh performance.

- Limit Columns: Consider limiting the number of columns you're importing from each OData feed to only those that are necessary for your analysis. This can help reduce the size of your dataset.

- Combine Similar OData Feeds: If multiple OData feeds provide similar data, consider combining them into a single query to reduce the number of API calls and improve performance.

Optimize Query Execution:

- Merge Queries: If possible, try to merge similar queries or apply transformations earlier in the query chain to minimize data movement and improve query performance.

- Data Sampling: During development, you can use data sampling techniques to work with a smaller subset of your data, which can help identify and address performance issues more quickly.

Did I answer your question? Mark my post as a solution! Appreciate your Kudos !!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Have you considered setting the Timeout parameter for the Odata.Feed ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Tried that and it absolutely didnt help 😞

Helpful resources

Power BI Dataviz World Championships

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now!