Fabric Data Days starts November 4th!

Advance your Data & AI career with 50 days of live learning, dataviz contests, hands-on challenges, study groups & certifications and more!

Get registered- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM. Register now.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Using Data to create a file of original/unique...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

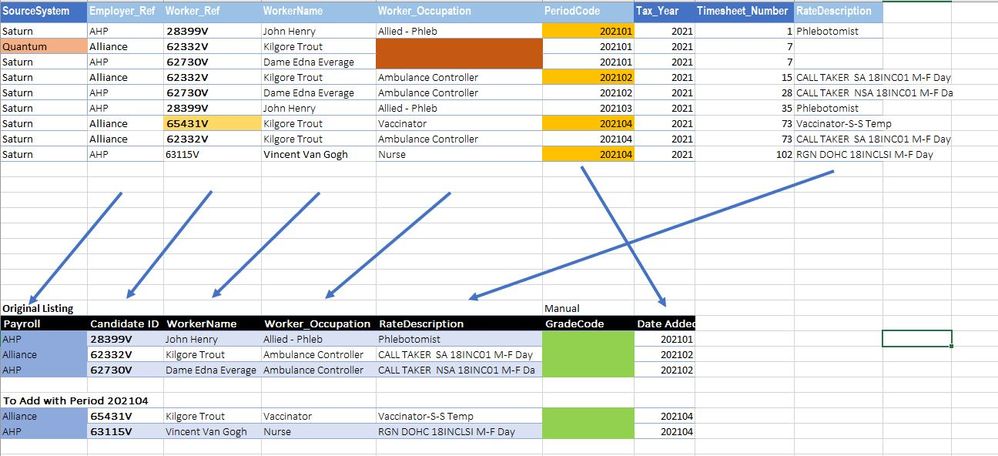

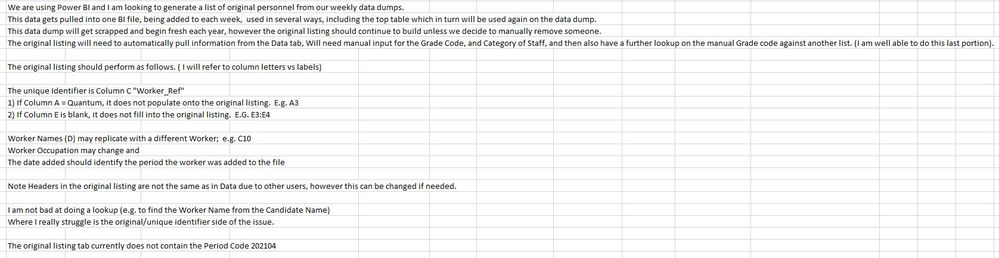

Using Data to create a file of original/unique entries

I am not sure if this is accurate under Power Query area or not.

I need to create a file/report of first entry (based on a unique identifier), accounting for a few exceptions.

The data comes from a weekly data dump that will be pulled into a BI Power Query file.

The same ID's will appear each week, and even multiple times in each week.

REPORT TO BUILD

| Payroll | Candidate ID | WorkerName | Worker_Occupation | RateDescription | GradeCode | Date Added |

| AHP | 28399V | John Henry | Allied - Phleb | Phlebotomist | 202101 | |

| Alliance | 62332V | Kilgore Trout | Ambulance Controller | CALL TAKER SA 18INC01 M-F Day | 202102 | |

| AHP | 62730V | Dame Edna Everage | Ambulance Controller | CALL TAKER NSA 18INC01 M-F Da | 202102 | |

| To Add with Period 202104 | ||||||

| Alliance | 65431V | Kilgore Trout | Vaccinator | Vaccinator-S-S Temp | 202104 | |

| AHP | 63115V | Vincent Van Gogh | Nurse | RGN DOHC 18INCLSI M-F Day | 202104 |

DATA

| SourceSystem | Employer_Ref | Worker_Ref | WorkerName | Worker_Occupation | PeriodCode | Timesheet_Number | RateDescription |

| Saturn | AHP | 28399V | John Henry | Allied - Phleb | 202101 | 1 | Phlebotomist |

| Quantum | Alliance | 62332V | Kilgore Trout | 202101 | 7 | ||

| Saturn | AHP | 62730V | Dame Edna Everage | 202101 | 7 | ||

| Saturn | Alliance | 62332V | Kilgore Trout | Ambulance Controller | 202102 | 15 | CALL TAKER SA 18INC01 M-F Day |

| Saturn | AHP | 62730V | Dame Edna Everage | Ambulance Controller | 202102 | 28 | CALL TAKER NSA 18INC01 M-F Da |

| Saturn | AHP | 28399V | John Henry | Allied - Phleb | 202103 | 35 | Phlebotomist |

| Saturn | Alliance | 65431V | Kilgore Trout | Vaccinator | 202104 | 73 | Vaccinator-S-S Temp |

| Saturn | Alliance | 62332V | Kilgore Trout | Ambulance Controller | 202104 | 73 | CALL TAKER SA 18INC01 M-F Day |

| Saturn | AHP | 63115V | Vincent Van Gogh | Nurse | 202104 | 102 | RGN DOHC 18INCLSI M-F Day |

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

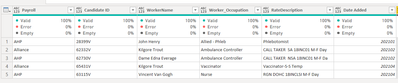

Hi @Mr_Stern

Here is one way, you need to take care of your manual column

output

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("jY7BCoJAEIZfZfCcoLtgelzMsDSJii7SYc1BhXUXNg18m56lJ2vXLkWXTjP/z//PN2XpsHTvLBwS0ig6m2WrWgkpSj0ZwYTosAYX9q3AyhjzVIPqu9tgpC16xPd857Io5zSXVzRuQCgl9lzWiUZphJNWo22wvhqFDUGs5KCVEKiNHbM8hxPLksPzAUcGfrgpYs+HnbuGFZ8+UOSNmp8OyJJ6lrLiPUJSSw7JHTVv8E9S8YP6Jl1e", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Payroll = _t, #"Candidate ID" = _t, WorkerName = _t, Worker_Occupation = _t, RateDescription = _t, GradeCode = _t, #"Date Added" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Payroll", type text}, {"Candidate ID", type text}, {"WorkerName", type text}, {"Worker_Occupation", type text}, {"RateDescription", type text}, {"GradeCode", type text}, {"Date Added", Int64.Type}}),

originTable = Table.RemoveColumns(#"Changed Type",{"GradeCode"}),

Custom1 = List.Union({ Table.ToRows( originTable), Table}),

Custom2 = Table.FromRows(Custom1, Table.ColumnNames(originTable)),

#"Added Custom" = Table.AddColumn(Custom2, "GradeCode", each null)

in

#"Added Custom"

the DATA called Table

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("rVHbbsIwDP0Vq89UahLK5bFqGWWwjlHUF9SHABFUSpMppJP4G76FL1syVgSsEmOaolh2bJ9j5ywWTkp1pYTTcoJ4aizukX4/M86z3AqImVB7m+O8YGtwYbrlbGnLPIw8ZBx7vx6llmWx007eWjhvFRW6Kr8bqVgx43YwIdgijwu+kYrBXMlKm/gSrmtjC3EzVgd3iWebI1oyGKwFhcEHU3TD7gP8YoSgXFbcFkEohVaSc6ZqVGy39I0Jg8kE5sF4MDseIA0A9UZJ6CF4cZ8govuHx75Hinu3pMkP1ibSRyQkxiF+k4aN/+e3CWr6v4yuVoWgWp4XaFstyFXKTd0U5qx8/199zkx/0Ycg5FvCrDDgQkNGBQzlZmuekkrt2CXJSZXZMIHoNQ5P8JN0VOMfD/Vx8vwT", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [SourceSystem = _t, Employer_Ref = _t, Worker_Ref = _t, WorkerName = _t, Worker_Occupation = _t, PeriodCode = _t, Timesheet_Number = _t, RateDescription = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"SourceSystem", type text}, {"Employer_Ref", type text}, {"Worker_Ref", type text}, {"WorkerName", type text}, {"Worker_Occupation", type text}, {"PeriodCode", Int64.Type}, {"Timesheet_Number", Int64.Type}, {"RateDescription", type text}}),

#"Removed Other Columns" = Table.SelectColumns(#"Changed Type",{"Employer_Ref", "Worker_Ref", "WorkerName", "Worker_Occupation", "RateDescription","PeriodCode"}),

#"Filtered Rows" = Table.SelectRows(#"Removed Other Columns", each ([Worker_Occupation] <> "")),

#"Removed Duplicates" = Table.Distinct(#"Filtered Rows", {"Employer_Ref", "Worker_Ref", "WorkerName", "Worker_Occupation"}),

Custom1 = Table.ToRows( #"Removed Duplicates")

in

Custom1

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Mr_Stern

Here is one way, you need to take care of your manual column

output

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("jY7BCoJAEIZfZfCcoLtgelzMsDSJii7SYc1BhXUXNg18m56lJ2vXLkWXTjP/z//PN2XpsHTvLBwS0ig6m2WrWgkpSj0ZwYTosAYX9q3AyhjzVIPqu9tgpC16xPd857Io5zSXVzRuQCgl9lzWiUZphJNWo22wvhqFDUGs5KCVEKiNHbM8hxPLksPzAUcGfrgpYs+HnbuGFZ8+UOSNmp8OyJJ6lrLiPUJSSw7JHTVv8E9S8YP6Jl1e", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Payroll = _t, #"Candidate ID" = _t, WorkerName = _t, Worker_Occupation = _t, RateDescription = _t, GradeCode = _t, #"Date Added" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Payroll", type text}, {"Candidate ID", type text}, {"WorkerName", type text}, {"Worker_Occupation", type text}, {"RateDescription", type text}, {"GradeCode", type text}, {"Date Added", Int64.Type}}),

originTable = Table.RemoveColumns(#"Changed Type",{"GradeCode"}),

Custom1 = List.Union({ Table.ToRows( originTable), Table}),

Custom2 = Table.FromRows(Custom1, Table.ColumnNames(originTable)),

#"Added Custom" = Table.AddColumn(Custom2, "GradeCode", each null)

in

#"Added Custom"

the DATA called Table

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("rVHbbsIwDP0Vq89UahLK5bFqGWWwjlHUF9SHABFUSpMppJP4G76FL1syVgSsEmOaolh2bJ9j5ywWTkp1pYTTcoJ4aizukX4/M86z3AqImVB7m+O8YGtwYbrlbGnLPIw8ZBx7vx6llmWx007eWjhvFRW6Kr8bqVgx43YwIdgijwu+kYrBXMlKm/gSrmtjC3EzVgd3iWebI1oyGKwFhcEHU3TD7gP8YoSgXFbcFkEohVaSc6ZqVGy39I0Jg8kE5sF4MDseIA0A9UZJ6CF4cZ8govuHx75Hinu3pMkP1ibSRyQkxiF+k4aN/+e3CWr6v4yuVoWgWp4XaFstyFXKTd0U5qx8/199zkx/0Ycg5FvCrDDgQkNGBQzlZmuekkrt2CXJSZXZMIHoNQ5P8JN0VOMfD/Vx8vwT", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [SourceSystem = _t, Employer_Ref = _t, Worker_Ref = _t, WorkerName = _t, Worker_Occupation = _t, PeriodCode = _t, Timesheet_Number = _t, RateDescription = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"SourceSystem", type text}, {"Employer_Ref", type text}, {"Worker_Ref", type text}, {"WorkerName", type text}, {"Worker_Occupation", type text}, {"PeriodCode", Int64.Type}, {"Timesheet_Number", Int64.Type}, {"RateDescription", type text}}),

#"Removed Other Columns" = Table.SelectColumns(#"Changed Type",{"Employer_Ref", "Worker_Ref", "WorkerName", "Worker_Occupation", "RateDescription","PeriodCode"}),

#"Filtered Rows" = Table.SelectRows(#"Removed Other Columns", each ([Worker_Occupation] <> "")),

#"Removed Duplicates" = Table.Distinct(#"Filtered Rows", {"Employer_Ref", "Worker_Ref", "WorkerName", "Worker_Occupation"}),

Custom1 = Table.ToRows( #"Removed Duplicates")

in

Custom1

Helpful resources

FabCon Global Hackathon

Join the Fabric FabCon Global Hackathon—running virtually through Nov 3. Open to all skill levels. $10,000 in prizes!

Power BI Monthly Update - October 2025

Check out the October 2025 Power BI update to learn about new features.