FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Vote for your favorite vizzies from the Power BI Dataviz World Championship submissions. Vote now!

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Translate SUMPRODUCT using a Query Power BI

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Translate SUMPRODUCT using a Query Power BI

Dear all,

I have a report in Excel and I need to “translate” it in Power BI.

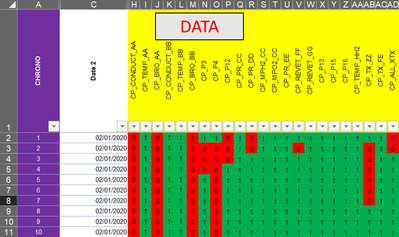

I have data (loaded in P. BI) with date column and the parameters (1=OK, 0=non OK) columns in yellow (see DATA picture). For each parameter I need to calculate a conformity average per day. In excel I use this :

Example for the first parameter (SOMMEPROD=SUMPRODUCT):

SOMMEPROD((Tableau2[[CP_CONDUCT_AA]:[CP_CONDUCT_AA]]=1)*(Tableau2[[Date 2]:[Date 2]]='RED P'!Q$1)*1)/SOMMEPROD((Tableau2[[CP_CONDUCT_AA]:[CP_CONDUCT_AA]]>=0)*(Tableau2[[Date 2]:[Date 2]]='RED P'!Q$1)*1)

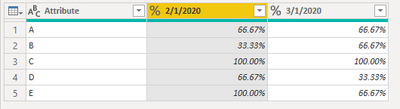

And I obtain my expect result (see Expected result picture) where my parameters are presented as rows (important detail) with the conformity per day as columns.

So, my question is : how can I translate my calculation “SUMPRODUCT” described above and get a query Power BI where my parameters are presented as rows and their respective conformity per day as columns?

Please, I just started using Power BI, could you give some ideas with good descriptions of steps to do it? And the useful DAX or query M functions…

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

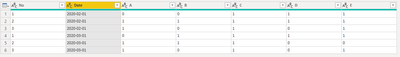

Hi @ItoDiaz ,

After importing the data, click "Transform data" to open qeury editor.

1. Remove "CHRONO" column.

2. Unpivot your parameter columns. You will get the "Attribute" column and the "Value" column.

3. Get a table with Value=1, calculate the row number.

4. Get a table with Value>=0, calculate the row number.

5. Merge these two tables, use division to get the percentage.

6. Remove useless columns.

7. Pivot date column.

Here is the codes in advanced editor.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUTIyMDLQNTDSNQBxDKDYEI5jdaKVjNCVYWKQMmNsygxQaJAyhKXGCEsxTTNCV4aMIe5EtdQYp6WxAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [No = _t, Date = _t, A = _t, B = _t, C = _t, D = _t, E = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"No", Int64.Type}, {"Date", type date}, {"A", Int64.Type}, {"B", Int64.Type}, {"C", Int64.Type}, {"D", Int64.Type}, {"E", Int64.Type}}),

#"Removed Columns" = Table.RemoveColumns(#"Changed Type",{"No"}),

#"Unpivoted Columns" = Table.UnpivotOtherColumns(#"Removed Columns", {"Date"}, "Attribute", "Value"),

#"Filtered Rows" = Table.SelectRows(#"Unpivoted Columns", each ([Value] = 1)),

#"Grouped Rows1" = Table.Group(#"Filtered Rows", {"Attribute", "Date"}, {{"Count", each Table.RowCount(_), type number}}),

#"Grouped Rows" = Table.Group(#"Unpivoted Columns", {"Attribute", "Date"}, {{"Count", each Table.RowCount(_), type number}}),

#"Merged Queries" = Table.NestedJoin(#"Grouped Rows", {"Attribute", "Date"}, #"Grouped Rows1", {"Attribute", "Date"}, "Grouped Rows", JoinKind.Inner),

#"Expanded Grouped Rows" = Table.ExpandTableColumn(#"Merged Queries", "Grouped Rows", {"Count"}, {"Grouped Rows.Count"}),

#"Added Custom" = Table.AddColumn(#"Expanded Grouped Rows", "Custom", each [Grouped Rows.Count]/[Count]),

#"Changed Type1" = Table.TransformColumnTypes(#"Added Custom",{{"Custom", Percentage.Type}}),

#"Removed Columns1" = Table.RemoveColumns(#"Changed Type1",{"Count", "Grouped Rows.Count"}),

#"Pivoted Column" = Table.Pivot(Table.TransformColumnTypes(#"Removed Columns1", {{"Date", type text}}, "en-US"), List.Distinct(Table.TransformColumnTypes(#"Removed Columns1", {{"Date", type text}}, "en-US")[Date]), "Date", "Custom")

in

#"Pivoted Column"Here are my test sample and result.

Here is my test file for your reference.

If this post helps, then please consider Accept it as the solution to help the other members find it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ItoDiaz , I know it has already been answered but I liked your issue and i had a go at it.

Supposing dates change and also a product may be added or removed, I've made everything dynamic. I've used @v-eachen-msft's dummy table for the solution:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUTIyMDLQNTDSNQBxDKDYEI5jdaKVjNCVYWKQMmNsygxQaJAyhKXGCEsxTTNCV4aMIe5EtdQYp6WxAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [No = _t, Date = _t, A = _t, B = _t, C = _t, D = _t, E = _t]),

PreviousStep = Table.TransformColumnTypes(Source,{{"No", Int64.Type}, {"Date", type date}, {"A", Int64.Type}, {"B", Int64.Type}, {"C", Int64.Type}, {"D", Int64.Type}, {"E", Int64.Type}}),

DateList = List.Transform(List.Distinct(PreviousStep[Date]), each {Text.From(_), Percentage.Type}),

Columns = List.Select(Table.ColumnNames(PreviousStep), each _ <> "Date" and _ <> "No"),

GroupList = List.Transform(Columns, each {_, Expression.Evaluate( "each List.Sum(["& _ & "]) / Table.RowCount(_)", [List.Sum = List.Sum, Table.RowCount = Table.RowCount]), Percentage.Type}),

#"Grouped Rows" = Table.Group(PreviousStep, {"Date"}, GroupList),

#"Demoted Headers" = Table.DemoteHeaders(#"Grouped Rows"),

#"Transposed Table" = Table.Transpose(#"Demoted Headers"),

#"Promoted Headers" = Table.PromoteHeaders(#"Transposed Table", [PromoteAllScalars=true]),

#"Renamed Columns" = Table.RenameColumns(#"Promoted Headers",{{"Date", "Product"}}),

#"Changed Types" = Table.TransformColumnTypes(#"Renamed Columns", List.Combine({{{"Product", type text}}, DateList}))

in

#"Changed Types"

Some explaining.

1) DateList is a list we'll later use to correct our datatypes. Dynamically taking the dates existing and creating a list which will be read for the type transformations.

2) Columns is a list of all our products, supposing here that the columns without products are [No] and [Date]

3) GroupList. Here is where the calculation actually happens. It's a list of list, each containing 3 elements: Name, function, type. This be read as new column calculations on the grouping step. Assuming that good is always 1 and bad is always 0 (no 0.5 values etc.), we calculate the % by suming all the values in every column and dividing by day's total count. I've also assumed there are no nulls.

The rest is a table transpose to actually have products as rows and dates as columns, with the last step being the column type correction.

This solution should also be faster, seeing that there is only one grouping and one transposition.

Cheers,

smauro

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ItoDiaz ,

After importing the data, click "Transform data" to open qeury editor.

1. Remove "CHRONO" column.

2. Unpivot your parameter columns. You will get the "Attribute" column and the "Value" column.

3. Get a table with Value=1, calculate the row number.

4. Get a table with Value>=0, calculate the row number.

5. Merge these two tables, use division to get the percentage.

6. Remove useless columns.

7. Pivot date column.

Here is the codes in advanced editor.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUTIyMDLQNTDSNQBxDKDYEI5jdaKVjNCVYWKQMmNsygxQaJAyhKXGCEsxTTNCV4aMIe5EtdQYp6WxAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [No = _t, Date = _t, A = _t, B = _t, C = _t, D = _t, E = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"No", Int64.Type}, {"Date", type date}, {"A", Int64.Type}, {"B", Int64.Type}, {"C", Int64.Type}, {"D", Int64.Type}, {"E", Int64.Type}}),

#"Removed Columns" = Table.RemoveColumns(#"Changed Type",{"No"}),

#"Unpivoted Columns" = Table.UnpivotOtherColumns(#"Removed Columns", {"Date"}, "Attribute", "Value"),

#"Filtered Rows" = Table.SelectRows(#"Unpivoted Columns", each ([Value] = 1)),

#"Grouped Rows1" = Table.Group(#"Filtered Rows", {"Attribute", "Date"}, {{"Count", each Table.RowCount(_), type number}}),

#"Grouped Rows" = Table.Group(#"Unpivoted Columns", {"Attribute", "Date"}, {{"Count", each Table.RowCount(_), type number}}),

#"Merged Queries" = Table.NestedJoin(#"Grouped Rows", {"Attribute", "Date"}, #"Grouped Rows1", {"Attribute", "Date"}, "Grouped Rows", JoinKind.Inner),

#"Expanded Grouped Rows" = Table.ExpandTableColumn(#"Merged Queries", "Grouped Rows", {"Count"}, {"Grouped Rows.Count"}),

#"Added Custom" = Table.AddColumn(#"Expanded Grouped Rows", "Custom", each [Grouped Rows.Count]/[Count]),

#"Changed Type1" = Table.TransformColumnTypes(#"Added Custom",{{"Custom", Percentage.Type}}),

#"Removed Columns1" = Table.RemoveColumns(#"Changed Type1",{"Count", "Grouped Rows.Count"}),

#"Pivoted Column" = Table.Pivot(Table.TransformColumnTypes(#"Removed Columns1", {{"Date", type text}}, "en-US"), List.Distinct(Table.TransformColumnTypes(#"Removed Columns1", {{"Date", type text}}, "en-US")[Date]), "Date", "Custom")

in

#"Pivoted Column"Here are my test sample and result.

Here is my test file for your reference.

If this post helps, then please consider Accept it as the solution to help the other members find it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It works.

It helped me to undertand better the query M philosophy.

With your code I have created weekly, monthly and yearly conformity queries. Now I will work in the comparison between current month and previous month and the creation of an "alarm" if the conformity rate is <80% in both months.

H-WE

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ItoDiaz , I know it has already been answered but I liked your issue and i had a go at it.

Supposing dates change and also a product may be added or removed, I've made everything dynamic. I've used @v-eachen-msft's dummy table for the solution:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMlTSUTIyMDLQNTDSNQBxDKDYEI5jdaKVjNCVYWKQMmNsygxQaJAyhKXGCEsxTTNCV4aMIe5EtdQYp6WxAA==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [No = _t, Date = _t, A = _t, B = _t, C = _t, D = _t, E = _t]),

PreviousStep = Table.TransformColumnTypes(Source,{{"No", Int64.Type}, {"Date", type date}, {"A", Int64.Type}, {"B", Int64.Type}, {"C", Int64.Type}, {"D", Int64.Type}, {"E", Int64.Type}}),

DateList = List.Transform(List.Distinct(PreviousStep[Date]), each {Text.From(_), Percentage.Type}),

Columns = List.Select(Table.ColumnNames(PreviousStep), each _ <> "Date" and _ <> "No"),

GroupList = List.Transform(Columns, each {_, Expression.Evaluate( "each List.Sum(["& _ & "]) / Table.RowCount(_)", [List.Sum = List.Sum, Table.RowCount = Table.RowCount]), Percentage.Type}),

#"Grouped Rows" = Table.Group(PreviousStep, {"Date"}, GroupList),

#"Demoted Headers" = Table.DemoteHeaders(#"Grouped Rows"),

#"Transposed Table" = Table.Transpose(#"Demoted Headers"),

#"Promoted Headers" = Table.PromoteHeaders(#"Transposed Table", [PromoteAllScalars=true]),

#"Renamed Columns" = Table.RenameColumns(#"Promoted Headers",{{"Date", "Product"}}),

#"Changed Types" = Table.TransformColumnTypes(#"Renamed Columns", List.Combine({{{"Product", type text}}, DateList}))

in

#"Changed Types"

Some explaining.

1) DateList is a list we'll later use to correct our datatypes. Dynamically taking the dates existing and creating a list which will be read for the type transformations.

2) Columns is a list of all our products, supposing here that the columns without products are [No] and [Date]

3) GroupList. Here is where the calculation actually happens. It's a list of list, each containing 3 elements: Name, function, type. This be read as new column calculations on the grouping step. Assuming that good is always 1 and bad is always 0 (no 0.5 values etc.), we calculate the % by suming all the values in every column and dividing by day's total count. I've also assumed there are no nulls.

The rest is a table transpose to actually have products as rows and dates as columns, with the last step being the column type correction.

This solution should also be faster, seeing that there is only one grouping and one transposition.

Cheers,

smauro

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you very much for your solution. I have applied your code, it works and as you thought it is faster. In my case 1' against 1'30'' for the first solution (important if you have a "lazy" laptop...)

Can I accept two solutions? I don't think so.

Kind regards,

ItoDiaz

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dear @v-eachen-msft ,

Thanks a lot for your advice. Your exemple seems answer to my questions.

Now my turn, act (adapt) and see. I will tell you.

KR

Helpful resources

Power BI Dataviz World Championships

Vote for your favorite vizzies from the Power BI World Championship submissions!

Join our Community Sticker Challenge 2026

If you love stickers, then you will definitely want to check out our Community Sticker Challenge!

Power BI Monthly Update - January 2026

Check out the January 2026 Power BI update to learn about new features.

| User | Count |

|---|---|

| 14 | |

| 11 | |

| 8 | |

| 7 | |

| 6 |