FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

View all the Fabric Data Days sessions on demand. View schedule

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Running count starting over when the counted v...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Running count starting over when the counted value is not present previous date

Hi guys,

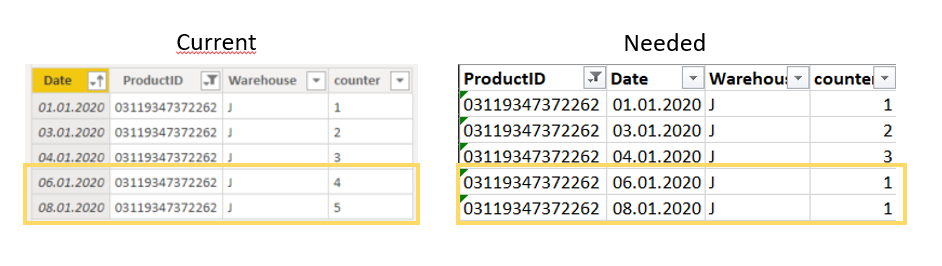

I'm new to PBI and Power Query and I can't find a solution to the following problem. To put it in context I have a dataset showing daily items on stock. The data is generated almost every working day but sometimes there are random time gaps when no data is generated at all. The simplified data looks like this:

| Product ID | Date |

| A | 6.1.2020 |

| B | 6.1.2020 |

| C | 6.1.2020 |

| A | 9.1.2020 |

| B | 9.1.2020 |

| C | 9.1.2020 |

| A | 11.1.2020 |

| B | 11.1.2020 |

| A | 14.1.2020 |

| B | 14.1.2020 |

| C | 14.1.2020 |

| A | 15.1.2020 |

| B | 15.1.2020 |

| C | 15.1.2020 |

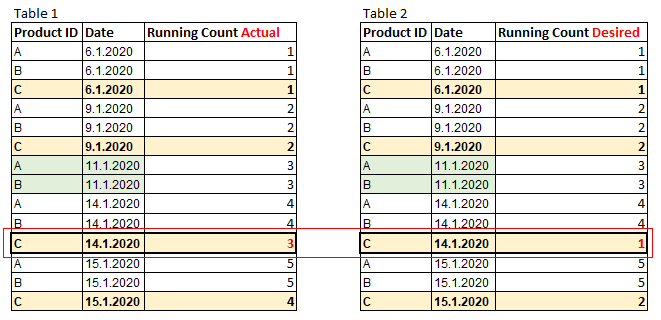

I managed to create a "running count" column using grouping and add index based on this tutorial (https://www.youtube.com/watch?v=camNMc1zaBc&t=189s) and I got the output shown in Table 1. As you can see, Product "C" was not present on 11/1/2020 but was present again on 14/1/2020 so the running count goes continuously on. The ouptut I need though, is the one shown in Table 2. I need the counting to start over anytime when product ID was not present the previous date and at least one product was.

Do you guys have any ideas? I will be sooo glad for any solution either if it's Power Query using raw data or DAX calculated column using the data with actual runnning count.

Thank you so much!

Honzik

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

you have to modify the code like so:

let

fnIdx =

(myTable as table) =>

let

partition = Table.Buffer(myTable),

dates = AllDatesWthIndex,

#"Merged Queries" = Table.NestedJoin(partition, {"Date"}, dates, {"Date"}, "Changed Type", JoinKind.LeftOuter),

#"Expanded Changed Type" = Table.ExpandTableColumn(#"Merged Queries", "Changed Type", {"Index"}, {"Index"}),

Custom1 = Table.ToColumns(#"Expanded Changed Type"),

IndexedTable = Table.Buffer( Table.Sort(Table.FromColumns(Custom1 & { {null} & List.RemoveLastN(List.Last(Custom1),1) }, {"ID", "Date", "Warehouse", "Index", "PrevIndex"}), "Index") ),

Initial = IndexedTable{0} & [Counter = 0, Idx = 1],

Custom3 = List.Generate( ()=> Initial,

each [Counter] < Table.RowCount(IndexedTable),

each [ Counter = [Counter] + 1,

Idx = if IndexedTable[Index]{Counter} - IndexedTable[PrevIndex]{Counter} = 1 then [Idx] + 1 else 1

]),

#"Converted to Table" = Table.FromList(Custom3, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Expanded Column1" = Table.ExpandRecordColumn(#"Converted to Table", "Column1", {"Idx"}, {"Idx"})[Idx],

Custom2 = Table.FromColumns( Table.ToColumns( IndexedTable ) & { #"Expanded Column1" })

in

Custom2,

Origine = dataSample,

Selection = Table.FirstN(Origine,CountRecordsImke),

AllDates=Table.Buffer( Table.Distinct(Table.SelectColumns(Selection, {"Date"})) ),

AllDatesWthIndex = Table.AddIndexColumn(AllDates, "Index", 0, 1, Int64.Type),

AllProducts = Origine,

#"Grouped Rows" = Table.Group(Selection, {"ProductID"}, {{"ProductPartition", each _}}),

#"Added Custom" = Table.AddColumn(#"Grouped Rows", "Custom", each fnIdx([ProductPartition])),

#"Expanded Custom" = Table.ExpandTableColumn(#"Added Custom", "Custom", {"Column2", "Column3", "Column6"}, {"Date", "Warehouse", "Index"}),

#"Removed Columns" = Table.RemoveColumns(#"Expanded Custom",{"ProductPartition"}),

#"Changed Type" = Table.TransformColumnTypes(#"Removed Columns",{{"Date", type date}, {"Index", Int64.Type}, {"ProductID", type text}})

in

#"Changed Type"

Please also see the file attached.

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous .

First of all, I congratulate you for being an excellent tester. But then I tell you that I do not agree on the expected result that you indicate on the demo_real file. I am attaching a screen with the result that, from your previous descriptions, I would expect. I have corrected the script and am always curious to know how long it takes to work your complete file.

The script assumes that the data are sorted by date, otherwise you have to add a statement to that effect at the beginning.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

you have to modify the code like so:

let

fnIdx =

(myTable as table) =>

let

partition = Table.Buffer(myTable),

dates = AllDatesWthIndex,

#"Merged Queries" = Table.NestedJoin(partition, {"Date"}, dates, {"Date"}, "Changed Type", JoinKind.LeftOuter),

#"Expanded Changed Type" = Table.ExpandTableColumn(#"Merged Queries", "Changed Type", {"Index"}, {"Index"}),

Custom1 = Table.ToColumns(#"Expanded Changed Type"),

IndexedTable = Table.Buffer( Table.Sort(Table.FromColumns(Custom1 & { {null} & List.RemoveLastN(List.Last(Custom1),1) }, {"ID", "Date", "Warehouse", "Index", "PrevIndex"}), "Index") ),

Initial = IndexedTable{0} & [Counter = 0, Idx = 1],

Custom3 = List.Generate( ()=> Initial,

each [Counter] < Table.RowCount(IndexedTable),

each [ Counter = [Counter] + 1,

Idx = if IndexedTable[Index]{Counter} - IndexedTable[PrevIndex]{Counter} = 1 then [Idx] + 1 else 1

]),

#"Converted to Table" = Table.FromList(Custom3, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Expanded Column1" = Table.ExpandRecordColumn(#"Converted to Table", "Column1", {"Idx"}, {"Idx"})[Idx],

Custom2 = Table.FromColumns( Table.ToColumns( IndexedTable ) & { #"Expanded Column1" })

in

Custom2,

Origine = dataSample,

Selection = Table.FirstN(Origine,CountRecordsImke),

AllDates=Table.Buffer( Table.Distinct(Table.SelectColumns(Selection, {"Date"})) ),

AllDatesWthIndex = Table.AddIndexColumn(AllDates, "Index", 0, 1, Int64.Type),

AllProducts = Origine,

#"Grouped Rows" = Table.Group(Selection, {"ProductID"}, {{"ProductPartition", each _}}),

#"Added Custom" = Table.AddColumn(#"Grouped Rows", "Custom", each fnIdx([ProductPartition])),

#"Expanded Custom" = Table.ExpandTableColumn(#"Added Custom", "Custom", {"Column2", "Column3", "Column6"}, {"Date", "Warehouse", "Index"}),

#"Removed Columns" = Table.RemoveColumns(#"Expanded Custom",{"ProductPartition"}),

#"Changed Type" = Table.TransformColumnTypes(#"Removed Columns",{{"Date", type date}, {"Index", Int64.Type}, {"ProductID", type text}})

in

#"Changed Type"

Please also see the file attached.

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ImkeF,

I tried the script and it runs great and quick. As I'm a beginner to M-code, I'll have to work on understanding it but it serves me very well. Thank you so much for your effort.

Honzik

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous and @Anonymous ,

you might be interested in an approach that runs the full dataset in seconds instead of minutes:

let

fnIdx =

(myTable as table) =>

let

partition = Table.Buffer(myTable),

dates = AllDatesWthIndex,

#"Merged Queries" = Table.NestedJoin(partition, {"Date"}, dates, {"Date"}, "Changed Type", JoinKind.LeftOuter),

#"Expanded Changed Type" = Table.ExpandTableColumn(#"Merged Queries", "Changed Type", {"Index"}, {"Index"}),

Custom1 = Table.ToColumns(#"Expanded Changed Type"),

IndexedTable = Table.Buffer( Table.FromColumns(Custom1 & { {null} & List.RemoveLastN(List.Last(Custom1),1) }, {"ID", "Date", "Index", "PrevIndex"}) ),

Initial = IndexedTable{0} & [Counter = 0, Idx = 1],

Custom3 = List.Generate( ()=> Initial,

each [Counter] < Table.RowCount(IndexedTable),

each [ Counter = [Counter] + 1,

Idx = if IndexedTable[Index]{Counter} - IndexedTable[PrevIndex]{Counter} = 1 then [Idx] + 1 else 1

]),

#"Converted to Table" = Table.FromList(Custom3, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Expanded Column1" = Table.ExpandRecordColumn(#"Converted to Table", "Column1", {"Idx"}, {"Idx"})[Idx],

Custom2 = Table.FromColumns( Table.ToColumns( IndexedTable ) & { #"Expanded Column1" })

in

Custom2,

Origine = dataSample,

Selection = Table.FirstN(Origine,CountRecordsImke),

AllDates=Table.Buffer( Table.Distinct(Table.SelectColumns(Selection, {"Date"})) ),

AllDatesWthIndex = Table.AddIndexColumn(AllDates, "Index", 0, 1, Int64.Type),

AllProducts = Origine,

#"Grouped Rows" = Table.Group(Selection, {"ProductID"}, {{"ProductPartition", each _}}),

#"Added Custom" = Table.AddColumn(#"Grouped Rows", "Custom", each fnIdx([ProductPartition])),

#"Expanded Custom" = Table.ExpandTableColumn(#"Added Custom", "Custom", {"Column2", "Column5"}, {"Date", "Index"}),

#"Removed Columns" = Table.RemoveColumns(#"Expanded Custom",{"ProductPartition"}),

#"Changed Type" = Table.TransformColumnTypes(#"Removed Columns",{{"Date", type date}, {"Index", Int64.Type}, {"ProductID", type text}})

in

#"Changed Type"

It runs the logic on the level of the individual ProductIDs like I've described here: Performance Tip: Partition your tables at crossjoins where possible - PowerQuery PowerBI – The BIccountant

and uses a shifted index method which also makes comparisons very fast: Fast and easy way to reference previous or next rows in Power Query or Power BI – The BIccountant

Power Query doesn't have to be slow if you do it the right way. I've collected some tipps and tricks to improve performance here: Speed/Performance aspects – The BIccountant

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @ImkeF,

thank you very much for your solution. I'll go through it so I can better undestand the concept but looks like great approach to it! One more question - could you please give me a hint how to run it using all columns (including Warehouse) so the warehouse values remain there when it's finished? (Dateset including that column is here https://drive.google.com/file/d/16ooEjU8S-T2dPVic9cf3QvntIpUGl41a/view?usp=sharing.

Thanks a lot!

Honzik

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

try this using small tables initially and check run times. if it works for you with small tables and doesn't take too long, increase the size of the tables as you go.

let

Origine = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WclTSUTLTM9QzMjAyUIrViVZyQhdwRhcAabFE12KJrgVDhaEhuiGGJhhqTNCNQRVxwRABm2OKoQshEgsA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [prid = _t, date = _t]),

d=Origine[date],

all = Table.Group(Origine, {"date"}, {{"all", each _[prid]}})[all],

allprid=List.Union(all),

nprid=List.Count(allprid),

rec0=Record.FromList(List.Repeat({0},nprid),allprid),

lg=List.Generate(

()=>rec0&[n=0],

each [n]<=List.Count(all),

each Record.TransformFields(_, List.Transform(allprid, (p)=> {p, (x)=>if List.Contains(all{[n]},p) then x+1 else 0}))&[n=[n]+1],

each Record.ToTable(Record.RemoveFields(_,"n"))

),

tc=Table.Combine(lg),

#"Filtrate righe" = Table.SelectRows(tc, each ([Value] <> 0)),

ttc=Table.FromColumns({d}&Table.ToColumns(#"Filtrate righe"),{"date","grpid","idx"})

in

ttc

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi again,

thank you very much for your help. I used the last script that you posted and it worked exactly as I need to. However, when I run it through larger dataset (15 K rows with around 1 K distinct Product IDs and 220 distinct dates) it took hours to process and unfortunately my laptop got frozen so I'll try again and leave it over night. In total, I need it to get through around 60 K rows. If it takes long but works out it's fine if I manage to set up refresh so it only goes through new data that would appear monthly (the data source is a folder where xls. files are put every month with more or less 1,5 K rows every time). Once again, thank you sooo much for your effort! I'd not be able to come up with anything close to this myself.

Honzik

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

let

Origine = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WclTSUTLTM9QzMjAyUIrViVZyQhdwRhcAabFE12KJrgVDhaEhuiGGJhhqTNCNQRUB6zLFUIMQiQUA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [prid = _t, date = _t]),

#"Raggruppate righe1" = Table.Group(Origine, {"date"}, {{"all", each _[prid]}}),

#"Rimosse altre colonne" = Table.SelectColumns(#"Raggruppate righe1",{"all"}),

all = #"Rimosse altre colonne"[all],

lg=List.Generate(

()=>[A=0,B=0,C=0,n=0],

each [n]<=List.Count(all),

each [A=if List.Contains(all{[n]},"A")then [A]+1 else 0,

B=if List.Contains(all{[n]},"B")then [B]+1 else 0,

C=if List.Contains(all{[n]},"C")then [C]+1 else 0,

n=[n]+1],

each Table.Transpose(Table.DemoteHeaders(Table.FromRecords({_[[A],[B],[C]]}))

)),

tc=Table.Combine(lg),

#"Filtrate righe" = Table.SelectRows(tc, each ([Column2] <> 0))

in

#"Filtrate righe"

Try using a pattern like this. it's tangled (and for this reason I suggest you toi test carefully the script), but I can't find anything simpler and more direct for the moment.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

thank you very much for an advice! Could you please add a little bit of information how to use the code, so the extra runing count column is created? Unfortunately I don't know how to use it properly so it doesn't work at the moment. I'm trying to find out the way myself using various tutorials but without success.

Also, the real dataset contains 10K+ of rows with hundreds of distinct product IDs which are all 14-digit numbers. On top of that, these IDs are added new every month so I need the solution to be flexible whenever there's a change. Do you think I'd be able to use your code if implemented correctly? I'm sorry I didn't mention that earlier but since I don't have much experience I supposed it was not that important but now I guess it probably is. Once again thanks a lot.

Honzik

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

try these versions, should perfom better (and the second better than first)

let

Origine = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WclTSUTLTM9QzMjAyUIrViVZyQhdwRhcAabFE12KJrgVDhaEhuiGGJhhqTNCNQRVxwRABm2OKoQshEgsA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [prid = _t, date = _t]),

d=Origine[date],

all = List.Buffer(Table.Group(Origine, {"date"}, {{"all", each _[prid]}})[all]),

nall=List.Count(all),

allprid=List.Buffer(List.Union(all)),

nprid=List.Count(allprid),

rec0=Record.FromList(List.Repeat({0},nprid),allprid),

lg=List.Generate(

()=>rec0&[n=0],

each [n]<=nall,

each Record.TransformFields(_, List.Transform(allprid, (p)=> {p, (x)=>if List.Contains(all{[n]},p) then x+1 else 0}))&[n=[n]+1],

each Record.ToTable(Record.RemoveFields(_,"n"))

),

tc=Table.Combine(lg),

#"Filtrate righe" = Table.SelectRows(tc, each ([Value] <> 0)),

ttc=Table.FromColumns({d}&Table.ToColumns(#"Filtrate righe"),{"date","grpid","idx"})

in

ttc

let

Origine = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WclTSUTLTM9QzMjAyUIrViVZyQhdwRhcAabFE12KJrgVDhaEhuiGGJhhqTNCNQRVxwRABm2OKoQshEgsA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [prid = _t, date = _t]),

d=Origine[date],

all = List.Buffer(Table.Group(Origine, {"date"}, {{"all", each _[prid]}})[all]),

nall=List.Count(all),

allprid=List.Buffer(List.Union(all)),

nprid=List.Count(allprid),

rec0=Record.FromList(List.Repeat({0},nprid),allprid),

lg=List.Generate(

()=>rec0&[n=0],

each [n]<=nall,

each Record.TransformFields(_, List.Transform(allprid, (p)=> {p, (x)=>if List.Contains(all{[n]},p) then x+1 else 0}))&[n=[n]+1]

),

lglb=List.Buffer(lg),

tc=Table.Combine(List.Transform(lglb, each Record.ToTable(_))),

#"Filtrate righe" = Table.SelectRows(tc, each ([Value] <> 0 and [Name]<>"n")),

ttc=Table.FromColumns({d}&Table.ToColumns(#"Filtrate righe"),{"date","grpid","idx"})

in

ttcPS

I came up with a different idea on how to do it, but I need time to implement it. In this case without using the list.generate function which works one record at a time and therefore could be much faster.

PPS

If you can upload a table with your productIDs and dates, I can do some tests. I don't think publishing productIDs and a list of dates is a problem (since they are just numeric codes), is it? eventually you can also "encode" them and dates by removing or adding something to all.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

this is the link where I uploaded the dataset - https://drive.google.com/file/d/16ooEjU8S-T2dPVic9cf3QvntIpUGl41a/view?usp=sharing There's one more column showing Warehouse ID I was asked to add but there's no need to calculate with that value - it's just supposed to be displayed in the final table. I'm slowly starting to understand how some of the M code works but still struggling to do this ony my own.

I have not tried the last script you posted but the one before worked though it took hours for my laptop to process more data. Once again big thanks for everything, you've already helped a lot.

Honzik

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You're genius!

And hopefully the last thing - how do I add back the warehouse column please? The way I meant it was that it has to remain in the final table. I suppose there's no need for any big change in your script but I'm unable to figure out how to run it with original 3-column table (ProductID, Date, Warehouse). The ProductID-Date-Warehouse combination is supposed to be the same as in the raw table. Is there any easy way how to modify it so it remains there? Sorry that I did't explain in clearly in the last reply. I already feel bad for not being able to make that change on my own and bothering you with all that but my guess is it should not be anything too hardt though my skills are currently insufficient.

Thank you!

Honzik

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Anonymous

exaggerated. Try this one should be even faster than my last one.

I don't know if faster than @ImkeF 's, but this doesn't use List.generate, and you caùn execute all the steps (except the one who does the grouping) with the UI

You have also the extra column [Warehouse]

let

Origine = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WclTSUTLTM9QzMjAyUIrViVZyQhdwRhcAabFE12KJrgVFwAWbFkNDdFMNTTDUmKCbiyoC1mWKocYU3XIkkVgA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [prid = _t, date = _t]),

#"Modificato tipo" = Table.TransformColumnTypes(Origine,{{"date", type date}}),

#"Raggruppate righe" = Table.Group(#"Modificato tipo", {"date"}, {{"all", each _, type table [prid=nullable text, date=nullable text]}}),

#"Aggiunta colonna indice" = Table.AddIndexColumn(#"Raggruppate righe", "Indice", 1, 1, Int64.Type),

#"Tabella all espansa" = Table.ExpandTableColumn(#"Aggiunta colonna indice", "all", {"prid"}, {"prid"}),

#"Ordinate righe" = Table.Sort(#"Tabella all espansa",{{"prid", Order.Ascending}, {"Indice", Order.Ascending}}),

#"Aggiunta colonna indice1" = Table.AddIndexColumn(#"Ordinate righe", "dist", 0, 1, Int64.Type),

#"Raggruppate righe1" = Table.Group(#"Aggiunta colonna indice1", "prid", {"ccc",each Table.Group(_, {"Indice","dist"}, {{"all", (c)=> Table.AddIndexColumn(c,"counter",1,1)}},GroupKind.Local,(x,y)=>Number.From(y[Indice]-x[Indice]>y[dist]))}),

#"Tabella ccc espansa1" = Table.ExpandTableColumn(#"Raggruppate righe1", "ccc", {"Indice", "dist", "all"}, {"Indice", "dist", "all"}),

#"Tabella all espansa1" = Table.ExpandTableColumn(#"Tabella ccc espansa1", "all", {"date", "counter"}, {"date", "counter"}),

#"Ordinate righe1" = Table.Sort(#"Tabella all espansa1",{{"date", Order.Ascending}}),

#"Rimosse colonne" = Table.RemoveColumns(#"Ordinate righe1",{"Indice", "dist"}),

#"Riordinate colonne" = Table.ReorderColumns(#"Rimosse colonne",{"date", "prid", "counter"})

in

#"Riordinate colonne"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

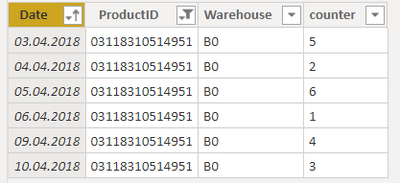

Hi @Anonymous,

Thank you for that. I tested it on my own but for some reason the last script (meaning the one in "ABCricomincia3b.pbix" file and teble including Warehouse column). I randomly picked ProductID and usualy the result was this:

I might have done something wrong but the snapshot was taken from the file unchanged (mentioned above). Idk what exactly caused that but for thos moment I'll use the solution by @ImkeF. Thank you for all the help you provided, I appreciate it a lot.

Honzik

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The problem seems to be due to this expression, which should be equivalent to the second, but for some strange reason it doesn't work. Try now. I'm curious to know if you can measure the execution speed.

= Table.Sort(#"Tabella all espansa",{{"ProductID", Order.Ascending}, {"Indice", Order.Ascending}})

= Table.Sort(#"Tabella all espansa",{{"ProductID", Order.Ascending}, {"Date", Order.Ascending}})

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous,

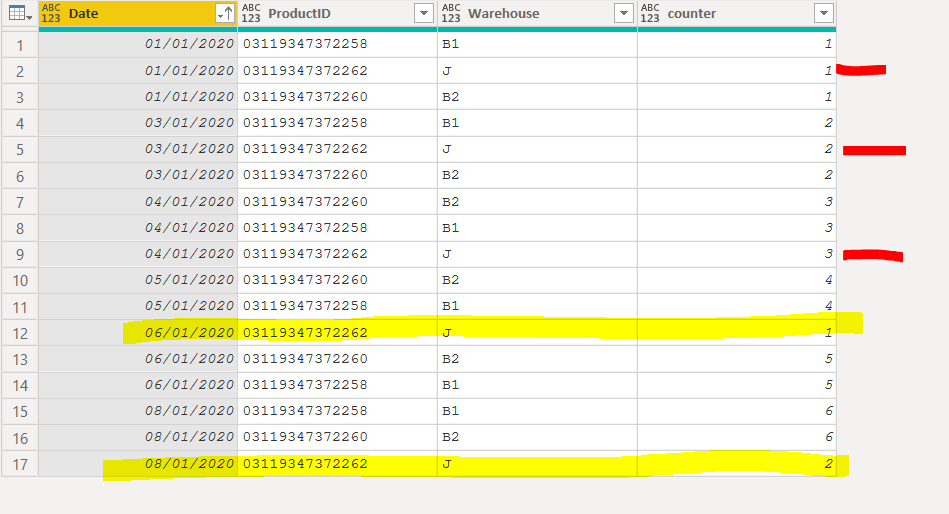

thank you for the update. The previous issue was solved and it runs very fast. I ran it through small sample of data to test it if it starts counting over when ProductID value is missing the previous present Date and unfortunately it did not work out as expected (see below).

This is the sample a ran it through, just 17 rows (https://drive.google.com/file/d/1ctZSZHt8R8M37I5aaQmAii5leVQrMOW0/view?usp=sharing)

Thanks

Honzik

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

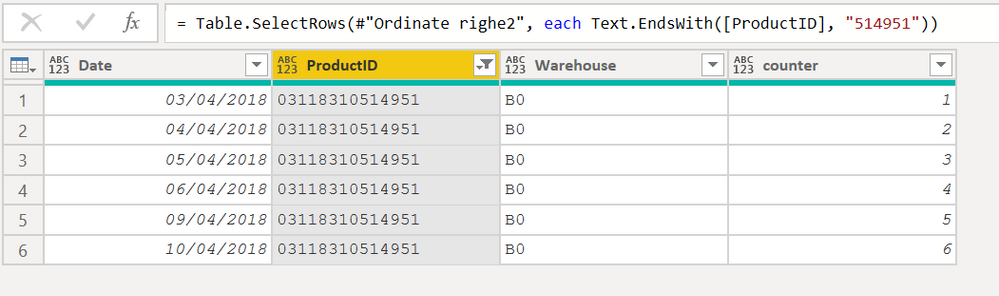

Hi @Anonymous .

First of all, I congratulate you for being an excellent tester. But then I tell you that I do not agree on the expected result that you indicate on the demo_real file. I am attaching a screen with the result that, from your previous descriptions, I would expect. I have corrected the script and am always curious to know how long it takes to work your complete file.

The script assumes that the data are sorted by date, otherwise you have to add a statement to that effect at the beginning.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you so much for the info, I didn't see that was the issue. Now everything seems to work perfectly and smoothly. It took just 1 or 2 sec tu run through all the data which means the speed is way more than good enough. It is also not complicated so that's awesome for someone who deesn't have much experience with M-code. Once again, thank you fo all your help!

Honzik

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

change the statement making the groupings is like the following:

= Table.Group(#"Ordinate righe", "ProductID", {"ccc",each Table.Group(Table.AddIndexColumn(_,"dist",0,1), {"Indice","dist"}, {{"all", (c)=> Table.AddIndexColumn(c,"counter",1,1)}},GroupKind.Local,(x,y)=>Number.From(y[Indice]-x[Indice]>y[dist]-x[dist]))})

I am glad that you find it easy to follow myr solution. I assure you that from the conceptual point of view (not the strictly implementation one) it is not one of the simplest.

When I have time I try to illustrate in detail the idea behind it.

In this way you will be able to submit it to a more stringent and meaningful check.😁

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Helpful resources

Power BI Monthly Update - November 2025

Check out the November 2025 Power BI update to learn about new features.

Fabric Data Days

Advance your Data & AI career with 50 days of live learning, contests, hands-on challenges, study groups & certifications and more!

| User | Count |

|---|---|

| 12 | |

| 7 | |

| 5 | |

| 5 | |

| 3 |