Join us at FabCon Vienna from September 15-18, 2025

The ultimate Fabric, Power BI, SQL, and AI community-led learning event. Save €200 with code FABCOMM.

Get registered- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Compete to become Power BI Data Viz World Champion! First round ends August 18th. Get started.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Replace NULL with weighted average of previous val...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Replace NULL with weighted average of previous values

My data looks like this:

Analysis Quality Tonnes Date Value Element 207996 H150 1228 2019-06-06 NULL 0,045 207996 H150 1228 2019-06-06 NULL 0,02 207979 H400 1474 2019-06-06 6,84 Vann 207979 H400 1474 2019-06-06 62,2 Fe-tot 207979 H400 1474 2019-06-06 0,52 Fe-Mag 207979 H400 1474 2019-06-06 0,03 Svovel 207979 H400 1474 2019-06-06 NULL P 207979 H400 1474 2019-06-06 5,02 SiO2 207979 H400 1474 2019-06-06 0,28 MnO 207979 H400 1474 2019-06-06 NULL Al2O3 207979 H400 1474 2019-06-06 NULL CaO 207979 H400 1474 2019-06-06 NULL 1,19 207979 H400 1474 2019-06-06 NULL 0,841 207979 H400 1474 2019-06-06 94,4 0,425 207979 H400 1474 2019-06-06 54,2 0,212 207979 H400 1474 2019-06-06 NULL 0,125 207979 H400 1474 2019-06-06 15,5 0,106

Several times a day new rows are added with new Analysis ID, where there are NULL values we want to replace them with the weighted average of the two previous measurements for that element/quality, where do i begin?

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous,

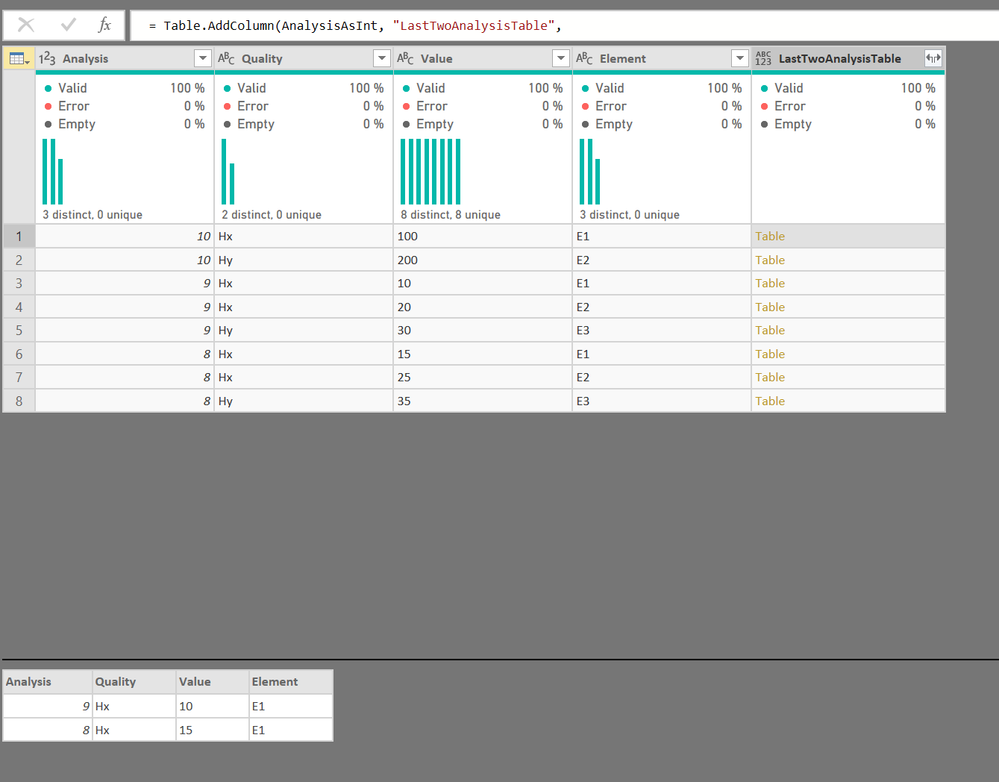

the following code creates a new column which contains at most 2 last analysis where the quality and the element are the same. It should help you, however as @v-juanli-msft said we can't help you solve your problem till the end because of missing logic for calculating weighted avg.

What the code does: For every row, create a table which has the same quality and element values, and which analysis is smaller than the one of current row, sort this new table by analysis column and take up to 2 first rows.

The next step is calculating your weighted avg of this new table in the column LastTwoAnalysisTable.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjRQ0lHyqAAShgYgpquhUqwOTLgSSBhBhI3AwpYIxQi1cEEjdJUg/cZgQWOwoAVcuylCO1zQyBSh3QKu3RSqPRYA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Analysis = _t, Quality = _t, Value = _t, Element = _t]),

AnalysisAsInt = Table.TransformColumnTypes(Source,{{"Analysis", Int64.Type}}),

LastTwoAnalysis = Table.AddColumn(AnalysisAsInt, "LastTwoAnalysisTable",

(parentRow as record) =>

Table.FirstN(

Table.Sort(

Table.SelectRows(AnalysisAsInt,

(childRow as record) =>

childRow[Quality] = parentRow[Quality]

and

childRow[Element] = parentRow[Element]

and

childRow[Analysis] < parentRow[Analysis]

),

{{"Analysis", Order.Descending}}

),

2

)

)

in

LastTwoAnalysisUpdate: Added a screenshot.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous

I'm not clear how to create the weighted average of the two previous measurements for that element/quality.

Which is the weighted factor?

Could you show me expected value with null replaced based on your example data?

| index | Analysis | Quality | Tonnes | Date | Value | Element | expected value with null replaced |

| 1 | 207996 | H150 | 1228 | 6/6/2019 | NULL | 0,045 | ? |

| 2 | 207996 | H150 | 1228 | 6/6/2019 | NULL | 0,02 | |

| 3 | 207979 | H400 | 1474 | 6/6/2019 | 6,84 | Vann | |

| 4 | 207979 | H400 | 1474 | 6/6/2019 | 62,2 | Fe-tot | |

| 5 | 207979 | H400 | 1474 | 6/6/2019 | 0,52 | Fe-Mag | |

| 6 | 207979 | H400 | 1474 | 6/6/2019 | 0,03 | Svovel | |

| 7 | 207979 | H400 | 1474 | 6/6/2019 | NULL | P | |

| 8 | 207979 | H400 | 1474 | 6/6/2019 | 5,02 | SiO2 | |

| 9 | 207979 | H400 | 1474 | 6/6/2019 | 0,28 | MnO | |

| 10 | 207979 | H400 | 1474 | 6/6/2019 | NULL | Al2O3 | |

| 11 | 207979 | H400 | 1474 | 6/6/2019 | NULL | CaO | |

| 12 | 207979 | H400 | 1474 | 6/6/2019 | NULL | 1,19 | |

| 13 | 207979 | H400 | 1474 | 6/6/2019 | NULL | 0,841 | |

| 14 | 207979 | H400 | 1474 | 6/6/2019 | 94,4 | 0,425 | |

| 15 | 207979 | H400 | 1474 | 6/6/2019 | 54,2 | 0,212 | |

| 16 | 207979 | H400 | 1474 | 6/6/2019 | NULL | 0,125 | |

| 17 | 207979 | H400 | 1474 | 6/6/2019 | 15,5 | 0,106 |

Best Regards

Maggie

Community Support Team _ Maggie Li

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here are the values i expect to see, the value should be replaced by the average of the two last analysis for that element weighted by tonnes.

My posted table was a horrible example as it does not contain enough data to demonstrate. Sorry about that. I have altered it so that the bold ones are the matches for the first row.

So the formula for the first row should read

(62.2*1475) + (61.2*1234) / (1475+1234) = 61.744

| Index | Analysis | Quality | Tonnes | Date | Value | Element | Expexted value |

| 1 | 207996 | H150 | 1228 | 6/6/2019 | NULL | Fe-tot | 61.774 |

| 2 | 207996 | H150 | 1228 | 6/6/2019 | NULL | 0,02 | |

| 3 | 207979 | H400 | 1474 | 6/6/2019 | 6,84 | Vann | |

| 4 | 207976 | H150 | 1475 | 6/6/2019 | 62,2 | Fe-tot | |

| 5 | 207979 | H400 | 1474 | 6/6/2019 | 0,52 | Fe-Mag | |

| 6 | 207977 | H150 | 1234 | 6/6/2019 | 61.2 | Fe-tot | |

| 7 | 207979 | H400 | 1474 | 6/6/2019 | NULL | P |

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous,

the following code creates a new column which contains at most 2 last analysis where the quality and the element are the same. It should help you, however as @v-juanli-msft said we can't help you solve your problem till the end because of missing logic for calculating weighted avg.

What the code does: For every row, create a table which has the same quality and element values, and which analysis is smaller than the one of current row, sort this new table by analysis column and take up to 2 first rows.

The next step is calculating your weighted avg of this new table in the column LastTwoAnalysisTable.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjRQ0lHyqAAShgYgpquhUqwOTLgSSBhBhI3AwpYIxQi1cEEjdJUg/cZgQWOwoAVcuylCO1zQyBSh3QKu3RSqPRYA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Analysis = _t, Quality = _t, Value = _t, Element = _t]),

AnalysisAsInt = Table.TransformColumnTypes(Source,{{"Analysis", Int64.Type}}),

LastTwoAnalysis = Table.AddColumn(AnalysisAsInt, "LastTwoAnalysisTable",

(parentRow as record) =>

Table.FirstN(

Table.Sort(

Table.SelectRows(AnalysisAsInt,

(childRow as record) =>

childRow[Quality] = parentRow[Quality]

and

childRow[Element] = parentRow[Element]

and

childRow[Analysis] < parentRow[Analysis]

),

{{"Analysis", Order.Descending}}

),

2

)

)

in

LastTwoAnalysisUpdate: Added a screenshot.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Wow, thank you so much for that! It really helped me a lot. There was a couple of pitfalls that i discovered during testing. Both the table source and the searched table needed to be sorted the same way, or else i would get values from other elements. And i needed to avoid finding null values so i added another clause to the sorting.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjRQ0lHyqAAShgYgpquhUqwOTLgSSBhBhI3AwpYIxQi1cEEjdJUg/cZgQWOwoAVcuylCO1zQyBSh3QKu3RSqPRYA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Analysis = _t, Quality = _t, Value = _t, Element = _t]),

AnalysisAsInt = Table.TransformColumnTypes(Source,{{"Analysis", Int64.Type}}),

Sorting = Table.Sort(AnalysisAsInt, , {{"Analysis", Order.Descending}, {"Element", Order.Ascending}})

LastTwoAnalysis = Table.AddColumn(Sorting , "LastTwoAnalysisTable",

(parentRow as record) =>

Table.FirstN(

Table.Sort(

Table.SelectRows(Sorting ,

(childRow as record) =>

childRow[Quality] = parentRow[Quality]

and

childRow[Element] = parentRow[Element]

and

childRow[Analysis] < parentRow[Analysis]

and

childRow[Value] <> null

),

{{"Analysis", Order.Descending}, {"Element", Order.Ascending}}

),

2

)

)

in

LastTwoAnalysisNow i just need to figure out how to use those values because just expanding the table doubled all the rows.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Wow, thank you so much. There was a few pitfalls i discovered while testing it. I had to add Elements to the sorting order and also sort my input data in the exact same way or else i would get the two previous values from another element.

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjRQ0lHyqAAShgYgpquhUqwOTLgSSBhBhI3AwpYIxQi1cEEjdJUg/cZgQWOwoAVcuylCO1zQyBSh3QKu3RSqPRYA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type text) meta [Serialized.Text = true]) in type table [Analysis = _t, Quality = _t, Value = _t, Element = _t]),

AnalysisAsInt = Table.TransformColumnTypes(Source,{{"Analysis", Int64.Type}}),

Sorted = Table.Sort(AnalysisAsInt, {{"Analysis", Order.Descending},{"Element", Order.Ascending}}

LastTwoAnalysis = Table.AddColumn(AnalysisAsInt, "LastTwoAnalysisTable",

(parentRow as record) =>

Table.FirstN(

Table.Sort(

Table.SelectRows(AnalysisAsInt,

(childRow as record) =>

childRow[Quality] = parentRow[Quality]

and

childRow[Element] = parentRow[Element]

and

childRow[Analysis] < parentRow[Analysis]

),

{{"Analysis", Order.Descending},{"Element", Order.Ascending}}

),

2

)

)

in

LastTwoAnalysisHow do i get the values so that i can do calculations on them before returning them?