Remove duplicates selectively based on priority order

Finally gave up looking for examples to learn from, and decided to post my first question to the community.

Hello, I have a table similar to this:

| Row# | Col A | Col B |
|---|---|---|
| 1 | A1 | B1 |
| 2 | A1 | B2 |
| 3 | A1 | B3 |
| 4 | A2 | B2 |
| 5 | A2 | B3 |
| 6 | A3 | B1 |
| 7 | A3 | B3 |

I would like to remove duplicates on Col A such that whenever Col B = B1, I keep that row. So among rows 1, 2 and 3, I would like to keep row 1. Between rows 6 and 7, I would keep row 6.

When Col A has duplicates and none of them has B1 (a specific value) in Col B, I don't care which row I keep as long as I keep only one. So between rows 4 and 5, I could keep either; it doesn't matter.

My resulting table should look like this:

| Row# | Col A | Col B |
|---|---|---|
| 1 | A1 | B1 |
| 4 | A2 | B2 |
| 6 | A3 | B1 |

OR

| Row# | Col A | Col B |
|---|---|---|
| 1 | A1 | B1 |
| 5 | A2 | B3 |
| 6 | A3 | B1 |

(As I said above, rows 4 and 5 mean the same to me.)

Can anyone help out please?

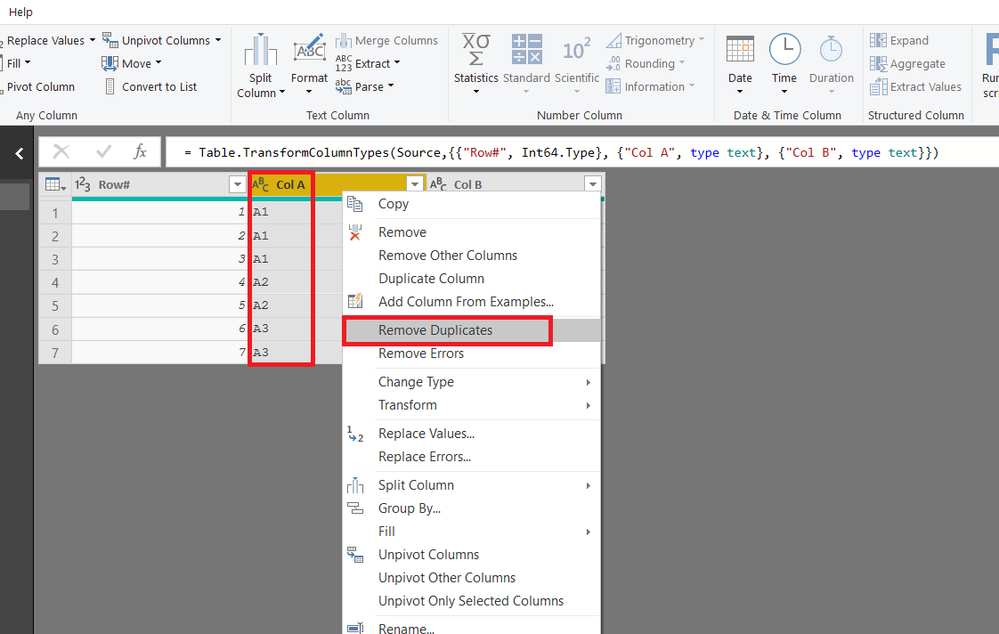

If your data is sorted as in your sample data, i.e. B1 is the first row for each unique Col A item, you simply have to select Col A, right-click, and choose Remove Duplicates.

Regards

Zubair

Thanks Zubair. Sorry I didn't specify that B1 in my data could appear on any row -- not necessarily on the first row all the time. That makes the remove duplicates function not suitable for my requirement.

I got help from a colleague and ended up learning a bit of M to get the job done. My code looks something like this:

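    let
        Source = Excel.CurrentWorkbook(){[Name="Table1"]}[Content],
        // One row per Col A value among the rows where Col B is "B1"
        #"B1 rows" = Table.Distinct(Table.SelectRows(Source, each [Col B] = "B1"), {"Col A"}),
        // Col A values that already have a B1 row
        #"A1 list" = Table.Column(#"B1 rows", "Col A"),
        // One row per remaining Col A value, i.e. those with no B1 row
        #"Non B1 Rows" = Table.Distinct(Table.SelectRows(Source, each not List.Contains(#"A1 list", [Col A])), {"Col A"}),
        // Stack the two sets back together
        #"Appended Query" = Table.Combine({#"B1 rows", #"Non B1 Rows"})
    in
        #"Appended Query"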

The exception join (not List.Contains) is probably inefficient, because my query runs for 10 minutes or longer. But it gets the job done, and that's what I care about.
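For anyone hitting the same slowdown, here is a minimal sketch of a different approach (not the code used above) that avoids the List.Contains lookup by grouping on Col A and keeping the best-ranked row of each group. The inline sample table, the helper column name "Priority", and the step names are assumptions made for illustration. It has not been timed against the original query, so treat any speed-up as something to verify on your own data.

```powerquery
let
    // Sample data from the question, inlined so the query is self-contained
    Source = #table(
        {"Row#", "Col A", "Col B"},
        {
            {1, "A1", "B1"}, {2, "A1", "B2"}, {3, "A1", "B3"},
            {4, "A2", "B2"}, {5, "A2", "B3"},
            {6, "A3", "B1"}, {7, "A3", "B3"}
        }
    ),
    // Hypothetical helper column: 0 for the preferred value, 1 for anything else
    Ranked = Table.AddColumn(Source, "Priority", each if [Col B] = "B1" then 0 else 1, Int64.Type),
    // For each Col A value, keep the row with the lowest Priority (returned as a record)
    Grouped = Table.Group(Ranked, {"Col A"}, {{"Best", each Table.Min(_, "Priority"), type record}}),
    // Turn the kept records back into a table and drop the helper column
    Result = Table.RemoveColumns(Table.FromRecords(Grouped[Best]), {"Priority"})
in
    Result
```

On the sample data this should leave one row per Col A value: row 1 for A1, row 6 for A3, and either row 4 or row 5 for A2. It does not matter where the B1 row sits within its group. Note that Table.FromRecords does not carry over column types, so you may need to set them again afterwards.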
