FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Reading data from CSV files with different col...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Reading data from CSV files with different columns

I have a CSV file, when stripped down to the barest form, that looks like this:

WW33

Job,WW31,WW32,WW33

JobA,4,4,4

JobB,2,6,3

JobC,2,4,7

As you can probably guess, this is weekly data, with each Work Week (WW) as column heading. The last column is the most recent WW, and although data goes back only 3 weeks here, in my real data it goes back 12 weeks. So far so good. The next week (WW34 in this case), I will again get a similar file, but the oldest week column (WW31 in this case) eliminated, and the new week added. So, my WW34 and WW35 files would looks like this:

WW34

Job,WW32,WW33,WW34

JobA,4,4,3

JobB,6,3,4

JobC,4,7,8

WW35

Job,WW33,WW34,WW35

JobA,4,3,2

JobB,3,4,1

JobC,7,8,5

Do note that I have no control over the format of data and cannot be changed.

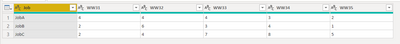

My goal is to set up a PowerBI project where it reads data from a certain folder, and whenever someone drops the new weeks' file, it reads data and combines them all into a single data table that should look like this:

Expected

Job,WW31,WW32,WW33,WW34,WW35

JobA,4,4,4,3,2

JobB,2,6,3,4,1

JobC,2,4,7,8,5

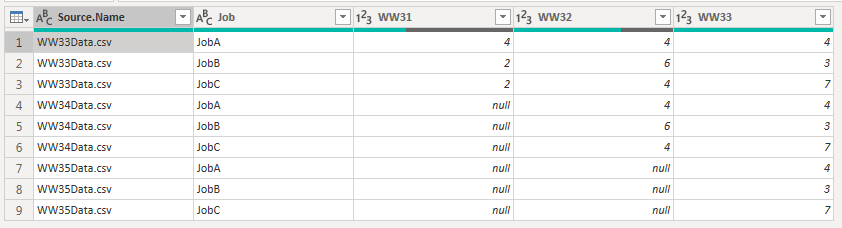

Simply put, it should have as many columns as there are weeks in all files combined, and it shouldn't have duplicate columns. I'm not quite sure how I can achieve this. If I simply set PowerBI to read data from a folder and do 'Combine and Transform', this is what I get, which obviously isn't what I want.

Is there a way to get and format data the way I want?

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

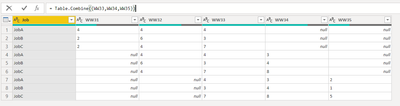

Yes, and it is actually very simple. Table.Combine will do exactly what you need without even complaining that you feed it such crappy (sorry, diverse) data.

And then you can decide how to handle the nulls, and how to add the source file name (do you actually need that?). if you want to get fancy you can build a list of files first, then import the first file, then fetch the columns of all subsequent files and only import the "new" (not already present) columns.

Otherwise just do a few simple transforms.

let

Source = Table.Combine({WW33,WW34,WW35}),

#"Unpivoted Other Columns" = Table.UnpivotOtherColumns(Source, {"Job"}, "Attribute", "Value"),

#"Removed Duplicates" = Table.Distinct(#"Unpivoted Other Columns"),

#"Pivoted Column" = Table.Pivot(#"Removed Duplicates", List.Distinct(#"Removed Duplicates"[Attribute]), "Attribute", "Value")

in

#"Pivoted Column"

to arrive at this.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

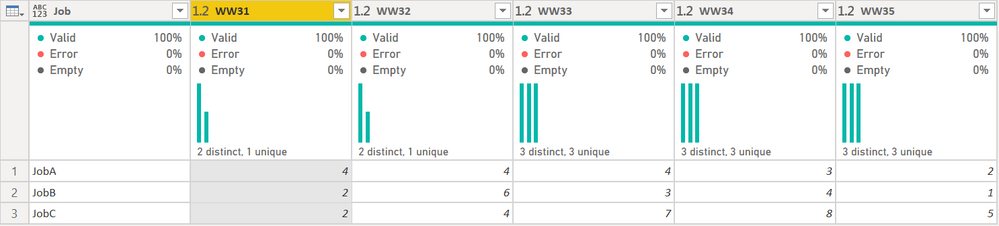

Hi @Anonymous ,

I copied your 3 examples to 3 text files, W33.txt, W34.txt, and W35.txt and I get this output:

This should work to infiity if you continue to have that same rolling 3 week pattern. This will blow up though if you have multiple files with the same week numbers but different values. Week 33 in 2020 vs Week 33 in 2021 for example. The column names would need to be different, or a lot more logic would need to go into this.

That said, here is the M code:

let

Source = Folder.Files("C:\Users\Ed Hansberry\OneDrive\Work Stuff\Power BI Forum Examples\Test Files\Weekly Data"),

#"Filtered Hidden Files1" = Table.SelectRows(Source, each [Attributes]?[Hidden]? <> true),

#"Invoke Custom Function1" = Table.AddColumn(#"Filtered Hidden Files1", "Transform File", each #"Transform File"([Content])),

#"Renamed Columns1" = Table.RenameColumns(#"Invoke Custom Function1", {"Name", "Source.Name"}),

#"Removed Other Columns1" = Table.SelectColumns(#"Renamed Columns1", {"Transform File"}),

AllColumnsNames =

Table.ColumnNames(

Table.Combine(#"Removed Other Columns1"[Transform File])

),

#"Expanded Transform File" = Table.ExpandTableColumn(#"Removed Other Columns1", "Transform File", AllColumnsNames, AllColumnsNames),

#"Unpivoted Other Columns" = Table.UnpivotOtherColumns(#"Expanded Transform File", {"Job"}, "Column", "Amount"),

#"Changed Type" = Table.TransformColumnTypes(#"Unpivoted Other Columns",{{"Amount", Int64.Type}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"Job", "Column"}, {{"Amount", each List.Average([Amount]), type nullable number}}),

#"Pivoted Column" = Table.Pivot(#"Grouped Rows", List.Distinct(#"Grouped Rows"[Column]), "Column", "Amount")

in

#"Pivoted Column"

This also assumes when you do the combine operation, the "Transform Sample File" step only has the promote headers step, not the changed type step. That will wreck it too.

I am going to share my PBIX file here because you need to see the full thing including the combine files magic Power Query does. I edited the Transform Sample File query to suit my needs.

The key to this is the AllColumnNames step, which just keeps getting a larger and larger list of columns as the weeks roll on. I guess the Table.ColumnNames function does a unique union for me. I was expecting to have to wrap it in List.Distinct but it wasn't necessary. Those column names are what are used later when the table expands, vs the normal hardcoded columns you get when you manually expand a table column.

Also, my first table has a lot of duplicates. I get rid of those in the Grouped Rows. That step assumes the values are the same. So the Week 33 file for Job b in week 33 has 3. As long as the week 34 and 35 files also have 4 for job B in week 33, you are good. If that changes, then this will not work. I am using MIN() to get that number. MAX() and AVERAGE() will all do the same thing. If you are expecting that to change, MIN/MAX/AVERAGE woulda all return different results obviously, and none of them "4". That would seem to be to be a source data issue, and we'd have to discuss how to handle that scenario.

Here is my PBIX file and the 3 text files, in a single zip.

You'll need to unzip, then change the source in Power Query to point to where those TXT files are.

How to use M code provided in a blank query:

1) In Power Query, select New Source, then Blank Query

2) On the Home ribbon, select "Advanced Editor" button

3) Remove everything you see, then paste the M code I've given you in that box.

4) Press Done

5) See this article if you need help using this M code in your model.

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

Is this problem solved?

If it is solved, please always accept the replies making sense as solution to your question so that people who may have the same question can get the solution directly.

If not, please let me know.

Best Regards,

Icey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

He has made two posts about this problem, and another post has been marked as solved. Here is the link:

Getting data from CSV files that have slightly different columns each week

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ugh. That is super frustrating @Anonymous

Please don't create duplicate posts. A lot of time was spent in this thread solving the issue. Please be more careful in the future.

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sorry about the confusing guys.

When I created this post, for some reason the community marked it as spam, and wasn't allowed to post. So I created the other post, and this didn't even show up on my profile for a while.

Thanks for the detailed answer, I'll go thorugh it! Really appreciate you guys help.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for that info @Anonymous - I will alert the mods on this. The spam detector may be a bit aggressive.

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

if w33, w34 and w45 are your tables, this makes the Job (A, B and so on ...) 😁

= let

Source = w35,

Cw35=Table.TransformRows(w35, each w33{[Job=_[Job]]}&w34{[Job=_[Job]]}&_)

in

Table.FromRecords(Cw35)

this is only a sketch of an idea tath coul be modified to manage more general similar situations

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Anonymous ,

I copied your 3 examples to 3 text files, W33.txt, W34.txt, and W35.txt and I get this output:

This should work to infiity if you continue to have that same rolling 3 week pattern. This will blow up though if you have multiple files with the same week numbers but different values. Week 33 in 2020 vs Week 33 in 2021 for example. The column names would need to be different, or a lot more logic would need to go into this.

That said, here is the M code:

let

Source = Folder.Files("C:\Users\Ed Hansberry\OneDrive\Work Stuff\Power BI Forum Examples\Test Files\Weekly Data"),

#"Filtered Hidden Files1" = Table.SelectRows(Source, each [Attributes]?[Hidden]? <> true),

#"Invoke Custom Function1" = Table.AddColumn(#"Filtered Hidden Files1", "Transform File", each #"Transform File"([Content])),

#"Renamed Columns1" = Table.RenameColumns(#"Invoke Custom Function1", {"Name", "Source.Name"}),

#"Removed Other Columns1" = Table.SelectColumns(#"Renamed Columns1", {"Transform File"}),

AllColumnsNames =

Table.ColumnNames(

Table.Combine(#"Removed Other Columns1"[Transform File])

),

#"Expanded Transform File" = Table.ExpandTableColumn(#"Removed Other Columns1", "Transform File", AllColumnsNames, AllColumnsNames),

#"Unpivoted Other Columns" = Table.UnpivotOtherColumns(#"Expanded Transform File", {"Job"}, "Column", "Amount"),

#"Changed Type" = Table.TransformColumnTypes(#"Unpivoted Other Columns",{{"Amount", Int64.Type}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"Job", "Column"}, {{"Amount", each List.Average([Amount]), type nullable number}}),

#"Pivoted Column" = Table.Pivot(#"Grouped Rows", List.Distinct(#"Grouped Rows"[Column]), "Column", "Amount")

in

#"Pivoted Column"

This also assumes when you do the combine operation, the "Transform Sample File" step only has the promote headers step, not the changed type step. That will wreck it too.

I am going to share my PBIX file here because you need to see the full thing including the combine files magic Power Query does. I edited the Transform Sample File query to suit my needs.

The key to this is the AllColumnNames step, which just keeps getting a larger and larger list of columns as the weeks roll on. I guess the Table.ColumnNames function does a unique union for me. I was expecting to have to wrap it in List.Distinct but it wasn't necessary. Those column names are what are used later when the table expands, vs the normal hardcoded columns you get when you manually expand a table column.

Also, my first table has a lot of duplicates. I get rid of those in the Grouped Rows. That step assumes the values are the same. So the Week 33 file for Job b in week 33 has 3. As long as the week 34 and 35 files also have 4 for job B in week 33, you are good. If that changes, then this will not work. I am using MIN() to get that number. MAX() and AVERAGE() will all do the same thing. If you are expecting that to change, MIN/MAX/AVERAGE woulda all return different results obviously, and none of them "4". That would seem to be to be a source data issue, and we'd have to discuss how to handle that scenario.

Here is my PBIX file and the 3 text files, in a single zip.

You'll need to unzip, then change the source in Power Query to point to where those TXT files are.

How to use M code provided in a blank query:

1) In Power Query, select New Source, then Blank Query

2) On the Home ribbon, select "Advanced Editor" button

3) Remove everything you see, then paste the M code I've given you in that box.

4) Press Done

5) See this article if you need help using this M code in your model.

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@edhans thank you! This is pretty detailed and the attached PowerBI file helped me a lot.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@edhans I do have a follow-up question here.

The original question that I posed contained a slightly simplified version of my actual CSV, and I think that might have been a mistake. In the original I said this is what my columns look like,

Job,WW31,WW32,WW33

But in reality this is what I really have

Type,Job,Unit,Entity,2020WW18,2020WW19,2020WW20,2020WW21,2020WW22,2020WW23,2020WW24,2020WW25,2020WW26,2020WW27,2020WW28,2020WW29,2020WW30,2020WW31

Type and Entity have useless data and in subsequent steps I want to remove those columns, but Job and Unit are important.

So I modified your M code to look like this, and it almost gets me home, but not quite.

let

Source = Folder.Files("MyPath"),

#"Filtered Hidden Files1" = Table.SelectRows(Source, each [Attributes]?[Hidden]? <> true),

#"Invoke Custom Function1" = Table.AddColumn(#"Filtered Hidden Files1", "Transform File", each #"Transform File"([Content])),

#"Renamed Columns1" = Table.RenameColumns(#"Invoke Custom Function1", {"Name", "Source.Name"}),

#"Removed Other Columns1" = Table.SelectColumns(#"Renamed Columns1", {"Transform File"}),

AllColumnsNames =

Table.ColumnNames(

Table.Combine(#"Removed Other Columns1"[Transform File])

),

#"Expanded Transform File" = Table.ExpandTableColumn(#"Removed Other Columns1", "Transform File", AllColumnsNames, AllColumnsNames),

#"Unpivoted Other Columns" = Table.UnpivotOtherColumns(#"Expanded Transform File", {"Type", "Job", "Unit", "Entity"}, "Column", "Amount"),

#"Changed Type" = Table.TransformColumnTypes(#"Unpivoted Other Columns",{{"Amount", Percentage.Type}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"Job", "Unit", "Column"}, {{"Amount", each List.Average([Amount]), type nullable number}}),

#"Pivoted Column" = Table.Pivot(#"Grouped Rows", List.Distinct(#"Grouped Rows"[Column]), "Column", "Amount")

in

#"Pivoted Column"

I thought this should work, but (assuming I only had one file), it only gives me 4 weeks worth of data (first 4 WW columns) when I have 13 WW columns, and ignores the rest. If I add the next week file, it'll give me 5 WW columns in total. I'm a bit stumped here since in the M code I can't find anything that instructs it to retrieve data from the first 4 WW.

A bit further investigation revlealed me that the problem is likely at the 'AllColumnsNames' steps, as it only shows the first 4 columns (again, assuming I have just the first file in the folder).

List

-----

Type

Job

Unit

Entity

2020WW18

2020WW19

2020WW20

2020WW21

How do I instruct it to grab all WW columns, not just the first 4?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Go back to the "Transform Sample File" query that Power Query made for you and look at the Source line. It may look something like this:

= Csv.Document(Parameter1,[Delimiter=" ", Columns=12, Encoding=1252, QuoteStyle=QuoteStyle.None])You want to remove that Columns=12 statement entirely. Yours probably says Columns=4. You don't need it. Just remove that parameter and it should dynamically get whatever you have.

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That did the trick, thank you so much!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Glad to help @Anonymous . It is an annoying little quirk in the CSV import function and I honestly don't know why it is there, other than to frustrate people. 😂

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I agree; it should be an Opt-In, not Opt-Out, as it is currently.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Great @Anonymous - post back (to either thread 😂) if you need more help with anything.

Did I answer your question? Mark my post as a solution!

Did my answers help arrive at a solution? Give it a kudos by clicking the Thumbs Up!

DAX is for Analysis. Power Query is for Data Modeling

Proud to be a Super User!

MCSA: BI Reporting- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, and it is actually very simple. Table.Combine will do exactly what you need without even complaining that you feed it such crappy (sorry, diverse) data.

And then you can decide how to handle the nulls, and how to add the source file name (do you actually need that?). if you want to get fancy you can build a list of files first, then import the first file, then fetch the columns of all subsequent files and only import the "new" (not already present) columns.

Otherwise just do a few simple transforms.

let

Source = Table.Combine({WW33,WW34,WW35}),

#"Unpivoted Other Columns" = Table.UnpivotOtherColumns(Source, {"Job"}, "Attribute", "Value"),

#"Removed Duplicates" = Table.Distinct(#"Unpivoted Other Columns"),

#"Pivoted Column" = Table.Pivot(#"Removed Duplicates", List.Distinct(#"Removed Duplicates"[Attribute]), "Attribute", "Value")

in

#"Pivoted Column"

to arrive at this.

Helpful resources

Power BI Dataviz World Championships

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now!

Power BI Monthly Update - November 2025

Check out the November 2025 Power BI update to learn about new features.

| User | Count |

|---|---|

| 10 | |

| 6 | |

| 5 | |

| 5 | |

| 2 |