Query Efficiency - Stack References or Duplicate?

Long time listener, first time caller.

Power Query RE: building predictive models. Context: I have a dataset (c.400k rows, 140 columns) which is curated elsewhere and ingested into PQ as a flat file.

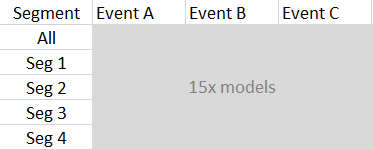

I'm building out 3x models for different events, and segmenting the dataset into say 4x customer segments = 12x models I'm looking to train (on the basis of sufficient data volume to do so and vastly improved AUC as the segments and events are distinct from one another). So it looks sort of like this:

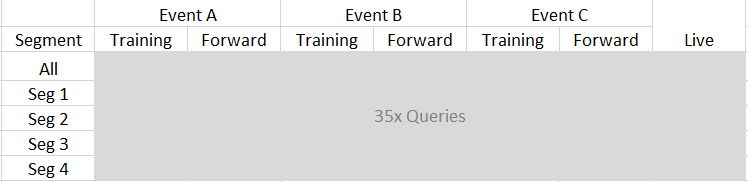

What I want to do here is (working around the limitations of the ML solution in calibrating specifics) force a specific block of data as "training" data (majority of data), create a custom hold out for forward testing (c.25000 rows), and then a third block of data for applying the model against just the latest month (i.e. <=3000 rows).
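A minimal sketch of that three-way cut in Power Query M, assuming the split is driven by a date column. The column names (`CustomerId`, `SnapshotDate`), the cut-off dates and the inline sample table are all hypothetical stand-ins, not taken from the actual dataset.

```powerquery
let
    // Hypothetical sample standing in for the real c.400k-row dataset
    Source = #table(
        type table [CustomerId = Int64.Type, SnapshotDate = date],
        {
            {1, #date(2025, 9, 30)},
            {2, #date(2025, 11, 30)},
            {3, #date(2025, 12, 31)},
            {4, #date(2026, 1, 31)}
        }
    ),

    // Assumed cut-offs: the only two values that would need changing each month
    HoldoutStart = #date(2025, 11, 1),
    LatestMonthStart = #date(2026, 1, 1),

    // Training block: everything before the hold-out window
    Training = Table.SelectRows(Source, each [SnapshotDate] < HoldoutStart),

    // Custom hold-out for forward testing
    Holdout = Table.SelectRows(
        Source,
        each [SnapshotDate] >= HoldoutStart and [SnapshotDate] < LatestMonthStart
    ),

    // Latest month only, for applying the trained model
    Scoring = Table.SelectRows(Source, each [SnapshotDate] >= LatestMonthStart),

    // Returned as a record purely so all three cuts can be inspected in one query
    Result = [Training = Training, Holdout = Holdout, Scoring = Scoring]
in
    Result
```

In practice each cut would probably live in its own query rather than in a single record, so that each can be fed to a model separately.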

So in reality my set of data cuts looks like this

I've created the "All" queries, which all reference the Base data, and each Seg is a subset of that.

So my question is: which is more process efficient?

- Duplicating the "All" queries, or

- Sub referencing the "All" queries

The benefit of sub referencing is that it would reduce the number of filters I need to change each month, as they will cascade through, so this is the most likely answer. But specifically, I'm not sure whether stacking references makes the queries inefficient to process, given the size of the raw dataset. My hope / assumption here is that when a sub query is called it essentially just "folds" the whole end-to-end chain, so it isn't in effect buffering the intermediary layer unnecessarily. I've had performance and run time issues so far on other queries using this dataset due to its size, hence optimising is relevant. Answers on a postcard.

Thanks in advance

Tom

Incidentally, I found out the hard way that trying to house all of this (models + data) within a single dataflow was a disaster, as essentially I was unable to alter the cut off for segments or events down the track and just had to live with how it had been set up to begin with, hence now breaking data and models into separate dataflows. Similarly, one area where I'm finding the Power BI ML solution not ideal is that I can't set up a dozen models to run overnight; I have to set them up one at a time and wait for each to stop refreshing, and there's no way to stop the refresh to let me queue them all up. Lastly, given it all originates from the same dataset, there's no clean and quick way to say "apply all models" to the final dataset so it's all in the one place. Overall, lots of faff and duplication which could probably be avoided if the user had the ability to configure refresh settings individually for each component of a dataflow.

Hi @TomDeas ,

To answer your main question: There's no difference between Referencing and Duplicating in terms of internal processing. There are some edge cases where Power Query/Dataflows will cache results, but it's safest to just assume at least one source hit per query regardless of whether duplicated or referenced.

As you rightly alluded to, the savings will be in your adjustment time. If you can create a core query that contains all generic transformations, then reference this for each subset of unique transformations, you will limit the number of areas that you need to make adjustments in.
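A minimal sketch of that layout, written as a Power Query M section document so the separate queries can be shown together. The file path, the column names (`SnapshotDate`, `Segment`), the query names and the cut-off value are all hypothetical; only the shape (one core query, then stacked references) reflects the approach described above.

```powerquery
section Section1;

// Core query: source load plus all generic transformations.
// The path and column names here are assumptions for illustration.
shared Base =
    let
        Raw = Csv.Document(
            File.Contents("C:\data\curated.csv"),
            [Delimiter = ",", Encoding = 65001]
        ),
        Promoted = Table.PromoteHeaders(Raw, [PromoteAllScalars = true]),
        Typed = Table.TransformColumnTypes(
            Promoted,
            {{"SnapshotDate", type date}, {"Segment", type text}}
        )
    in
        Typed;

// Single place to adjust the monthly cut-off (hypothetical value)
shared HoldoutStart = #date(2025, 11, 1);

// "All" query: references Base and applies the training filter once
shared AllTraining = Table.SelectRows(Base, each [SnapshotDate] < HoldoutStart);

// Segment query: references AllTraining, so the date filter cascades through
shared Seg1Training = Table.SelectRows(AllTraining, each [Segment] = "Seg1");
```

With this shape, changing `HoldoutStart` updates every downstream segment query. It does not by itself reduce source reads: as noted above, it is safest to assume each query still evaluates its full chain back to the source.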

Pete

Perfect, thanks Pete, that's absolutely it. I was keen to take that route (stacked references) as the queries were all ultimately subsets of one another, which helps ensure integrity of filtering later on, but I wasn't sure if I was walking into a processing trap, particularly as I've had some stability issues already.

I ran overnight on the basis of stacking references, and didn't encounter any issues. Perhaps my question should have been phrased "what's best practice here".

Thanks
