FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

To celebrate FabCon Vienna, we are offering 50% off select exams. Ends October 3rd. Request your discount now.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Preserve ID based on Max value of index

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Preserve ID based on Max value of index

Hi,

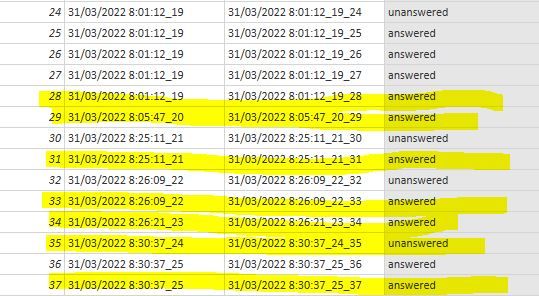

I've got this table that contains ID's of calls, where most of the calls have sub ID's. I'm looking for a solution where I can only preserve the ID's with the highest index, so I can see the end status of every call. So I only want the yellow ones ...

Is this possible?

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Since, you have already sorted Index column (if not sort on Index), See the working here - Open a blank query - Home - Advanced Editor - Remove everything from there and paste the below code to test

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("RY9BCoMwFETvkrWL6rSaLm21eoaKDD1E72/mJzCLgcfjE/KOIw331KVZY+A/nV2xD1vhr9rRdrSdbCfbbJttnwVfGgOrxa3gW2Ng/QN62963Q8FFY2C7hS18q6BVY2CzCvpoDGwvKGjTCLdBQbtGuA3Z1m1Q0FcjWtt5AQ==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Index = _t, ID1 = _t, ID2 = _t, Status = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Index", Int64.Type}, {"ID1", type text}, {"ID2", type text}, {"Status", type text}}),

#"Added Index" = Table.AddIndexColumn(#"Changed Type", "Index.1", 0, 1, Int64.Type),

#"Added Custom" = Table.AddColumn(#"Added Index", "Select", each try if [ID1]<>#"Added Index"[ID1]{[Index.1]+1} then "Y" else "N" otherwise "Y"),

#"Filtered Rows" = Table.SelectRows(#"Added Custom", each ([Select] = "Y")),

#"Removed Columns" = Table.RemoveColumns(#"Filtered Rows",{"Index.1", "Select"})

in

#"Removed Columns"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Since, you have already sorted Index column (if not sort on Index), See the working here - Open a blank query - Home - Advanced Editor - Remove everything from there and paste the below code to test

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("RY9BCoMwFETvkrWL6rSaLm21eoaKDD1E72/mJzCLgcfjE/KOIw331KVZY+A/nV2xD1vhr9rRdrSdbCfbbJttnwVfGgOrxa3gW2Ng/QN62963Q8FFY2C7hS18q6BVY2CzCvpoDGwvKGjTCLdBQbtGuA3Z1m1Q0FcjWtt5AQ==", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Index = _t, ID1 = _t, ID2 = _t, Status = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Index", Int64.Type}, {"ID1", type text}, {"ID2", type text}, {"Status", type text}}),

#"Added Index" = Table.AddIndexColumn(#"Changed Type", "Index.1", 0, 1, Int64.Type),

#"Added Custom" = Table.AddColumn(#"Added Index", "Select", each try if [ID1]<>#"Added Index"[ID1]{[Index.1]+1} then "Y" else "N" otherwise "Y"),

#"Filtered Rows" = Table.SelectRows(#"Added Custom", each ([Select] = "Y")),

#"Removed Columns" = Table.RemoveColumns(#"Filtered Rows",{"Index.1", "Select"})

in

#"Removed Columns"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

the solution work fine on my smalle testset, but it takes to much processing power when I try to apply this to the real dataset. After 1 hour of refreshing only 350 rowes were loaded. Without this code it takes less dan 1 minute ...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Below are 2 approaches tested on 1 million records. First approach based on using buffered list to filter took 160 seconds whereas second approach was based on @AlexisOlson 's approach of using Records (for Max) in the column. The second approach is faster and took 100 seconds for 1 million records.

Excel file (contains VBA code for timing queries) used for this testing with both approaches can be downloaded from - https://1drv.ms/x/s!Akd5y6ruJhvhuXoDyaB0q3-Gj7QA?e=m2SvxC

Edit - There is also an Inner Merge approach which is also quite fast. This is approach 3 below.

Approach 1

let

Source = Excel.CurrentWorkbook(){[Name="Table1"]}[Content],

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Index", Int64.Type}, {"ID1", type text}, {"ID2", type text}, {"Status", type text}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"ID1"}, {{"Max", each List.Max([Index]), type number}}),

List = List.Buffer(#"Grouped Rows"[Max]),

#"Filtered Rows" = Table.SelectRows(#"Changed Type", each (List.Contains(List,[Index])))

in

#"Filtered Rows"

Approach 2

let

Source = Excel.CurrentWorkbook(){[Name="Table1"]}[Content],

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Index", Int64.Type}, {"ID1", type text}, {"ID2", type text}, {"Status", type text}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"ID1"}, {{"Max", each List.Max([Index]), type nullable number}, {"All", each Table.Max(_, "Index"), type record}}),

#"Removed Columns" = Table.RemoveColumns(#"Grouped Rows",{"ID1", "Max"}),

#"Expanded All" = Table.ExpandRecordColumn(#"Removed Columns", "All", {"Index", "ID1", "ID2", "Status"}, {"Index", "ID1", "ID2", "Status"})

in

#"Expanded All"Approach 3

let

Source = Excel.CurrentWorkbook(){[Name="Table1"]}[Content],

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Index", Int64.Type}, {"ID1", type text}, {"ID2", type text}, {"Status", type text}}),

#"Grouped Rows" = Table.Group(#"Changed Type", {"ID1"}, {{"Max", each List.Max([Index]), type nullable number}}),

#"Merged Queries" = Table.NestedJoin(#"Changed Type", {"Index"}, #"Grouped Rows", {"Max"}, "Removed Columns", JoinKind.Inner),

#"Removed Columns1" = Table.RemoveColumns(#"Merged Queries",{"Removed Columns"})

in

#"Removed Columns1"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Vijay_A_Verma thnx

I can not open or download the excel file. I get the message the file is to big ....

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Download this zip file https://1drv.ms/u/s!Akd5y6ruJhvhuX4Rq3hq9KLVP3lH?e=R0gm7S

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yeah, using table lookup logic inside of custom columns tends to be highly inefficient when the table is very large.

I'd recommend trying the last couple of methods I describe in my blog post here:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content