FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Learn from the best! Meet the four finalists headed to the FINALS of the Power BI Dataviz World Championships! Register now

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Power Query engine performance issue

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Power Query engine performance issue

I had to issue 3 REST API calls (over a corporate fiber link on a Dell workstation with 64GB of RAM, and one of the latest Intel CPUs, with 3 SSDs, etc, so the horsepower should be there):

1_get the auth token

2_get 1,000 ID records

3_get the data linked to these IDs, which is a 1,000 x 96 dataset (rows x columns)

It imported very slowly. Looking at the diagnostis, the PQ engine seems to complete each API calls in ms, so it's got to be the engine processing all the ETL transformations that must be slow. To give you an idea, it takes almost 2 hours to ingest this dataset, and that's before clicking Close&Apply! What is puzzling, the total number of transformations is really small too, with a final pivot as the last step.

I am surprised that a 1,000 x 96 would incur such a performance penalty. It's scary to think what would happen if the dataset to ingest had 10s of 1,000, or 100s of 1,000 rows, for ex. Power Query would be running for more than a week!

It is a lot faster to ingest data from a DB than a REST API. So Power Automate to dump the raw data into a DB, then importing from that DB might be the speedier and preferred solution.

Nonetheless, does anyone have any idea why a 1,000 x 96 dataset would import this slowly from REST API calls that complete in ms?

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Without seeing your query code, it's a bit difficult to diagnose for sure, but given the size of the data, I'd recommend buffering the table after loading and before any transformations like pivoting. Scroll down to the Buffering section in this article for some more detail about the Table.Buffer function.

Other posts related to buffering:

https://community.powerbi.com/t5/Desktop/Using-Table-Buffer/td-p/1535407

@ImkeF has a nice list of various recommendations for improving query performance.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'd consider anything that can comfortably fit into your RAM to not be a large table.

As far as buffering, I suggest buffering right after the API stuff so that any further transformations don't attempt to trigger API calls again. You could do this at this step:

rt_table = Table.Buffer(Table.AddColumn(type_change0, "RT_DATA", each getRTData([handleID], token), type record)),

If this table fits in memory nicely, then any subsequent basic transformations should be pretty fast.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AlexisOlson - I am wary of this suggestion because Table.Buffer may not like nested Tables or Binary objects. I have seen this happen with Dataflows.

@Element115 - it will help to buffer before running the very expensive Table.Pivot function.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I glad it starting to help. One thing that can slow the performance is the API Throttling Limits. You should check how many send and receives you can make per second or minute.

There are two other things would try, but this will depend on whether original data and API data must fully updated each time.

- try an incremental load using the LastReportTime to avoid reload all records each week.

- if ID_External and Handle_ID are not unique then try running them in a distinct batch to avoid using the API to call the same data more than once.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Buffering loads the table to memory as it exists at that particular step. Deciding when and where to do this is more of an experimental art rather than an exact set of rules to follow, especially without a deep understanding of exactly how the query optimization engine works.

Just because you have some version of the table loaded into memory doesn't mean that there's never a need buffer again after that point. If you do expensive calculations or extensive transformations on a table, sometimes it's worth buffering those intermediate results before doing any further steps so that you have those calculations/transformations stored in a format that can be referenced efficiently.

A rather extreme example is this function I wrote here. As @ImkeF points out, after I've done some initial transformations to set up some chunks to loop through, buffering them to memory helps a lot since it's doing nested iterations on those chunks. Only buffering the initial input wouldn't be nearly as fast.

It's possible that buffering both before and after the pivot is the fastest but that's something that needs to be tested in your specific situation. Don't go too crazy with buffers though. Take them out anywhere they don't help.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Element115 -

For 1 - it depends, as @AlexisOlson says it is experimental. However there is one firm rule that you should follow. If you are connecting to foldable datasource like a database, don't buffer until after Query Folding breaks. Buffering at the very start would break folding and effectively load the entire table to temporary memory.

For 2 - Firstly, I believe Pivot is the more expensive transformation. Second, I would want Power Query to full complete all the steps before starting the Pivot transformation. Hence, my strategy would be to place it before Pivot. However, testing might show that there is very little impact from using either approach.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Without seeing your query code, it's a bit difficult to diagnose for sure, but given the size of the data, I'd recommend buffering the table after loading and before any transformations like pivoting. Scroll down to the Buffering section in this article for some more detail about the Table.Buffer function.

Other posts related to buffering:

https://community.powerbi.com/t5/Desktop/Using-Table-Buffer/td-p/1535407

@ImkeF has a nice list of various recommendations for improving query performance.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Alexis, I changed the code slightly by adding a Table.StopFolding right at the beginning:

let

remove_cols = Table.StopFolding(

Table.RemoveColumns(

#"Renamed Columns",

{

"ID",

...

}

)

),

...

in

query

and at the end of the M srcipt, where I used to have one Table.Buffer call, I wrapped to steps in Table.Buffer with options = BufferMode.Eager, and this accelerated the execution of the M code quite a bit--no more waiting more than 1 hour.

However, changing the data type on 1 or more columns seems to take forever though. Again, 1,000 rows and let's say 100 columns, so 100,000 data points.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'd consider anything that can comfortably fit into your RAM to not be a large table.

As far as buffering, I suggest buffering right after the API stuff so that any further transformations don't attempt to trigger API calls again. You could do this at this step:

rt_table = Table.Buffer(Table.AddColumn(type_change0, "RT_DATA", each getRTData([handleID], token), type record)),

If this table fits in memory nicely, then any subsequent basic transformations should be pretty fast.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AlexisOlson - I am wary of this suggestion because Table.Buffer may not like nested Tables or Binary objects. I have seen this happen with Dataflows.

@Element115 - it will help to buffer before running the very expensive Table.Pivot function.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That's a good point. getRTData() does return a record with one key and a list of records as a value for each row.

At the moment, each Table.AddColumn (where getHandleID() and getRTData() are called respectively) is buffered.

As I write this, the processing completed without errors in 25 mins. So I'll take that as a pleasant improvement. If it could be done in 5 mins or less, that would be awesome... but perhaps not possible.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yeah, I'd be wary of trying to buffer anything that isn't a flat table too. I've not tried buffering nested objects.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

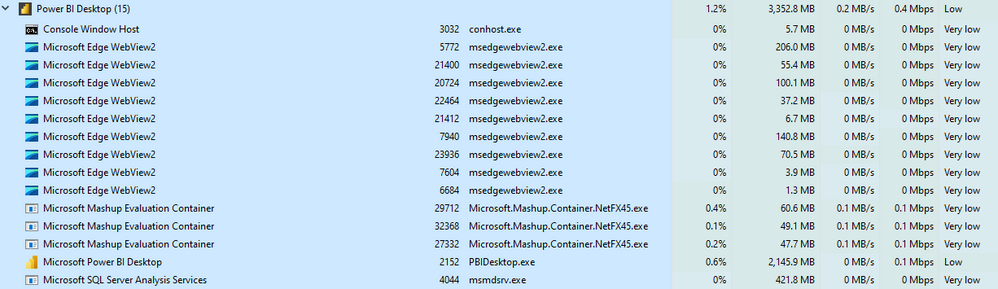

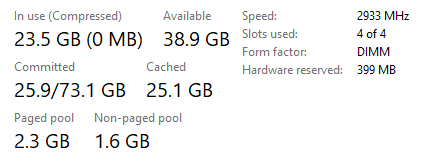

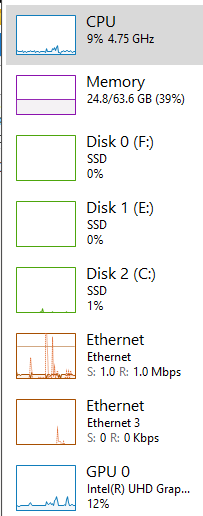

This is what my system perfs look like as the PQ engine is still processing the script. So the sys is not overwhelmed by any stretch of the imagination (total RAM = 64GB).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Right, I forgot to mention it... I did that but all the type casting transformations that come after still seem to take forever, as in more than 10 mins.

Does this mean that the subsequent Table.Buffer calls to wrap intermediary steps at the end of the script to buffer the new table after each transformation is unnecessary? Such as this one:

#"Pivoted Column" = Table.Buffer(

Table.Pivot(type_change2, List.Distinct(type_change2[Key]), "Key", "Value"),

[BufferMode = BufferMode.Eager]

),

Thanks again.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You shouldn't need buffers for each step but it's worth trying on steps that seem computationally expensive.

It seems odd that type casting on a buffered table is causing a major slowdown unless the transformation is throwing errors. Error handling can definitely slow things down. You could try adding a new logical custom column instead of replacing values in [CLO Enabled]. It could be as simple as

[CLO Enabled] = "True"but you could try writing a more robust version if needed

try if [CLO Enabled] = null then null

else if [CLO Enabled] = "Yes" then true

else if [CLO Enabled] = "No" then false

else null

otherwise null

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I agree... strange that would I have to define a custom func to do the Replace value thing.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here is the code in full--comment at the very end explain the last step, a type cast, that caused the error, which went away once the type cast step was removed. Now: I am casting from text to logical using text values of 'False' and 'True'. Used to work in the past if I remember correctly.

let

source1 = Sql.Databases("DB_NAME"),

source2 = source1{ [Name="###"] }[Data],

dbo_vOLC = source2{ [Schema="dbo", Item="vOLC"] }[Data],

#"Changed Type" = Table.TransformColumnTypes(dbo_vOLC,{ {"ID_External", Int64.Type} }),

#"Renamed Columns" = Table.RenameColumns(#"Changed Type", { {"FK_ID_###", "ID_###"} } ),

remove_cols = Table.StopFolding( //Table.Buffer( //

Table.RemoveColumns(

#"Renamed Columns",

{

"ID"

, "ID_OLC"

, "Longitude"

, "Latitude"

, "Is_remotely_managed"

, "Model"

, "FirmwareVersion"

, "HardwareAddress"

, "ControlSystem"

, "InstallationDate"

, "CommunicationStatus"

, "NumberOfMeteringChannels"

, "CLO_Enabled"

, "LastReportTime"

, "Is_metered"

, "UserSwitchType"

, "FactorySwitchType"

}

)

),

project_id = fnProjectID(),

getToken = () =>

let

URL = "https://api.###.com",

body = "app_key=###&app_secret=###&service=###&scope=###",

response = Web.Contents(

URL,

[

RelativePath = "oauth/accesstoken"

, Headers = [

#"Authorization"="Basic ###"

, #"Content-Type"="application/x-www-form-urlencoded"

]

, IsRetry = true

, Content = Text.ToBinary(body, BinaryEncoding.Base64)

]

),

jsonResponse = try Json.Document(response),

token = if jsonResponse[HasError] then

error jsonResponse[Error][Message]

else

jsonResponse[Value][token]

in

token,

getHandleID = (external_ID as number, token as text) =>

let

URL = "https://api.###.com",

relative_path = "###" & project_id & "###" & Number.ToText(external_ID) & "###",

headers = [

#"Authorization"="Bearer " & token

, #"Content-Type" = "application/json"

],

external_ID_txt = try Number.ToText(external_ID),

http_resp = if external_ID_txt[HasError] then

error "cannot convert external_ID from number to text"

else

try

Web.Contents(

URL

, [

RelativePath = relative_path

, Headers = headers

, IsRetry = true

]

),

response = if http_resp[HasError] then error http_resp[Error][Message]

else try Json.Document(http_resp[Value])

in

if response[HasError] then

response[Error][Message]

else

try response[Value]{0}[handleId] otherwise response[Value][Message],

token = getToken(),

add_handleID = Table.AddColumn(remove_cols, "handleID", each getHandleID([ID_External], token), type text),

type_change0 = Table.TransformColumnTypes( add_handleID, { {"handleID", type text} } ),

getRTData = (handle_ID as nullable text, token as text) as record =>

let

URL = "https://api.###.com",

relative_path = "###" & project_id & "###",

handleID_num = try Number.From(handle_ID),

rt_data = if handleID_num[HasError] then

[ERROR="Number.From(handle_ID) cannot convert; hanleID=" & handle_ID]

else

let

headers = [

#"Authorization" = "Bearer " & token

, #"Connection" = "keep-alive"

],

query = [handleId = handle_ID],

http_resp = try

Web.Contents(

URL

, [

RelativePath = relative_path

, Headers = headers

, IsRetry = true

, Query = query

]

)

,

data = if not http_resp[HasError] then try Json.Document(http_resp[Value])

else error http_resp[Error][Message],

result = if data[HasError] then [ERROR=data[Error][Message]]

else if Value.Is(data[Value], type list) and List.IsEmpty(data[Value]) then [ERROR="no data: wait for the next server refresh"]

// the API returns a list, ie [] JSON array, when data is available

else if Value.Is(data[Value], type list) and not List.IsEmpty(data[Value]) then data[Value]{0}

// the API returns a record, ie {} JSON object, only if there was an error

else if Value.Is(data[Value], type record) then [ERROR=data[Value][Message]] else null

in

result

in

// returns a record of real-time values or an error msg record

rt_data,

rt_table = Table.AddColumn(type_change0, "RT_DATA", each getRTData([handleID], token), type record),

expand_data = Table.ExpandRecordColumn(rt_table, "RT_DATA", {"status", "DateTime", "properties"}, {"status", "DateTime", "properties"}),

expand_prop_list = Table.ExpandListColumn(expand_data, "properties"),

#"Expanded properties" = Table.ExpandRecordColumn(expand_prop_list, "properties", {"Key", "Value", "Unit"}, {"Key", "Value", "Unit"}),

#"Removed Columns1" = Table.RemoveColumns(#"Expanded properties",{"Unit"}),

#"Filtered Rows" = Table.SelectRows(#"Removed Columns1", each ([Key] <> null)),

type_change1 = Table.TransformColumnTypes(#"Filtered Rows",{{"Key", type text}, {"Value", type text}}),

#"Pivoted Column" = Table.Pivot(type_change1, List.Distinct(type_change1[Key]), "Key", "Value"),

#"Replaced Value" = Table.ReplaceValue(#"Pivoted Column","No","False",Replacer.ReplaceText,{"CLO Enabled"}),

#"Replaced Value1" = Table.ReplaceValue(#"Replaced Value","Yes","True",Replacer.ReplaceText,{"CLO Enabled"})

// and here was the step to cast this [CLO Enabled] column to type logical, after the addition of which I got the OLE DB type mismatch error

in

#"Replaced Value1"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Element115 - this is how I would approach this to see if it improves performance.

remove_cols - insert a Table.Buffer after this step. Please check this column is folding as the previous change of data type might break.

getToken - this can be moved to separate query/function. It is not necessary to include in this Query. There is potential issue with this step because we want to get one token for all subseqent requests. There is a risk that you are generating a token for each query. The latency for each token request will add up!.

Text.Buffer would be helpful, but sadly doesn't exist.

You could try to return the Json.Document with the getToken function (i.e return the jsonResponse step instead of text).

In the main query, just add the following:

BufferToken = Binary.Buffer( getToken() ),

Token = Json.Document( BufferToken )[Value][token],

This should force Power Query to get token once.

add_handleID - this steps refers to [ID_External] in the remove_cols, is this correct? This only the ID column. Or does the project_id step do something?

type_change0 - this step is unnecessary, but this is a good time for the second Table.Buffer so you know have the SQL table and the HandleID.

getHandleID - this could be moved to separate query/funciton. I am not sure about the complexity of the error handling. This approach may be chatty. I.e. to couple the steps it needs to execute the web call more than once.

#"Expanded properties" - add the 3rd buffer step after this step, so all the previous steps are completed before the Pivot.

Note the #"Remove Column1" can be avoided by excluding the Unit column.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have implemented all your suggested changes and execution is now screaming!!! Alright, I exaggerate but I'll take 15 mins over 60+ mins anytime of the week. This project has been the best accelerated learning on how to use M. Seriously, thanks a lot.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I glad it starting to help. One thing that can slow the performance is the API Throttling Limits. You should check how many send and receives you can make per second or minute.

There are two other things would try, but this will depend on whether original data and API data must fully updated each time.

- try an incremental load using the LastReportTime to avoid reload all records each week.

- if ID_External and Handle_ID are not unique then try running them in a distinct batch to avoid using the API to call the same data more than once.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Daryl-Lynch-Bzy @AlexisOlson There is one last thing I am confused about re where and how many times Table.Buffer should be used.

1__In other words, why not just buffer at the very top of the code like so:

let

Source = some_DB,

transform0 = Table.Buffer( Source ),

...

last_transform = ...

in

last_transform

Would this way make all subsequent transformations use the buffered table? And so accomplish the same gains as when buffering multiple subsequent transformation steps?

2__Last question: In the 2 code snippets below--only the first 2 lines are different--will Table.Buffer have a different impact or will it be the same? My understanding is that every step that follows the use of Table.Buffer will operate on the in-memory table. So whether step type_change2 is buffered or the step immediately following, ie Table.Pivot, it makes no diff because all the steps after these 2 will use the in-memory table. Is that not so? And if that is so, then why not just use Table.Buffer once, at the very beginning of the script?

#1 code snippet

type_change2 = Table.Buffer(

Table.TransformColumnTypes(#"Filtered Rows",{{"Key", type text}, {"Value", type text}}),

[BufferMode = BufferMode.Eager]

),

#"Pivoted Column" = Table.Pivot(type_change2, List.Distinct(type_change2[Key]), "Key", "Value"),

#"Changed Type" = Table.TransformColumnTypes(#"Pivoted Column", ...),

#"Replaced Value" = Table.ReplaceValue(#"Changed Type", ...),

#"Replaced Value1" = Table.ReplaceValue(#"Replaced Value", ...),

#"Replaced Value2" = Table.ReplaceValue(#"Replaced Value1", ...),

#"Replaced Value3" = Table.ReplaceValue(#"Replaced Value2", ...),

#"Changed Type1" = Table.TransformColumnTypes(#"Replaced Value3", ...),

#"Extract Post Delim" = Table.TransformColumns(#"Changed Type1", ...),

#"Replaced Value4" = Table.ReplaceValue(#"Extract Post Delim", ...),

#"Renamed Columns1" = Table.RenameColumns(#"Replaced Value4", ...)

in

#"Renamed Columns1"

vs

#2 code snippet

type_change2 = Table.TransformColumnTypes(#"Filtered Rows",{{"Key", type text}, {"Value", type text}}),

[BufferMode = BufferMode.Eager]

),

#"Pivoted Column" = Table.Buffer(

Table.Pivot(type_change2, List.Distinct(type_change2[Key]), "Key", "Value")

),

#"Changed Type" = Table.TransformColumnTypes(#"Pivoted Column", ...),

#"Replaced Value" = Table.ReplaceValue(#"Changed Type", ...),

#"Replaced Value1" = Table.ReplaceValue(#"Replaced Value", ...),

#"Replaced Value2" = Table.ReplaceValue(#"Replaced Value1", ...),

#"Replaced Value3" = Table.ReplaceValue(#"Replaced Value2", ...),

#"Changed Type1" = Table.TransformColumnTypes(#"Replaced Value3", ...),

#"Extract Post Delim" = Table.TransformColumns(#"Changed Type1", ...),

#"Replaced Value4" = Table.ReplaceValue(#"Extract Post Delim", ...),

#"Renamed Columns1" = Table.RenameColumns(#"Replaced Value4", ...)

in

#"Renamed Columns1"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Element115 -

For 1 - it depends, as @AlexisOlson says it is experimental. However there is one firm rule that you should follow. If you are connecting to foldable datasource like a database, don't buffer until after Query Folding breaks. Buffering at the very start would break folding and effectively load the entire table to temporary memory.

For 2 - Firstly, I believe Pivot is the more expensive transformation. Second, I would want Power Query to full complete all the steps before starting the Pivot transformation. Hence, my strategy would be to place it before Pivot. However, testing might show that there is very little impact from using either approach.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Buffering loads the table to memory as it exists at that particular step. Deciding when and where to do this is more of an experimental art rather than an exact set of rules to follow, especially without a deep understanding of exactly how the query optimization engine works.

Just because you have some version of the table loaded into memory doesn't mean that there's never a need buffer again after that point. If you do expensive calculations or extensive transformations on a table, sometimes it's worth buffering those intermediate results before doing any further steps so that you have those calculations/transformations stored in a format that can be referenced efficiently.

A rather extreme example is this function I wrote here. As @ImkeF points out, after I've done some initial transformations to set up some chunks to loop through, buffering them to memory helps a lot since it's doing nested iterations on those chunks. Only buffering the initial input wouldn't be nearly as fast.

It's possible that buffering both before and after the pivot is the fastest but that's something that needs to be tested in your specific situation. Don't go too crazy with buffers though. Take them out anywhere they don't help.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I removed one buffer from one intermediated steps and removed the buffer also from both Table.AddColumn calls, and instead of buffering Table.Pivot, moved the buffer to the step immediately prior. That gained 2 minutes. Actually, the refresh time in the Service is 9-10 mins now vs 10-12 mins before these changes. (15 mins when running on the Desktop). Interesting and .... strange.

1__One last thing I am still not clear on is this:

By 'breaking folding', do you mean something like this? Using Table.StopFolding immediately as the step after all the DB related calls are done? I currently have it like this:

let

Source0 = Sql.Databases("DB"),

DB = Source{ [Name="DB_name"] }[Data],

dbo_vOLC = DB{ [Schema="dbo", Item="vOLC"] }[Data],

stop_folding = Table.StopFolding(dbo_vOLC),

type_change0 = Table.TransformColumnTypes(stop_folding, { {"ID_External", Int64.Type} }),

#"Renamed Columns" = Table.RenameColumns(type_change0, { ...,

remove_cols = Table.Buffer(

Table.RemoveColumns(

#"Renamed Columns",

2__Finally, I am confused about this buffering business. For instance, let's say you use buffer once at the top. Would this not mean that all subsequent steps will be performed on an in-memory table anyway? I thought that that is what the PQ engine would do and hence I do not quite understand why multiple buffering is required. I mean, how else are the transformations computed if not in memory?

3__which makes me wonder... if some transformations can be done at the source, then PQ instead of doing them on a in-memory table will fold them to the source, say as native SQL?

4__but then, if the source table is huge (> 1 million rows), and can't all fit in memory, is it not dangerous to use Table.StopFolding as I did in the code snippet above? Wouldn't that prevent some transformations and thus never getting a final result that could be used in the model?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Breaking folding means that any further steps cannot be pushed back (folded) to be evaluated at the source. This is most relevant when you query something like a SQL database that is better/faster at handling queries than PowerQuery.

- Yes, once you buffer a table, the following steps are done in memory. As explained above, even if a table is in memory doesn't mean it's stored in the most efficient way to do further transformations, which is why additional buffering is sometimes useful.

- It's usually best to try to fold as much of the query as possible to the native source and only use buffering or other things that break query folding when needed.

- If the table is too large to fit into available memory, then Power Query has to process it in chunks. If it can't figure out how to do this, you can definitely get an out-of-memory error that causes the query to fail.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Unfortunately, due to the problem domain, all 1,000 records need to be queried daily multiple times. This is supposed to be a 'real-time' API, which technically it is not, but it's as close as it gets to providing the most up-to-date status of a 1,000 devices. So the ETL has to run multiple times a day. Thankfully, all IDs are unique, new handleIDs (which are just UNIX timestamps) being generated continuously by the API provider.

Helpful resources

Join our Fabric User Panel

Share feedback directly with Fabric product managers, participate in targeted research studies and influence the Fabric roadmap.

Power BI Monthly Update - February 2026

Check out the February 2026 Power BI update to learn about new features.