FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI Data Visualization World Championships is back! It's time to submit your entry. Live now!

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Performance problem with power query code refr...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Performance problem with power query code refreshing a dataflow

I don't know if this is the right place to post this, but I have this code:

let

SourceFiltered = Table.SelectRows(Export_Vehicles_AVX_Linked, each [ParkingLot] <> null and [ParkingLot] <> ""),

SelectedColumns = Table.SelectColumns(SourceFiltered, {"VIN Code", "Lista_Fechas", "ParkingLot"}),

BufferedTable = Table.Buffer(SelectedColumns),

AddPreviousDate = Table.AddColumn(BufferedTable, "Fecha Anterior", each

let

CurrentParkingLot = [ParkingLot],

CurrentVINCode = [VIN Code],

CurrentDate = [Lista_Fechas],

FilteredRows = Table.SelectRows(BufferedTable,

(CurrentRow) => CurrentRow[ParkingLot] = CurrentParkingLot

and CurrentRow[VIN Code] = CurrentVINCode

and CurrentRow[Lista_Fechas] < CurrentDate),

MaxDate = List.Max(FilteredRows[Lista_Fechas])

in

if MaxDate <> null then MaxDate else null, type date),

another_vin = Table.AddColumn(AddPreviousDate, "Otro VIN", each

let

CurrentParkingLot = [ParkingLot],

CurrentVINCode = [VIN Code],

CurrentDate = [Lista_Fechas],

FilteredRows = Table.SelectRows(BufferedTable,

(CurrentRow) => CurrentRow[ParkingLot] = CurrentParkingLot

and CurrentRow[VIN Code] <> CurrentVINCode

and CurrentRow[Lista_Fechas] < CurrentDate),

MaxDate = List.Max(FilteredRows[Lista_Fechas])

in

if MaxDate <> null then MaxDate else null, type date),

consecutive_days = Table.AddColumn(another_vin, "Consecutive_Days", each if [Otro VIN] <> null and [Fecha Anterior] <> null and [Fecha Anterior] > [Otro VIN] then Duration.Days([Lista_Fechas] - [Fecha Anterior]) else 0, Int64.Type),

removed_columns = Table.RemoveColumns(consecutive_days,{"Fecha Anterior", "Otro VIN"})

in

removed_columns

This code is in a dataflow, I have a table called Export_Vehicles_AVX_Linked with about 1,6M rows. After the filter on the first instruction it goes down to 60k rows. I don't think there are so many rows for this to process for 30+ mins It's been now. It didn't stop yet and I am posting this because yestarday I tried using this code:

let

SourceFiltered = Table.SelectRows(Export_Vehicles_AVX_Linked, each [ParkingLot] <> null and [ParkingLot] <> ""),

SelectedColumns = Table.SelectColumns(SourceFiltered, {"VIN Code", "Lista_Fechas", "ParkingLot"}),

previous_date = Table.AddColumn(SelectedColumns, "Fecha Anterior", each List.Max(Table.SelectRows(SelectedColumns, (CurrentRow) => CurrentRow[ParkingLot] = [ParkingLot] and CurrentRow[VIN Code] = [VIN Code] and CurrentRow[Lista_Fechas] < [Lista_Fechas]) [Lista_Fechas]), type date),

another_vin = Table.AddColumn(previous_date, "Otro VIN", each List.Max(Table.SelectRows(previous_date, (CurrentRow) => CurrentRow[ParkingLot] = [ParkingLot] and CurrentRow[Lista_Fechas] < [Lista_Fechas] and CurrentRow[Lista_Fechas] > [Fecha Anterior] and CurrentRow[VIN Code] <> [VIN Code]) [Lista_Fechas]), type date),

consecutive_days = Table.AddColumn(another_vin, "Consecutive_Days", each if [Otro VIN] = null and [Fecha Anterior] <> null then Duration.Days([Lista_Fechas] - [Fecha Anterior]) else 0, Int64.Type),

removed_columns = Table.RemoveColumns(consecutive_days,{"Fecha Anterior", "Otro VIN"})

in

removed_columns

And it got stuck refreshing for 19+ HOURS. So this morning I've cancelled the refresh, as at 8 and it seemed to stop at 10:30, but if I check it's refreshes it says it is still cancelling, so I've lost all the dataflows connected to this one since I can't delete it or update the others.

To avoid this happening again I would like to know if the first code I've posted would be efficient in performance because I don't believe it should take so much time to perform the calculations there.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Damian_CT_Nom is it coming from a a database? if yes, write native DB query to fetch the required data. If it is not coming from any db that offers server side queries, create a power bi dataset by simply fetching the tables through PQ and don't use any PQ transformation but Incremental refresh on it. Once you release it on the sever, it becomes a SSAS db and you can do all sever SSAS sever side transofrmation.

PQ is not a solution for large tables and it is a not a secret anymore. The workaround is to create a ssas db if the data is located in any db whatsover.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @smpa01 thank you for your response.

The main table, Export_Vehicles_AVX_Linked, comes from another dataflow so it's a linked entity on this dataflow. Would that count as a DB? In any case, I don't know how to do what you asked, may you please guide me to some web resources so I can investigate how to do that?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dataflows are not db, but datasets are ssas db. Looks like you have premium capacity as you are using dataflows. Instead of creating a dataflow, create a powerbi dataset and don't use any transformation on it. Only config incremental refresh and publish. Once you release it on the workspace, go to setting->Server Settings->Connection string. Once you grab that, create a pbix dataset/dataflow and use Analysis server connector.

The Connection string gives you something like

Data Source=powerbi://api.powerbi.com/v1.0/myorg/wsp;Initial Catalog=Contoso;

Once in AS connecttor use, Server =powerbi://api.powerbi.com/v1.0/myorg/wsp and Database=Contoso

Now, you can write fully qualified SSAS query for data transformation.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So... Instead of creating a Stagering dataflow getting the data from my sharepoint I should do that in Power BI Desktop, publish the report and then create a transformation DataFlow connecting to the dataset via Analysis server connector. Then figure out how to do the same I did in power query in SQL. Is that it?

Edit: ok @smpa01 nevermind... IT has that feature disabled... DataSource.Error: AnalysisServices: XMLA endpoint feature is disabled. Turn on Analyze in Excel feature in PowerBI.com to enable this feature. sadly I can't do that.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Instead of creating a Stagering dataflow getting the data from my sharepoint I should do that in Power BI Desktop, publish the report and then create a transformation DataFlow connecting to the dataset via Analysis server connector.- you got it

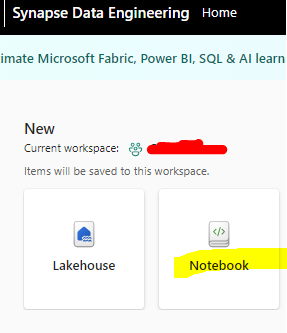

Since you don't have it, the next option would be to utilize fabric if you have it. Actually, that should be the first option, if you have fabric. Fabric has notebook and notebook has blazing fast scala

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thought fabric was how they renamed the entire enviroment for power bi and power apps... Could you tell me how to do that?

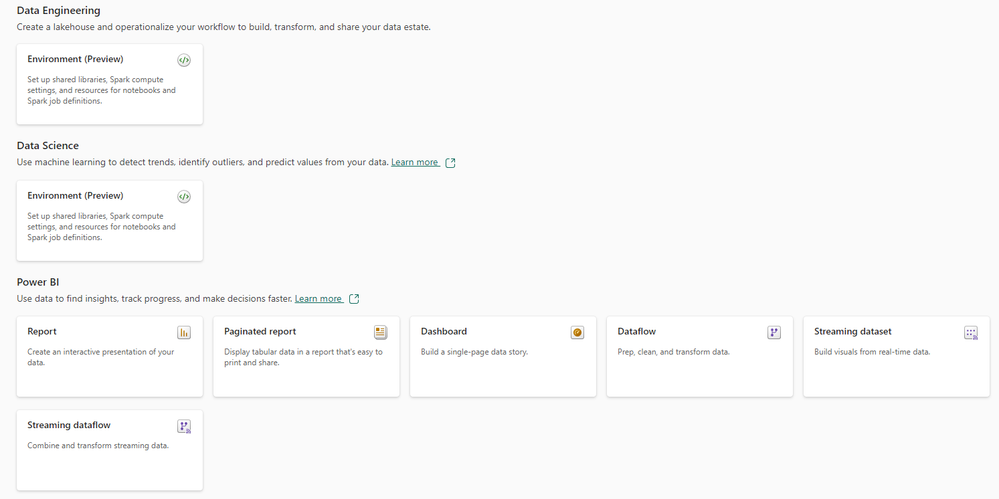

This is what I can create on our workspace.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I hate strongly dislike to say it but have you tested one of the standard Nested Join commands?

You may also want to move the Table.Buffer further up (assuming you have the memory)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @lbendlin thank you for your response.

How would I use Nested Joins here? I'm in need to calculate previous date for the same pair of parkinglot + vin code, then a date for another vin code in the same parkinglot .

On the other hand, taking further up the Buffer means going from 60k rows to 1,6M rows... I don't really know if it would change much since the table I'm referencing is a linked entity (it's the outcome table from another dataflow).

Helpful resources

Power BI Dataviz World Championships

The Power BI Data Visualization World Championships is back! It's time to submit your entry.

Power BI Monthly Update - January 2026

Check out the January 2026 Power BI update to learn about new features.

| User | Count |

|---|---|

| 14 | |

| 13 | |

| 9 | |

| 7 | |

| 7 |