Fabric Data Days starts November 4th!

Advance your Data & AI career with 50 days of live learning, dataviz contests, hands-on challenges, study groups & certifications and more!

Get registered- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Get Fabric Certified for FREE during Fabric Data Days. Don't miss your chance! Request now

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: PQ to add column from list to an existing tabl...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

PQ to add column from list to an existing table

My base table is this

#table({"col1", "col2"}, {{1,2},{3,4}})

I have a list as this

_list = {5,6},

I want to end up with this

| col1 | col2 | col3 |

|------|------|------|

| 1 | 3 | 5 |

| 2 | 4 | 6 |

I tried this which doesn't work.

let

Source = #table({"col1", "col2"}, {{1,2},{3,4}}),

_list = {5,6},

Custom1 = Table.AddColumn(Source,"col3", each _list)

in

Custom1

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

= Table.AddColumn(Source,"col3",each Function.ScalarVector(type function(n as number) as number ,each _list)(_))

i did not really get the trick of Function.ScalarVector, just try and use it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@wdx223_Daniel That's a pretty neat trick I haven't used before. Kudos!

It can indeed be extended to multiple columns by zipping the lists together and creating a record for each row to then expand. I'm sure there are lots of other possible generalizations and I'd love to see how you'd do it.

let

Source = #table({"col1", "col2"}, {{1, 2}, {3, 4}}),

list1 = {5, 6},

list2 = {7, 8},

list3 = {9, 0},

ListsCombined = List.Zip({list1, list2, list3}),

NewColNames = {"col3", "col4", "col5"},

Custom1 = Table.AddColumn(

Source,

"NewCols",

each Record.FromList(

Function.ScalarVector(type function (n as list) as list, each ListsCombined)(_),

NewColNames

)

),

#"Expanded NewCols" = Table.ExpandRecordColumn(Custom1, "NewCols", NewColNames, NewColNames)

in

#"Expanded NewCols"

@smpa01 I'm glad you asked this question. I find it relatively common to need to add existing lists as new columns but never found an approach that feels clean and efficient.

@ImkeF I'd love to hear what you'd suggest for this topic since, to me, it feels similar in flavor to your Transforming Multiple Columns post.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi all,

@ImkeF , @smpa01 , @AlexisOlson , @wdx223_Daniel @latimeria

The benefits of "scalar" approach escapes for me. In the example that Chris brought in his table, it assumes performing a row-by-row function, which can be optimised this way.

In this particular case we are dealing with merging two (or more) sets of data which does not have anything in common (except, potentially, an equal number of rows). There is no functional dependency of one set on another from what I see.

Therefore this is unclear which option would be quicker for this particular application. I would say, it is heavily dependant on the implementation. For instance, if the table is mapped to the memory as a set of columns somehow build into a form of a list, breaking it down to columns would not take any time regardless of the number of rows. The new columns would be just added to the list of columns (as references rather than copying blocks of data into a new structure). This can work because since defined variable is immutable, hance the reference is guaranteed to not to change whatever happens.

On the other hand, "scalar" option in this world would have to actually scroll through each item in the list (hence this can be done only once for all new columns at the same time) to add them to the existing table as new columns (i. e. physically copy blocks of data from one place in memory to another). Buffering the table can improve this to some extend, but the mechanics of this I can't explain/envisage.

I've run the following test. See two queries below, one is a "scalar" and another Table.ToColumns option of adding lists. Both operate with 1m rows (both on the table and the lists):

let

data = List.Zip({{1..1000000}, List.Transform({1..1000000}, each Text.From(_) & "." & "0")}),

Source = #table({"col1", "col2"}, data),

list1 = List.Transform({1..1000000}, each Text.From(_) & "." & "1"),

list2 = List.Transform({1..1000000}, each Text.From(_) & "." & "2"),

list3 = List.Transform({1..1000000}, each Text.From(_) & "." & "3"),

// Buffer function input for performance reasons

BufferedTable = List.Buffer(

Table.ToRecords(#table({"col3", "col4", "col5"}, List.Zip({list1, list2, list3})))

),

AddColumn = Table.AddColumn(

Source,

"NewCols",

each Function.ScalarVector(type function (x as record) as record, each BufferedTable)(_)

),

#"Expanded NewCols" = Table.ExpandRecordColumn(AddColumn, "NewCols", {"col3", "col4", "col5"})

in

#"Expanded NewCols"

let

data = List.Zip({{1..1000000}, List.Transform({1..1000000}, each Text.From(_) & "." & "0")}),

Source = #table({"col1", "col2"}, data),

list1 = List.Transform({1..1000000}, each Text.From(_) & "." & "1"),

list2 = List.Transform({1..1000000}, each Text.From(_) & "." & "2"),

list3 = List.Transform({1..1000000}, each Text.From(_) & "." & "3"),

Custom1 = Table.FromColumns(Table.ToColumns(Source) & {list1} & {list2} & {list3})

in

Custom1

The "scalar" option on my laptop refreshes for about 3 sec, which is quick, but the Table.ToColumns refreshes in a blink of the eye, I can't even see the running dots to start counting. Also, IMHO, the table.tocolumns looks a bit neater and straightforward.

This kind of proofs the point with in-memory organisation of tables which favours the Table.ToColumns version. But I can see that this may be case-dependant.

Cheers,

John

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AlexisOlson ,

I must admint I haven't used this function much so far, but re-reading Chris' article about it, I definitely should change that.

As he pointed out the performance benefits only apply to buffered inputs, you might consider re-writing it like so for example:

let

Source = #table({"col1", "col2"}, {{1, 2}, {3, 4}}),

list1 = {5, 6},

list2 = {7, 8},

list3 = {9, 0},

// Buffer function input for performance reasons

BufferedTable = List.Buffer(

Table.ToRecords(#table({"col3", "col4", "col5"}, List.Zip({list1, list2, list3})))

),

AddColumn = Table.AddColumn(

Source,

"NewCols",

each Function.ScalarVector(type function (x as record) as record, each BufferedTable)(_)

),

#"Expanded NewCols" = Table.ExpandRecordColumn(AddColumn, "NewCols", {"col3", "col4", "col5"})

in

#"Expanded NewCols"

(very strange that it doesn't seem to work with a table input, though...)

Imke Feldmann (The BIccountant)

If you liked my solution, please give it a thumbs up. And if I did answer your question, please mark this post as a solution. Thanks!

How to integrate M-code into your solution -- How to get your questions answered quickly -- How to provide sample data -- Check out more PBI- learning resources here -- Performance Tipps for M-queries

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

= Table.AddColumn(Source,"col3",each Function.ScalarVector(type function(n as number) as number ,each _list)(_))

i did not really get the trick of Function.ScalarVector, just try and use it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@wdx223_Daniel this is simply fascinating, just got greedy.

Can we add multiple lists in one go, i.e. adding list1, list2 in Custom1?

let

Source = #table({"col1", "col2"}, {{1, 2}, {3, 4}}),

list1 = {3, 4},

list2 = {5, 6},

Custom1 = Table.AddColumn(

Source,

"col3",

each Function.ScalarVector(type function (n as number) as number, each list2)(_)

)

in

Custom1

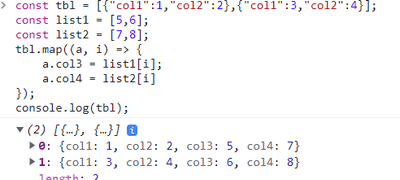

What I am asking is this, can we perform something similar to a javascript map?

const tbl = [{"col1":1,"col2":2},{"col1":3,"col2":4}];

const list1 = [5,6];

const list2 = [7,8];

tbl.map((a, i) => {

a.col3 = list1[i];

a.col4 = list2[i]

});

console.log(tbl);

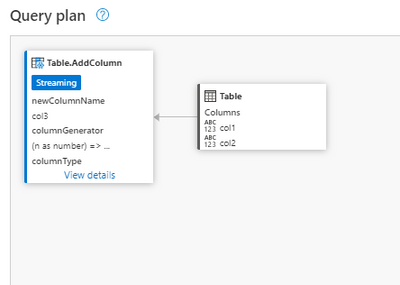

This is the query plan that I see here for @wdx223_Daniel

But PQ does not generate any query plan for @jbwtp @latimeria query. I can't really assess the performance of this query. But I guess the query provided by you would have more overheads cause it needs to perform this `Table.ToColumns(Source)` which I would want to avoid cause my dataset (multiple excel from SharePoint) has 700k+ rows. Thank you nevertheless.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@wdx223_Daniel That's a pretty neat trick I haven't used before. Kudos!

It can indeed be extended to multiple columns by zipping the lists together and creating a record for each row to then expand. I'm sure there are lots of other possible generalizations and I'd love to see how you'd do it.

let

Source = #table({"col1", "col2"}, {{1, 2}, {3, 4}}),

list1 = {5, 6},

list2 = {7, 8},

list3 = {9, 0},

ListsCombined = List.Zip({list1, list2, list3}),

NewColNames = {"col3", "col4", "col5"},

Custom1 = Table.AddColumn(

Source,

"NewCols",

each Record.FromList(

Function.ScalarVector(type function (n as list) as list, each ListsCombined)(_),

NewColNames

)

),

#"Expanded NewCols" = Table.ExpandRecordColumn(Custom1, "NewCols", NewColNames, NewColNames)

in

#"Expanded NewCols"

@smpa01 I'm glad you asked this question. I find it relatively common to need to add existing lists as new columns but never found an approach that feels clean and efficient.

@ImkeF I'd love to hear what you'd suggest for this topic since, to me, it feels similar in flavor to your Transforming Multiple Columns post.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi all,

@ImkeF , @smpa01 , @AlexisOlson , @wdx223_Daniel @latimeria

The benefits of "scalar" approach escapes for me. In the example that Chris brought in his table, it assumes performing a row-by-row function, which can be optimised this way.

In this particular case we are dealing with merging two (or more) sets of data which does not have anything in common (except, potentially, an equal number of rows). There is no functional dependency of one set on another from what I see.

Therefore this is unclear which option would be quicker for this particular application. I would say, it is heavily dependant on the implementation. For instance, if the table is mapped to the memory as a set of columns somehow build into a form of a list, breaking it down to columns would not take any time regardless of the number of rows. The new columns would be just added to the list of columns (as references rather than copying blocks of data into a new structure). This can work because since defined variable is immutable, hance the reference is guaranteed to not to change whatever happens.

On the other hand, "scalar" option in this world would have to actually scroll through each item in the list (hence this can be done only once for all new columns at the same time) to add them to the existing table as new columns (i. e. physically copy blocks of data from one place in memory to another). Buffering the table can improve this to some extend, but the mechanics of this I can't explain/envisage.

I've run the following test. See two queries below, one is a "scalar" and another Table.ToColumns option of adding lists. Both operate with 1m rows (both on the table and the lists):

let

data = List.Zip({{1..1000000}, List.Transform({1..1000000}, each Text.From(_) & "." & "0")}),

Source = #table({"col1", "col2"}, data),

list1 = List.Transform({1..1000000}, each Text.From(_) & "." & "1"),

list2 = List.Transform({1..1000000}, each Text.From(_) & "." & "2"),

list3 = List.Transform({1..1000000}, each Text.From(_) & "." & "3"),

// Buffer function input for performance reasons

BufferedTable = List.Buffer(

Table.ToRecords(#table({"col3", "col4", "col5"}, List.Zip({list1, list2, list3})))

),

AddColumn = Table.AddColumn(

Source,

"NewCols",

each Function.ScalarVector(type function (x as record) as record, each BufferedTable)(_)

),

#"Expanded NewCols" = Table.ExpandRecordColumn(AddColumn, "NewCols", {"col3", "col4", "col5"})

in

#"Expanded NewCols"

let

data = List.Zip({{1..1000000}, List.Transform({1..1000000}, each Text.From(_) & "." & "0")}),

Source = #table({"col1", "col2"}, data),

list1 = List.Transform({1..1000000}, each Text.From(_) & "." & "1"),

list2 = List.Transform({1..1000000}, each Text.From(_) & "." & "2"),

list3 = List.Transform({1..1000000}, each Text.From(_) & "." & "3"),

Custom1 = Table.FromColumns(Table.ToColumns(Source) & {list1} & {list2} & {list3})

in

Custom1

The "scalar" option on my laptop refreshes for about 3 sec, which is quick, but the Table.ToColumns refreshes in a blink of the eye, I can't even see the running dots to start counting. Also, IMHO, the table.tocolumns looks a bit neater and straightforward.

This kind of proofs the point with in-memory organisation of tables which favours the Table.ToColumns version. But I can see that this may be case-dependant.

Cheers,

John

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @smpa01 ,

Try this

let

Source = #table({"col1", "col2"}, {{1,2},{3,4}}),

TableToList = Table.ToColumns(Source),

_list = {5,6},

MergeLists = List.Combine( {TableToList, {_list} }),

Custom2 = Table.FromColumns(MergeLists)

in

Custom2- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @smpa01,

I would change the last line to something like this:

Table.FromColumns(MergeLists, Value.Type(Table.AddColumn(Source, "Column3", each null, type number)))to avoid re-setting all column types again.

Cheers,

John

Helpful resources

Fabric Data Days

Advance your Data & AI career with 50 days of live learning, contests, hands-on challenges, study groups & certifications and more!

Power BI Monthly Update - October 2025

Check out the October 2025 Power BI update to learn about new features.