FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

To celebrate FabCon Vienna, we are offering 50% off select exams. Ends October 3rd. Request your discount now.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Optimize performance at Un-Cumulate calculatio...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Optimize performance at Un-Cumulate calculation

Hello, i have the following issue trying to optimize at the moment.

I have a really huge amount of data with thousands of records where unfortunately the dataset i receive is only showing the cummulated Sales Quantities from month to month - so what i have to do at the moment for EVERY Record which is being imported is to look up the record from the previous period for the same Region, Item, Year and subtract this amount from the current record. As this is done (as mentioned) for any record you can imagine the amount and time needed for this action to be performed.

So as i said - i have already found a generally "working" solution - but the problem is that it takes a huge amount of time every time it is being refreshed which is quite frustrating so i was wondering if anybody has a suggestion of how this process could be optimized from a performance point of view:

This is the current function i am using:

Call from Table ==>

= Table.AddColumn(#"Removed Duplicates", "Wert", each if [Kennzahl] = "Menge" then [KummWert] - fxGetPrev(#"Removed Duplicates",_) else [KummWert])

fxGetPrev ==>

= (table as table, record as record) as number =>

let

CurrRows = Table.SelectRows(table, each ([Jahr] = record[Jahr]) and ([Kennzahl] = "Menge") and ([Region] = record[Region])

and ([Artikelnr. Lieferant] = record[Artikelnr. Lieferant]) and ([Datum] < record[Datum])),

CurrCols = Table.SelectColumns(CurrRows, "KummWert"),

TableSorted = Table.Sort(CurrCols, {"KummWert", Order.Descending}),

PrevVal = Table.FirstValue(TableSorted,0)

in

PrevVal

Any suggestions would be highly appreciated !!

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

assume your data is sorted by Datum from smallest to largest. then try this code

=Table.FromRecords(List.Accumulate(Table.ToRecords(#"Removed Duplicates"),{{},0},(x,y)=>{x{0}&{y&[Wert=if y[Kennzahl] = "Menge" then y[KummWert] -x{1} else y[KummWert]]},if y[Kennzahl] = "Menge" then y[KummWert] else x{1}}){0})

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I forgot to include the final steps of taking the difference.

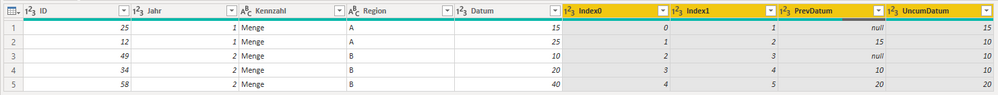

Here's what the result looks like (highlighted columns are new, non-highlighted are starting data):

Here's the full code for this example:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjJV0lEyBGLf1Lz0VCDtCOKbKsXqRCsZGmGRM4LImVqA2EhyTkBsYgCWMzbBImcEkTOxxCJnCJSLBQA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [ID = _t, Jahr = _t, Kennzahl = _t, Region = _t, Datum = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"ID", Int64.Type}, {"Jahr", Int64.Type}, {"Kennzahl", type text}, {"Region", type text}, {"Datum", Int64.Type}}),

#"Sorted Rows1" = Table.Sort(#"Changed Type",{{"Jahr", Order.Ascending}, {"Region", Order.Ascending}, {"Datum", Order.Ascending}}),

#"Added Index0" = Table.AddIndexColumn(#"Sorted Rows1", "Index0", 0, 1, Int64.Type),

#"Added Index1" = Table.AddIndexColumn(#"Added Index0", "Index1", 1, 1, Int64.Type),

#"Merged Queries" = Table.NestedJoin(#"Added Index1", {"Index0", "Jahr", "Kennzahl", "Region"}, #"Added Index1", {"Index1", "Jahr", "Kennzahl", "Region"}, "Added Index1", JoinKind.LeftOuter),

#"Expanded Added Index" = Table.ExpandTableColumn(#"Merged Queries", "Added Index1", {"Datum"}, {"PrevDatum"}),

#"Sorted Rows2" = Table.Sort(#"Expanded Added Index",{{"Jahr", Order.Ascending}, {"Region", Order.Ascending}, {"Datum", Order.Ascending}}),

#"Added Custom" = Table.AddColumn(#"Sorted Rows2", "UncumDatum", each [Datum] - (if [PrevDatum] = null then 0 else [PrevDatum]), Int64.Type)

in

#"Added Custom"- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

assume your data is sorted by Datum from smallest to largest. then try this code

=Table.FromRecords(List.Accumulate(Table.ToRecords(#"Removed Duplicates"),{{},0},(x,y)=>{x{0}&{y&[Wert=if y[Kennzahl] = "Menge" then y[KummWert] -x{1} else y[KummWert]]},if y[Kennzahl] = "Menge" then y[KummWert] else x{1}}){0})

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hei i am still trying to make your function work but i still get the Stack Overflow error although i grouped, and indexed my table now. What i do not understand in your function ==> what exactly does your "Seed" of "{{},0}" mean and how was it ment to work ? Could you be so kind to just briefly explain in short words so that i might start understanding the underlying idea? Thanks !

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hei @wdx223_Daniel sorry i did not yet want to accept your solution since it does not work unfortunately 😕

First of all , i get a stack overflow as i run the function and in addition I think there is one important detail you missed which is the fact that i do not simply need to un-cummulate row by row but still have to differentiate between the particular Item numbers as well!!

So simply going down and using the sum of the prev is not working since i need to sort by item as well and somehow realize when i enter a new item - then again the first row should be considered KummAmount = Amount and then from the Second row of an item the calculation should start again !

I hope I kinda explained the problem good enough. If there is still something unclear let me know! I will try to find a fix for this issue in the meantime myself, but if you would have some input i could try out it would be highly appreciated !

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Calling functions row by row tends to be pretty inefficient.

You can speed things up immensely by doing things with joins instead. Sort your data appropriately, add two index columns, and do a self-merge to grab the prior value.

Here's a sample query to demonstrate the concept:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjJV0lEyBGLf1Lz0VCDtCOKbKsXqRCsZGmGRM4LImVqA2EhyTkBsYgCWMzbBImcEkTOxxCJnCJSLBQA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [ID = _t, Jahr = _t, Kennzahl = _t, Region = _t, Datum = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"ID", Int64.Type}, {"Jahr", Int64.Type}, {"Kennzahl", type text}, {"Region", type text}, {"Datum", Int64.Type}}),

#"Sorted Rows1" = Table.Sort(#"Changed Type",{{"Jahr", Order.Ascending}, {"Region", Order.Ascending}, {"Datum", Order.Ascending}}),

#"Added Index0" = Table.AddIndexColumn(#"Sorted Rows1", "Index0", 0, 1, Int64.Type),

#"Added Index1" = Table.AddIndexColumn(#"Added Index0", "Index1", 1, 1, Int64.Type),

#"Merged Queries" = Table.NestedJoin(#"Added Index1", {"Index0", "Jahr", "Kennzahl", "Region"}, #"Added Index1", {"Index1", "Jahr", "Kennzahl", "Region"}, "Added Index1", JoinKind.LeftOuter),

#"Expanded Added Index" = Table.ExpandTableColumn(#"Merged Queries", "Added Index1", {"Datum"}, {"PrevDatum"}),

#"Sorted Rows2" = Table.Sort(#"Expanded Added Index",{{"Jahr", Order.Ascending}, {"Region", Order.Ascending}, {"Datum", Order.Ascending}})

in

#"Sorted Rows2"- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I forgot to include the final steps of taking the difference.

Here's what the result looks like (highlighted columns are new, non-highlighted are starting data):

Here's the full code for this example:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjJV0lEyBGLf1Lz0VCDtCOKbKsXqRCsZGmGRM4LImVqA2EhyTkBsYgCWMzbBImcEkTOxxCJnCJSLBQA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [ID = _t, Jahr = _t, Kennzahl = _t, Region = _t, Datum = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"ID", Int64.Type}, {"Jahr", Int64.Type}, {"Kennzahl", type text}, {"Region", type text}, {"Datum", Int64.Type}}),

#"Sorted Rows1" = Table.Sort(#"Changed Type",{{"Jahr", Order.Ascending}, {"Region", Order.Ascending}, {"Datum", Order.Ascending}}),

#"Added Index0" = Table.AddIndexColumn(#"Sorted Rows1", "Index0", 0, 1, Int64.Type),

#"Added Index1" = Table.AddIndexColumn(#"Added Index0", "Index1", 1, 1, Int64.Type),

#"Merged Queries" = Table.NestedJoin(#"Added Index1", {"Index0", "Jahr", "Kennzahl", "Region"}, #"Added Index1", {"Index1", "Jahr", "Kennzahl", "Region"}, "Added Index1", JoinKind.LeftOuter),

#"Expanded Added Index" = Table.ExpandTableColumn(#"Merged Queries", "Added Index1", {"Datum"}, {"PrevDatum"}),

#"Sorted Rows2" = Table.Sort(#"Expanded Added Index",{{"Jahr", Order.Ascending}, {"Region", Order.Ascending}, {"Datum", Order.Ascending}}),

#"Added Custom" = Table.AddColumn(#"Sorted Rows2", "UncumDatum", each [Datum] - (if [PrevDatum] = null then 0 else [PrevDatum]), Int64.Type)

in

#"Added Custom"- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks! Actually this seems to be the only viable solution! I have unintentionally accepted the other post also as solution but this did not work out! Thanks a lot for your help

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@wdx223_Daniel's solution is brilliant but somewhat difficult to understand and adapt.

Here's an approach inspired by them but (hopefully) a bit more transparent:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WMjJV0lEyBGLf1Lz0VCDtCOKbKsXqRCsZGmGRM4LImVqA2EhyTkBsYgCWMzbBImcEkTOxxCJnCJSLBQA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [ID = _t, Jahr = _t, Kennzahl = _t, Region = _t, Datum = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"ID", Int64.Type}, {"Jahr", Int64.Type}, {"Kennzahl", type text}, {"Region", type text}, {"Datum", Int64.Type}}),

#"Sorted Rows" = Table.Sort(#"Changed Type",{{"Jahr", Order.Ascending}, {"Datum", Order.Ascending}}),

RecordList = Table.ToRecords(#"Sorted Rows"),

ListGen =

List.Generate(

() => [curr = RecordList{0}, prev = RecordList{0}, u = RecordList{0}[Datum], i = 0],

each [i] < List.Count(RecordList),

each [

curr = RecordList{i},

prev = RecordList{i-1},

u = if curr[Kennzahl] = "Menge"

and curr[Jahr] = prev[Jahr]

and curr[Region] = prev[Region]

then curr[Datum] - prev[Datum]

else curr[Datum],

i = [i] + 1

],

each [curr] & [DatumUncum = [u]]

),

RecordsToTable = Table.FromRecords(ListGen),

#"Changed Type1" = Table.TransformColumnTypes(RecordsToTable,{{"ID", Int64.Type}, {"Jahr", Int64.Type}, {"Kennzahl", type text}, {"Region", type text}, {"Datum", Int64.Type}, {"DatumUncum", Int64.Type}})

in

#"Changed Type1"- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks a lot! Actually @wdx223_Daniel s solution did not work properly since i got a stack overflow error which i was not able to resolve. Further what is missing in both solutions but was no big challange to add myself was the fact that there are multiple items and therefor i need to un-cummulate the amount per item and year!, which means i cannot simply sort the list and then subtract the prev amount from the line underneath.

What i actually did -

- I sorted per Region / Kennzahl & Date

i created an GroupIndex (per Region/Kennzahl/YEAR AND Item) - since the amount is always being cummulated per Year and Item which means first entry in Jan of any year is the absolute amount - the following entry of same item in Feb is (KummFeb - JanAmount) etc.

- I created a simple record index column

- I created a JoinIndex Col which is Conditional (If GroupIndex = 1 then 1 else [GroupIndex}{[Index]-1})

- THen i can simply make NestedJoin (Left.Outer) by joining GroupIndex & JoinIndex

- Finally i have the corresponding KummAmount to subtract from in one line to calc. Abs. Amount

In this form i have an Absolute Amount per Region/Kennzahl/Year AND ITEM - this is important since prev. solutions (as i understood) where based on the idea like there would only be on single Cummulative Amount but i have to separate between years and items in addition to that.

I will copy the full code next week if anybody is interested !

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Both of the solutions I gave are easily extendable to grouping on additional columns.

In the Index0/Index1 version, you include these additional columns to match on in the self-merge. In the List.Generate version, you add additional "and curr[col] = prev[col]" conditions. For both, you do need to make sure that your table is sorted before doing the other stuff.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You might find it faster to `Join` the two tables (Table.Join or Table.NestedJoin). Then retain the columns you need to do the calculation.