FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- Quick Measures Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

To celebrate FabCon Vienna, we are offering 50% off select exams. Ends October 3rd. Request your discount now.

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- How to group rows which belongs together without c...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

How to group rows which belongs together without common primary key?

Hello,

I have following sample table which I already sorted in PowerQuery according to wagon number and Starttime timestamp. Its only possible because PowerQuery can sort on multiple columns while Excel would mixed it up a bit:

| MaterialNo. | WagonNo | TimestampStart | ||

| 345434 | 111 | 13.01.2023 17:10:33 | ||

| 534534 | 111 | 13.01.2023 17:10:33 | ||

| 534533 | 111 | 13.01.2023 17:11:55 | ||

| 645343 | 555 | 13.01.2023 21:10:49 | ||

| 453453 | 555 | 13.01.2023 21:12:49 | ||

| 345434 | 555 | 13.01.2023 21:13:49 | ||

| 578975 | 111 | 13.01.2023 22:10:33 | ||

| 435434 | 111 | 13.01.2023 22:10:33 | ||

| 453455 | 222 | 13.01.2023 22:10:33 | ||

| 734543 | 222 | 13.01.2023 22:10:49 |

On the table are a record of the transport via different wagon we use to move items between A & B. The quantity of items per transport can differ each time. So every time we load some items on the wagon for it to transport from A to B, it comes back empty to be reloaded again. The MaterialNo. is random, so there isn't a common ID we got from each transport. The only thing I can do is to sort WagonNo. and TimestampStart ascendingly to get the quantity of items which were transported with that wagon.

Is there a way to produce a artificial Index? For example the first transport with WagonNO. "111" which were around 17h is not the same as the one happening at 22h on that same day. Both should get a different Index ID.

For example :

| IndexID | MaterialNo. | WagonNo | TimestampStart | ||

| 1 | 345434 | 111 | 13.01.2023 17:10:33 | ||

| 1 | 534534 | 111 | 13.01.2023 17:10:33 | ||

| 1 | 534533 | 111 | 13.01.2023 17:11:55 | ||

| 2 | 645343 | 555 | 13.01.2023 21:10:49 | ||

| 2 | 453453 | 555 | 13.01.2023 21:12:49 | ||

| 2 | 345434 | 555 | 13.01.2023 21:13:49 | ||

| 3 | 578975 | 111 | 13.01.2023 22:10:33 | ||

| 3 | 435434 | 111 | 13.01.2023 22:10:33 | ||

| 4 | 453455 | 222 | 13.01.2023 22:10:33 | ||

| 4 | 734543 | 222 | 13.01.2023 22:10:49 |

With that I could do all sorts of average, and count calculation because I can differentiate the different transports.

Thank you very much in advance.

Best.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

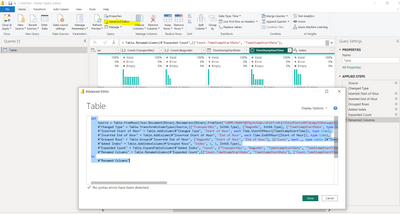

Hi @Applicable88 - please try the following:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("ldDRCcMwDATQVYq+A/GdpLrxKib7rxHLkJIUIuiPweJxnNS7qLmpySIAxluwgisLdXxeqA2lqcq+dPFB/5CaSDT3Kd8RGWMfg19JRKZtU9oMTSRP+d3oSeopvX626g89yctGpsmV7jJ6RibJXNZZNJXRcz8A", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [#"TransportNo/" = _t, WagonNo = _t, TimeStampStartDate = _t, TimeStampStartTime = _t]),

#"Parsed Time" = Table.TransformColumns(Source,{{"TimeStampStartTime", each Time.From(DateTimeZone.From(_)), type time}}),

#"Parsed Date" = Table.TransformColumns(#"Parsed Time",{{"TimeStampStartDate", each Date.From(DateTimeZone.From(_)), type date}}),

#"Changed Type" = Table.TransformColumnTypes(#"Parsed Date",{{"WagonNo", Int64.Type}, {"TransportNo/", Int64.Type}}),

#"Added Index" = Table.AddIndexColumn(#"Changed Type", "Index", 0, 1, Int64.Type),

#"Added Custom" = Table.AddColumn(#"Added Index", "IndexTest", each try #"Added Index"{[Index=([Index]-1)]}[WagonNo] <> [WagonNo] otherwise true , Int64.Type),

#"Added Custom1" = Table.AddColumn(#"Added Custom", "Load Group ID", each if [IndexTest] then [Index] else null, Int64.Type),

#"Filled Down" = Table.FillDown(#"Added Custom1",{"Load Group ID"}),

#"Removed Columns" = Table.RemoveColumns(#"Filled Down",{"Index", "IndexTest"})

in

#"Removed Columns"- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Applicable88 - please try the following:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("ldDRCcMwDATQVYq+A/GdpLrxKib7rxHLkJIUIuiPweJxnNS7qLmpySIAxluwgisLdXxeqA2lqcq+dPFB/5CaSDT3Kd8RGWMfg19JRKZtU9oMTSRP+d3oSeopvX626g89yctGpsmV7jJ6RibJXNZZNJXRcz8A", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [#"TransportNo/" = _t, WagonNo = _t, TimeStampStartDate = _t, TimeStampStartTime = _t]),

#"Parsed Time" = Table.TransformColumns(Source,{{"TimeStampStartTime", each Time.From(DateTimeZone.From(_)), type time}}),

#"Parsed Date" = Table.TransformColumns(#"Parsed Time",{{"TimeStampStartDate", each Date.From(DateTimeZone.From(_)), type date}}),

#"Changed Type" = Table.TransformColumnTypes(#"Parsed Date",{{"WagonNo", Int64.Type}, {"TransportNo/", Int64.Type}}),

#"Added Index" = Table.AddIndexColumn(#"Changed Type", "Index", 0, 1, Int64.Type),

#"Added Custom" = Table.AddColumn(#"Added Index", "IndexTest", each try #"Added Index"{[Index=([Index]-1)]}[WagonNo] <> [WagonNo] otherwise true , Int64.Type),

#"Added Custom1" = Table.AddColumn(#"Added Custom", "Load Group ID", each if [IndexTest] then [Index] else null, Int64.Type),

#"Filled Down" = Table.FillDown(#"Added Custom1",{"Load Group ID"}),

#"Removed Columns" = Table.RemoveColumns(#"Filled Down",{"Index", "IndexTest"})

in

#"Removed Columns"- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Daryl-Lynch-Bzy thats great! Thank you very much. That would solved my problem. You using the INDEX number as new unique identifier.

I just changed one thing: Since my data has many rows it took very long time to evaluate with "try each Index -1 " . I guess it takes a long time to check if previous value has same string.

Instead I made two indexes, one starts at zero and another starts at 1. I made a left outer join to bring that wagonNo. up. And now I continued the same procedure what you did. The left join saved ton of time.

Thank you very much !

Best.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Have grouped by wagon number, end time, start time.

Additionally you can group by start date as well, if required.

paste the below code into a blank query for my solution:

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("ldDRCcMwDATQVYq+A/GdpLrxKib7rxHLkJIUIuiPweJxnNS7qLmpySIAxluwgisLdXxeqA2lqcq+dPFB/5CaSDT3Kd8RGWMfg19JRKZtU9oMTSRP+d3oSeopvX626g89yctGpsmV7jJ6RibJXNZZNJXRcz8A", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [#"TransportNo/" = _t, WagonNo = _t, TimeStampStartDate = _t, TimeStampStartTime = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"TransportNo/", Int64.Type}, {"WagonNo", Int64.Type}, {"TimeStampStartDate", type date}, {"TimeStampStartTime", type time}}),

#"Inserted Start of Hour" = Table.AddColumn(#"Changed Type", "Start of Hour", each Time.StartOfHour([TimeStampStartTime]), type time),

#"Inserted End of Hour" = Table.AddColumn(#"Inserted Start of Hour", "End of Hour", each Time.EndOfHour([Start of Hour]), type time),

#"Grouped Rows" = Table.Group(#"Inserted End of Hour", {"WagonNo", "Start of Hour", "End of Hour"}, {{"Count", each _, type table [#"TransportNo/"=nullable number, WagonNo=nullable number, TimeStampStartDate=nullable date, TimeStampStartTime=nullable time, Start of Hour=time, End of Hour=time]}}),

#"Added Index" = Table.AddIndexColumn(#"Grouped Rows", "Index", 1, 1, Int64.Type),

#"Expanded Count" = Table.ExpandTableColumn(#"Added Index", "Count", {"TransportNo/", "WagonNo", "TimeStampStartDate", "TimeStampStartTime"}, {"Count.TransportNo/", "Count.WagonNo", "Count.TimeStampStartDate", "Count.TimeStampStartTime"}),

#"Renamed Columns" = Table.RenameColumns(#"Expanded Count",{{"Count.TimeStampStartDate", "TimeStampStartDate"}, {"Count.TimeStampStartTime", "TimeStampStartTime"}})

in

#"Renamed Columns"

output

Appreciate a thumbs up if this is helpful.

Please accept as the solution if it resolves your query

If my response(s) assisted you in any way, don't forget to drop me a Kudos 🙂

Kind Regards,

Avinash

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @adudani ,

thank you so far, but I think this won't work.

Maybe my table didn't consider all the possiblities. Here is a little change

| IndexID | MaterialNo. | WagonNo | TimestampStart | ||

| 1 | 345434 | 111 | 13.01.2023 17:10:33 | ||

| 1 | 534534 | 111 | 13.01.2023 17:10:33 | ||

| 1 | 534533 | 111 | 13.01.2023 17:11:55 | ||

| 2 | 645343 | 555 | 13.01.2023 21:10:49 | ||

| 2 | 453453 | 555 | 13.01.2023 21:12:49 | ||

| 2 | 345434 | 555 | 13.01.2023 22:13:49 | ||

| 3 | 578975 | 111 | 13.01.2023 22:10:33 | ||

| 3 | 435434 | 111 | 13.01.2023 23:12:33 | ||

| 4 | 453455 | 222 | 13.01.2023 22:10:33 | ||

| 4 | 734543 | 222 | 13.01.2023 22:10:49 |

You simply added a extraction of the hour as a columns for start and end hours. But in reality the transports are very frequent or also hour later. For example the second "111"transport has two items "MaterialNo". 578975 and 435434. Both of them belongs to the same transport, but the second item was prepared much later until it got on the same wagon. When now use MaterialNo. , wagonno. and the start and end hours and also the timestamp for for grouping, it won't be counting the amount of materials of the the same transport correctly.

Hope you have a another idea. The concept is right, but your workaround won't consider items which are not prepared in the same hour. It can also happen that on item got finish at the 59 minute, and the next one is already at the next hour minute 1.