FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

Vote for your favorite vizzies from the Power BI Dataviz World Championship submissions. Vote now!

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Re: Dynamic Data Source and Power Query syntax

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dynamic Data Source and Power Query syntax

Hi All!

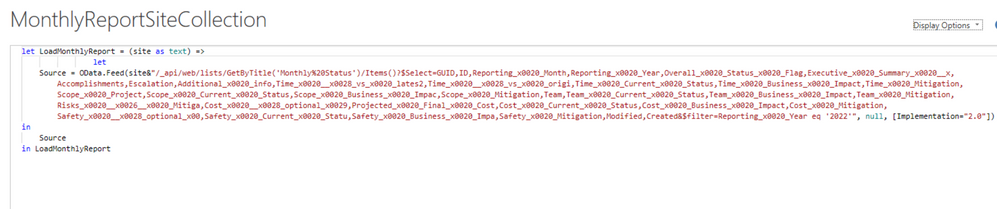

So, currently I was working on fixing a dynamic data source issue and auto-refresh using the power bi service. Now, below is the original query that was used for calling the data from the dynamic source.

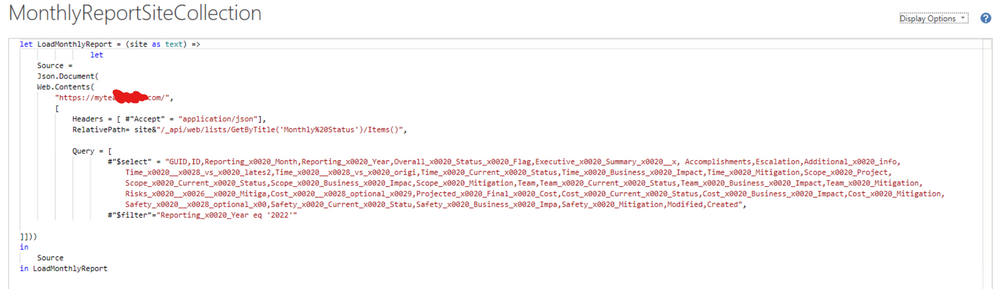

Now, I have changed the query using Web.Contents and RelativePath and that is working as well. I am not getting dynamic data source error in Power Bi service as well. Below is the new query.

Now my doubt is, in all the different forums and also Chris Webb's blogs I have checked, nowhere they have used 'Headers" inside Web.Contents.

But if I don't use the headers, then I am getting an error called "We Found Extra Characters at End of JSON Line". Only by using headers, I am not getting any error.

So, I wanted to know if I am doing anything wrong or that Headers may not be required by doing some other changes?

Also I am asking this because in the old query using 'Odata.feed', data was being returned as 'Table' which gave all the data columns by just expanding once.

But here, data is returned as 'Records' and I have to expand 3 times (1st Record expands to a column of lists -> 2nd that column expands to a column of records again -> 3rd time expands into all the data columns).

This is why I wanted to know if I am doing anything incorrect?

Thanks in advanced.. 😁

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Web.Contents with the api is my preferred method of accessing SharePoint data as you typically get way better performance compared to the OOTB SharePoint connectors and OData.Feed, especially when dealing with libraries/lists with lookups. To answer your questions in order:

Q: ... So, I wanted to know if I am doing anything wrong or that Headers may not be required by doing some other changes?

A: You want to always include the header as you have it to tell the api to return data in JSON format. Otherwise, it returns data in XML format by default, which is much more annoying to work with in Power Query. FYI, probably the reason you get an error when you don't specify Header/accept is because you still have Json.Document in your next step, trying to parse XML, which is resulting in an error.

Q: But here, data is returned as 'Records'[?]

A: The way that Json.Document parses the data is to treat it like one giant nested record, which makes sense if you look at JSON syntax, which is basically a bunch of field/value pairings where a value can be a single value, an array of values, or an array of additional field/value pairings. A typical SharePoint list or library items api reponse in JSON would look like:

{

**one or two query-specific odata field/values**,

"value": [

{

**A few item-specific odata field/values**,

"Id": 1,

"Title": "Title1",

"ID": 1

},

{

**A few item-specific odata field/values**,

"Id": 2,

"Title": "Title2",

"ID": 2

},

{

**A few item-specific odata field/values**,

"Id": 3,

"Title": "Title3",

"ID": 3

}

]

}

So, as you can see from above, the main data (list items) appear as an array of records in the top-level "value" field.

Thus, if your Source is the Web.Contents wrapped in Json.Document, Source[value] should give you a list of records like the below:

Q: ... and I have to expand 3 times[?]

A: Not exactly sure what kind of operations you are doing when you "expand 3 times", but in almost any context, the best method in my experience to handle converting a list of records to a table is Table.FromRecords. Again, if your Source step is the Json.Document/Web.Contents formula, and columns you include in your select argument in the query are not yet finalized, your next step should look something like:

= Table.FromRecords( Source[value], null, MissingField.UseNull )

And once you have finalized what columns you are querying, I've found it to be a good practice to define your expected table schema, e.g. if your query select is "Id,Title":

= Table.FromRecords( Source[value], type table [Id=nullable number, Title=nullable text], MissingField.UseNull )

And before you ask, yes you can specify complex fields when setting the schema. E.g. example with Author (Created By) included where we specified Author/Title, Author/EMail, and Author/JobTitle in the select:

= Table.FromRecords( Source[value], type table [Id=nullable number, Title=nullable text, Author=nullable [Title=text,EMail=text,JobTitle=text] ], MissingField.UseNull )

I'll actually split up my complex field definitions, defining before a full table definition, then put it all together in the Table.FromRecords parsing - here is a full M advanced editor example - note the extra work needed for multiselects:

let

Source = Json.Document( Web.Contents(

"[site url]",

[

RelativePath = "_api/web/lists(guid'[list guid]')/items",

Headers = [accept="application/json"],

Query = [

#"$select"="Id,Title,Created,Author/Title,Author/EMail,Author/JobTitle,MultiSelectLookup/Title",

#"$expand"="Author,MultiSelectLookup",

#"$top"="5000"

]

]

)),

AuthorSchema = type nullable [Title=text,EMail=text,JobTitle=text],

MultiSelectLookupSchema = type table [Title=nullable text],

TableSchema = type table [

Id=nullable Int64.Type, Title=nullable text,

Created = nullable text, Author=AuthorSchema,

MultiSelectLookup=nullable list

],

ParseData = Table.FromRecords( Source[value], TableSchema , MissingField.UseNull ),

ParseTableCols = Table.TransformColumns(

ParseData,

{{

"MultiSelectLookup",

each Table.FromRecords(_, MultiSelectLookupSchema, MissingField.UseNull),

MultiSelectLookupSchema

}}

),

TransformTypes = Table.TransformColumnTypes(ParseTableCols,{{"Created", type datetime}})

in

TransformTypes

Additional notes:

- As you can see from my above example, I prefer using guid's rather than titles given that titles can change. You can extract the guid from the url of the list settings page in SharePoint, or query _api/web/lists to get all the lists and their attributes including guid (Id field)

- It's not true for some types of queries through the SharePoint api (e.g. querying doc library folder), but definitely when dealing with lists you'll need to factor paging into your query. The api only returns 100 items by default, with a max allowance in one web call of 5000 items if you specify with $top in the query. That means, if you need to return >5000 items, you'll need to do multiple calls.

- Re: setting schema, note that most simple values come in as either text, number, or boolean (and pretty sure nothing else). E.g. dates will come in as text in the 'YYYY-MM-DDTHH:NN:SSZ' format. It's still useful IMO to set the schema because a) saves you trouble of removing the extra ID column that always comes in, b) puts columns in the order you specify, and c) allows you to set the type and only use Table.TransformColumnTypes on columns that actually require transformation (okay, last point only applies for the pedantic among us)

- Multiselect fields usually show up as lists of records, which, as discussed above, Table.FromRecords is most effective at parsing. You'll initialize these as a list column, and then can take an additional step to transform into the correctly typed table column

- In a lot of cases you can actually forgo all the nullable / MissingField.UseNull, but I've found accounting for missing fields makes the query more durable over time.

Edit: minor grammar fixes, trying to fix whitespace, removed application/json;odata=nometadata code snippet I decided not to comment on

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Not sure wheter this thread is still active but I use the code below to avoid dynamic source error in PowerBI:

(site as text, listname as text) =>

let

Source =

Json.Document(

Web.Contents(

"https://somecompany.sharepoint.com",

[

Headers = [accept = "application/json"],

RelativePath = "/sites/" & site & "/_api/web/lists/getbytitle('" & listname & "')/items",

Query = [

#"$select" = "Title,Id",

#"$top"="100"

]

]

)

)

in

Source

It does fix a dynamic source error, however it has introduced a new error (only in PowerBI service):

- Data source errorDataFormat.Error: The input URL is invalid. Please provide a URL to the file path on SharePoint up to the file name only (with no query or fragment part).. <ccon>https://somecompany.sharepoint.com. </ccon>;The input URL is invalid. Please provide a URL to the file path on SharePoint up to the file name only (with no query or fragment part).. The exception was raised by the IDataReader interface. Please review the error message and provider documentation for further information and corrective action. Table: Table.

Would anyone know why PBI service is complaining about it?

Many Thanks,

WJ

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

OK, I got it working.

Apparently, it makes a difference in PowerBI service whether the closing backslash is a part of base URL or relative path. I have moved "/" from "/sites..." to "https://somecompany.sharepoint.com/" in the code above which makes it work.

Thank you,

WJ

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for reporting this. It appears to be a bug in our SharePoint logic. I've filed it internally and we'll take a look.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @SDM_1997 ,

I did not look too much into it as I was getting my final output of all data correctly after all the expansions so did not bother too much

So it seems that your issue has been solved, right? If so , please kindly Accept a helpful reply posted above as the solution to make the thread closed. More people will benefit from it.

Best Regards,

Eyelyn Qin

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Web.Contents with the api is my preferred method of accessing SharePoint data as you typically get way better performance compared to the OOTB SharePoint connectors and OData.Feed, especially when dealing with libraries/lists with lookups. To answer your questions in order:

Q: ... So, I wanted to know if I am doing anything wrong or that Headers may not be required by doing some other changes?

A: You want to always include the header as you have it to tell the api to return data in JSON format. Otherwise, it returns data in XML format by default, which is much more annoying to work with in Power Query. FYI, probably the reason you get an error when you don't specify Header/accept is because you still have Json.Document in your next step, trying to parse XML, which is resulting in an error.

Q: But here, data is returned as 'Records'[?]

A: The way that Json.Document parses the data is to treat it like one giant nested record, which makes sense if you look at JSON syntax, which is basically a bunch of field/value pairings where a value can be a single value, an array of values, or an array of additional field/value pairings. A typical SharePoint list or library items api reponse in JSON would look like:

{

**one or two query-specific odata field/values**,

"value": [

{

**A few item-specific odata field/values**,

"Id": 1,

"Title": "Title1",

"ID": 1

},

{

**A few item-specific odata field/values**,

"Id": 2,

"Title": "Title2",

"ID": 2

},

{

**A few item-specific odata field/values**,

"Id": 3,

"Title": "Title3",

"ID": 3

}

]

}

So, as you can see from above, the main data (list items) appear as an array of records in the top-level "value" field.

Thus, if your Source is the Web.Contents wrapped in Json.Document, Source[value] should give you a list of records like the below:

Q: ... and I have to expand 3 times[?]

A: Not exactly sure what kind of operations you are doing when you "expand 3 times", but in almost any context, the best method in my experience to handle converting a list of records to a table is Table.FromRecords. Again, if your Source step is the Json.Document/Web.Contents formula, and columns you include in your select argument in the query are not yet finalized, your next step should look something like:

= Table.FromRecords( Source[value], null, MissingField.UseNull )

And once you have finalized what columns you are querying, I've found it to be a good practice to define your expected table schema, e.g. if your query select is "Id,Title":

= Table.FromRecords( Source[value], type table [Id=nullable number, Title=nullable text], MissingField.UseNull )

And before you ask, yes you can specify complex fields when setting the schema. E.g. example with Author (Created By) included where we specified Author/Title, Author/EMail, and Author/JobTitle in the select:

= Table.FromRecords( Source[value], type table [Id=nullable number, Title=nullable text, Author=nullable [Title=text,EMail=text,JobTitle=text] ], MissingField.UseNull )

I'll actually split up my complex field definitions, defining before a full table definition, then put it all together in the Table.FromRecords parsing - here is a full M advanced editor example - note the extra work needed for multiselects:

let

Source = Json.Document( Web.Contents(

"[site url]",

[

RelativePath = "_api/web/lists(guid'[list guid]')/items",

Headers = [accept="application/json"],

Query = [

#"$select"="Id,Title,Created,Author/Title,Author/EMail,Author/JobTitle,MultiSelectLookup/Title",

#"$expand"="Author,MultiSelectLookup",

#"$top"="5000"

]

]

)),

AuthorSchema = type nullable [Title=text,EMail=text,JobTitle=text],

MultiSelectLookupSchema = type table [Title=nullable text],

TableSchema = type table [

Id=nullable Int64.Type, Title=nullable text,

Created = nullable text, Author=AuthorSchema,

MultiSelectLookup=nullable list

],

ParseData = Table.FromRecords( Source[value], TableSchema , MissingField.UseNull ),

ParseTableCols = Table.TransformColumns(

ParseData,

{{

"MultiSelectLookup",

each Table.FromRecords(_, MultiSelectLookupSchema, MissingField.UseNull),

MultiSelectLookupSchema

}}

),

TransformTypes = Table.TransformColumnTypes(ParseTableCols,{{"Created", type datetime}})

in

TransformTypes

Additional notes:

- As you can see from my above example, I prefer using guid's rather than titles given that titles can change. You can extract the guid from the url of the list settings page in SharePoint, or query _api/web/lists to get all the lists and their attributes including guid (Id field)

- It's not true for some types of queries through the SharePoint api (e.g. querying doc library folder), but definitely when dealing with lists you'll need to factor paging into your query. The api only returns 100 items by default, with a max allowance in one web call of 5000 items if you specify with $top in the query. That means, if you need to return >5000 items, you'll need to do multiple calls.

- Re: setting schema, note that most simple values come in as either text, number, or boolean (and pretty sure nothing else). E.g. dates will come in as text in the 'YYYY-MM-DDTHH:NN:SSZ' format. It's still useful IMO to set the schema because a) saves you trouble of removing the extra ID column that always comes in, b) puts columns in the order you specify, and c) allows you to set the type and only use Table.TransformColumnTypes on columns that actually require transformation (okay, last point only applies for the pedantic among us)

- Multiselect fields usually show up as lists of records, which, as discussed above, Table.FromRecords is most effective at parsing. You'll initialize these as a list column, and then can take an additional step to transform into the correctly typed table column

- In a lot of cases you can actually forgo all the nullable / MissingField.UseNull, but I've found accounting for missing fields makes the query more durable over time.

Edit: minor grammar fixes, trying to fix whitespace, removed application/json;odata=nometadata code snippet I decided not to comment on

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank You very much for this!

Really helpled in clarifying the fundamentals..

About the 3 times expansion, I am not sure as well. Previously, I did try the Table.FromRecords, but it gave me a conversion error. (don't remember exactly what now ). So, I did not look too much into it as I was getting my final output of all data correctly after all the expansions so did not bother too much 😅

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Table.FromRecords with just the first argument usually will work, but I've found with some SharePoint sources (I think it usually comes down to if even just one item in the query somehow got a field blown away), it will only work if you specify MissingField.UseNull. So, unless I'm being lazy/fast, I'll always include the null (because validator yells at you if you try to leave empty - okay for DAX, not M), and MissingField.UseNull in 2nd and 3rd arguments.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It may be that the site expects that header value, and returns something different (such as a non-json error message) if you don't provide it. To see what the site is returning, you can wrap the call to Web.Contents in Text.FromBinary instead of Json.Document. This will return the raw text, which should help you determine what's going awry.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi! I tried wrapping Web.Contents in Text.FromBinary

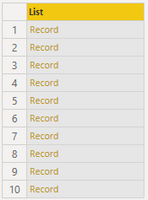

So, for every column of data I am getting this as the output there.

Can this explain what type of output the site is giving?

Thanks in advance!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That looks like xml/OData, not json. Any reason why you switched your original query from OData.Feed to Json.Document?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Reason for switch was to activate scheduled refresh in power bi service..

Using Odata.Feed was causing dynamic data source error. The only thing that I found from various blogs is using Web.Contents and keeping the dynamic part in RelativePath will fix the issue.

But I could not find anything for xml/Odata content that can be used along with Web.Contents.. That is why had to use Json.Document.

If there is a way for xml/Odata with Web.Contents let me know then. Maybe there is some other methods that I could not find on google search.

Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I see.. Let me check this once and will let you know..

Also since, I am a complete newbie in Power Bi and Power Query, I wanted to confirm that the three times expansion of records is completely normal in this case then right? Since, Odata.feed's table output needed only 1 expansion?

Thanks for the reply..

Helpful resources

Power BI Dataviz World Championships

Vote for your favorite vizzies from the Power BI World Championship submissions!

Join our Community Sticker Challenge 2026

If you love stickers, then you will definitely want to check out our Community Sticker Challenge!

Power BI Monthly Update - January 2026

Check out the January 2026 Power BI update to learn about new features.

| User | Count |

|---|---|

| 11 | |

| 10 | |

| 6 | |

| 6 | |

| 5 |