FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- Power Query

- Conditional Group by depending on value in another...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Conditional Group by depending on value in another column

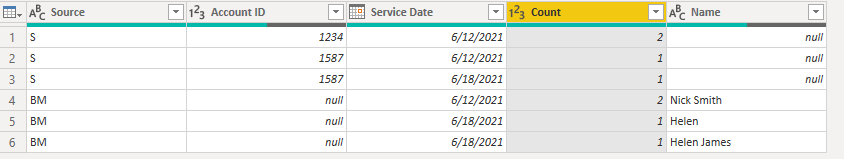

I have data that imports from two different sources - one has a unique identifer Account ID and one doesnt.

I need to group the data to allow me to do a comparison to see if there are any duplications in the submissions for the same Service Date and person.

Previoulsy this was running in two separate queries for the two different sources but I now need to run them in the same query. There are lots of other columns that i need to return at the end as well.

There are signifcantly more rows from source S with an account ID.

Example data

| Source | Account ID | Name | Service Date | ||

| S | 1234 | Harry Truman | 12/6/21 | ||

| S | 1234 | Harry | 12/6/21 | ||

| S | 1587 | Bob Jones | 12/6/21 | ||

| S | 1587 | Bob Jones | 18/6/21 | ||

| BM | Nick Smith | 12/6/21 | |||

| BM | Nick Smith | 12/6/21 | |||

| BM | Helen | 18/6/21 | |||

| BM | Helen James | 18/6/21 |

For the rows with source S i was using the following code so it groups by account ID and service date. The same code was used for the BM source but using Name and Service date instead.

#"Grouped Rows" = Table.Group(#"Removed Columns", {"Account ID", "Service Date"}, {{"Duplicate Submission", each Table.RowCount(_), Int64.Type}, {"Other", each _, type table [Submission ID=nullable number, Submission Date=nullable datetime, Account=nullable text, Account ID=nullable number, Service Date=nullable date, Time=nullable text, Name=nullable text, Your email=nullable text, Your Phone Number=any, Additional Family Member Name 1=nullable text, Additional Family Member Name 2=nullable text, Additional Family Member Name 3=nullable text, Additional Family Member Name 4=nullable text, Additional Family Member Name 5=nullable text, #"COVID-19"=nullable text, Data=nullable text, Mask=nullable text]}}),

#"Subtracted from Column" = Table.TransformColumns(#"Grouped Rows", {{"Duplicate Submission", each _ - 1, type number}}),

#"Expanded Other" = Table.ExpandTableColumn(#"Subtracted from Column", "Other", {"Submission ID", "Submission Date", "Account", "Time", "Name", "Your email", "Additional Family Member Name 1", "Additional Family Member Name 2", "Additional Family Member Name 3", "Additional Family Member Name 4", "Additional Family Member Name 5", "COVID-19", "Data", "Mask", }, {"Submission ID", "Submission Date", "Account", "Time","Name", "Your email", "Additional Family Member Name 1", "Additional Family Member Name 2", "Additional Family Member Name 3", "Additional Family Member Name 4", "Additional Family Member Name 5", "COVID-19", "Data", "Mask"}),

If i was to write the logic it would be

If [Source]=BM then #"Grouped Rows" = Table.Group(#"Removed Columns", {"Name", "Service Date") etc else #"Grouped Rows" = Table.Group(#"Removed Columns", {"Account ID", "Service Date") but i dont know how to make this work

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

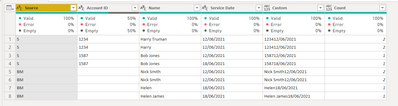

Hi @joooffice ,

Based on the conditional group logic, you can group each source and combine them after it like this query(use the count number as the default group result):

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WClbSUTI0MjYBUh6JRUWVCiFFpbmJeUCukYGRob6ZvqGRUqwOpjrsCkwtzIGUU36Sgld+XmoxqYoswIqcfIFCCkDsl5mcrRCcm1mSgWEQSWo8UnNS83BbA5ZW8ErMRXdLLAA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Source = _t, #"Account ID" = _t, Name = _t, #"Service Date" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Source", type text}, {"Account ID", Int64.Type}, {"Name", type text}, {"Service Date", type date}}),

#"Filtered Rows" = Table.SelectRows(#"Changed Type", each ([Source] = "BM")),

#"Grouped Rows" = Table.Group(#"Filtered Rows", {"Source", "Name", "Service Date"}, {{"Count", each Table.RowCount(_), Int64.Type}}),

#"Filtered Rows2" = Table.SelectRows(#"Changed Type", each ([Source] = "S")),

#"Grouped Rows1" = Table.Group(#"Filtered Rows2", {"Source", "Account ID", "Service Date"}, {{"Count", each Table.RowCount(_), Int64.Type}}),

#"Appended Query" = Table.Combine({#"Grouped Rows1", #"Grouped Rows"})

in

#"Appended Query"

Best Regards,

Community Support Team _ Yingjie Li

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @joooffice ,

Based on the conditional group logic, you can group each source and combine them after it like this query(use the count number as the default group result):

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WClbSUTI0MjYBUh6JRUWVCiFFpbmJeUCukYGRob6ZvqGRUqwOpjrsCkwtzIGUU36Sgld+XmoxqYoswIqcfIFCCkDsl5mcrRCcm1mSgWEQSWo8UnNS83BbA5ZW8ErMRXdLLAA=", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Source = _t, #"Account ID" = _t, Name = _t, #"Service Date" = _t]),

#"Changed Type" = Table.TransformColumnTypes(Source,{{"Source", type text}, {"Account ID", Int64.Type}, {"Name", type text}, {"Service Date", type date}}),

#"Filtered Rows" = Table.SelectRows(#"Changed Type", each ([Source] = "BM")),

#"Grouped Rows" = Table.Group(#"Filtered Rows", {"Source", "Name", "Service Date"}, {{"Count", each Table.RowCount(_), Int64.Type}}),

#"Filtered Rows2" = Table.SelectRows(#"Changed Type", each ([Source] = "S")),

#"Grouped Rows1" = Table.Group(#"Filtered Rows2", {"Source", "Account ID", "Service Date"}, {{"Count", each Table.RowCount(_), Int64.Type}}),

#"Appended Query" = Table.Combine({#"Grouped Rows1", #"Grouped Rows"})

in

#"Appended Query"

Best Regards,

Community Support Team _ Yingjie Li

If this post helps, then please consider Accept it as the solution to help the other members find it more quickly.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @joooffice

So your goal is to identify duplicated rows on two columns? I actually don't know how to proceed if you go with groupby, you need to seperate them then combine back? How about this way to identify duplicates?

let

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText("i45WClbSUTI0MjYBUh6JRUWVCiFFpbmJeWBRfQMzfSMDI0OlWB1MhThUmFqYAymn/CQFr/y81GLiVVmgqHLyBYoBkV9mcrZCcG5mSQamSaSo8UjNSc3DbQ1YWsErMRfDLbEA", BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true]) in type table [Source = _t, #"Account ID" = _t, Name = _t, #"Service Date" = _t]),

#"Added Custom" = Table.AddColumn(Source, "Custom", each if [Source] = "S" then [Account ID]&[Service Date] else [Name]&[Service Date]),

#"Added Custom1" = Table.AddColumn(#"Added Custom", "Count", each List.Count(#"Added Custom"[Custom]) - List.Count( List.RemoveItems(#"Added Custom"[Custom],{[Custom]})))

in

#"Added Custom1"Helpful resources

Power BI Dataviz World Championships

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now!

| User | Count |

|---|---|

| 19 | |

| 10 | |

| 9 | |

| 8 | |

| 7 |