Register now to learn Fabric in free live sessions led by the best Microsoft experts. From Apr 16 to May 9, in English and Spanish.

- Synapse forums

- Forums

- Get Help with Synapse

- General Discussion

- Re: Lakehouse Table Rows Limit

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Lakehouse Table Rows Limit

Hi community,

I wanted to ask opinions on how to handle the table row limit of a Fabric Lakehouse.

For a P1 capacity the table row limit is 1.5 bn rows. That is pretty low for a feature that claims to work with big data.

Are there any recommendations on the scenario where our data exceed this limit?

- If we have a multibillion row table, how to we deal with it?

- If it is our fact table?

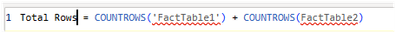

- If we break it into smaller tables, will we then be able to create DAX measures to calculate metrics that may need data from all these tables? Like a total sum or count?

- How the performance will be?

Would love to hear your thoughts.

Thanks.

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok thanks for confirming. At the moment yes that is a limit as the directlake feature is (attempting to) paging all the rows into the vertipaq engine cache from the lakehouse table. I don't know whether it is exactly 1.5B rows as there could be a little variance. However if your Fact table is well above that, then yes it may need splitting if you want DirectLake.

You should be able to use a measure to Sum/Count across multiple tables and it should use directlake

You can use Profiler to actually see if a query is using DirectLake or falling back to DQ in your testing.

Learn how to analyze query processing for Direct Lake datasets - Power BI | Microsoft Learn

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @gp10, the row limit of 1.5B rows is based on the DirectLake functionality - is this what you are referring to? The Lakehouse table itself can hold more, it's just that the DirectLake feature will not work with tables > 1.5B rows and will fall back to DirectQuery.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @AndyDDC , yes this is what I'm referring to.

In this case, what options do I have if I want to use Direct Lake with tables more than 1.5b rows?

Is there a hard limit on the Lakehouse table rows?

Cheers.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok thanks for confirming. At the moment yes that is a limit as the directlake feature is (attempting to) paging all the rows into the vertipaq engine cache from the lakehouse table. I don't know whether it is exactly 1.5B rows as there could be a little variance. However if your Fact table is well above that, then yes it may need splitting if you want DirectLake.

You should be able to use a measure to Sum/Count across multiple tables and it should use directlake

You can use Profiler to actually see if a query is using DirectLake or falling back to DQ in your testing.

Learn how to analyze query processing for Direct Lake datasets - Power BI | Microsoft Learn

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for your response @AndyDDC.

I will accept it as a solution, so far no other alternatives (other than upgrading the capacity) to use Direct Lake with such a table.

In your opinion, how would the performance be if I use such measures?

And performance wise, when splitting the table, does it make sense to create few big tables that will try to approach the 1.5bn row limit, or create many smaller ones?

Thanks for your time.

Helpful resources

Fabric Monthly Update - April 2024

Check out the April 2024 Fabric update to learn about new features.

Microsoft Fabric Learn Together

Covering the world! 9:00-10:30 AM Sydney, 4:00-5:30 PM CET (Paris/Berlin), 7:00-8:30 PM Mexico City

| User | Count |

|---|---|

| 10 | |

| 8 | |

| 8 | |

| 5 | |

| 4 |