FabCon is coming to Atlanta

Join us at FabCon Atlanta from March 16 - 20, 2026, for the ultimate Fabric, Power BI, AI and SQL community-led event. Save $200 with code FABCOMM.

Register now!- Power BI forums

- Get Help with Power BI

- Desktop

- Service

- Report Server

- Power Query

- Mobile Apps

- Developer

- DAX Commands and Tips

- Custom Visuals Development Discussion

- Health and Life Sciences

- Power BI Spanish forums

- Translated Spanish Desktop

- Training and Consulting

- Instructor Led Training

- Dashboard in a Day for Women, by Women

- Galleries

- Data Stories Gallery

- Themes Gallery

- Contests Gallery

- QuickViz Gallery

- Quick Measures Gallery

- Visual Calculations Gallery

- Notebook Gallery

- Translytical Task Flow Gallery

- TMDL Gallery

- R Script Showcase

- Webinars and Video Gallery

- Ideas

- Custom Visuals Ideas (read-only)

- Issues

- Issues

- Events

- Upcoming Events

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now! Learn more

- Power BI forums

- Forums

- Get Help with Power BI

- Desktop

- Re: The Csv.Document parameter 'Columns' is invali...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The Csv.Document parameter 'Columns' is invalid.

Hi everyone,

I am having the following issue on a couple reports I have built. So far the only options on the community forums is to either change from a personal gateway to an enterprise one (This isnt an option for me), the other is to change the CSV to a txt file. I have done this where I can however the report I am having issues with pulls CSV files from the new get data from a folder option. There doesnt appear to be a function within this to change the type to TXT.

Has anyone got a fix for this?

Thanks,

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

My IT department managed to fix the issue. We went through the advanced editor in query mode and manually changed the coding to get it to work.

This is how we did it:

This line of text is incorrect:

Imported CSV" = Csv.Document(#"Combined Binaries",[Delimiter=","columns=10, Encoding=1252]),

The columns=10 was counting the columns in the document (10 is correct) but something was going wrong. We deleted this text but lef tin the commas and republished the file. It then failed again due to this error:

The Csv.Document parameter 'QuoteStyle' is invalid. From memory the advanced editor text looked like this:

Imported CSV" = Csv.Document(#"Combined Binaries",[Delimiter=","columns=10, Encoding=1252]), QuoteStyle=QuoteStyle""

We deleted the QuoteStyle text and republished. The dashboard now refreshes using the automated refresh feature in Pro.

Thanks,

Giles

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

For anyone else who might come across this, I suspect that at least I ran into this issue because I created my query in a newer version of Power Query (Excel M365) and tried to run it in an older version of Power Query (Excel 2016). There was also at least one other function I had to remove parameters from, including Promote Headers.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We found a solution by modifying the Csv.Document step as explained below. We encountered this same error in one of our solutions built in Power Query when a client updgraded from Excel 2013 to Excel 2016. The query was importing a CSV and was returning this error on the step that uses Csv.Document. It was and is still working correctly in Excel 2013, but was not working in Excel 2016.

The code that was returning the error was:

Csv.Document([Content],[Delimiter=",", Columns=40, Encoding=1252, QuoteStyle=QuoteStyle.None])

We changed it to the following:

Csv.Document([Content],[Delimiter=",", Column=40, Encoding=1252])

There were 2 changes, highlighted in red. Columns becomes Column (i.e. singular). This then no longer returns the parameter 'Columns' is invalid error message, but then returns an error message related to QuoteStyle. We then removed the QuoteStyle part. This then worked in Excel 2016. This code also works in Excel 2013 and in Power BI Desktop.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Lyndon,

Thank you for your return !

How the quotations of a .CSV can be ignore as the "QuoteStyle=QuoteStyle.None" no longer works ?

For instance if I have the following csv file :

First name, gender, address

John, male, "1 Fox Street"

And I use : Csv.Document([Content],[Delimiter=",", Column=3, Encoding=1252])

The result looks like:

First name | gender | adress |

John, male, "1 Fox Street"| | |

Without the quotation

First name, gender, address

John, male, 1 Fox Street

The result looks like:

First name | gender | adress |

John |male |1 Fox Street|

The quotations affect the result and there is no way to avoid them?

Best,

Kelian

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you post a sample of your CSV file that you are having trouble with, particular the column names and a row or two of data?

Follow on LinkedIn

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: DAX For Humans

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here you go:

| Consignment | Docket | Date Loaded | Depot | Wagon | Product | Gross | Tare | Net | Overcarry |

| RA17221 | RA00318461 | 12/04/2016 5:04 | 010Y | THFY015-N | 13068 | 66.8 | 20.65 | 46.15 | 1.65 |

| RA17221 | RA00318462 | 12/04/2016 5:03 | 010Y | THFY012-J | 13068 | 66.9 | 20.5 | 46.4 | 1.35 |

| RA17221 | RA00318463 | 12/04/2016 5:09 | 010Y | THFY002 | 13068 | 67 | 19.65 | 47.35 | 0 |

All the CSV files are the same, they have roughly 20 rows but the columns will never change. I have done a couple formatting changes to the files such as changing the date loaded column to be split into two columns date and time. The Wagon column is used to created a custom column which removes all the characters apart from the numbers.

Thanks,

Giles

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@GilesWalker - Hmmm, I can't replicate it. I took your data and copied and pasted it into a file, wagons_tab.csv and then also created a true comma-separated file, wagons.csv, removed the tabs and replaced with commas. Both imported no problem using CSV as data source. Wasn't expecting that.

Um, what version of Power BI Desktop are you running?

Follow on LinkedIn

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: DAX For Humans

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thats the same thing that happens to me. If I load a single file there is no issue. However some of my other reports had this same issue popping up in the last couple weeks. These are reports which have had no change made to them in over three months. The only fix was to have the individual CSV files data set changed through the settings features in query mode to a TXT file.

The feature to change the file type is not available in the get data FILE catagory. The CSV example I posted is one of over a 500 files contained within this file which grows everyday so no chance of manually changing the files to TXT.

Thanks,

Giles

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Might warrant going here and opening an actual support case:

https://powerbi.microsoft.com/en-us/support/

Contact Support link.

Did you recently update your Desktop?

Follow on LinkedIn

@ me in replies or I'll lose your thread!!!

Instead of a Kudo, please vote for this idea

Become an expert!: Enterprise DNA

External Tools: MSHGQM

YouTube Channel!: Microsoft Hates Greg

Latest book!: DAX For Humans

DAX is easy, CALCULATE makes DAX hard...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

My IT department managed to fix the issue. We went through the advanced editor in query mode and manually changed the coding to get it to work.

This is how we did it:

This line of text is incorrect:

Imported CSV" = Csv.Document(#"Combined Binaries",[Delimiter=","columns=10, Encoding=1252]),

The columns=10 was counting the columns in the document (10 is correct) but something was going wrong. We deleted this text but lef tin the commas and republished the file. It then failed again due to this error:

The Csv.Document parameter 'QuoteStyle' is invalid. From memory the advanced editor text looked like this:

Imported CSV" = Csv.Document(#"Combined Binaries",[Delimiter=","columns=10, Encoding=1252]), QuoteStyle=QuoteStyle""

We deleted the QuoteStyle text and republished. The dashboard now refreshes using the automated refresh feature in Pro.

Thanks,

Giles

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm having this same error, but am having difficulty performing the fix as you described.

When you say you deleted the text, what text did you delete? the '10' or the entire 'Columns=10'

This is my line of code as extracted from the Advanced editor

#"Imported CSV" = Csv.Document(#"Combined Binaries",[Delimiter="#(tab)", Columns=10 , Encoding=1200, QuoteStyle=QuoteStyle.None]),

So, my understanding of your suggestion is to replace with this:

#"Imported CSV" = Csv.Document(#"Combined Binaries",[Delimiter="#(tab)", , Encoding=1200, ]),

When I try that, I get teh error 'invalid identifier' so I know I'm not understanding right

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In your line of code:

#"Imported CSV" = Csv.Document(#"Combined Binaries",[Delimiter="#(tab)", Columns=10 , Encoding=1200, QuoteStyle=QuoteStyle.None]),

The items to delete are Columns=10 and QuoteStyle=QuoteStyle.None

The text below is what mine looks like and it appears as though you need to also delete "#(tab) and insert a " where the columns was.

Try and insert the text below to see if that works.

#"Imported CSV" = Csv.Document(#"Combined Binaries",[Delimiter=",", Encoding=1252]),

Thanks

Giles

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

When I applied those changes in the Advanced Editor I get a new error

'Expression.Error: The column 'User(Login Name' of the table wasn't found.'

This is a column that was removed when I was 'grooming' the data after the binaries were combined. I have opened a ticket with Microsoft, but they don't really know what is going on either.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

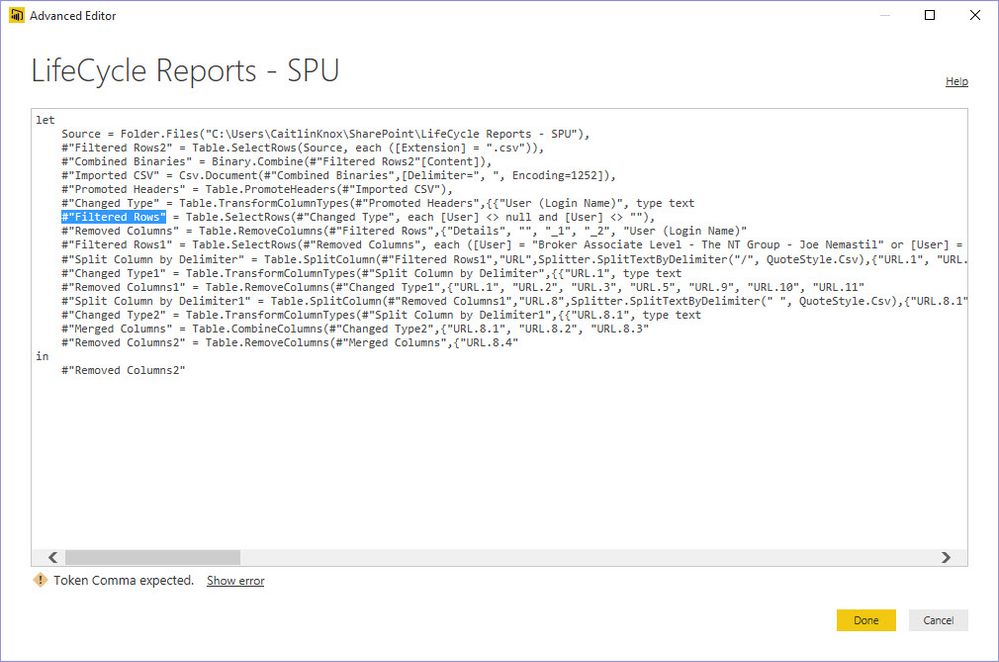

let

Source = Folder.Files("C:\Users\CaitlinKnox\SharePoint\LifeCycle Reports - SPU"),

#"Filtered Rows2" = Table.SelectRows(Source, each ([Extension] = ".csv")),

#"Combined Binaries" = Binary.Combine(#"Filtered Rows2"[Content]),

#"Imported CSV" = Csv.Document(#"Combined Binaries",[Delimiter="#(tab)", Columns=10, Encoding=1200, QuoteStyle=QuoteStyle.None]),

#"Promoted Headers" = Table.PromoteHeaders(#"Imported CSV"),

#"Changed Type" = Table.TransformColumnTypes(#"Promoted Headers",{{"User (Login Name)", type text}, {"User", type text}, {"URL", type text}, {"Type", type text}, {"Action", type text}, {"Time", type datetime}, {"Details", type text}, {"", type text}, {"_1", type text}, {"_2", type text}}),

#"Filtered Rows" = Table.SelectRows(#"Changed Type", each [User] <> null and [User] <> ""),

#"Removed Columns" = Table.RemoveColumns(#"Filtered Rows",{"Details", "", "_1", "_2", "User (Login Name)"}),

#"Filtered Rows1" = Table.SelectRows(#"Removed Columns", each ([User] = "Broker Associate Level - The NT Group - Joe Nemastil" or [User] = "Broker Executive Level - Nology - Brendon Liner" or [User] = "Broker Master Level - Integrated - Mary Heinen" or [User] = "Broker Professional Level - Millermiller Industrial Solutions - Karl Miller" or [User] = "Broker Professional Level - Muhammad Alam" or [User] = "Broker Professional Level - TechNoir - James Velco" or [User] = "Broker Professional Level - USPCNET - Elias Kousoulas" or [User] = "Greg LaCoste" or [User] = "Heather Raymond" or [User] = "Joellyn Mayer" or [User] = "John Kennedy" or [User] = "Morgan Spencer" or [User] = "Scott Morgan")),

#"Split Column by Delimiter" = Table.SplitColumn(#"Filtered Rows1","URL",Splitter.SplitTextByDelimiter("/", QuoteStyle.Csv),{"URL.1", "URL.2", "URL.3", "URL.4", "URL.5", "URL.6", "URL.7", "URL.8", "URL.9", "URL.10", "URL.11"}),

#"Changed Type1" = Table.TransformColumnTypes(#"Split Column by Delimiter",{{"URL.1", type text}, {"URL.2", type text}, {"URL.3", type text}, {"URL.4", type text}, {"URL.5", type text}, {"URL.6", type text}, {"URL.7", type text}, {"URL.8", type text}, {"URL.9", type text}, {"URL.10", Int64.Type}, {"URL.11", type text}}),

#"Removed Columns1" = Table.RemoveColumns(#"Changed Type1",{"URL.1", "URL.2", "URL.3", "URL.5", "URL.9", "URL.10", "URL.11"}),

#"Split Column by Delimiter1" = Table.SplitColumn(#"Removed Columns1","URL.8",Splitter.SplitTextByDelimiter(" ", QuoteStyle.Csv),{"URL.8.1", "URL.8.2", "URL.8.3", "URL.8.4"}),

#"Changed Type2" = Table.TransformColumnTypes(#"Split Column by Delimiter1",{{"URL.8.1", type text}, {"URL.8.2", type text}, {"URL.8.3", type text}, {"URL.8.4", type text}}),

#"Merged Columns" = Table.CombineColumns(#"Changed Type2",{"URL.8.1", "URL.8.2", "URL.8.3"},Combiner.CombineTextByDelimiter("", QuoteStyle.None),"URL"),

#"Removed Columns2" = Table.RemoveColumns(#"Merged Columns",{"URL.8.4"})

in

#"Removed Columns2"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Caitlin_KnoxTry this and see if it works, i have removed some information and changed the coding from 1200 to 1252. 1252 is the encoding of characters to English.

let

Source = Folder.Files("C:\Users\CaitlinKnox\SharePoint\LifeCycle Reports - SPU"),

#"Filtered Rows2" = Table.SelectRows(Source, each ([Extension] = ".csv")),

#"Combined Binaries" = Binary.Combine(#"Filtered Rows2"[Content]),

#"Imported CSV" = Csv.Document(#"Combined Binaries",[Delimiter=", ", Encoding=1252]),

#"Promoted Headers" = Table.PromoteHeaders(#"Imported CSV"),

#"Changed Type" = Table.TransformColumnTypes(#"Promoted Headers",{{"User (Login Name)", type text}, {"User", type text}, {"URL", type text}, {"Type", type text}, {"Action", type text}, {"Time", type datetime}, {"Details", type text}, {"", type text}, {"_1", type text}, {"_2", type text}}),

#"Filtered Rows" = Table.SelectRows(#"Changed Type", each [User] <> null and [User] <> ""),

#"Removed Columns" = Table.RemoveColumns(#"Filtered Rows",{"Details", "", "_1", "_2", "User (Login Name)"}),

#"Filtered Rows1" = Table.SelectRows(#"Removed Columns", each ([User] = "Broker Associate Level - The NT Group - Joe Nemastil" or [User] = "Broker Executive Level - Nology - Brendon Liner" or [User] = "Broker Master Level - Integrated - Mary Heinen" or [User] = "Broker Professional Level - Millermiller Industrial Solutions - Karl Miller" or [User] = "Broker Professional Level - Muhammad Alam" or [User] = "Broker Professional Level - TechNoir - James Velco" or [User] = "Broker Professional Level - USPCNET - Elias Kousoulas" or [User] = "Greg LaCoste" or [User] = "Heather Raymond" or [User] = "Joellyn Mayer" or [User] = "John Kennedy" or [User] = "Morgan Spencer" or [User] = "Scott Morgan")),

#"Split Column by Delimiter" = Table.SplitColumn(#"Filtered Rows1","URL",Splitter.SplitTextByDelimiter("/", QuoteStyle.Csv),{"URL.1", "URL.2", "URL.3", "URL.4", "URL.5", "URL.6", "URL.7", "URL.8", "URL.9", "URL.10", "URL.11"}),

#"Changed Type1" = Table.TransformColumnTypes(#"Split Column by Delimiter",{{"URL.1", type text}, {"URL.2", type text}, {"URL.3", type text}, {"URL.4", type text}, {"URL.5", type text}, {"URL.6", type text}, {"URL.7", type text}, {"URL.8", type text}, {"URL.9", type text}, {"URL.10", Int64.Type}, {"URL.11", type text}}),

#"Removed Columns1" = Table.RemoveColumns(#"Changed Type1",{"URL.1", "URL.2", "URL.3", "URL.5", "URL.9", "URL.10", "URL.11"}),

#"Split Column by Delimiter1" = Table.SplitColumn(#"Removed Columns1","URL.8",Splitter.SplitTextByDelimiter(" ", QuoteStyle.Csv),{"URL.8.1", "URL.8.2", "URL.8.3", "URL.8.4"}),

#"Changed Type2" = Table.TransformColumnTypes(#"Split Column by Delimiter1",{{"URL.8.1", type text}, {"URL.8.2", type text}, {"URL.8.3", type text}, {"URL.8.4", type text}}),

#"Merged Columns" = Table.CombineColumns(#"Changed Type2",{"URL.8.1", "URL.8.2", "URL.8.3"},Combiner.CombineTextByDelimiter("", QuoteStyle.None),"URL"),

#"Removed Columns2" = Table.RemoveColumns(#"Merged Columns",{"URL.8.4"})

in

#"Removed Columns2"

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I get the error 'Token Comma expected' When I click show error, it highlights the text as shown in the screen capture. But looking at the lines around, I cant determine where it is expecting a comma.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This happened to me also. By changing the inormation in the first couple steps this can then have an effect on the remaining steps. Is it possible for you in the query editor to remove all steps after the promoted headers step? Then see what the data looks like and go through and do your editing again?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This happened to me also. By changing the inormation in the first couple steps this can then have an effect on the remaining steps. Is it possible for you in the query editor to remove all steps after the promoted headers step? Then see what the data looks like and go through and do your editing again?

Helpful resources

Power BI Dataviz World Championships

The Power BI Data Visualization World Championships is back! Get ahead of the game and start preparing now!

| User | Count |

|---|---|

| 37 | |

| 37 | |

| 33 | |

| 32 | |

| 29 |

| User | Count |

|---|---|

| 130 | |

| 88 | |

| 82 | |

| 68 | |

| 64 |