Storing and using information from a dynamic data source using PBI desktop.

Hello,

This is something I worked on for a couple of months and have been using successfully for three months, and I would now like to share it with the community. If you want to know where this comes from, please visit this post.

I'm going to explain the whole process in as much detail as possible.

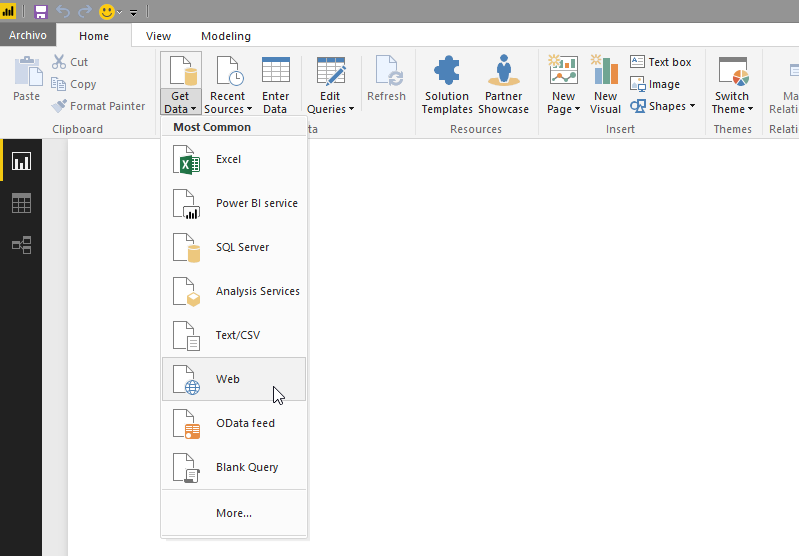

1) Connect Power BI to a data source.

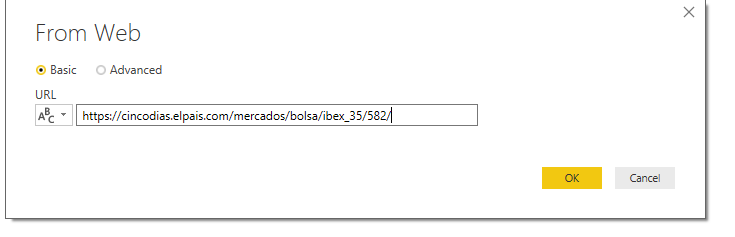

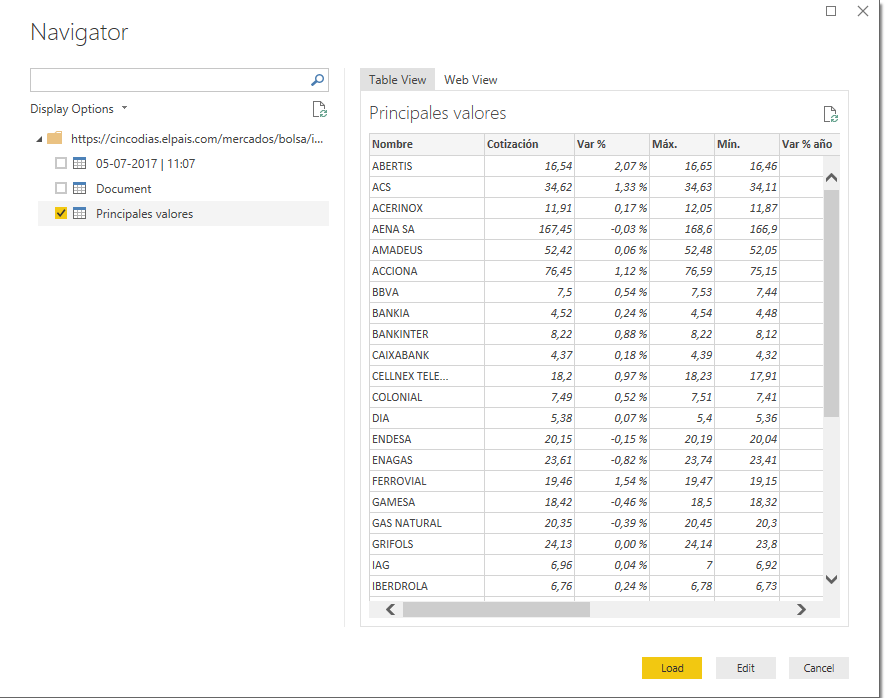

For this example, I'm going to connect to a web page that publishes information about the IBEX 35.

After that, we will create a column: Refreshment date = NOW()

*If your regional settings are not English, I recommend disabling time intelligence under Options, Data Load.

2) Prepare the system to use the script.

I use a virtual machine for this, but it can be done from any PC that meets the requirements.

First, you will need to install PowerShell's Package Manager. https://www.microsoft.com/en-us/download/details.aspx?id=51451

*Edited: It seems this is not necessary in Windows 10.

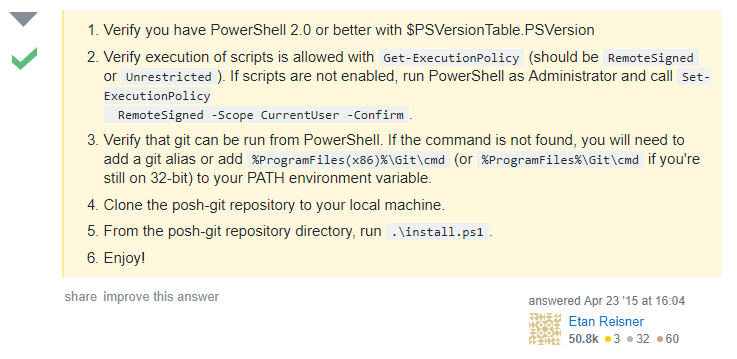

Second, run PowerShell as an admin, and follow these steps. (from https://stackoverflow.com/questions/29828756/the-term-install-module-is-not-recognized-as-the-name-o...)

Third, download and install SQL_AS_AMO.msi and SQL_AS_ADOMD.msi from https://www.microsoft.com/download/details.aspx?id=52676

Now, you are ready to edit the script.

3) Edit the script.

You can download it from my OneDrive. The instructions are inside the script.

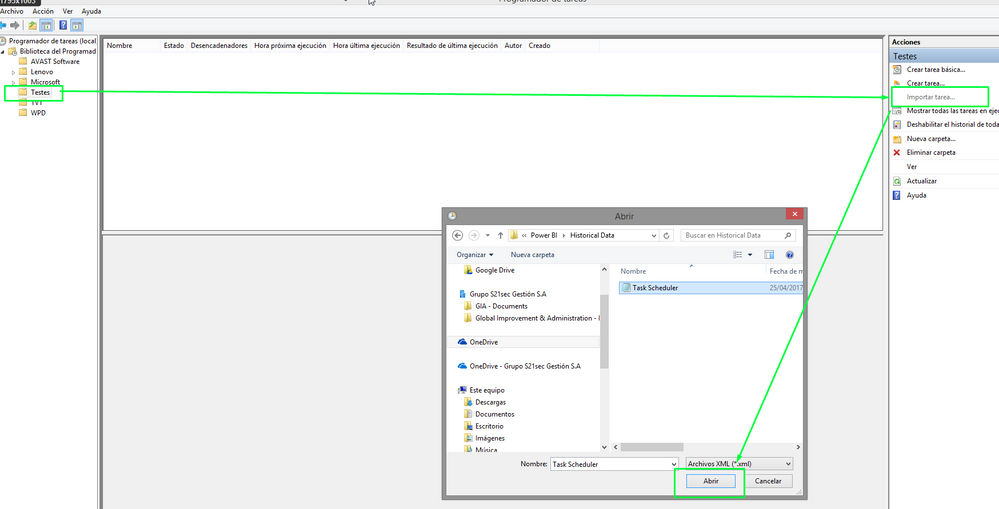

4) Use the task scheduler.

Since we don't want to run the script manually every day (weekends included), let's schedule that task!

Download this file first, then open the Task Scheduler.

Then, select where you want to store the task, and import it.

I have it set to run at 10 PM every day, but you can change that. Then go to Actions and update the file path so that it points to the PowerShell script.

After that, I'd run the task once manually, just to check that everything is working.

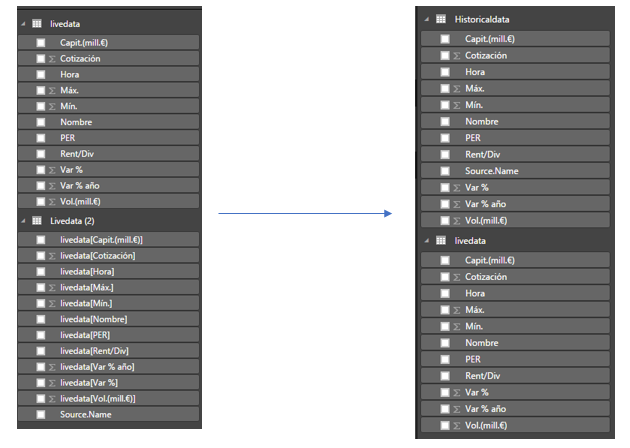

5) Add the stored information to the data model.

Now we can go back to Power BI. At this point, I prefer to make a copy of the pbix file under a different name, so that the one used by the script stays as simple as possible.

First, get data from a folder. It should be the folder named after your table(s), inside the “daily” folder created on your desktop.

Then, click on Edit, Combine & Edit.

We check that everything is in order…

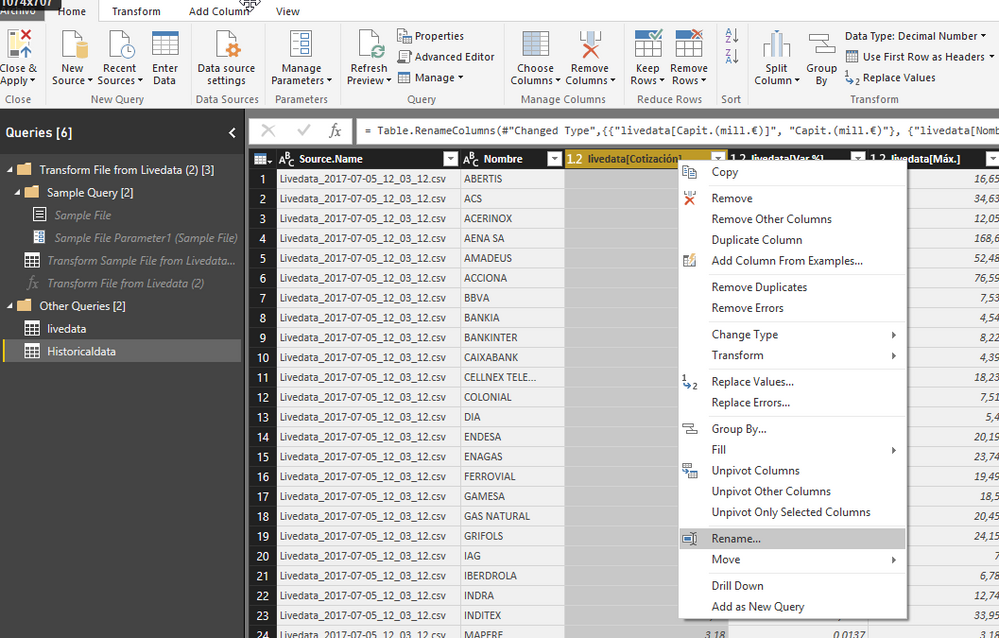

Now we have two tables: one with the information from the dynamic feed and one with the info that we have stored. Next, we need to rename the (2) table and its columns.

I recommend doing it from the Query Editor.

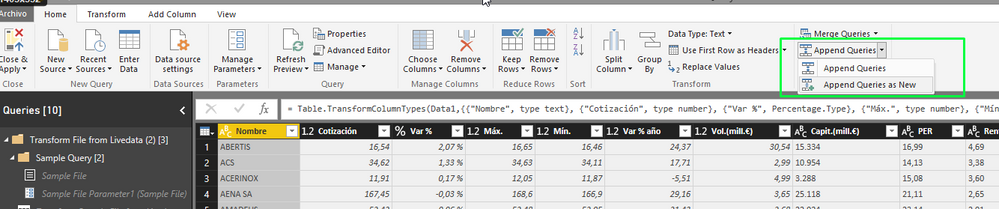

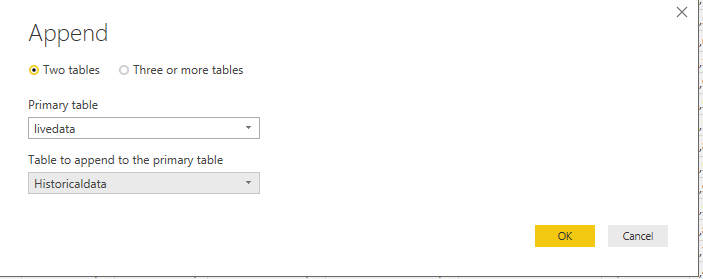

6) Appending Queries

Now it is time to put all the info together: from the Query Editor, choose “Append Queries as New”.

Now that we have everything in one table, I recommend renaming the appended query and hiding the other two tables in the report view.

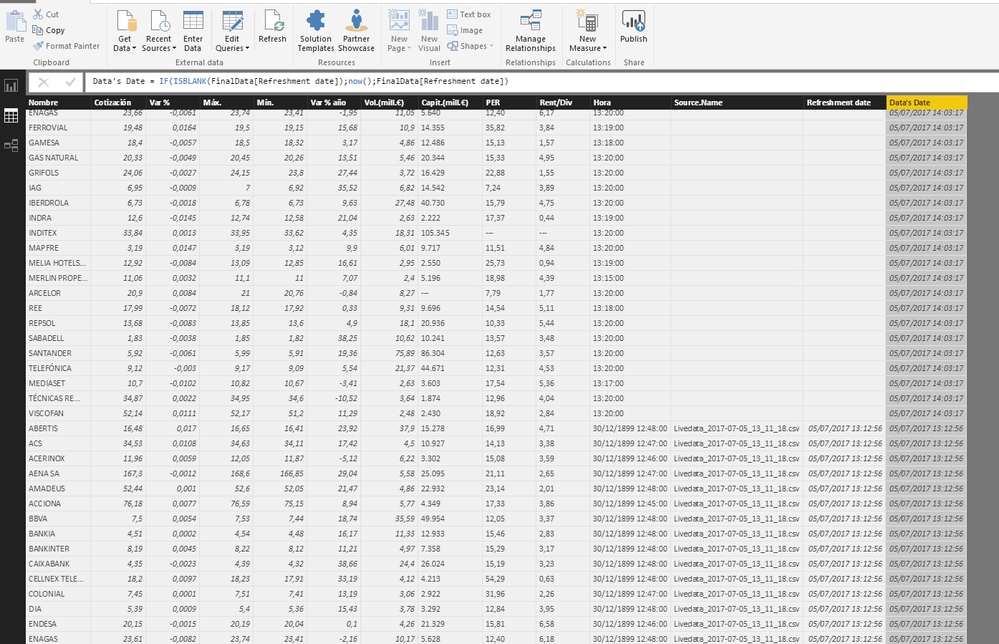

After that, we will create a column: Data's Date = IF(ISBLANK(FinalData[Refreshment date]); NOW(); FinalData[Refreshment date])

That way, we will be able to know when that data was stored.

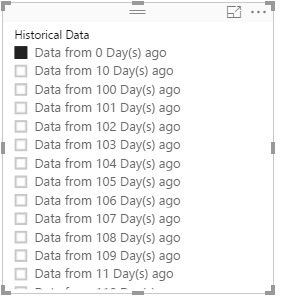
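The logic of that calculated column can also be sketched outside Power BI. The following is a minimal Python illustration, not part of the original script: the function name `data_date`, the `Ticker` field and the sample rows are made up for the example. It assumes, as the formula suggests, that rows coming from the live feed have no Refreshment date after the append, while stored rows carry the timestamp written when they were exported.

```python
from datetime import datetime
from typing import Optional


def data_date(refreshment_date: Optional[datetime], now: datetime) -> datetime:
    """Return the stored refresh timestamp, or `now` when the row has none."""
    return refreshment_date if refreshment_date is not None else now


# Hypothetical appended table: one stored snapshot row and one live row.
now = datetime(2017, 6, 15, 22, 0)
rows = [
    {"Ticker": "SAN", "Refreshment date": datetime(2017, 6, 14, 22, 0)},  # stored
    {"Ticker": "SAN", "Refreshment date": None},                          # live feed
]

for row in rows:
    row["Data's Date"] = data_date(row["Refreshment date"], now)
    print(row["Ticker"], row["Data's Date"].isoformat())
```

Stored rows keep the date on which they were captured, and live rows are stamped with the current time, so every row in the appended table ends up with a usable date.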

In my case, since I need to use the “live” data and the historical one, I use another column to separate it:

Historical Data = "Data from " & DATEDIFF(FinalData[Data's Date (Text)]; NOW(); DAY) & " Day(s) ago"
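For readers who want to see what this label produces, here is a rough Python equivalent, for illustration only. The function name `historical_label` and the sample dates are hypothetical, and the sketch assumes that the day difference is counted in whole calendar days.

```python
from datetime import date


def historical_label(data_date: date, today: date) -> str:
    """Build the text label from the number of calendar days between the two dates."""
    days_ago = (today - data_date).days
    return f"Data from {days_ago} Day(s) ago"


today = date(2017, 6, 15)
print(historical_label(date(2017, 6, 12), today))  # Data from 3 Day(s) ago
print(historical_label(today, today))              # Data from 0 Day(s) ago
```

Live rows get the "0 Day(s) ago" label, which makes it easy to filter a visual to either the current data or a specific stored day.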

If you need to establish relationships between tables, keep in mind that storing historical data with this script means that, in some cases, the ids are no longer unique, because the same id appears once for every stored day. What I do to solve that issue is add the date to the ids.

xxxID = Table1[_xxxid_value] & FORMAT(Table1[Data's Date]; "MM/DD/YYYY")
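To show why adding the date restores uniqueness, here is a small Python sketch under stated assumptions: the ids, the dates and the helper name `snapshot_key` are invented for the example, and the date is formatted as MM/DD/YYYY to mirror the formula above.

```python
from datetime import date


def snapshot_key(record_id: str, data_date: date) -> str:
    """Concatenate the id with the date formatted as MM/DD/YYYY."""
    return record_id + data_date.strftime("%m/%d/%Y")


# Made-up rows: the same id stored on two different days.
rows = [
    ("A1", date(2017, 6, 14)),
    ("A1", date(2017, 6, 15)),
    ("B2", date(2017, 6, 15)),
]

ids = [record_id for record_id, _ in rows]
keys = [snapshot_key(record_id, day) for record_id, day in rows]

print(len(set(ids)) == len(ids))    # False: "A1" repeats across days
print(len(set(keys)) == len(keys))  # True: id plus date is unique per row
```

For the relationship to work, the table on the other side presumably needs a key built the same way, so that each daily snapshot matches only the rows stored on that day.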

If you are using Power BI Desktop, you don't need to do anything else, but if you want to publish it to the Power BI service, you will need to set up a data gateway.

I hope you've enjoyed this long guide. If you have any doubts or questions, or would like to add something, please feel free to email me at salva.gm@outlook.es

I would also like to give special thanks to Jorge Diaz for his guidance and support whilst developing this (without him, this would probably have ended up as a “happy idea”) and to Imke, from thebiccountant.com, for pointing us in the right direction.


Fantastic -- Really nice solution! Thanks for sharing!


Hi Salvador,

that's pretty awesome!

Thanks for taking the time for such a neat write-up of the steps - can't wait to try this out!

Cheers, Imke

Imke Feldmann (The BIccountant)



Hi Team

What happens if you have a massive amount of data stored, say 1 GB or more? Does this process still apply, or does it need an external data source to support it? Also, when scheduling with PowerShell, will the script keep running automatically until the scheduled job is stopped? I would like to implement this if possible. In my case, I want to know whether I can retrieve all the reports from the last 1-3 months onwards as CSV, with the data split by month. I am worried about the amount of data this script would process and that performance might be slow. Please advise whether this strategy can be applied to my situation.


This is GREAT, thanks for sharing.

I did this kind of historical auditing with SQL Server in the past, but this is especially nice for quickly enabling it on all those strange data sources that PBI can access. You have to be careful about how big your data is, though (otherwise you are in data warehouse territory), but for smaller data sets, or when you select the data carefully, this is great.


Thanks ^^

In my case, I had to do this to properly track KPIs from Dynamics CRM. I've been using it for 6 tables over 107 days, with 800 MB of data stored, and it takes a couple of minutes to refresh the data 😄
